AI Inference Cost in 2025: Architecture, Latency, and the Real Cost per Token

AI inference cost, not training expense, now defines the real scalability, latency, and budget limits of modern AI systems. In late 2025, latency, throughput, and cost per token form a single architectural design space shaped more by system choices than by model selection. This article explains why AI inference cost has become the dominant operational constraint and how inference-first architecture and specialized hardware reshape production AI.

Since the publication of this analysis, the AI landscape has evolved rapidly. In just a few weeks, the rise of agentic AI systems, long-context scaling, and hyperscaler infrastructure expansion has significantly reshaped inference economics.

For an updated and more comprehensive perspective, read our latest article, “AI Inference Economics 2026: The Real Cost of Agents, Long Context and Infrastructure Scale” (February 18, 2026). It explores how agentic workflows, sustained GPU utilization, and large-scale capital investment are redefining the real cost structure of AI deployment in 2026.

Why inference has become the dominant AI cost and constraint

Continuous workloads vs episodic training

Training remains episodic, bounded in time, and planned around defined projects. Inference operates continuously, often across regions and time zones, and scales with real user demand rather than internal milestones. Every query triggers computation, memory access, and scheduling overhead, turning inference into a permanent operational workload.

This distinction explains why AI inference cost grows faster than training expense. Training infrastructure can be amortized over long runs, while inference exposes cost per request and per token in real time. As reported in multiple infrastructure analyses, continuous inference workloads now dominate capacity utilization at scale.

Concurrency, SLAs, and tail latency

Inference systems operate under latency guarantees that training never faces. User-facing services typically define SLAs using P95 or P99 latency, meaning the response time that 95 % or 99 % of requests must stay below, rather than relying on average latency. Meeting these guarantees under concurrent load requires spare capacity, workload isolation, and careful queue management to prevent slow requests from cascading into user-visible delays.

Tail latency directly influences AI inference cost. To avoid SLA violations, systems often reserve capacity or limit batching, both of which reduce utilization. This behavior is explicitly discussed in NVIDIA’s description of disaggregated serving architectures in the NVIDIA Dynamo design documentation.

Why utilization, not FLOPs, drives inference economics

Inference economics are shaped by utilization rather than raw compute capability. FLOPs matter only insofar as they translate into sustained throughput under real workloads. Memory bandwidth, KV-cache behavior, request variability, and scheduling overhead often cap effective utilization well before compute saturation.

As a result, AI inference cost reflects how efficiently accelerators are kept busy while respecting latency constraints. Research and production reports consistently identify utilization losses as a primary driver of inference cost inflation.

Latency, throughput, and cost per token as a single design space

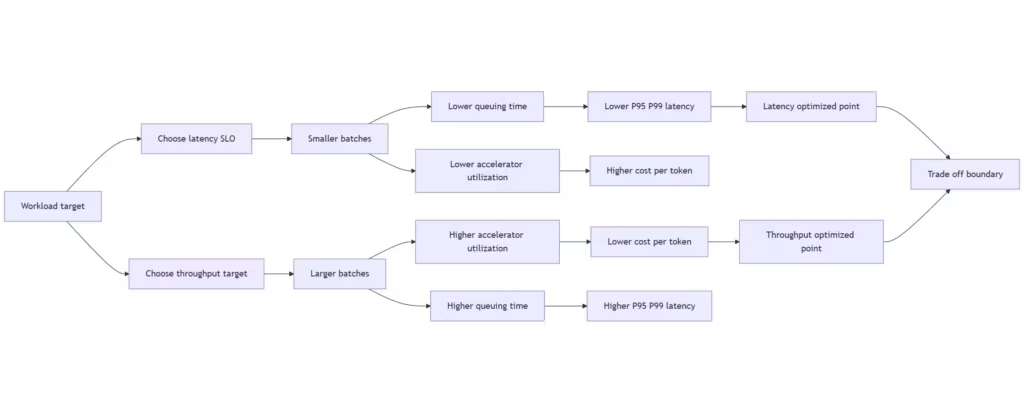

Latency vs throughput, the fundamental tension

Latency and throughput are inseparable in inference systems. Throughput gains usually come from batching, which increases latency. Latency reductions often require smaller batches or dedicated capacity, which lowers throughput.

This trade-off defines the core design problem of inference. There is no universal optimum, only workload-dependent balances shaped by SLAs, traffic patterns, and budget constraints, a point emphasized in infrastructure-focused guides such as RunPod’s analysis of AI inference optimization.

Cost per token as a production SLO

Cost per token has emerged as a production SLO alongside P95 and P99 latency. It captures the combined effects of hardware efficiency, batching strategy, scheduling policy, and memory behavior in a single operational metric.

Unlike aggregate infrastructure spend, cost per token can be tracked per workload and per deployment. Platforms exposing prompt or prefix caching mechanisms, such as those described in the OpenAI prompt caching guide and Anthropic’s documentation, explicitly position cost and latency reductions as first-class operational concerns.

When optimizing one metric breaks the others

Optimizing a single metric in isolation often degrades overall system performance. Aggressive batching can improve throughput while pushing tail latency beyond SLA limits. Latency-first tuning may meet response targets but waste capacity and inflate cost per token.

These second-order effects explain why inference optimization is fundamentally architectural. Changes to batching, caching, or routing ripple through the system and must be evaluated holistically rather than through isolated measurements.

Architecture choices that actually move cost per token

Batching and request shaping

Batching is one of the most effective levers for improving throughput by amortizing overhead across requests. Larger batches increase accelerator utilization but introduce waiting time that directly affects latency and tail behavior.

Request shaping techniques, such as dynamic batch sizing or deadline-aware batching, attempt to balance these effects. Systems like vLLM document how batching interacts with KV-cache allocation and scheduling in production inference environments, as explained in the vLLM design documentation.

Also read : vLLM vs TensorRT-LLM: Inference Runtime Guide

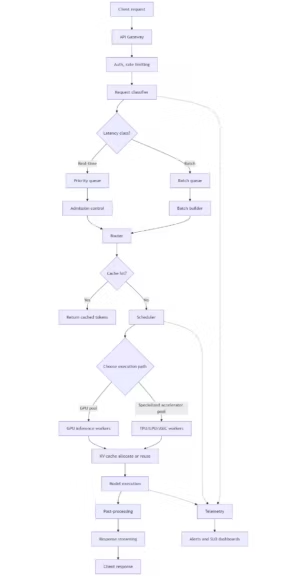

Scheduling, routing, and priority queues

Scheduling policy often has more impact on AI inference cost than hardware choice. Decisions about how requests are queued, prioritized, and routed determine whether accelerators are efficiently utilized or remain idle.

Advanced routing strategies isolate latency-sensitive traffic, prevent noisy neighbors, and ensure batch jobs do not block real-time inference. These patterns are described both in NVIDIA’s Dynamo design and in research systems such as DistServe, detailed in the DistServe paper.

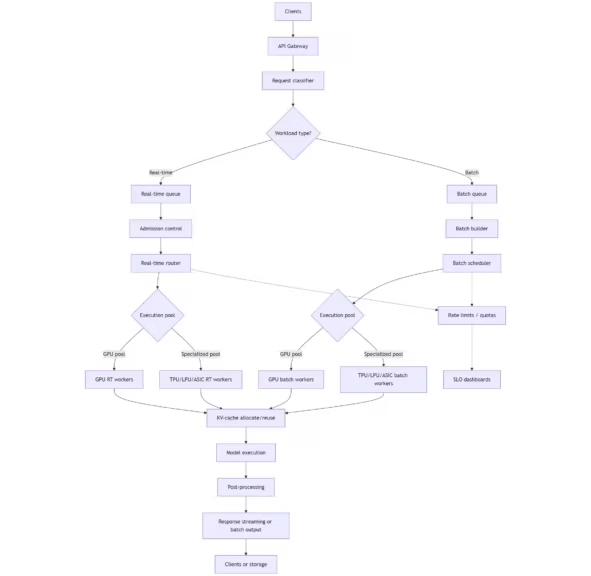

(Click to enlarge)

KV-cache management and memory pressure

KV-cache behavior is a central constraint in large-scale inference. As context lengths grow, memory pressure increases, limiting batch sizes and raising latency. Fragmentation and eviction policies further complicate capacity planning.

Efficient KV-cache management stabilizes latency and reduces memory waste. The vLLM project describes PagedAttention as a way to mitigate fragmentation and improve effective memory utilization, as detailed in the vLLM paper.

Quantization and accelerator utilization

Quantization reduces memory footprint and can increase throughput, but its impact depends on overall utilization. Lower precision alone does not guarantee efficiency if memory access or scheduling remains the bottleneck.

NVIDIA’s introduction of formats such as NVFP4 on Blackwell-class GPUs illustrates how quantization is increasingly designed to preserve accuracy while enabling higher utilization under inference workloads, as outlined in the NVIDIA Developer Blog.

| Optimization lever | Primary effect | Secondary impact | Typical trade-off | Best-fit workload |

|---|---|---|---|---|

| Batching | Increases throughput and utilization | Raises queuing time | Higher tail latency | Batch, high-volume inference |

| Dynamic batch sizing | Balances latency and utilization | Adds scheduler complexity | Less predictability | Mixed workloads |

| Request scheduling | Improves resource allocation | Requires policy tuning | Risk of starvation | Real-time and mixed workloads |

| Routing and isolation | Protects latency-sensitive traffic | Reduces global utilization | Fragmented capacity | Mixed real-time and batch |

| KV-cache reuse | Reduces memory overhead | Cache management complexity | Eviction and fragmentation | Long-context inference |

| Quantization | Lowers memory footprint | Accuracy and compatibility constraints | Model-specific tuning | Stable production models |

| Accelerator specialization | Improves predictability and efficiency | Reduced flexibility | Higher integration cost | High-scale, stable inference |

| Admission control | Enforces SLAs | Rejects or delays requests | Lower peak throughput | Real-time systems |

GPUs vs specialized inference hardware, when architecture beats specs

General-purpose GPUs, flexibility and its limits

GPUs dominate inference deployments due to their flexibility and mature software ecosystems. They support diverse models and workloads, making them suitable for heterogeneous environments and rapid experimentation.

This flexibility has limits. GPUs rely heavily on external memory, dynamic scheduling, and complex runtimes. Under latency-sensitive inference loads, these characteristics can reduce predictability and utilization, increasing AI inference cost.

TPUs, LPUs, and inference ASICs, predictable execution models

Specialized inference hardware emphasizes predictability over generality. TPUs, LPUs, and inference ASICs often use deterministic execution models, large on-chip memory, and simplified control paths to reduce variance and improve utilization.

Google positions TPU v6e and TPU v7 Ironwood as inference-first architectures designed for energy efficiency and predictable performance, as described in the Google Cloud blog and related documentation.

Also read : Understanding Google TPU Trillium: How Google’s AI Accelerator Works

Decision boundaries, workload stability, scale, and energy

The choice between GPUs and specialized hardware depends on workload characteristics. Stable models, high request volumes, and predictable traffic favor specialization. Rapidly changing models or diverse workloads favor GPUs.

Energy and power density increasingly influence these decisions. Analyses from the Uptime Institute and reporting by The Register show that power delivery and cooling are now first-order constraints.

| Dimension | General-purpose GPUs | Specialized inference hardware (TPU, LPU, ASIC) |

|---|---|---|

| Primary design goal | Flexibility across models and workloads | Predictable, inference-first execution |

| Execution model | Dynamic scheduling, general-purpose kernels | Deterministic or semi-static execution paths |

| Latency behavior | Variable under load, sensitive to contention | More stable, predictable tail latency |

| Throughput scaling | Strong with batching, limited by memory traffic | Optimized for sustained, steady-state inference |

| Utilization efficiency | Often reduced by latency constraints | Higher utilization for well-defined workloads |

| Memory architecture | Heavy reliance on external HBM and KV-cache | Large on-chip memory, reduced memory movement |

| Software ecosystem | Mature, broad framework and tooling support | Tighter coupling between compiler, runtime, and model |

| Model flexibility | High, supports frequent model changes | Lower, favors stable and standardized models |

| Energy efficiency | Good but workload-dependent | Optimized for energy per inference operation |

| Operational complexity | Easier experimentation, harder optimization | Higher upfront integration, simpler steady-state ops |

| Best-fit workloads | Heterogeneous, evolving, mixed use cases | High-volume, stable, latency-sensitive inference |

| Cost per token dynamics | Highly sensitive to utilization and batching | More predictable once architecture is tuned |

Real-time, batch, and mixed inference workloads

Real-time inference, latency-first systems

Real-time inference prioritizes tail latency above all else. Systems are designed to meet strict deadlines, often at the expense of utilization. Dedicated capacity and conservative batching are common patterns.

In these environments, cost per token reflects the price of predictability. Maintaining SLAs requires accepting lower average efficiency to avoid worst-case delays.

Batch inference, throughput and amortization

Batch inference workloads, such as offline analytics or periodic processing, emphasize throughput and amortization. Latency constraints are looser, enabling large batches and higher utilization.

When scheduling and memory management are optimized, batch inference typically achieves lower AI inference cost per token. Inefficient orchestration, however, can erase these gains.

Mixed workloads, routing and isolation patterns

Most production systems support both real-time and batch inference. Mixing these workloads on shared infrastructure introduces contention unless explicitly managed.

Effective architectures use routing, isolation, or tiered clusters to protect latency-sensitive traffic while preserving throughput for batch jobs. These designs are increasingly common in large-scale inference platforms.

Energy, power density, and the physical limits of inference

Power, cooling, and utilization ceilings

Inference at scale is constrained by physical limits. Power delivery, cooling capacity, and rack density cap how much compute can be deployed within a data center footprint.

As accelerators grow more powerful, these constraints tighten. According to analyses by the Uptime Institute, modern AI racks operate at power densities far above historical norms.

Why energy per token shapes architecture choices

Energy per token connects physical constraints directly to AI inference cost. Architectures that reduce memory movement, improve locality, and stabilize execution lower energy consumption per request.

This relationship increasingly drives hardware selection and system design. Reports from the International Energy Agency highlight AI inference as a growing contributor to global data center electricity demand.

For a deeper look at how power availability and grid constraints are shaping AI infrastructure decisions, see our analysis on AI Electricity Demand: Why Power Grids Are the Bottleneck.

What this means for teams designing inference-first systems

From model selection to system design

Inference performance and cost are shaped less by model choice than by system design. Teams that focus exclusively on models miss larger gains available through scheduling, routing, and workload management.

Treating inference as a distributed systems problem aligns optimization around utilization, predictability, and AI inference cost rather than peak benchmark performance.

A practical decision flow for modern inference systems

Designing inference-first systems starts with workload analysis, followed by explicit latency and cost per token targets. Hardware choice comes after architectural decisions, not before.

Teams should evaluate utilization, tail latency, and energy together, iterating on architecture before scaling infrastructure. This approach leads to predictable performance and sustainable AI inference cost in 2026 and beyond.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!