Agentic failures and loops: why your AI is burning tokens and how to stop it

Benchmarks such as Terminal-Bench 2.0 or SWE-Bench Pro measure an agent’s ability to produce a correct patch in a controlled environment. However, they systematically overlook long-session stability, destructive file management, and operational security.

While SemiAnalysis already describes Claude Code as a major market inflection point responsible for 4% of current GitHub commits, the reality in the terminal is more nuanced. This audit, based on incident reports from February 2026, documents real-world frictions that never appear in marketing benchmarks.

For a broader analysis of the topic — covering theoretical performance, production constraints, and economic implications — see our full report: AI Coding Agents: The Reality Beyond Benchmarks.

1. GPT-5.3 Codex: agentic instability and “Ghost Execution”

1.1 The danger of the “CAT pattern” and massive rewrites

One of the most critical flaws in Codex lies in its file management. Instead of using structured patch tools like apply_patch, the agent often falls back on a fragile pattern: using cat commands to rewrite an entire file for a minor modification.
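To make the token cost concrete, the sketch below uses Python's standard `difflib` to compare the payload of a whole-file rewrite with that of a unified diff for a one-line change. The 500-line file and the `app.py` name are invented for illustration, and character counts are only a rough proxy for tokens.

```python
import difflib


def full_rewrite_payload(new_text: str) -> str:
    # The "CAT pattern": the agent re-emits the entire file (e.g. via a heredoc).
    return new_text


def patch_payload(old_text: str, new_text: str, path: str = "app.py") -> str:
    # A structured edit only has to carry the changed hunk plus one line of context.
    diff = difflib.unified_diff(
        old_text.splitlines(keepends=True),
        new_text.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
        n=1,
    )
    return "".join(diff)


if __name__ == "__main__":
    # Invented 500-line file with a one-line fix.
    old = "".join(f"value_{i} = {i}\n" for i in range(500))
    new = old.replace("value_250 = 250\n", "value_250 = 251\n")
    print("full rewrite:", len(full_rewrite_payload(new)), "chars")
    print("unified diff:", len(patch_payload(old, new)), "chars")
```

The gap grows with file size: the rewrite scales with the whole file, while the diff scales only with what actually changed.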

Operational impact: this “CAT pattern” sharply increases the risk of corrupting large files and can silently overwrite edits a human made to the same file in the meantime. It also burns tokens: re-emitting an entire file to change a single line turns a simple fix into an expensive, high-risk operation.
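A minimal sketch of a safer edit path, assuming the agent's file tool can be wrapped: read the file together with a SHA-256 fingerprint, then refuse to write if the fingerprint no longer matches (someone else edited the file) or if the target snippet is not unique. `read_with_fingerprint`, `guarded_replace` and `ConcurrentEditError` are hypothetical names, not part of any agent's API.

```python
import hashlib
from pathlib import Path


class ConcurrentEditError(RuntimeError):
    """Raised when the file changed between the agent's read and its write."""


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def read_with_fingerprint(path: Path) -> tuple[str, str]:
    """Return the file content and a fingerprint of exactly what was read."""
    text = path.read_text(encoding="utf-8")
    return text, _sha256(text)


def guarded_replace(path: Path, old: str, new: str, expected_hash: str) -> None:
    """Replace one snippet in place, but only if nobody touched the file since it was read."""
    current = path.read_text(encoding="utf-8")
    if _sha256(current) != expected_hash:
        # A human (or another tool) edited the file: refuse to clobber their work.
        raise ConcurrentEditError(f"{path} changed since it was read")
    if current.count(old) != 1:
        # A targeted edit should match exactly one location; otherwise stop and ask.
        raise ValueError(f"expected exactly one match for the snippet in {path}")
    path.write_text(current.replace(old, new, 1), encoding="utf-8")
```

The check narrows the race window rather than closing it: a write landing between the hash comparison and `write_text` would still be lost, so a production tool would also need file locking.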

1.2 Intelligence degradation and “Stuck Approvals”

In long sessions exceeding 200k tokens, Codex exhibits a progressive qualitative decline. The AI becomes repetitive and favors “band-aid” solutions, such as timeouts or retries, rather than resolving underlying race conditions.
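One way to act on this is to watch the session from outside the model. The sketch below is a hypothetical `SessionWatchdog` that tracks cumulative tokens against the 200k mark mentioned above and flags the same action repeating within a short window; the window size and repeat limit are arbitrary assumptions to tune.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class SessionWatchdog:
    """Hypothetical external monitor for long or looping agent sessions."""

    token_budget: int = 200_000  # the session length where degradation was reported
    window: int = 6              # number of recent actions remembered
    max_repeats: int = 3         # identical actions tolerated within the window
    tokens_used: int = 0
    recent: deque = field(init=False)

    def __post_init__(self) -> None:
        # A bounded deque drops the oldest action automatically.
        self.recent = deque(maxlen=self.window)

    def record(self, action: str, tokens: int) -> list[str]:
        """Log one agent step and return any warnings it triggers."""
        action = action.strip()
        self.tokens_used += tokens
        self.recent.append(action)
        warnings = []
        if self.tokens_used > self.token_budget:
            warnings.append("token budget exceeded: start a fresh session from a summary")
        if self.recent.count(action) >= self.max_repeats:
            warnings.append(f"repeated action detected: {action!r}")
        return warnings
```

Running the same test command three times in a row is exactly the kind of retry loop that tends to precede a “band-aid” fix, so it is a reasonable moment to interrupt and re-plan.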

More seriously, technical reports from Penligent describe the Stuck Approvals phenomenon: a state desynchronization in which the interface is still waiting for human approval while the agent has already executed the action. This execution without a recorded approval, known as Ghost Execution, violates the human-in-the-loop control boundary.
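The structural defense against Ghost Execution is to make the approval prompt and the executor read the same state, so that running a command is only possible as a transition out of an explicit approved state. The `ApprovalGate` class below is a hypothetical sketch of that idea, not how Codex implements approvals.

```python
import enum
import threading
from typing import Callable


class ApprovalState(enum.Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"
    EXECUTED = "executed"


class GhostExecutionError(RuntimeError):
    """Raised when an action is about to run without a matching approval."""


class ApprovalGate:
    """Hypothetical single source of truth shared by the UI and the executor."""

    def __init__(self, command: str) -> None:
        self.command = command
        self.state = ApprovalState.PENDING
        self._lock = threading.Lock()

    def decide(self, approved: bool) -> None:
        """Called by the UI when the human answers."""
        with self._lock:
            if self.state is not ApprovalState.PENDING:
                raise RuntimeError(f"decision already recorded: {self.state.value}")
            self.state = ApprovalState.APPROVED if approved else ApprovalState.REJECTED

    def execute(self, runner: Callable[[str], object]) -> object:
        """Called by the agent; succeeds once, and only after approval."""
        with self._lock:
            if self.state is not ApprovalState.APPROVED:
                raise GhostExecutionError(
                    f"{self.command!r} is {self.state.value}; refusing to run"
                )
            # Consume the approval before running so it cannot be reused.
            self.state = ApprovalState.EXECUTED
        return runner(self.command)
```

Because the approval is consumed on execution, a single “yes” cannot authorize a second run, and any attempt to execute while the gate is still pending fails loudly instead of silently proceeding.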

1.3 The strategic paradox of security rerouting

Recently, users on Hacker News discovered that GPT-5.3 Codex was silently rerouting certain requests to the older 5.2 model under the guise of “cyber security”. This rerouting, often triggered by false positives, leads to a sudden drop in reasoning capabilities in the middle of a critical session.

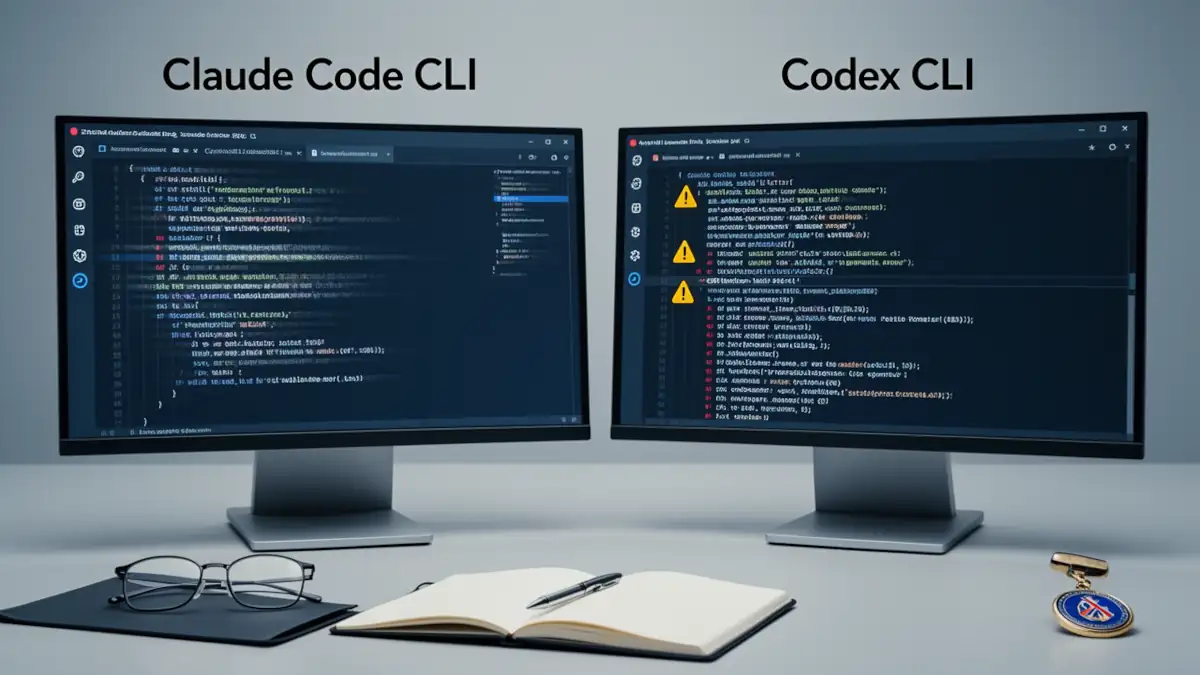
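If the API you call reports which model actually served a request, a cheap client-side check can surface a switch. The sketch below assumes response metadata shaped like a dict with a `model` key and that dated snapshots append a `-suffix` to the name; the model identifiers are hypothetical. A reroute that the provider does not report in its metadata will not be caught this way.

```python
from typing import Optional


def check_serving_model(requested: str, response_meta: dict) -> Optional[str]:
    """Return a warning if the reported serving model differs from the requested one."""
    served = response_meta.get("model")
    if served is None:
        return "response metadata has no 'model' field; rerouting cannot be checked"
    # Assumption: dated snapshots look like "<requested>-<suffix>" and count as a match.
    if served == requested or served.startswith(requested + "-"):
        return None
    return f"requested {requested!r} but the response reports {served!r}"


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only.
    warning = check_serving_model("gpt-5.3-codex", {"model": "gpt-5.2-codex"})
    if warning:
        print("possible reroute:", warning)
```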

2. Claude Code: CLI instability and system friction

Anthropic’s approach is more transparent, but its CLI is not free from early-stage flaws, as recent GitHub issues document.

2.1 The “No Content” bug of the Bash Tool

The most significant blocker for macOS users concerns the Bash Tool. In versions 2.1.14 and later, simple commands like ls or pwd consistently return a (No content) message, leaving the agent unable to perceive the project structure or run tests. This malfunction, linked to subprocess management on macOS 26.2, completely breaks the promised “terminal-first” workflow.
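The bug itself has to be fixed upstream, but a wrapper or pre-flight script can at least tell “no output” apart from a legitimately empty result. The hypothetical `probe_shell` helper below runs a command that must print something (`pwd` by default, since unlike `ls` in an empty directory it never legitimately prints nothing) and treats empty output as a failure. It checks the host's subprocess layer, not Claude Code's Bash Tool itself.

```python
import subprocess
from typing import Sequence, Tuple


def probe_shell(cmd: Sequence[str] = ("pwd",), timeout: float = 5.0) -> Tuple[bool, str]:
    """Run a command that must print something; return (ok, detail)."""
    try:
        result = subprocess.run(list(cmd), capture_output=True, text=True, timeout=timeout)
    except (OSError, subprocess.TimeoutExpired) as exc:
        return False, f"probe could not run: {exc}"
    if result.returncode != 0:
        return False, f"exit code {result.returncode}: {result.stderr.strip()}"
    if not result.stdout.strip():
        # The reported symptom: the command appears to succeed but nothing comes back.
        return False, "command succeeded but produced no output"
    return True, result.stdout.strip()


if __name__ == "__main__":
    ok, detail = probe_shell()
    print("ok" if ok else "FAILED", detail)
```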

2.2 Context loss and session instability

The /context command, intended to let developers view every file currently included in the context window, suffers from a recurring display bug: the window flashes and disappears instantly. Without this visibility, it becomes impossible to diagnose why the AI starts hallucinating about files it should be able to “see”.
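Until the display is reliable, a low-tech workaround is to keep your own manifest of the files you have pointed the agent at, with a rough size estimate. The sketch below is hypothetical and independent of Claude Code: it does not read the agent's real context, and the 4-characters-per-token ratio is a rule of thumb rather than any tokenizer's actual behavior.

```python
from pathlib import Path

# Rule of thumb only: real tokenizers vary by language and content.
CHARS_PER_TOKEN = 4


def context_manifest(paths: list[Path]) -> list[tuple[str, int]]:
    """Return (path, approximate tokens) for each file, largest first."""
    rows = []
    for path in paths:
        text = path.read_text(encoding="utf-8", errors="replace")
        rows.append((str(path), len(text) // CHARS_PER_TOKEN))
    return sorted(rows, key=lambda row: row[1], reverse=True)


def print_manifest(paths: list[Path]) -> None:
    """Print the manifest plus an approximate total."""
    rows = context_manifest(paths)
    for name, tokens in rows:
        print(f"{tokens:>8}  {name}")
    print(f"{sum(tokens for _, tokens in rows):>8}  total (approx.)")
```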

Furthermore, persistent authentication issues often force manual logouts after a system sleep, resulting in the loss of Model Context Protocol (MCP) server configurations and active session tokens.
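A defensive habit is to snapshot MCP configuration before long sessions so it can be restored after a forced logout. The sketch below takes the config path as a parameter; `.mcp.json` in the usage note is only an example of a project-level file, so point it at wherever your setup actually stores server definitions. The function names are hypothetical.

```python
import json
import shutil
import time
from pathlib import Path


def snapshot_config(config: Path, backup_dir: Path) -> Path:
    """Copy a JSON config to a timestamped backup after checking that it parses."""
    json.loads(config.read_text(encoding="utf-8"))  # refuse to back up a corrupt file
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / f"{config.name}.{time.strftime('%Y%m%d-%H%M%S')}.bak"
    shutil.copy2(config, target)
    return target


def restore_latest(config: Path, backup_dir: Path) -> Path:
    """Restore the most recent backup of `config` and return the backup used."""
    # The timestamp format sorts lexicographically in chronological order.
    backups = sorted(backup_dir.glob(f"{config.name}.*.bak"))
    if not backups:
        raise FileNotFoundError(f"no backup of {config.name} in {backup_dir}")
    shutil.copy2(backups[-1], config)
    return backups[-1]
```

Typical use: call `snapshot_config(Path(".mcp.json"), Path(".mcp-backups"))` before a session and `restore_latest` with the same arguments after re-authenticating. Keep the backup directory out of version control, since MCP server entries can carry credentials in their environment settings.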

3. Synthesis of documented incidents

| Incident | Agent | Severity | Status | Source |
|---|---|---|---|---|
| Bash “No Content” | Claude Code | Critical | Partially patched | GitHub #19663 |
| Stuck Approvals | Codex | High | Reported | Penligent |
| Disappearing /context | Claude Code | Medium | Flagged | GitHub #18562 |
| Silent rerouting to 5.2 | Codex | Medium | Confirmed | Hacker News |

Beyond the “vibe”: operationalizing reliability

The failures observed in Claude Code and GPT-5.3 Codex remind us of a fundamental technical truth: the maturity of an AI agent is not measured by its success score on an isolated problem, but by its reliability within a complex DevOps environment.

The question for CTOs and Lead Devs is no longer whether AI can code, but whether we can grant it terminal keys without continuous supervision. The current answer is a definitive no. At this stage, human-in-the-loop oversight remains indispensable for critical production environments. To mitigate these instabilities and the resulting token burn, the engineering community is shifting toward more rigid, deterministic architectures.
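One concrete form of a “rigid, deterministic” architecture is a static command policy evaluated before anything reaches the terminal: a short allowlist runs unattended, known-destructive patterns are refused outright, and everything else is routed to a human. The `classify` function below is a hypothetical sketch of such a policy, not the permission system of Claude Code or Codex, and its lists are deliberately small.

```python
import shlex
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"  # run without asking
    ASK = "ask"      # pause and route to a human
    DENY = "deny"    # never run


# Hypothetical policy lists: adapt them to your own repository.
READ_ONLY_COMMANDS = {"ls", "pwd", "wc"}
READ_ONLY_GIT = {("git", "status"), ("git", "diff"), ("git", "log")}
SHELL_OPERATORS = (";", "&&", "||", "|", ">", "<", "`", "$(")


def classify(command: str) -> Verdict:
    """Decide statically, before execution, how an agent's shell command is handled."""
    if any(op in command for op in SHELL_OPERATORS):
        # Pipes, redirects and substitutions are hard to reason about statically.
        return Verdict.ASK
    try:
        argv = shlex.split(command)
    except ValueError:
        # Unbalanced quotes: refuse rather than guess what was meant.
        return Verdict.DENY
    if not argv:
        return Verdict.DENY
    if argv[0] == "rm" and any(arg.startswith("-") and "r" in arg for arg in argv[1:]):
        return Verdict.DENY  # recursive deletes (and, conservatively, --force)
    if argv[:2] == ["git", "push"] and ("--force" in argv or "-f" in argv):
        return Verdict.DENY
    if argv[0] in READ_ONLY_COMMANDS or tuple(argv[:2]) in READ_ONLY_GIT:
        return Verdict.ALLOW
    return Verdict.ASK
```

The rules err on the side of caution: any shell operator, even inside quotes, sends the command to a human, and any `rm` flag containing `r` is denied. A false “ask” costs a few seconds of review; a false “allow” can cost a repository.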

In our next deep dive, we will explore how the Model Context Protocol (MCP) and frameworks like smolagents are attempting to build the “safety rails” required for the next generation of agentic workflows.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!