Context Packing vs. RAG: How Google Gemini 3 solves AI amnesia

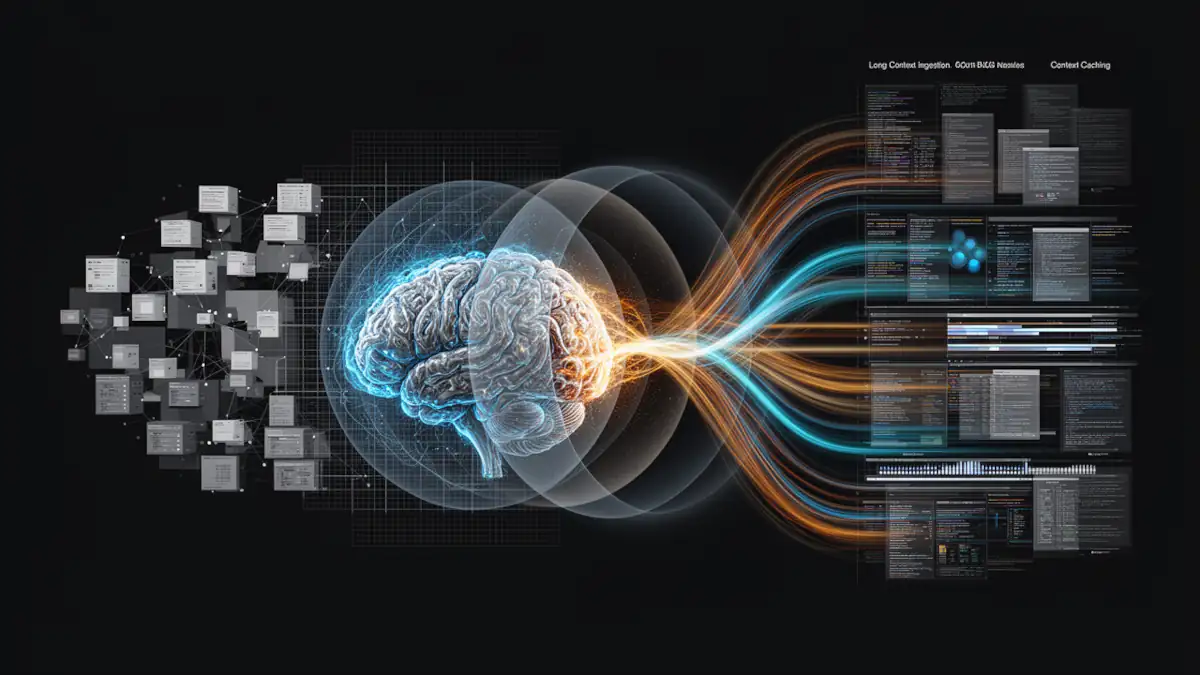

In the race for personalized artificial intelligence, managing long-term memory has become a primary technical bottleneck. While the industry standard relies on RAG (Retrieval-Augmented Generation), Google has introduced a hybrid approach with Gemini 3 that we call “Context Packing”.

Note: “Context Packing” is an editorial term used to describe the synergy between Gemini’s native long context, context caching, and tool-driven retrieval. While not an official Google trademark, this concept helps structure the understanding of Google’s software architecture, as previously discussed in our analysis of Google Gemini’s strategy vs. OpenAI.

The problem with traditional RAG: fragmented memory

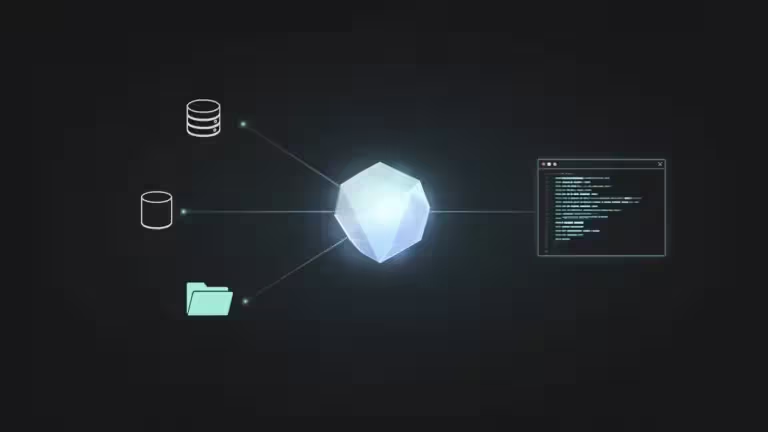

For an AI to access your documents, the current standard is traditional RAG. This process splits your data into small segments called “chunks”, converts each chunk into a vector, and stores those vectors in a vector database that serves as contextual memory. At query time, the system retrieves the fragments whose vectors are mathematically closest to the query and passes only those fragments to the model.
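To make the chunk-and-retrieve loop concrete, here is a minimal sketch in Python. It is not how any production system works: the bag-of-words `embed` function is a toy stand-in for a real embedding model, the in-memory list stands in for a vector database, and every function name is a hypothetical chosen for illustration.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 9) -> list[str]:
    """Split text into fixed-size word windows (the "chunks")."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy embedding (assumption): a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks whose vectors are closest to the query vector."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


document = (
    "The invoice was sent on Monday to the client. "
    "The client disputed the amount on Wednesday after review. "
    "A corrected invoice was issued on Friday and paid."
)
chunks = chunk(document, size=9)
top = retrieve("When was the corrected invoice issued?", chunks, k=1)
print(top[0])
```

With `k=1`, the model would see only the sentence about Friday. The Monday invoice and the Wednesday dispute stay in the database, which is the kind of fragmentation discussed in this section.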

While effective, this basic implementation suffers from real limitations:

- Loss of structure: By reading only isolated fragments, the AI can lose a document’s hierarchy or the fine chronology of a conversation.

- Retrieval imprecision: Relevance depends entirely on the vector search engine. If the extraction fails, the AI lacks the information needed to reason correctly.

The evolution toward “Context Packing” under Gemini 3

Rather than breaking away from RAG, Google is evolving the approach into Very-Long-Context RAG. This architecture leverages Gemini 3’s native ability to process up to 1 million tokens in a single context window.

According to the whitepaper “Building Personal Intelligence”, the process is no longer a simple extraction of fragments but a dynamic synthesis structured into three phases:

- Query Understanding: Precisely identifying what the user is looking for.

- Tool-driven Retrieval: Identifying entire sources or coherent subsets rather than isolated chunks.

- Synthesis and Injection (The Packing): Relevant data is synthesized and “packed” directly into the AI’s context window.
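The three phases above can be sketched as a small pipeline. This is an illustrative toy under stated assumptions, not Google's implementation: the `Source` type, the keyword-based query understanding, the tag-overlap scoring, and the word-count token estimate are all hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Source:
    name: str
    tags: set[str]
    text: str


STOP_WORDS = {"the", "a", "my", "what", "is", "for", "of", "to", "on"}


def understand_query(query: str) -> set[str]:
    """Phase 1 (toy): reduce the query to a set of lowercase keywords."""
    words = {w.strip("?.,!").lower() for w in query.split()}
    return words - STOP_WORDS


def select_sources(intent: set[str], sources: list[Source]) -> list[Source]:
    """Phase 2 (toy): pick whole sources whose tags overlap the intent."""
    scored = [(len(intent & s.tags), s) for s in sources]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [s for score, s in ranked if score > 0]


def count_tokens(text: str) -> int:
    """Crude token estimate (assumption): whitespace-separated words."""
    return len(text.split())


def pack(selected: list[Source], budget: int) -> str:
    """Phase 3 (toy): concatenate whole sources while the budget allows."""
    parts, used = [], 0
    for s in selected:
        cost = count_tokens(s.text)
        if used + cost > budget:
            continue  # skip sources that would overflow the window
        parts.append(f"[{s.name}]\n{s.text}")
        used += cost
    return "\n\n".join(parts)


sources = [
    Source("calendar", {"flight", "trip", "meeting"},
           "Flight to Lisbon departs Friday at 09:40."),
    Source("email", {"flight", "booking", "hotel"},
           "Booking confirmed: hotel in Lisbon, two nights."),
    Source("notes", {"recipe"},
           "Grandma's soup recipe: leeks, potatoes, cream."),
]

intent = understand_query("What is my flight booking?")
selected = select_sources(intent, sources)
context = pack(selected, budget=1000)
print(context)
```

The design point is that whole sources, not fragments, are ranked and injected, and the only hard limit is the token budget of the context window.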

Supported by Google’s sixth-generation TPUs (Trillium), this mechanism is designed to keep retrieval precise even at very large context sizes. Public benchmarks for earlier long-context Gemini models already showed scores exceeding 99% on the “Needle-in-a-Haystack” test, and Gemini 3 builds on this near-perfect retrieval capability within massive contexts.

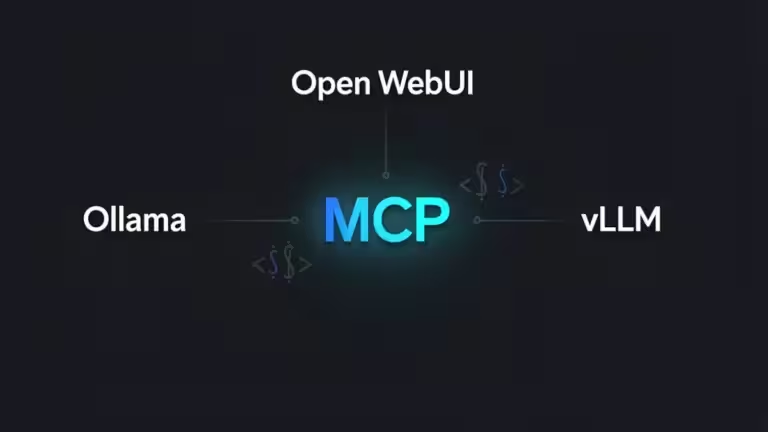

Why doesn’t Google use a single term for this architecture?

Unlike a “black box” product, Google’s infrastructure is modular. It combines distinct building blocks such as Context Caching and semantic retrieval. Because each block is documented separately, the technical documentation has no single term for the whole, even though the effect produced for the user is that of a unified and immediate memory.
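To illustrate why caching is a separate building block, here is a rough local analogy in Python. The `ContextCache` class is hypothetical and is not the Gemini API: a real provider-side cache stores the processed context on the server, whereas this sketch only shows the idea of registering a large packed context once and reusing it by handle across questions.

```python
import hashlib


class ContextCache:
    """Hypothetical in-memory stand-in for a provider-side context cache."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def put(self, context: str) -> str:
        """Register a packed context and return a short content-based handle."""
        key = hashlib.sha256(context.encode("utf-8")).hexdigest()[:16]
        if key in self._store:
            self.hits += 1  # same context seen before: nothing new to store
        else:
            self.misses += 1
            self._store[key] = context
        return key

    def build_prompt(self, key: str, question: str) -> str:
        """Combine the cached context with a fresh question."""
        return f"{self._store[key]}\n\nQuestion: {question}"


cache = ContextCache()
packed = "Booking confirmed: hotel in Lisbon, two nights."
handle_first = cache.put(packed)
handle_second = cache.put(packed)  # second call reuses the stored entry
prompt = cache.build_prompt(handle_first, "Where am I staying?")
```

In this analogy, retrieval decides what goes into the packed context, while the cache decides whether that context has to be sent and processed again.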

Comparison: A new standard of precision

| Feature | “Basic” RAG | Gemini 3 Approach |
| --- | --- | --- |
| Granularity | Isolated fragments (Chunks) | Synthesized subsets |
| Accuracy | Dependent on vector engine | Near 100% (Native context) |
| Reasoning | Limited to extracted segments | Global and chronological |
| Infrastructure | Standard Cloud / GPU | TPU Trillium Optimization |

Limitations: The cost of context-intensive usage

This breadth comes at a price: every query consumes a large number of tokens, because the packed context is sent along with the question. While Google can absorb this cost through vertical integration, this centralization raises questions about data sovereignty and privacy. Furthermore, we have identified several specific technical limitations of Personal Intelligence that show the technology is still in a refinement phase.
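A back-of-the-envelope calculation shows the scale of the difference in input tokens. All numbers below are hypothetical, chosen only for illustration: the chunk count, chunk size, packed context size, and especially the price per million tokens are assumptions, not published figures.

```python
def rag_input_tokens(k: int, chunk_tokens: int, question_tokens: int) -> int:
    """Input size when only the top-k chunks accompany the question."""
    return k * chunk_tokens + question_tokens


def packed_input_tokens(context_tokens: int, question_tokens: int) -> int:
    """Input size when a large packed context accompanies the question."""
    return context_tokens + question_tokens


def cost(tokens: int, price_per_million: float) -> float:
    """Cost of a query's input at a given price per million tokens."""
    return tokens / 1_000_000 * price_per_million


# Hypothetical workload: 5 chunks of 400 tokens vs. an 800,000-token context.
rag = rag_input_tokens(k=5, chunk_tokens=400, question_tokens=50)
packed = packed_input_tokens(context_tokens=800_000, question_tokens=50)
ratio = packed / rag

# Hypothetical price of 1.00 per million input tokens (not a real tariff).
price = 1.00
print(f"RAG:    {rag} tokens, cost {cost(rag, price):.5f}")
print(f"Packed: {packed} tokens, cost {cost(packed, price):.5f}")
print(f"Ratio:  about {ratio:.0f}x more input tokens per query")
```

Under these assumptions the packed query carries roughly 390 times more input tokens than the chunk-based one. Whatever the actual price per token, the ratio is what drives the cost gap.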

For users seeking a different balance, understanding the differences between local AI and the Cloud becomes essential.

Conclusion: An architectural reading of the future of AI

Gemini 3’s “Context Packing” demonstrates that the context window is no longer just a passive reservoir but a dynamic reasoning space. By merging search and synthesis, Google offers a robust answer to the challenge of mass personalization.
