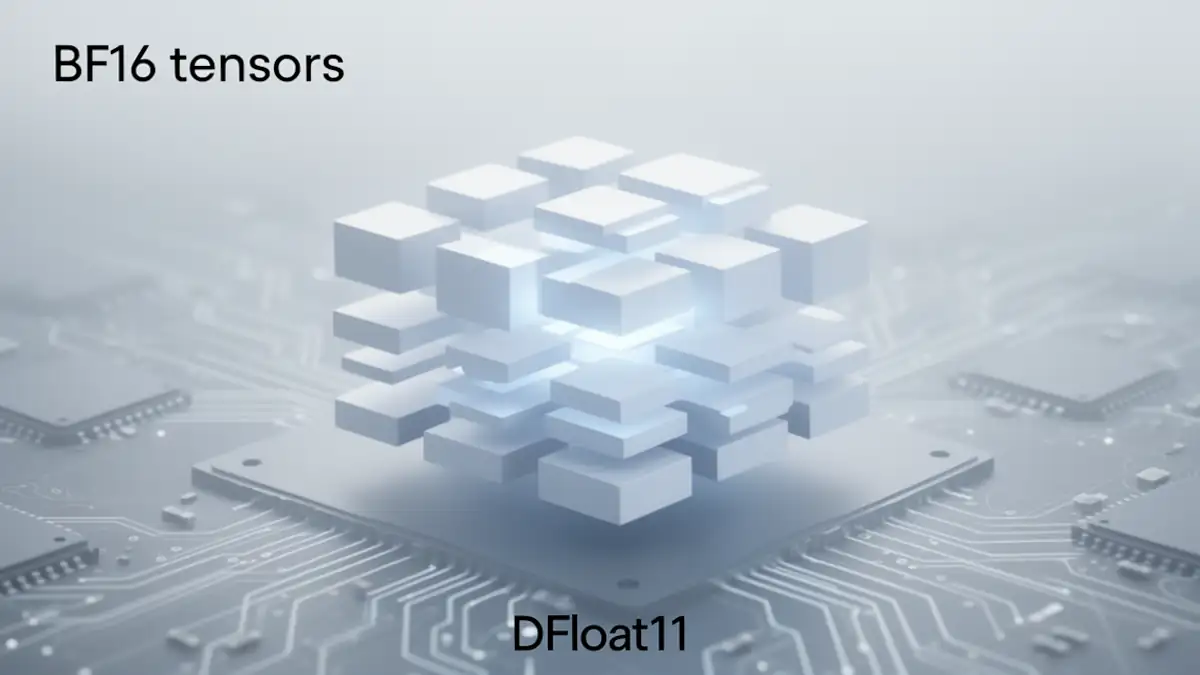

DFloat11 : Lossless BF16 Compression for Faster LLM Inference

DFloat11 compresses BF16 model weights by about 30 percent while preserving bit-perfect accuracy, enabling faster and more memory-efficient LLM inference on GPU-constrained systems. Its GPU-native decompression kernel reaches roughly 200 GB per second, offering a practical way to mitigate the 2025 GPU shortage without sacrificing model precision. This article explains how DFloat11 works, quantifies its gains, and shows how to integrate it across modern inference pipelines.

What DFloat11 Solves: Memory Pressure and the 2025 GPU Shortage

Large language models continue to grow in parameter count, memory footprint, and context length, pushing GPU resources to their limits. In 2025, GPU availability remains tight worldwide, with high-VRAM accelerators in short supply. Under these conditions, BF16 weight storage has become a critical bottleneck for inference workloads, limiting batch size, context length, and the ability to host multiple models on the same node.

| Year | AI Model | Parameters (approx.) | Context / capability | Impact on GPU demand |

|---|---|---|---|---|

| 2020 | GPT-3 | 175 billion | Short context, light inference | Start of GPU demand ramp-up |

| 2021 | Megatron-Turing NLG | 530 billion | Massive model but limited deployment | GPU load mainly on training |

| 2022 | PaLM | 540 billion | Improved reasoning | GPU infrastructure still sufficient |

| 2023 | LLaMA 2, GPT-4 | 70B → 1T (estimated) | First widespread usage | Early pressure on clusters |

| 2024 | Gemini 1.5, Mixtral, LLaMA 3 | 70B → 1.5T | Very long contexts (→ compute-intensive) | Significant increase in H100 demand |

| 2025 | Gemini 3 Pro, ERNIE 5.0, GPT-5.1 | 1T → multi-trillion | Advanced multimodality, 1M-token context | Compute saturation, hyperscaler wait queues |

Memory-bound behavior now dominates both cloud and on-prem deployments. GPUs have increased in FLOPs, but VRAM growth has plateaued. As a result, engineers face constraints that cannot be resolved by compute alone. DFloat11 directly addresses this problem by reducing BF16 storage requirements without resorting to lossy quantization, enabling more efficient use of available GPUs during the ongoing supply crunch.

LLM Growth Outpaces GPU Supply

Parameter counts have accelerated faster than GPU memory capacity. Models now routinely exceed 70B parameters, and research prototypes stretch into the hundreds of billions. BF16 keeps numerical fidelity but imposes a heavy VRAM cost, especially when replicated across nodes for distributed inference.

This mismatch leads to real-world issues: insufficient space for KV caches, reduced context windows, and forced reliance on multi-GPU tensor parallelism even for workloads that could otherwise run on a single accelerator.

BF16 Footprint as a Scaling Bottleneck

Despite recent advances in FP8 and quantization-aware formats, BF16 remains the safest and most widely adopted precision for LLM inference. It avoids accuracy regressions for reasoning, coding, and evaluation tasks, but its fixed-length representation consumes substantial memory.

Even a modest reduction in BF16 storage can unlock larger context lengths, more concurrent requests, or the ability to deploy a model on a single GPU rather than two. This is particularly relevant for consumer GPUs, where VRAM is limited, and for cloud instances where high-memory hardware is scarce or expensive.

Why Lossless Compression Matters During GPU Scarcity

The 2025 GPU shortage has amplified the importance of memory efficiency. Cloud clusters face high demand and long provisioning delays for H100-class hardware. On-prem teams often run models on GPUs that cannot accommodate large BF16 checkpoints without resorting to KV offload or aggressive quantization.

Alose read : GPU Shortage: Why Data Centers Are Slowing Down in 2025

Lossless compression offers a unique advantage: full accuracy preservation. For research, safety evaluation, and production systems where reproducibility matters, lossy formats introduce risk. DFloat11 offers measurable VRAM relief without modifying model behavior, making it well-suited to both mission-critical and experimental workloads.

How DFloat11 Works: Lossless BF16 Exponent Compression

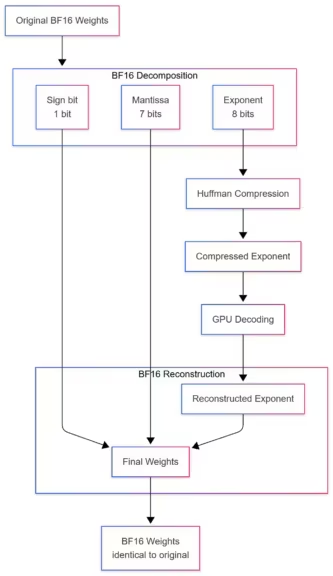

DFloat11 is a lossless compression method designed specifically for BF16 model weights. Instead of modifying mantissa or sign bits, it targets the exponent field, which exhibits predictable statistical patterns in transformer models. By encoding these exponents using a dynamic-length scheme similar to Huffman compression, DFloat11 reduces storage requirements by roughly 30 percent while reconstructing weights exactly.

(Click to enlarge)

Because decompression occurs on the GPU at very high bandwidth, the technique integrates seamlessly into existing inference stacks. The resulting tensors remain valid BF16 values, making DFloat11 compatible with PyTorch, Transformers, and other high-level frameworks.

Dynamic-Length Float and Exponent Encoding

DFloat11 focuses on entropy-compressing the BF16 exponent, which tends to cluster within a narrow range across modern transformer architectures. The method assigns shorter bit patterns to frequent exponent values and longer ones to rare cases. Mantissa and sign bits remain untouched, ensuring that reconstructed values exactly match their BF16 originals.

This approach provides meaningful storage savings without quantization noise or recalibration steps. Because the encoded bitstream is deterministic, the process supports reproducible inference across all hardware configurations.

Preserving Bit-Perfect BF16 Accuracy

Unlike quantization, DFloat11 guarantees that every decompressed weight is bit-identical to the original BF16 value. This is essential for reasoning-heavy workloads, long-context models, and research scenarios where accuracy fidelity is non-negotiable. Tasks involving code generation, safety evaluation, or scientific computation benefit from predictable and fully preserved numerical behavior.

Retaining BF16 precision also avoids accuracy drift and eliminates the engineering overhead associated with post-quantization validation.

GPU-Friendly Decompression Kernel

A defining aspect of DFloat11 is its GPU-native decompression kernel, which achieves throughput near 200 GB per second. The kernel stores compressed exponent tables in fast local memory, allowing rapid exponent mapping and reconstruction. Because decoding happens directly on the GPU, DFloat11 bypasses CPU involvement and avoids PCIe transfer bottlenecks.

These properties make DFloat11 suitable for real-time inference and for high-throughput batch workloads, especially when models must be loaded or swapped frequently across multiple accelerators.

Performance Gains: Memory Savings and Throughput Improvements

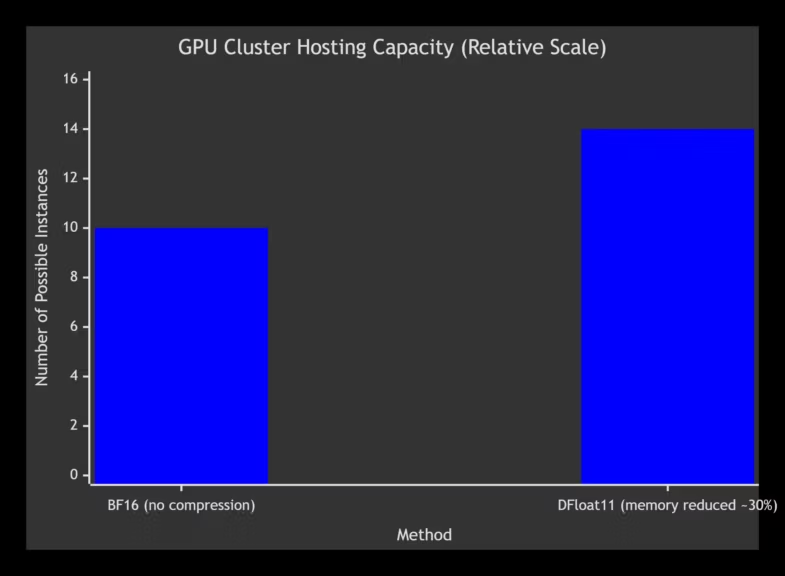

DFloat11 provides two core benefits for LLM inference: reduced VRAM usage and improved data-path efficiency thanks to on-GPU decompression. These advantages extend across transformer-based LLMs, diffusion models, and multimodal pipelines.

The ~30 percent footprint reduction can determine whether a model fits on a single GPU or requires multi-GPU parallelism. It also frees space for longer context windows, larger KV caches, and more concurrent requests in production environments.

~30% VRAM Reduction Across LLMs and Diffusion Models

Benchmarks across Llama, Qwen, Flux, and various diffusion checkpoints show reductions typically between 27 and 32 percent. These gains apply uniformly because the exponent distribution patterns arise naturally from transformer architectures, independent of model family.

For diffusion workflows, this reduction enables larger batch sizes or higher-resolution sampling. Because the format is lossless, image outputs remain identical to those produced with uncompressed BF16 weights.

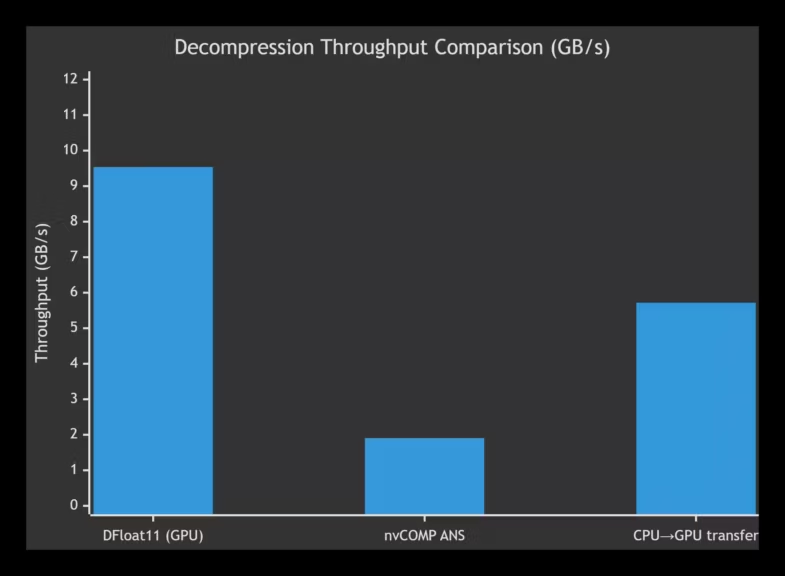

Decompression Throughput vs CPU Transfer and nvCOMP

One of the most significant performance advantages comes from DFloat11’s decompression throughput. GPU-resident decoding avoids costly CPU-to-GPU data transfers and surpasses generic compression methods not optimized for BF16 structure.

Approximate relative performance:

- DFloat11 GPU decompression: ~200 GB/s

- nvCOMP ANS decompression: 10× to 20× slower

- CPU-based decompression followed by GPU transfer: up to 30× slower

This bandwidth advantage directly affects end-to-end inference performance when models must be loaded frequently, such as in microservice-based architectures or dynamic multi-model deployments.

Long-Context Scaling Under Fixed VRAM

Context windows have expanded dramatically, with some models exceeding hundreds of thousands of tokens. Under fixed VRAM budgets, attention buffers and KV caches quickly consume memory. A model that occupies most of VRAM leaves insufficient room for these runtime structures.

DFloat11’s reduction in BF16 footprint enables:

- larger KV caches

- larger attention windows

- higher batch sizes

- more simultaneous inference streams

These improvements directly affect tokens-per-second performance and stability under high load.

Deployment and Integration: Hugging Face, Python Package, ComfyUI Nodes

DFloat11 is designed to integrate cleanly into modern inference stacks. Its compatibility with standard .safetensors workflows, the Python dfloat11 package, and community-maintained ComfyUI nodes makes the method usable across cloud GPUs, on-prem clusters, and consumer-grade hardware. The goal is to reduce BF16 storage without modifying model architectures or inference kernels.

Loading DFloat11 Models via Hugging Face

Developers can load DFloat11-compressed .safetensors files with the familiar Hugging Face workflow. No model-specific patches are required, and inference frameworks treat the decompressed tensors as standard BF16 values. The Python package handles decompression transparently, allowing teams to integrate DFloat11 without adjusting training checkpoints, KV cache implementations, or generation logic.

This approach accelerates onboarding and simplifies collaboration across distributed teams or research groups.

Python Package dfloat11 and GPU-Decompressed Weights

The dfloat11 package provides a reference implementation for encoding and decoding BF16 weights. Its GPU-native decompression kernel reconstructs tensors at high bandwidth, avoiding CPU involvement and PCIe bottlenecks. The API supports PyTorch and Transformers without requiring updates to attention kernels or CUDA extensions.

Because mantissa and sign bits remain untouched, the resulting tensors behave identically to the original BF16 weights. This makes the technique suitable for production inference, research reproducibility, and tasks with strict numerical correctness requirements.

ComfyUI Support via Community Nodes and Offload Modes

ComfyUI does not officially support DFloat11 as part of its core distribution, but the ecosystem provides stable custom nodes that enable loading and using DFloat11-compressed models in diffusion workflows. Installation typically involves adding the ComfyUI-DFloat11 repository to the custom_nodes folder, either through ComfyUI Manager or by cloning the repository directly.

These extensions introduce a dedicated DFloat11 Model Loader node, which replaces standard model-loading nodes in pipelines for Flux, Qwen-Image, or other architectures. Forks such as ComfyUI-DFloat11-Extended further expand compatibility, enabling support for additional models including certain Schnell variants.

DFloat11 also benefits from ComfyUI’s CPU offload mechanisms. The reduced VRAM footprint allows some models to run on consumer GPUs that previously lacked the capacity to load the full BF16 checkpoint. This enables large pipelines such as Flux or high-resolution diffusion sampling to operate on 24–32 GB GPUs without aggressive quantization.

Compatible DFloat11 models on Hugging Face include:

Some workflows embed ComfyUI graphs directly into PNG metadata, streamlining deployment and reducing manual configuration.

DFloat11 vs Quantization: Trade-offs and When to Use Each

Quantization and lossless compression address different constraints. Quantization modifies numerical precision to reduce compute and memory costs, while DFloat11 reduces BF16 storage without altering values. Engineers must choose based on accuracy requirements, latency targets, and hardware limitations.

Lossless vs Lossy Approaches

DFloat11 provides bit-perfect reconstruction, preserving accuracy across reasoning, coding, scientific workloads, and safety evaluations. Quantization introduces representational drift, which can affect stability and reduce output consistency. While quantization can offer additional memory and latency gains, it requires evaluation to ensure task-specific performance remains acceptable.

DFloat11 avoids calibration steps and ensures checkpoint reproducibility across clusters and hardware setups.

Latency, Batch Size, and Interactive Workloads

Quantization can reduce compute latency during attention and MLP operations, especially for batch-1 inference. DFloat11 does not accelerate matrix multiplications directly, but it improves load-time performance and allows larger batch sizes by reducing VRAM usage.

For high-throughput workloads, DFloat11’s smaller footprint can yield higher tokens per second by enabling more concurrent requests or larger batches.

Hybrid Pipelines: Combining DFloat11 and Quantization

Some deployments benefit from using both strategies. A hybrid design might:

- Keep transformer attention layers in DFloat11 for accuracy

- Quantize feed-forward layers to 8-bit or 4-bit

- Use lossy formats for embeddings while applying DFloat11 to sensitive components

This approach maximizes memory efficiency while preserving core reasoning performance.

Limitations and Engineering Constraints

While DFloat11 delivers meaningful memory and throughput benefits, engineers should be aware of specific constraints when evaluating the method for production or research environments. These limitations concern latency behavior, compatibility requirements, and the current maturity of tooling.

Batch-1 Overhead and Interactive Latency

DFloat11 introduces a small decompression overhead per weight, which can affect batch-1 or interactive inference. Although the impact is typically modest, latency-sensitive applications such as real-time assistants or step-by-step reasoning may observe slight delays at the token level. In most cases, the VRAM savings offset this cost by allowing larger KV caches or higher model concurrency.

CUDA Version Requirements and Compatibility

DFloat11 depends on GPU-side decompression kernels that require appropriate CUDA toolchains and relatively recent GPU architectures. Older GPUs or deployments with restricted driver versions may fall back to slower paths or encounter compatibility issues. Engineers running distributed environments or mixed hardware clusters should validate compatibility before large-scale adoption.

Lack of Long-Run Production Benchmarks

As a 2025 technology, DFloat11 has not yet accumulated extensive real-world benchmarking across long-running production workloads or trillion-parameter models. Early results are promising, but long-term performance under multi-tenant stress, cross-node scaling, and memory fragmentation remains an area for future measurement.

Forward Outlook: Scaling Toward Trillion-Parameter Models

As LLMs move closer to trillion-parameter architectures and context lengths stretch from hundreds of thousands to potentially millions of tokens, inference will increasingly operate in memory-bound regimes. GPU FLOPs will continue to grow, but VRAM will not scale at the same rate. Lossless compression formats such as DFloat11 are well-positioned to become essential infrastructure for managing these constraints.

Memory-Bound Regimes and Future GPU Architectures

Future GPU generations will likely increase compute throughput more aggressively than VRAM capacity. This asymmetry places pressure on software-side memory optimizations. DFloat11’s approach aligns with anticipated trends, from unified memory hierarchies to hardware-assisted decompression pathways.

Lossless BF16 compression also supports emerging inference patterns, such as speculative sampling, retrieval-augmented models, and multimodal systems requiring large parameter sets.

Potential Standardization of Lossless Compression

As model distribution pipelines mature, DFloat11 or similar formats may become standard for sharing large checkpoints. Lossless compression reduces bandwidth costs, accelerates deployments, and ensures reproducibility across organizations. Inference engines may adopt native support, and ecosystem tools could incorporate compression directly into training-to-serving workflows.

Key Takeaways

DFloat11 provides a practical, accuracy-preserving way to reduce BF16 storage footprints and increase inference flexibility during the ongoing 2025 GPU shortage. By compressing BF16 exponents without altering mantissa or sign bits, it preserves all numerical precision while reducing VRAM usage by roughly 30 percent.

The method enables larger context windows, higher batch sizes, and more efficient multi-model deployment without requiring model retraining or quantization calibration. Its GPU-native decompression kernel supports high-throughput inference and integrates smoothly with existing frameworks, from Hugging Face to ComfyUI.

As GPU memory constraints tighten and model scale continues to increase, lossless compression will become an important component of inference infrastructure, complementing quantization and offload strategies in modern AI pipelines.

Also read : Why AI Models Are Slower in 2025: Inside the Compute Bottleneck

Sources and references

Companies

- 2025, According to GitHub, official DFloat11 project repository https://github.com/LeanModels/DFloat11

- 2025, As noted by GitHub, official ComfyUI DFloat11 plugin repository https://github.com/LeanModels/ComfyUI-DFloat11

- 2025, As reported by GitHub, extended compatibility fork ComfyUI-DFloat11-Extended https://github.com/mingyi456/ComfyUI-DFloat11-Extended

- 2025, According to Hugging Face, DFloat11 model collection https://huggingface.co/DFloat11

- 2025, As listed on Hugging Face, CyberRealisticFlux-DF11-ComfyUI https://huggingface.co/mingyi456/CyberRealisticFlux-DF11-ComfyUI

- 2025, As provided on Hugging Face, Qwen-Image-DF11 https://huggingface.co/DFloat11/Qwen-Image-DF11

- 2025, As provided on Hugging Face, SRPO-DF11-ComfyUI https://huggingface.co/mingyi456/SRPO-DF11-ComfyUI

- 2025, Hugging Face collection for Flux models converted to DFloat11 https://huggingface.co/collections/DFloat11/dfloat11-flux1

Institutions

- 2025, According to Rice University, academic promotions related to contributors to the DFloat11 research effort https://engineering.rice.edu/news/rice-engineering-and-computing-promotes-11-faculty

Official sources

- 2025, As specified by arXiv, “Lossless LLM Compression for Efficient GPU Inference via Dynamic-Length Float” https://arxiv.org/abs/2504.11651

- 2025, Full HTML version on arXiv https://arxiv.org/html/2504.11651v2

- 2025, OpenReview PDF version of the DFloat11 paper https://openreview.net/pdf/6b1607ff4d79f3a474030fbbf74f738e0e5350c9.pdf

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!