Why Google Gemini is positioned to build a structural dominance in the AI race

The artificial intelligence industry is entering a consolidation phase where raw model performance is no longer sufficient. The battlefield has shifted toward infrastructure and deep software integration. While OpenAI initially caught the market off guard, Google has orchestrated a strategic comeback by aligning three fundamental pillars: hardware autonomy, massive distribution, and unparalleled personal data depth. However, this lead is not absolute; it depends on the firm’s ability to navigate the fine line between technical innovation and regulatory constraints.

Strategic autonomy: The TPU advantage

The launch of Gemini 3 marked a turning point, positioning Google at the top of benchmarks across various categories, including vision, long-context analysis and “Context Packing”. This success is not just a matter of software, but of a growing strategic autonomy in hardware.

Unlike its competitors, who are almost exclusively dependent on Nvidia’s supply chain, Google leverages its own specialized chips, Tensor Processing Units (TPUs). This vertical optimization allows Google to drastically reduce inference latency for large-scale operations. While Google still uses Nvidia hardware for certain workloads, the deployment of TPU v5p and the recently announced TPU v6 “Trillium” offers energy efficiency and cost control (up to 2x cheaper at scale) that few players can match.

Technical insight: TPU vs. Nvidia GPU

The rivalry between TPUs and GPUs is more than a spec-sheet war; it is a clash between custom ASICs and general-purpose parallel computing. While Nvidia GPUs excel in versatility and broad framework support, the TPU is a “specialist” built around systolic arrays: grids of multiply-accumulate units in which operands pass directly from one unit to its neighbor instead of making a round trip to memory between each step. This architecture allows data to stream through the chip efficiently, slashing latency and power consumption by up to 65% compared to standard GPUs for search-related queries.
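To make the systolic-array idea concrete, here is a minimal Python sketch that simulates a matrix multiplication cycle by cycle. It assumes an "output-stationary" layout, in which each processing element keeps one cell of the result while values of A move rightward and values of B move downward. The function name `systolic_matmul` and the register layout are illustrative assumptions, not a description of how Google's TPU hardware is actually built.

```python
def systolic_matmul(a, b):
    """Simulate C = A x B on a grid of multiply-accumulate cells.

    Assumption: output-stationary design. Cell (i, j) accumulates C[i][j];
    A values enter from the left edge, B values enter from the top edge.
    """
    n, k_dim, m = len(a), len(b), len(b[0])
    acc = [[0] * m for _ in range(n)]    # running sums, one per cell
    a_reg = [[0] * m for _ in range(n)]  # A value currently held by each cell
    b_reg = [[0] * m for _ in range(n)]  # B value currently held by each cell

    cycles = n + m + k_dim - 2           # time for the last operands to arrive
    for t in range(cycles):
        # Walk the grid in reverse so each cell still sees its neighbor's
        # value from the previous cycle, as a clocked register would.
        for i in reversed(range(n)):
            for j in reversed(range(m)):
                if j == 0:
                    k = t - i            # row i is fed with a delay of i cycles
                    a_in = a[i][k] if 0 <= k < k_dim else 0
                else:
                    a_in = a_reg[i][j - 1]
                if i == 0:
                    k = t - j            # column j is fed with a delay of j cycles
                    b_in = b[k][j] if 0 <= k < k_dim else 0
                else:
                    b_in = b_reg[i - 1][j]
                a_reg[i][j] = a_in
                b_reg[i][j] = b_in
                acc[i][j] += a_in * b_in  # one multiply-accumulate per cell per cycle
    return acc, cycles


if __name__ == "__main__":
    result, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
    print(result, cycles)
```

Each input value is read from the edge of the grid once and then reused by every cell it passes through, which is the property that lets this kind of design save memory traffic and power on large matrix workloads.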

Massive distribution and the Siri integration

One of the greatest challenges in AI is transforming a technical feat into a daily habit. Google is using its massive reach to embed Gemini at the heart of user workflows.

The January 2026 announcement of Gemini’s integration into Apple’s Siri illustrates this strategy. Although it is a multi-year, non-exclusive partnership, this presence on the iPhone grants Google access to over 1.5 billion daily requests. Simultaneously, Google is targeting future professionals by offering one free year of Gemini Premium to students, creating a long-term “lock-in” within the Google ecosystem.

2026 Benchmarks: A nuanced lead

While Gemini 3 is impressive, it would be reductive to speak of total global superiority. The current state of the market shows a clear specialization of models:

- Gemini 3 strengths: It leads significantly in multimodal reasoning, massive document analysis (1M+ context window), and Video-MMMU benchmarks.

- Competitor resilience: OpenAI’s GPT-5.2 maintains a notable lead in advanced coding tasks (scoring 55.6% on SWE-Bench Pro) and abstract reasoning benchmarks like ARC-AGI-2, where it outperforms Gemini 3 Pro by a wide margin.

Personal Intelligence: Strategic opportunity or trust risk?

The most significant offensive remains the “Personal Intelligence” project. By connecting Gemini to emails, photos, and YouTube history, Google creates a surgically relevant assistant capable of anticipating needs without complex prompting.

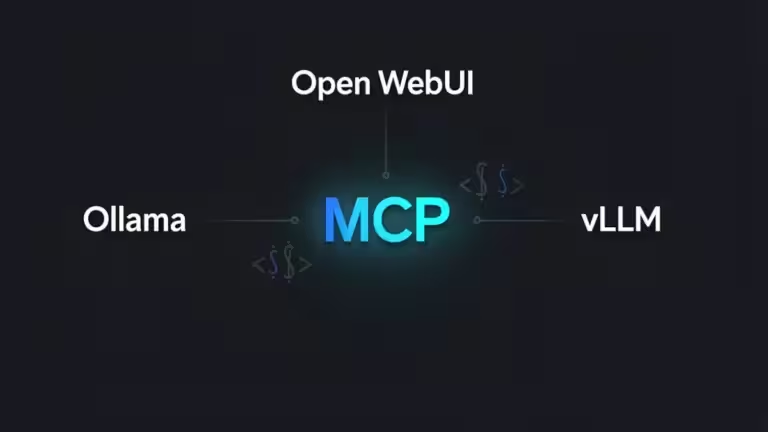

However, for a global audience, this integration raises major sovereignty questions. Under the EU AI Act and GDPR, Google must ensure strict “opt-in” protocols and technical safeguards. To maintain user trust, especially for Workspace customers, Google has committed that customer data is not used for training external models. In contrast to this cloud-centric approach, the rise of Local AI represents a credible alternative for those who prefer not to entrust their entire digital life to a remote server.

Toward a structural dominance?

Google has successfully mobilized its resources to transform an existential threat into an opportunity for technological leadership. While OpenAI maintains a strong brand image, Google now possesses the models, the sovereign computing resources, and the distribution. The AI race is no longer just about who is the “smartest,” but who is the most useful, the fastest, and the most integrated into our daily digital lives.

After several months of using Gemini, I gradually realized that Google’s AI has strong arguments to pull me out of my usual habits with ChatGPT and Claude.

What stood out to me first was the web interface. It is clearly designed for working with long, complex, sometimes heavy texts, without ever giving the impression that you are fighting the tool itself. On this specific point, Gemini currently feels more comfortable to me than ChatGPT for in-depth editorial or analytical work.

The integration with the Google ecosystem has also changed the way I work. Being able to rely directly on documents stored in Google Drive as reference material, without hacks or manual imports, is a real time-saver. This ability to enrich context using personal sources is something I plan to explore more deeply.

That said, not every feature is neutral. Gemini embeds a digital watermark, SynthID, into the text it generates. From a traceability standpoint, this is an interesting feature for fighting mass-produced AI content. However, it also raises legitimate questions depending on the use case, an issue that clearly deserves closer examination.

Will Google be able to surpass OpenAI in terms of user adoption? Do you think the execution speed enabled by Google’s cloud infrastructure will be enough to offset the rise of local AI and its privacy advantages? And will users welcome SynthID’s watermarking of generated text?

Sources and references

- Apple & Google Gemini for Siri – CNN

- GPT-5.2 & ARC-AGI-2: A Benchmark Analysis – Intuition Labs

- AI Inference Costs 2025: Why Google TPUs Beat Nvidia – AI News Hub
