Understanding Google TPU Trillium: How Google’s AI Accelerator Works

Google’s TPU Trillium, also known as TPU v6e, is the latest generation of Google’s custom silicon for large-scale AI. At a time when the industry faces a global GPU shortage and rising demand for LLM training and inference, Trillium introduces a 4.7× compute boost, doubled HBM bandwidth, and major efficiency gains, according to the Google Cloud Blog introducing the chip. Designed to power models like Gemini 3 across tens of thousands of interconnected chips, it represents Google’s strongest attempt yet to challenge Nvidia’s dominance in AI hardware.

This article offers a clear, accessible explanation of how TPU Trillium works, how it compares to GPUs, and why it matters for both beginners and AI professionals looking to understand the future of high-performance AI acceleration.

What Google TPU Trillium Is (TPU v6e) and Why It Matters

Google TPU Trillium is a specialized processor designed to accelerate the mathematical operations that dominate machine learning workloads. Unlike GPUs that balance many computational tasks, Trillium focuses on massive parallelization of matrix multiplications, the backbone of transformer models.

The chip’s improvements are substantial. According to the official Google Cloud TPU v6e documentation, Trillium reaches up to 918 teraflops in BF16, 1836 TOPS for INT8 inference, provides 32 GB of HBM3 per chip, and doubles memory bandwidth to 1600 GB per second. The inter-chip interconnect also reaches 3200 gigabits per second, enabling efficient distributed training across large TPU pods.

For context, NVIDIA’s H100 delivers between 1,671 and 1,979 teraflops of BF16 Tensor Core performance depending on the form factor (PCIe or SXM5). TPU Trillium’s 918 BF16 teraflops place it in a lower raw-compute class than H100, although the two architectures differ significantly in execution model, memory hierarchy, and scaling behavior, which often leads to different real-world performance characteristics.

| Feature | TPU v5e | TPU v6e (Trillium) | Improvement |

|---|---|---|---|

| BF16 compute | ~195 TFLOPS (estimate) | 918 TFLOPS | ~4.7× |

| HBM capacity per chip | 16 GB | 32 GB | 2× |

| HBM bandwidth per chip | ~800 GB/s | 1600 GB/s | 2× |

| Interconnect bandwidth | ~1.6 Tb/s | 3.2 Tb/s | 2× |

| Energy efficiency | Baseline | +67 percent | +67 percent |

| Supported workloads | LLMs, vision | Long-context LLMs, diffusion | Expanded |

| Pod scale | 256 chips | 256 chips (higher throughput) | Better util |

Clear definition for beginners

A TPU, or Tensor Processing Unit, is Google’s purpose-built AI processor optimized for matrix math. Trillium is the sixth-generation design, built for workloads like transformers, diffusion models, and large-scale embedding systems.

Key improvements at a glance: compute, memory, efficiency

The expanded MXU, upgraded memory system, and higher interconnect bandwidth directly address bottlenecks in transformer-based workloads. Trillium’s major improvements are outlined in the documentation for TPU v6e, which notes its doubled memory capacity, doubled bandwidth, and significantly improved performance per watt.

| Specification | TPU v5e | TPU v6e (Trillium) | Improvement |

|---|---|---|---|

| BF16 compute | ~195 TFLOPS (estimate) | 918 TFLOPS | ~4.7× |

| HBM capacity per chip | 16 GB | 32 GB | 2× |

| HBM bandwidth per chip | ~800 GB/s | 1600 GB/s | 2× |

| Interconnect bandwidth | ~1.6 Tb/s | 3.2 Tb/s | 2× |

| Energy efficiency | Baseline | +67 percent | +67 percent |

| Pod size | 256 chips | 256 chips | Better scaling |

| Target workloads | LLMs, general ML | Long-context LLMs, diffusion | Broader workload fit |

For a deeper look at how Trillium compares to previous generations in real workloads, see TPU v6e vs v5e/v5p: How Trillium Delivers Real AI Performance Gains.

How TPU Trillium Differs From a GPU (Simple Comparison)

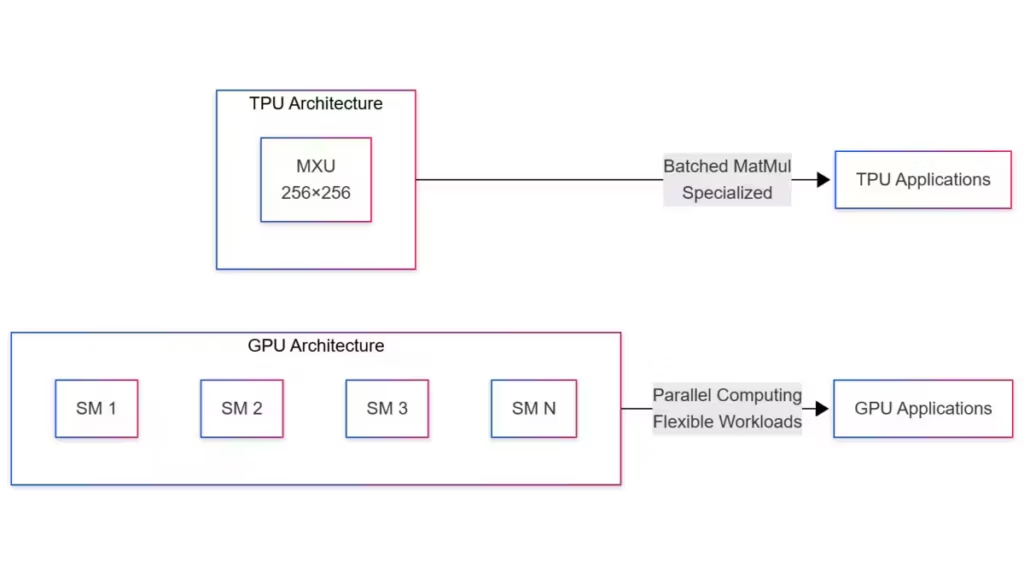

GPUs and TPUs both accelerate AI workloads, but they are built on different principles. GPUs remain general-purpose parallel processors used for graphics, simulation, and machine learning. TPUs focus tightly on accelerating tensor operations, especially the matrix multiplications central to transformers.

Architectural differences explained simply

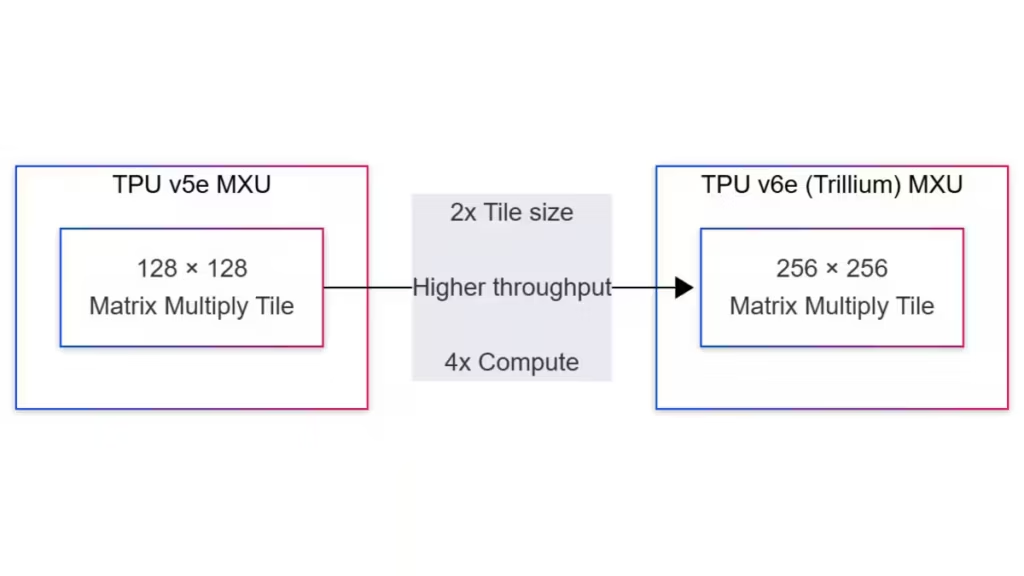

GPUs use thousands of small cores designed for flexible parallelism. Trillium is built around a much larger Matrix Multiply Unit (MXU) that executes massive batches of identical operations every cycle. According to the Trillium architecture overview from Google Cloud, expanding the MXU from 128×128 tiles to 256×256 tiles is one of the main reasons for the 4.7× FLOPs increase.

When a TPU is better, LLMs, training, large-scale inference

Trillium performs exceptionally well when workloads are dominated by dense linear algebra. Large language models, embedding-heavy recommender systems, and diffusion models benefit from Trillium’s high bandwidth and MXU width. Its 2D torus topology and high interconnect bandwidth, documented in Google Cloud’s v6e specifications, make distributed workloads far more efficient.

When GPUs still make more sense

GPUs are often superior for local experimentation, smaller models, mixed compute tasks, and workflows dependent on CUDA libraries. Developers needing broad software compatibility or relying on CUDA-based tooling still benefit most from NVIDIA GPUs. AMD GPUs are an option for some workloads through ROCm, but software support remains more limited and less mature than NVIDIA’s ecosystem, which continues to dominate deep learning development in practice.

Also read : OpenAI scales up: toward a global multi-cloud AI infrastructure

Trillium Architecture Explained (Beginner-Friendly but Accurate)

Trillium’s architecture revolves around three core improvements: a larger MXU, a faster memory subsystem, and a higher-bandwidth interconnect. These changes reduce bottlenecks in transformer models and enable stable scaling across many chips.

MXU upgrade, 128×128 to 256×256

The MXU is the centerpiece of TPU performance. The Google Cloud Blog confirms that increasing tile size from 128×128 to 256×256 multiplies FLOPs per cycle by four without increasing clock speed.

The chip does the calculations in BF16 but adds the results together in FP32, which helps keep the numbers accurate when training large transformer models.

HBM improvements, doubled capacity and bandwidth

Trillium includes 32 GB of HBM per chip, with bandwidth reaching 1600 GB per second, according to the official TPU v6e documentation. This enables larger batch sizes and reduces stalls in memory-bound workloads such as attention and KV-cache operations.

For context, NVIDIA’s H100 offers 80 GB of HBM3 and up to 3.35 TB/s of bandwidth on the SXM version, and around 2 TB/s on PCIe models. Trillium therefore provides less raw memory and bandwidth per chip, although its architecture and software stack are optimized for sustained transformer throughput rather than absolute peak HBM capacity. In practice, H100’s larger memory headroom benefits extremely large models or HPC-style workloads, while Trillium focuses on predictable scaling and efficiency for cloud-native LLM training and inference.

While each Trillium chip provides 32 GB of HBM, this is rarely a practical limitation. TPUs are designed to operate in tightly interconnected pods, and workloads are automatically sharded across many devices. Thanks to the 3.2 Tb/s inter-chip fabric and SPMD execution model, Trillium effectively treats memory as a distributed resource, allowing large models to run efficiently across dozens or hundreds of chips.

| Accelerator | HBM Capacity per Chip | Memory Bandwidth per Chip |

|---|---|---|

| NVIDIA H100 SXM | 80 GB HBM3 | 3.35 TB/s |

| NVIDIA H100 PCIe/NVL | 80 GB HBM2e or HBM3 | ~2.0 TB/s |

| Google TPU Trillium | 32 GB HBM | 1.6 TB/s |

Interconnect and pod topology, why scaling matters

Each Trillium chip provides 3200 gigabits per second of inter-chip bandwidth. A Trillium pod connects 256 TPU chips together in a loop-style network. All these chips can exchange data with each other extremely fast, with a total communication capacity of more than 100 terabytes per second. This huge bandwidth lets the TPUs work together as if they were one large processor. This topology is documented in detail in the TPU v6e architecture guide.

This high-speed fabric ensures that training large models across many hosts remains efficient and predictable.

Performance Gains in Real AI Workloads

The improvements introduced in Trillium translate into measurable gains across training, inference, and image generation. Google’s performance data outlines significant benefits in MLPerf benchmarks, JetStream inference, and diffusion workloads.

According to Google’s MLPerf 4.1 training benchmarks, Trillium reduces GPT-3–class training cost by up to 45 percent and improves performance-per-dollar by 1.8× compared to TPU v5p. Google’s AI Hypercomputer update reports that JetStream inference delivers up to 2.2× faster LLM decoding in high-traffic scenarios, as detailed in the inference updates announcement. Diffusion models follow a similar trend, with Stable Diffusion XL achieving up to 3.5× higher throughput on Trillium according to the same Google Cloud report.

LLM training, MLPerf results and cost-to-train drops

In Google’s MLPerf 4.1 submission, detailed in the Trillium MLPerf benchmarks report, Trillium reduces cost to train GPT-3-class models by 45 percent and improves performance-per-dollar by 1.8× compared to TPU v5p. It also maintains near-perfect 99 percent weak scaling efficiency when distributed across eight Trillium-256 pods.

LLM inference, JetStream, vLLM TPU backend, KV-cache optimizations

JetStream is Google’s optimized inference engine designed to maximize throughput on large language models. It improves dynamic batching, KV-cache usage, and attention execution, allowing TPUs like Trillium to generate tokens faster and more efficiently under heavy load.

Inference benefits from upgrades to Google’s JetStream engine, described in the AI Hypercomputer update published in the Google Cloud Blog. In parallel, the unified vLLM TPU backend, announced in October 2025 on the vLLM Blog, improves throughput for PyTorch and JAX models through unified lowering, optimized caching, and improved paged attention.

Image generation, SDXL throughput improvements

Diffusion models also benefit significantly. According to Google’s AI Hypercomputer inference update, Stable Diffusion XL sees up to 3.5× higher throughput on Trillium compared to TPU v5e. This improvement results from better HBM bandwidth and enhanced MXU tiling.

| Metric | TPU v5e | TPU v6e (Trillium) | Improvement |

|---|---|---|---|

| SDXL throughput | Baseline, limited by HBM | Significantly higher throughput | Substantial |

| HBM capacity | 16 GB | 32 GB | 2× |

| Memory bandwidth | ~800 GB/s | 1600 GB/s | 2× |

| MXU tile size | 128 × 128 | 256 × 256 (4× compute area) | Higher perf |

| Efficiency for diffusion | Moderate | Strong improvements | Improved |

| Ideal batch size | Smaller batches | Larger batches without stalls | Increased |

| Best use cases | Light/medium diffusion tasks | SDXL, large diffusion models | Expanded |

Practical Software Stack: JAX, TensorFlow, PyTorch/XLA, and vLLM

TPU Trillium is deeply integrated into Google’s machine learning ecosystem. Its performance advantages depend not only on hardware but also on compiler optimizations and multi-framework support. Thanks to this ecosystem, developers can use familiar tools while benefiting from the architectural improvements in TPU v6e.

XLA compiler and PJRT runtime

The XLA compiler is central to TPU performance. It optimizes model graphs from TensorFlow, JAX, and PyTorch/XLA by fusing operations, selecting efficient memory layouts, and tiling workloads to match the MXU’s geometry. The TPU v6e runtime documentation explains how XLA manages precision handling, operator fusion, and device sharding to maximize throughput.

PJRT, the Portable JAX Runtime, provides a unified execution interface across TPU, GPU, and CPU. As described in the PJRT announcement, PJRT simplifies cross-platform development by allowing one codebase to target multiple accelerators.

PyTorch/XLA and vLLM TPU backend

PyTorch users execute models on TPUs through PyTorch/XLA, which compiles PyTorch programs into XLA graphs with minimal code modifications. On Trillium, improvements in operator lowering and BF16/INT8 execution paths reduce training time and improve stability.

In October 2025, the vLLM team introduced a unified TPU backend described in the vLLM TPU backend announcement. This backend provides higher throughput for LLM inference, better KV-cache handling, and optimized Ragged Paged Attention v3 kernels. It also allows mixed execution across JAX and PyTorch.

Pathways runtime: multi-host inference in plain English

Pathways is Google’s system for orchestrating inference across multiple TPU hosts. Instead of running the entire sequence on a single device, Pathways splits work between “prefill” (processing the prompt) and “decode” (generating tokens).

The Pathways multi-host inference documentation explains how disaggregation lowers time-to-first-token and improves throughput for models that exceed a single host’s memory capacity.

Real-World Use Cases: Where TPU Trillium Shows Up Today

TPU Trillium is not theoretical hardware. It already powers production workloads inside Google and increasingly across Google Cloud customers. These real-world applications show how Trillium performs under demanding, high-volume AI scenarios.

Gemini 3 training and inference on Trillium

Google confirmed that 100 percent of Gemini 3 training and inference ran on Trillium hardware. Industry coverage, including summaries from Digitimes, highlights how Google interconnected over 100,000 Trillium chips into a unified network for large-scale AI workloads. This deployment showcases Trillium’s pod-level scalability and its suitability for extremely large transformer models.

Enterprise LLM serving with GKE and vLLM

Google Cloud provides a complete reference tutorial for deploying vLLM on TPU Trillium using Kubernetes, described in the GKE vLLM tutorial. In this workflow, autoscaling relies on metrics such as num_requests_waiting and cache usage. Combined with tensor parallelism, this setup enables serving large LLMs more efficiently than on standard GPU clusters.

Diffusion models and ComfyUI-TPU workflows

Stable Diffusion pipelines benefit greatly from Trillium through MaxDiffusion, described in Google’s MaxDiffusion inference guide. In addition, the open-source community introduced TPU support to ComfyUI. The ComfyUI-TPU GitHub repo explains how developers can run diffusion workflows on a single TPU or across multiple devices through SPMD execution. These improvements provide faster image generation and more cost-efficient large-scale diffusion workloads.

Should You Use TPU Trillium? Clear Guidance for Beginners and Pros

TPU v6e is designed for workloads dominated by linear algebra and large-scale parallelism. It is not always the ideal choice, but for many modern AI applications, it offers strong performance-per-dollar and excellent scaling characteristics.

When TPU Trillium is a strong choice

Trillium is a compelling option for organizations training large transformer models, running long-context inference, or serving high request volumes under strict latency constraints. With a 67 percent improvement in energy efficiency and strong scaling characteristics, Trillium is particularly effective for production environments focused on predictability and throughput.

When GPUs or alternatives are better

GPUs remain a better fit for experimentation, local development, and workloads relying heavily on CUDA libraries. They also excel in hybrid compute tasks that combine training with visualization or simulation. Developers who iterate frequently on model architectures often prefer GPUs due to their broader ecosystem support.

Cost considerations and cloud access

TPU Trillium is available exclusively through Google Cloud. Pricing varies by commitment period, with multi-year reservations providing significantly lower cost-per-chip-hour. The Google Cloud pricing page for TPUs lists on-demand prices, one-year commitments, and three-year commitments, helping developers calculate cost-per-token or cost-per-training-step for their specific workloads.

Glossary: TPU Terms Explained Simply

TPU (Tensor Processing Unit) Google’s custom AI accelerator optimized for parallel matrix operations used in modern neural networks.

MXU (Matrix Multiply Unit) The large systolic compute array that performs high-throughput matrix multiplications. The expansion to 256×256 tiles is documented in the Google Cloud Trillium introduction.

BF16 (bfloat16) A 16-bit floating-point format supported natively on TPU v6e. Details appear in the Google Cloud BF16 guide.

INT8 Low-precision integer format used for efficient transformer inference, as noted in the TPU v6e documentation.

HBM (High Bandwidth Memory) On-chip memory delivering high bandwidth to feed the MXU. Specifications are listed in the TPU v6e hardware documentation.

Pod A 256-chip cluster arranged in a 2D torus topology with more than 100 TB per second of all-reduce bandwidth.

XLA (Accelerated Linear Algebra) The compiler that optimizes JAX, TensorFlow, and PyTorch/XLA programs for execution on TPU. Integration details appear in the TPU runtime documentation.

SPMD (Single Program Multiple Data) A distributed execution model where identical programs run on separate chips with different data shards.

Pathways Google’s multi-host inference runtime, described in the Pathways multi-host guide.

What to Watch Next

Google’s TPU Trillium arrives at a moment when the industry is confronting a global GPU shortage, slowing down data center deployments and driving up the cost of training and serving large AI models. In this context, Trillium acts as a strategic answer to the GPU bottleneck, offering a scalable, energy-efficient alternative that can absorb part of the demand currently concentrated on NVIDIA hardware. Google’s deployment of more than 100,000 Trillium chips inside its AI Hypercomputer underlines how central this custom silicon approach has become to its long-term infrastructure strategy.

The combined effect of hardware specialization and software optimization also plays a crucial role in reducing operational costs. Techniques such as improved attention kernels, KV-cache management, or advanced compression formats contribute to lowering inference expenses. For example, the DFloat11 lossless compression approach, described in DFloat11: Lossless BF16 Compression for Faster LLM Inference, illustrates how software-side innovation can complement large-scale hardware gains without requiring architectural changes.

Trillium also positions Google as an increasingly credible competitor to NVIDIA. Its improvements in throughput, energy efficiency, and pod-level scaling make it attractive for multimodal and mixture-of-experts models. These advancements have even caused moderate market reactions, with NVIDIA’s stock seeing temporary dips as investors evaluated the potential impact of Google’s AI silicon strategy, as reported by CNBC. Still, NVIDIA remains the market leader thanks to its extensive CUDA ecosystem and unmatched versatility, and Google continues to be a major NVIDIA customer for GPUs.

Finally, recent reports suggest Google may explore offering its TPUs for on-premise deployments beyond Google Cloud, particularly for hyperscalers and large enterprises. While a desktop or workstation TPU remains unlikely, this shift could mark the beginning of a more hybrid AI infrastructure landscape, where cloud-scale accelerators and local server deployments coexist. Understanding TPU Trillium now provides valuable insight into how large-scale AI compute will evolve over the next generation.

Also read : GPU Shortage: Why Data Centers Are Slowing Down in 2025

Sources and references

Companies

- Google Cloud Blog, Introducing Trillium, Sixth-Generation TPUs, available at: https://cloud.google.com/blog/products/compute/introducing-trillium-6th-gen-tpus

- Google Cloud Blog, AI Hypercomputer inference updates, covering JetStream improvements, available at: https://cloud.google.com/blog/products/compute/ai-hypercomputer-inference-updates-for-google-cloud-tpu-and-gpu

- Google Cloud Blog, Trillium MLPerf 4.1 training benchmarks, available at: https://cloud.google.com/blog/products/compute/trillium-mlperf-41-training-benchmarks/

- vLLM Blog, A New Unified Backend Supporting PyTorch and JAX, available at: https://blog.vllm.ai/2025/10/16/vllm-tpu.html

- ComfyUI-TPU GitHub repository, multiprocess TPU support, at: https://github.com/radna0/ComfyUI-TPU

Institutions

- MLPerf 4.1 benchmark findings on transformer training performance, as referenced in Google’s MLPerf blog post.

- OpenXLA SparseCore deep dive documentation: https://openxla.org/xla/sparsecore

Official sources

- TPU v6e hardware documentation: https://docs.cloud.google.com/tpu/docs/v6e

- TPU runtime documentation: https://docs.cloud.google.com/tpu/docs/runtimes

- BF16 precision guide: https://docs.cloud.google.com/tpu/docs/bfloat16

- MaxDiffusion inference guide: https://docs.cloud.google.com/tpu/docs/tutorials/LLM/maxdiffusion-inference-v6e

- Pathways multi-host inference guide: https://docs.cloud.google.com/ai-hypercomputer/docs/workloads/pathways-on-cloud/multihost-inference

- Google Cloud pricing for TPUs: https://cloud.google.com/tpu/pricing

- GKE tutorial for serving vLLM on TPU Trillium: https://docs.cloud.google.com/kubernetes-engine/docs/tutorials/serve-vllm-tpu

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!