GPU Shortage: Why Data Centers Are Slowing Down in 2025

In 2025, the world’s largest cloud providers are hitting a severe GPU shortage that is slowing down the entire AI ecosystem, from startups to major enterprises. Queue delays, rationed compute, and deployment slowdowns illustrate an unprecedented crisis in AI infrastructure. This analysis explains why data centers are running out of GPUs, how this global compute famine emerged, and what teams can do to limit its impact today.

Find the latest weekly AI news on our main page, updated regularly.

Quick Answer: Why There Are No GPUs Left in 2025

The global GPU shortage in data centers is the result of a structural imbalance between exploding demand for AI accelerators and a manufacturing pipeline that cannot keep up. High-end GPUs like Nvidia’s H100, H200, and Blackwell require advanced packaging such as CoWoS, a process with extremely limited global capacity. Hyperscalers face month-long GPU queues, enforce strict allocation policies, and prioritize their largest customers. Meanwhile, the surge of frontier AI models like Gemini 3 Pro, GPT-5.1 Codex Max, and ERNIE 5.0 drives compute requirements to unprecedented levels, heavily impacting inference and training workloads. The outcome is a real compute famine, where GPU compute becomes a scarce and strategic resource.

Record GPU Queues for Hyperscalers (Azure, AWS, Google)

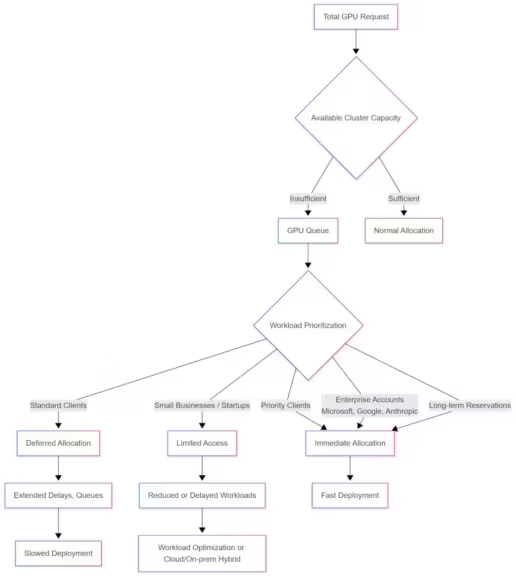

Public cloud providers are dealing with massive saturation. Companies must reserve their GPU capacity weeks or months in advance, sometimes even before new data centers open. Queue delays vary by region and cluster load, creating planning challenges and slowing down AI development cycles. This congestion forces hyperscalers to prioritize workloads, limit flexibility, and impose quotas on GPU allocation. [TODO: diagram → file d’attente GPU et priorisation des workloads]

(Clic to enlarge)

Blackwell and H100: Demand Exceeds Production Capacity

Nvidia’s accelerators dominate the AI market, but their production relies on CoWoS, HBM, and advanced packaging steps that only a handful of facilities can manufacture at scale. Even with capacity expansion, demand from hyperscalers and AI labs far exceeds supply. This imbalance drives long delays, unpredictable availability, and strong competition between cloud providers for the same limited GPU capacity.

Industrial Causes Behind the GPU Shortage

The 2025 GPU crisis is not tied to a single event but to a combination of structural industrial constraints. Manufacturing AI GPUs involves complex, specialized steps, and every link in the supply chain is under pressure. At the same time, AI adoption is accelerating across industries, and the rise of massive multimodal frontier models pushes compute requirements beyond what production lines can sustain.

CoWoS: The Bottleneck at the Heart of TSMC’s Production Pipeline

CoWoS is the most constrained step in the entire GPU manufacturing chain. It connects GPU dies with stacked HBM memory on a silicon interposer, a delicate and time-consuming process that only TSMC and a few others can perform. Orders from hyperscalers saturate available lines, and expanding CoWoS capacity takes time, money, and specialized equipment.

HBM and Advanced Packaging: Critical Component Shortages

HBM memory is essential for high-performance AI GPUs, but production cannot ramp up quickly. Manufacturers struggle with yields, rising demand and limited supply of high-bandwidth memory stacks. The pairing of HBM with advanced packaging creates a second choke point that delays GPU availability even further. Without HBM, no H100, H200, or Blackwell GPU can ship to cloud providers.

The Explosion of AI Models (Gemini 3, GPT-5.1, ERNIE 5.0)

Recent frontier models require enormous compute volumes. Gemini 3 Pro introduces ultra-long context windows, ERNIE 5.0 expands multimodal reasoning, and GPT-5.1 Codex Max executes multi-step workflows. These capabilities demand huge GPU clusters for both training and inference. As a result, infrastructure already under pressure must absorb workloads far beyond previous generations of AI models.

| Year | AI Model | Parameters (approx.) | Context / capability | Impact on GPU demand |

|---|---|---|---|---|

| 2020 | GPT-3 | 175 billion | Short context, light inference | Start of GPU demand ramp-up |

| 2021 | Megatron-Turing NLG | 530 billion | Massive model but limited deployment | GPU load mainly on training |

| 2022 | PaLM | 540 billion | Improved reasoning | GPU infrastructure still sufficient |

| 2023 | LLaMA 2, GPT-4 | 70B → 1T (estimated) | First widespread usage | Early pressure on clusters |

| 2024 | Gemini 1.5, Mixtral, LLaMA 3 | 70B → 1.5T | Very long contexts (→ compute-intensive) | Significant increase in H100 demand |

| 2025 | Gemini 3 Pro, ERNIE 5.0, GPT-5.1 | 1T → multi-trillion | Advanced multimodality, 1M-token context | Compute saturation, hyperscaler wait queues |

How the GPU Shortage Is Slowing Down Cloud Giants

The saturation of GPU clusters directly impacts public cloud performance. Even as hyperscalers open new data centers and purchase massive volumes of accelerators, available capacity remains insufficient to power the rapid scaling of AI infrastructure. Cloud providers must enforce quotas, restructure allocation priorities, and rearchitect workloads around scarce compute resources.

Quotas, Rationing, and Deployment Delays

Cloud platforms have implemented strict allocation rules to avoid overwhelming their GPU clusters. Organizations often need to reserve compute capacity long before launching training or inference jobs, which delays testing and production cycles. In some regions, provisioning times can double depending on cluster saturation. These constraints reshape AI planning, forcing engineering teams to adapt their development pipelines.

Top Priority for Major Accounts (Microsoft, Anthropic, Google)

Hyperscalers prioritize customers with long-term, large-volume commitments. Companies like Microsoft, Google, Anthropic, and other AI labs secure early access to new GPU batches through strategic partnerships and multi-year contracts. Mid-size organizations, lacking similar purchasing power, face longer queues, uncertain availability, and reduced scheduling flexibility.

Impact on Inference and AI Production Workflows

Inference workloads, critical for real-time AI services, also suffer from saturation. When GPU clusters are overloaded, latency increases, affecting user experience and production reliability. Teams may need to delay deployments, reduce real-time features, or shift workloads to alternative regions. In highly saturated clusters, inference pipelines become unstable and unpredictable.

Consequences for Companies and AI Projects

The GPU shortage affects far more than training workflows. Costs are rising, performance is inconsistent, and innovation cycles are slowing down across industries. To maintain operational efficiency, companies must rethink their architectures and reduce their reliance on scarce cloud GPU resources.

Rising Costs and Longer Lead Times

With cloud GPU demand at record levels, prices have surged across regions. Some H100 instances now cost more than double their 2023 equivalent. Provisioning delays add uncertainty to project timelines, and fluctuating spot prices penalize companies operating in high-demand periods. Budget predictability has become a major challenge across AI organizations.

| Type of AI GPU Resource | 2023 (estimated) | 2025 (estimated, based on trends and reports) | Evolution 2023 → 2025 |

|---|---|---|---|

| H100 rental (cloud, per hour) | 3.00 to 4.00 USD/h | 6.50 to 9.00 USD/h | ×2 to ×2.5 (shortage + AI demand) |

| H100 multi-GPU rental (8× cluster, per hour) | 25 to 32 USD/h | 55 to 75 USD/h | ×2 to ×3 |

| A100 rental (cloud, per hour) | 1.80 to 2.50 USD/h | 3.50 to 5.00 USD/h | ×1.5 to ×2 |

| H100 purchase price | ~30,000 USD | 45,000 to 60,000 USD (saturated market) | +50% to +100% |

| A100 purchase price | ~12,000–15,000 USD | ~20,000 USD (secondary market) | +30% to +60% |

| Dedicated H100 datacenter slot cost | ~2,500 USD/month | 5,000 to 7,000 USD/month | ×2 to ×3 |

| Average provisioning delay | 3–7 days | 30–90 days | ×10 to ×20 (wait queues) |

| H100/H200 spot price | Stable | Sharp increase (continuous rise 2024–2025) | Tight market |

Performance Degradation in Saturated Environments

In saturated clusters, workloads slow down significantly. Training jobs take longer to complete, inference pipelines become more variable, and autoscaling can fail. These bottlenecks force organizations to migrate workloads, adjust configurations, or invest in hybrid setups to maintain stability.

Impact on Innovation: Slower R&D, Fewer Experiments

When GPU resources are limited, R&D teams must reduce the number of experiments, limit model iterations, and focus only on high-priority tasks. Rapid prototyping becomes difficult, slowing innovation in AI-driven products. As a result, companies lose the iteration speed that once drove progress in deep learning and generative AI.

What Solutions Can Mitigate the GPU Shortage?

Even though the compute famine is structural, several practical strategies can help organizations reduce their dependence on scarce cloud GPUs. By optimizing workloads, adopting hybrid architectures, or exploring alternative accelerators, companies can regain control over their AI infrastructure and limit exposure to saturation.

Optimize Workloads: Quantization, Sparsity, Batching

Techniques like 8-bit or 4-bit quantization, structured sparsity, or more aggressive batching can dramatically reduce compute requirements. These optimizations lower memory consumption and GPU usage while preserving acceptable model performance. Modern AI frameworks make these transformations easier, allowing teams to cut compute costs and shorten queue times.

Hybrid Deployment: Cloud + Local GPUs

Hybrid architectures distribute workloads between local hardware and the cloud. Smaller or distilled models can run on local GPUs, while high-intensity training jobs remain in the cloud. Hybrid setups also allow teams to reserve cloud GPUs only when essential, reducing dependency on saturated regions and improving reliability.

Exploring Alternatives: TPU, FPGA, and Optimized Open-Weight Models

In an environment defined by scarcity, alternative accelerators become more appealing. Google TPUs offer competitive performance for certain architectures, while FPGAs allow efficient inference for specialized tasks. At the same time, modern open-weight models, often lighter than proprietary frontier models, help reduce GPU consumption without sacrificing capability.

| Technology | Strengths | Limitations | Typical use cases |

|---|---|---|---|

| GPU | Highly versatile, excellent performance for training and inference, rich ecosystem | High power consumption, strong demand leading to rising costs | LLM training, computer vision, multimodal AI, generative AI |

| TPU | Optimized for matrix computation, energy-efficient, high performance for deep learning | Google-specific, less flexible, limited availability outside Google Cloud | Large-scale training, production on Google Cloud |

| FPGA | Very low latency, high energy efficiency, hardware-level reconfigurability | Complex programming, lower raw performance than GPUs for modern AI workloads | Embedded inference, edge AI, deterministic workloads |

How Long Will the GPU Shortage Last?

The GPU shortage is expected to persist well into 2026. While packaging capacity expansions are underway, the backlog of orders and the growth of AI workloads exceed the pace of infrastructure build-out. Deployment timelines for new data centers, geopolitical constraints, and skyrocketing demand all contribute to a prolonged compute scarcity period.

CoWoS Capacity and Blackwell Production in 2025–2026

TSMC and other suppliers are expanding CoWoS capacity, but these efforts take time to materialize. The next-generation Blackwell GPUs require even more advanced packaging than Hopper, placing additional pressure on already saturated production lines. With hyperscalers pre-purchasing massive GPU batches, availability will remain limited throughout the next year.

New Data Centers and Geopolitical Priorities

Hyperscalers are investing heavily in new facilities, but construction, power upgrades, and equipment installation take months or years. Meanwhile, geopolitical restrictions on advanced AI chips affect global distribution, making some regions more vulnerable to prolonged shortages. Priority access often goes to the United States and select Asian markets, leaving Europe and emerging regions with fewer units.

Key Takeaways

The GPU shortage slowing down data centers in 2025 stems from industrial bottlenecks, heavy dependence on advanced packaging, and the rapid scaling of AI workloads. Public clouds face demand levels far beyond their existing capacity, leading to delays, higher costs, and performance inconsistencies. Yet practical solutions exist, from optimizing workloads to adopting hybrid strategies or exploring alternative accelerators. As manufacturing capacity increases gradually through 2026, GPU compute will remain a strategic resource and a key differentiator for organizations capable of anticipating and adapting to this new era of compute scarcity.

Also read : DFloat11 : Lossless BF16 Compression for Faster LLM Inference

Sources and References

Technology Media

- CNN, November 19, 2025, analysis of rising GPU demand and Nvidia’s data center constraints, https://edition.cnn.com/2025/11/19/tech/nvidia-earnings-ai-bubble-fears

- The Wall Street Journal, 2025, Nvidia results and Blackwell demand exceeding supply, https://www.wsj.com/tech/ai/nvidia-earnings-q3-2025-nvda-stock-9c6a40fe

- TechCrunch, November 18, 2025, analysis of Gemini 3 Pro and its compute requirements, https://techcrunch.com/2025/11/18/google-launches-gemini-3-with-new-coding-app-and-record-benchmark-scores/

Companies

- Microsoft Blog, November 18, 2025, strategic partnership with Nvidia and Anthropic including access to Blackwell and Vera Rubin compute, https://blogs.microsoft.com/blog/2025/11/18/microsoft-nvidia-and-anthropic-announce-strategic-partnerships/

- Anthropic, Claude integration into Microsoft Foundry and compute infrastructure notes, https://www.anthropic.com/news/claude-in-microsoft-foundry

- OpenAI, GPT-5.1 Codex Max presentation and associated compute requirements, https://openai.com/index/gpt-5-1-codex-max/

Official Sources

- Nvidia, official information and financial filings related to data center demand, https://www.nvidia.com

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!