How to Detect AI: Text, Image, and Audio – The Complete 2025 Guide

Detecting AI-generated content has become a real challenge in 2025. The latest generation of models (ChatGPT 5, Claude 4.5, and Gemini 2.5 Pro) now produces text, images, and voices so natural that they rival human creativity. Yet learning how to detect AI remains essential to preserve academic integrity, identify deepfakes, and ensure transparency across media, research, and marketing.

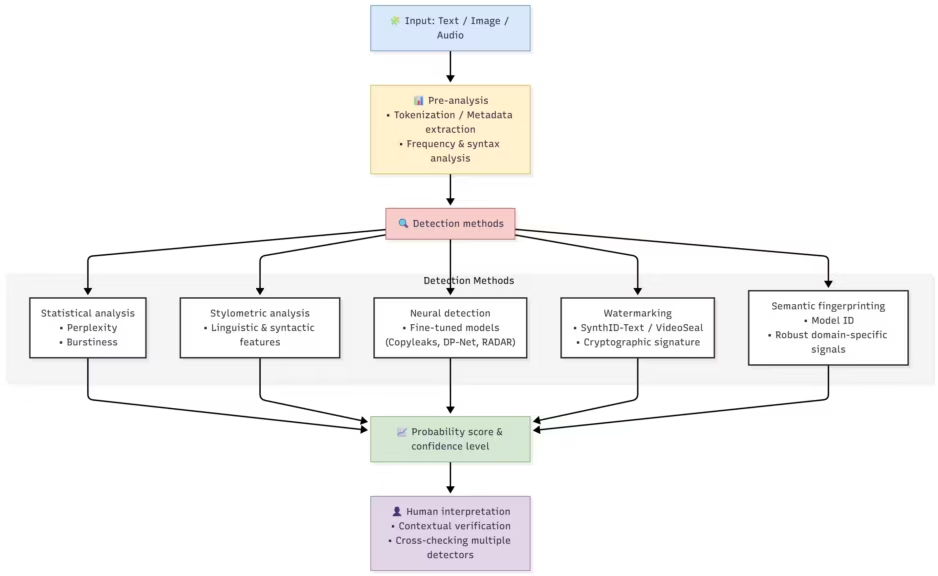

This complete 2025 guide draws on the latest studies and the most reliable AI detection tools to help you identify AI-generated text, images, and synthetic voices. As generative models become increasingly sophisticated, detection methods evolve too, blending linguistic analysis, neural modeling, and statistical watermarking.

Why Detecting AI Has Become Essential

Since the launch of ChatGPT 5, the line between human writing and AI-generated content has nearly disappeared. Newsrooms, universities, and social platforms now face an ever-growing flood of AI-produced text and visuals. But the goal is not to ban artificial intelligence. The real issue is transparency: distinguishing legitimate use cases (editing assistance, data synthesis, creative support) from deceptive or manipulative applications.

Google, for instance, has made it clear that it does not penalize AI-generated content by default. What it does target is scaled content abuse: mass-produced, low-value content published without human supervision (SEOpital, 2024). In this context, knowing how to detect AI content is no longer a technical curiosity but a critical skill for anyone working with digital media, research, or education.

Detecting AI in Text: Methods and Limitations

Identifying text generated by modern models such as ChatGPT 5, Claude 4.5, or Gemini 2.5 Pro has become a constant race between detection and generation. Researchers agree that no detector can guarantee absolute reliability. Depending on context, accuracy rates range between 52% and 99% (PMC, 2025). The best AI text detectors perform well on raw outputs but struggle when faced with paraphrased, edited, or collaborative writing.

Perplexity and Burstiness Analysis: The Classic Method

The earliest tools, such as GPTZero and ZeroGPT, relied on perplexity analysis, a measure of how predictable a text is to a language model, often paired with burstiness, which measures how much that predictability varies from one sentence to the next. The more uniform and statistically “smooth” a passage (low perplexity, low burstiness), the more likely it is to have been generated by an AI model. This method still works for older systems, but it fails against ChatGPT 5 and Claude 4.5, which can now mimic human-like irregularity and stylistic variety.
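To make the two signals concrete, here is a minimal sketch. It assumes a toy add-one-smoothed unigram model built from a small reference text in place of the large language model a real detector would query, and it uses one common definition of burstiness (the standard deviation of per-sentence perplexity). The function names are hypothetical and not taken from GPTZero or ZeroGPT.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokens: a crude stand-in for a real subword tokenizer."""
    return re.findall(r"[a-z']+", text.lower())


def train_unigram(reference_tokens):
    """Return P(token) from an add-one-smoothed unigram model of reference text."""
    counts = Counter(reference_tokens)
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # +1 reserves probability mass for unseen words

    def prob(token):
        return (counts[token] + 1) / (total + vocab_size)

    return prob


def perplexity(tokens, prob):
    """exp(mean negative log-probability per token): lower means more predictable."""
    nll = -sum(math.log(prob(t)) for t in tokens) / len(tokens)
    return math.exp(nll)


def burstiness(text, prob):
    """Population standard deviation of per-sentence perplexity."""
    sentences = [s for s in re.split(r"[.!?]+", text) if tokenize(s)]
    scores = [perplexity(tokenize(s), prob) for s in sentences]
    mean = sum(scores) / len(scores)
    return math.sqrt(sum((p - mean) ** 2 for p in scores) / len(scores))


if __name__ == "__main__":
    prob = train_unigram(tokenize("the cat sat on the mat the dog sat on the rug"))
    sample = "The cat sat on the mat. Quantum marmalade evaporates sideways!"
    print(perplexity(tokenize(sample), prob), burstiness(sample, prob))
```

Under the classic heuristic, a passage whose sentences all score low and similar perplexities (low burstiness) looks "smooth" and therefore machine-like; the second, surprising sentence in the sample is what drives its burstiness up.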

Perplexity analysis also introduces major bias: essays written by non-native English speakers were misclassified as AI-generated in 61.3% of cases (Stanford HAI, 2023). As a result, perplexity is now considered a weak indicator, useful only when combined with more advanced linguistic or neural signals.

Stylometry: Detecting AI Through Writing Style

Stylometry focuses on linguistic style rather than content. It measures lexical richness, sentence length, syntactic complexity, and the frequency of rare or complex verbs. The model StyloAI (2024) showed that a well-tuned algorithm could reach up to 98% accuracy on academic datasets by using 31 distinct linguistic features.
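The sketch below computes a handful of interpretable style features of the kind listed above. It is an illustrative subset chosen for this example, not StyloAI's actual 31-feature set, and the comma-density feature is only an assumed rough proxy for syntactic complexity.

```python
import re
from collections import Counter
from statistics import mean, pstdev

WORD = re.compile(r"[A-Za-z']+")


def stylometric_features(text):
    """Compute a few interpretable style features (a small illustrative subset)."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = WORD.findall(text)
    counts = Counter(w.lower() for w in words)
    sentence_lengths = [len(WORD.findall(s)) for s in sentences]
    return {
        # lexical richness: distinct words / total words
        "type_token_ratio": len(counts) / len(words),
        # share of words that occur exactly once (hapax legomena)
        "hapax_ratio": sum(1 for c in counts.values() if c == 1) / len(words),
        # sentence length and its variability, in words
        "mean_sentence_len": mean(sentence_lengths),
        "sentence_len_std": pstdev(sentence_lengths),
        "mean_word_len": mean(len(w) for w in words),
        # comma density as a rough, assumed proxy for syntactic complexity
        "commas_per_sentence": text.count(",") / len(sentences),
    }


if __name__ == "__main__":
    print(stylometric_features("The cat sat. The cat ran, fast."))
```

Because every feature is a named, human-readable number, an analyst can point to exactly which ones pushed a classifier toward "AI", which is the interpretability advantage described next.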

Its main advantage is interpretability: analysts can see why a text was classified as AI-generated. Its main limitation is adaptability: stylometric models must be retrained for each domain (technical, literary, scientific) to remain accurate as AI writing styles evolve.

Watermarking: The Next Generation of Invisible Signatures

Statistical watermarking is the most promising approach for 2025. Google DeepMind released SynthID-Text, an open-source system that embeds an invisible signature during text generation. Each token's probability is slightly adjusted according to a secret key, creating a statistical pattern that is imperceptible to readers but verifiable by authorized checkers.
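SynthID-Text uses its own sampling scheme, so the following is not its implementation. It is a minimal sketch of the simpler "green list" watermark described in the academic literature, assuming a toy uniform "model" over a hypothetical 1,000-token vocabulary. A keyed hash splits the vocabulary into green and red halves at each step, generation boosts green tokens by `delta`, and detection counts green tokens and reports a z-score against the roughly 50% expected in unwatermarked text.

```python
import hashlib
import math
import random

VOCAB = [f"w{i}" for i in range(1000)]  # hypothetical toy vocabulary
GAMMA = 0.5  # fraction of tokens that are "green" at each step


def is_green(prev_token, token, key):
    """Keyed pseudo-random split of the vocabulary, re-seeded by the previous token."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] < 256 * GAMMA


def generate(n_tokens, key, delta=2.0, seed=0):
    """Sample from a toy uniform 'model' whose green-token logits are boosted by delta."""
    rng = random.Random(seed)
    tokens = ["<s>"]
    for _ in range(n_tokens):
        prev = tokens[-1]
        weights = [math.exp(delta) if is_green(prev, t, key) else 1.0 for t in VOCAB]
        tokens.append(rng.choices(VOCAB, weights=weights, k=1)[0])
    return tokens[1:]


def detect_z(tokens, key):
    """z-score of the observed green-token count versus the unwatermarked expectation."""
    pairs = zip(["<s>"] + tokens[:-1], tokens)
    n = len(tokens)
    green = sum(is_green(prev, tok, key) for prev, tok in pairs)
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))


if __name__ == "__main__":
    watermarked = generate(200, key="secret")
    print(detect_z(watermarked, "secret"), detect_z(watermarked, "wrong-key"))
```

With `delta=2.0`, roughly 88% of sampled tokens should be green, so the correct key yields a z-score far above a typical cut-off such as 4, while the wrong key (or text written without the bias) stays near 0. This is also why paraphrasing hurts: every replaced token is a fresh coin flip that dilutes the green excess.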

Internal testing showed that 20 million Gemini responses were successfully watermarked without any noticeable loss of quality (Euronews, 2024). OpenAI is also experimenting with a similar feature within ChatGPT 5. However, watermarking is not foolproof: paraphrasing with another model often removes the mark, and adversarial attacks can even insert fake watermarks into human-written text (EY, 2024).

Fine-Tuned Neural Detectors: The Learned Approach

The most advanced AI content detection tools, including Copyleaks, Originality.ai Turbo, and DP-Net, use fine-tuned neural models trained on millions of human and AI-generated samples. These systems can achieve up to 99% accuracy on well-curated datasets (Copyleaks, 2025), though their effectiveness drops significantly on unseen domains or new model architectures.

Their main weakness is cross-model generalization: a detector trained for GPT-4 often fails to identify outputs from Claude 4.5 or Gemini 2.5 Pro. That’s why newer research initiatives, such as RADAR and DP-Net, use adversarial reinforcement learning to dynamically adapt to emerging writing styles (ACL Anthology, 2025).
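Commercial detectors fine-tune large transformer models on millions of samples, which cannot be reproduced here. As a drastically simplified stand-in for the learned approach, the sketch below trains a logistic-regression detector on two hypothetical normalized features (think of them as perplexity and burstiness scores). It also illustrates the generalization problem: a sample from a "new generator" whose scores look human-like falls on the human side of the learned boundary.

```python
import math


def _sigmoid(z):
    return 1 / (1 + math.exp(-z))


def train_logreg(X, y, lr=0.5, epochs=500):
    """Fit a logistic-regression detector (label 1 = AI) by batch gradient descent."""
    dims = len(X[0])
    mu = [sum(row[j] for row in X) / len(X) for j in range(dims)]  # feature means for centering
    Xc = [[row[j] - mu[j] for j in range(dims)] for row in X]
    w, b = [0.0] * dims, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dims, 0.0
        for xi, yi in zip(Xc, y):
            err = _sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b, mu


def predict_proba(x, model):
    """Probability that feature vector x is AI-generated under the fitted model."""
    w, b, mu = model
    return _sigmoid(sum(wj * (xj - mj) for wj, xj, mj in zip(w, x, mu)) + b)


if __name__ == "__main__":
    # Hypothetical toy training data: human texts score high, old-model AI texts score low.
    X = [[0.8, 0.9], [0.9, 0.7], [0.7, 0.8], [0.2, 0.1], [0.1, 0.3], [0.3, 0.2]]
    y = [0, 0, 0, 1, 1, 1]
    model = train_logreg(X, y)
    print(predict_proba([0.2, 0.2], model))  # in-distribution AI sample
    print(predict_proba([0.7, 0.7], model))  # unseen generator with human-like scores
```

The detector is only as good as the distribution it was trained on; adversarial schemes such as the ones mentioned above try to widen that distribution during training rather than after the fact.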

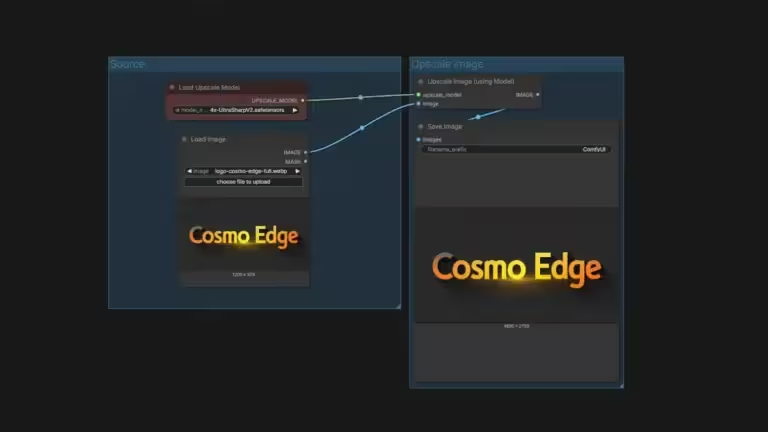

Detecting AI in Images and Videos

AI image detection has made major progress since 2023, yet models like Gemini 2.5 Pro Vision and Midjourney V7 now blur the line between photography and synthetic imagery. The results are so realistic that even trained professionals struggle to tell them apart. Still, it is possible to detect AI-generated images using a mix of visual, statistical, and neural analyses.

Common Visual Clues

Before using a detection tool, certain details can already reveal an AI origin:

- Physical inconsistencies: unnatural shadows, distorted reflections, or impossible proportions.

- Text and logo issues: fuzzy characters, uneven spacing, inconsistent typography.

- Anatomical errors: extra fingers, distorted jewelry, misaligned gaze.

- Repetitive patterns: especially visible in hair, fabrics, or foliage.

These artifacts still exist, although Midjourney and Gemini have drastically improved coherence and fine-detail rendering since 2024.

The Most Reliable AI Image Detection Tools

Modern AI image detectors are trained to recognize the statistical artifacts left by generative models. The top AI detection tools for images in 2025 include:

- SynthID Image (Google DeepMind): embeds an invisible watermark directly into pixels during generation.

- Intel FakeCatcher: analyzes micro-lighting variations in faces to expose deepfake videos.

- Forensically: open-source forensic software that inspects EXIF metadata and noise patterns.

- Content Authenticity Initiative (Adobe / Nikon / Microsoft): an emerging cross-industry standard for provenance authentication of images and videos.
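The source does not describe how Forensically works internally. As a minimal, standard-library-only sketch of the simplest metadata check, the function below walks a JPEG's marker segments and reports whether an APP1 "Exif" block is present. It assumes a well-formed baseline file and ignores rarer cases such as fill bytes between markers. A missing EXIF block is weak evidence at best (many platforms strip metadata), and a present one can be forged.

```python
import struct


def jpeg_has_exif(data: bytes) -> bool:
    """Walk JPEG marker segments until start-of-scan; report whether an APP1 'Exif' block exists."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG (missing SOI marker)")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"corrupt marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xDA:  # start of scan: compressed pixels follow, no more header segments
            break
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])  # length includes its own 2 bytes
        payload = data[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length
    return False


if __name__ == "__main__":
    import sys

    with open(sys.argv[1], "rb") as fh:
        print(jpeg_has_exif(fh.read()))
```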

Transformer-based visual detectors such as Swin Transformer V2-B, along with watermark-based systems like Meta's VideoSeal, now reach up to 98% accuracy on benchmark datasets (Arxiv, 2025).

The Limits of Image Detection

The biggest challenge remains robustness to post-processing. Simple JPEG compression, a color filter, or a crop can erase the statistical signal of a watermark. Furthermore, multimodal models like Gemini 2.5 Pro can generate realistic visuals that include perfectly legible text, making typography-based detection nearly obsolete.
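Production watermarks such as SynthID are engineered to be far more robust than this, so treat the following only as an illustration of why fragile signals fail. It assumes a naive scheme that hides one bit in the least significant bit of each 8-bit pixel, and it uses uniform quantization as a crude stand-in for lossy compression (real JPEG quantizes DCT coefficients, not raw pixels).

```python
import random


def embed_lsb(pixels, bits):
    """Hide one watermark bit in the least significant bit of each 8-bit pixel value."""
    return [(p & ~1) | bit for p, bit in zip(pixels, bits)]


def extract_lsb(pixels):
    return [p & 1 for p in pixels]


def quantize(pixels, step=8):
    """Crude stand-in for lossy compression: snap values to multiples of `step`."""
    top = (255 // step) * step  # largest multiple of step that still fits in 8 bits
    return [min(top, round(p / step) * step) for p in pixels]


def bit_agreement(a, b):
    """Fraction of positions where two bit sequences match (0.5 is chance level)."""
    return sum(x == y for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    rng = random.Random(0)
    pixels = [rng.randrange(256) for _ in range(10_000)]
    bits = [rng.randrange(2) for _ in range(10_000)]
    marked = embed_lsb(pixels, bits)
    print(bit_agreement(extract_lsb(marked), bits))            # 1.0
    print(bit_agreement(extract_lsb(quantize(marked)), bits))  # close to 0.5 (chance)
```

After quantization every pixel is a multiple of 8, so every recovered bit is 0 and the watermark is indistinguishable from a coin flip, even though the image looks almost unchanged.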

Researchers are moving toward hybrid approaches that combine cryptographic watermarking with blockchain-verified provenance tracking, supported by Adobe, Sony, and Nikon for professional content workflows.

Detecting AI in Audio and Voice

Voice generation has also taken a leap forward in 2025. Systems like VoiceCraft 3, ElevenLabs Prime Voice v2, and ChatGPT Audio 5 can now clone any voice with uncanny realism. As a result, AI-generated audio deepfakes are being used in scams, misinformation campaigns, and identity fraud. Learning how to detect AI voice and synthetic speech has become a critical task for the security, media, and education sectors alike.

How to Recognize AI-Generated Audio

Even the most convincing synthetic voices often show subtle irregularities:

- Lack of micro-imperfections: breathing too regular, transitions too clean.

- Neutral accent and constant rhythm: AIs still struggle to mimic spontaneous hesitation.

- Limited emotional depth: prosody sounds mechanically consistent or overly smooth.

- Subtle artifacts: tiny breaks between syllables, audible through headphones.
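The "too regular" cue above can be turned into a number. The sketch below is a hypothetical heuristic, not a method used by any tool named in this guide: it finds pauses by thresholding per-frame RMS energy, then reports the coefficient of variation of pause lengths. Values near 0 mean metronomic pauses; the frame size and silence threshold are assumed defaults that would need tuning on real recordings.

```python
import math


def pause_lengths(samples, rate, frame_ms=20, silence_rms=0.02):
    """Durations (seconds) of silent stretches, found by thresholding per-frame RMS energy."""
    frame = int(rate * frame_ms / 1000)
    pauses, run = [], 0
    for start in range(0, len(samples) - frame + 1, frame):
        chunk = samples[start:start + frame]
        rms = math.sqrt(sum(s * s for s in chunk) / frame)
        if rms < silence_rms:
            run += 1  # still inside a pause
        elif run:
            pauses.append(run * frame / rate)  # pause just ended
            run = 0
    if run:
        pauses.append(run * frame / rate)
    return pauses


def pause_regularity(pauses):
    """Coefficient of variation of pause lengths; near 0 means metronomic pauses."""
    m = sum(pauses) / len(pauses)
    sd = math.sqrt(sum((p - m) ** 2 for p in pauses) / len(pauses))
    return sd / m
```

Natural speech usually mixes short and long pauses, so a very low score is at most a prompt for closer listening; humanizer tools that inject random micro-pauses are designed to defeat exactly this kind of check.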

Trusted AI Audio Detection Tools

- Resemble Detect: a spectral analysis API that identifies synthetic acoustic signatures.

- Deepware Scanner: detects manipulated or generated audio/video through frequency analysis and machine learning.

- Loki LibrAI: open-source tool featured by Cosmo Games, designed for fact-checking and audio-text provenance tracking.
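The commercial tools above do not publish their exact features. As an example of what "spectral analysis" can mean in practice, the sketch below computes spectral flatness, a classic audio feature defined as the geometric mean of the power spectrum divided by its arithmetic mean. A naive DFT keeps it dependency-free; whether any of the named detectors uses this particular feature is not stated in the source.

```python
import cmath
import math
import random


def power_spectrum(frame):
    """Naive O(N^2) DFT power spectrum for bins 1..N/2-1 (DC and Nyquist dropped)."""
    n = len(frame)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))) ** 2
        for k in range(1, n // 2)
    ]


def spectral_flatness(frame, eps=1e-12):
    """Geometric mean over arithmetic mean of the power spectrum: ~0 tonal, higher noise-like."""
    power = [p + eps for p in power_spectrum(frame)]
    geometric = math.exp(sum(math.log(p) for p in power) / len(power))
    return geometric / (sum(power) / len(power))


if __name__ == "__main__":
    n = 256
    tone = [math.sin(2 * math.pi * 16 * t / n) for t in range(n)]
    rng = random.Random(0)
    noise = [rng.uniform(-1, 1) for _ in range(n)]
    print(spectral_flatness(tone), spectral_flatness(noise))  # tone near 0, noise much higher
```

A real pipeline would compute features like this frame by frame with an FFT library and feed them, along with many others, to a trained classifier rather than thresholding any single value.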

Recent neural detectors achieve 92–96% accuracy, according to Frontiers in AI and IEEE 2025 publications.

Limits and Risks of Confusion

Hybrid voices, part-human and part-synthetic, remain the hardest to identify. Many content creators now use AI for minor voice enhancements or retiming, which blurs the line between editing and generation. Moreover, humanizer tools like VoiceHumanizer or BypassAudioAI inject natural background noise or micro-errors to evade detectors. The most effective strategy today combines acoustic analysis with contextual verification: source reliability, metadata consistency, and speaker history.

AI Detection Tools: The 2025 Comparison

The market for AI detection tools has matured in 2025. The era of unreliable gadgets is over: the new generation of solutions relies on fine-tuned neural models, cross-model databases, and in some cases, hybrid approaches combining machine learning and watermarking.

Here is a comparison of the most reliable tools according to recent studies and independent benchmarks.

AI Text Detector Comparison (2025)

| Tool | Average accuracy | False positives | Strengths | Limitations |
|---|---|---|---|---|
| Scribbr Premium | 84% | 0% | Very reliable on academic writing, clean interface | Not suited for creative content |
| Copyleaks AI Detector | 98–99% | < 1% | High accuracy on non-native speakers (Copyleaks, 2025) | Lower performance on paraphrased text |
| Originality.ai Turbo | 95–99% | 1–2% | Excellent robustness to simple rewording | Expensive for large-scale use |
| GPTZero v4 | 70–92% | 5–15% | Fast, user-friendly interface | Inaccurate on short texts |
| DetectGPT / Fast-DetectGPT | 87–98% | 1–10% | Open source, customizable | Requires an appropriate proxy model |
| Turnitin AI Detector | 60–85% | Not disclosed | Integrated with LMS platforms | Reliability varies by institution |
| DP-Net / RADAR | 86% (cross-domain) | < 2% | Dynamic adversarial training (ACL Anthology, 2025) | Still in testing phase |

Verdict: commercial tools like Copyleaks and Originality.ai Turbo remain the most reliable, but the open-source Fast-DetectGPT provides a credible alternative for developers and researchers. Universal 2025 models such as DP-Net are starting to solve the generalization problem across different models (GPT-5, Claude 4.5, Gemini 2.5 Pro).
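Fast-DetectGPT's published idea, conditional probability curvature, is compact enough to sketch. For each position it compares the log-probability of the token actually written with the mean and variance of log-probabilities of tokens the model would sample in the same context; machine text tends to score well above that expectation. The analytic version below assumes a hypothetical three-token bigram model standing in for the proxy LLM the real method requires, and uses the same model for sampling and scoring.

```python
import math

# Hypothetical toy bigram "language model": P(next token | previous token).
MODEL = {
    "<s>": {"a": 0.7, "b": 0.2, "c": 0.1},
    "a": {"a": 0.1, "b": 0.8, "c": 0.1},
    "b": {"a": 0.6, "b": 0.1, "c": 0.3},
    "c": {"a": 0.3, "b": 0.3, "c": 0.4},
}


def curvature_score(tokens, model=MODEL):
    """Analytic sampling-discrepancy score: (log p(x) - E[log p]) / sqrt(Var[log p])."""
    log_lik = mean = var = 0.0
    prev = "<s>"
    for tok in tokens:
        dist = model[prev]
        log_lik += math.log(dist[tok])
        # expected log-probability of a token sampled from the same context
        mu_t = sum(p * math.log(p) for p in dist.values())
        second = sum(p * math.log(p) ** 2 for p in dist.values())
        mean += mu_t
        var += second - mu_t ** 2  # per-position variance, summed assuming independence
        prev = tok
    return (log_lik - mean) / math.sqrt(var)


if __name__ == "__main__":
    print(curvature_score(["a", "b", "a", "b", "a", "b"]))  # always the likeliest token
    print(curvature_score(["c", "c", "a", "c"]))            # surprising choices
```

The greedy, always-likeliest sequence scores positive while the sequence full of surprising choices scores negative; a real deployment would calibrate a threshold on held-out human and machine text, and the score is only meaningful when the proxy model is reasonably close to the generator.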

AI Image and Video Detectors

| Tool | Type | Specificity | Accuracy |
|---|---|---|---|
| SynthID (DeepMind) | Embedded watermark | Invisible cryptographic marker | ~99% on Gemini images |
| VideoSeal (Meta) | Embedded watermark | Neural watermark embedded across video frames | 95–98% |
| FakeCatcher (Intel) | Facial biosignal | Detection via micro light variations | 92% |
| Forensically | Open source | Traditional forensic analysis (EXIF, noise) | Variable |
| Content Authenticity Initiative | Industry standard | Provenance tracking via blockchain/metadata | Deployment in progress |

The most conclusive results come from native watermarking, a proactive approach adopted by Google, Meta, and Adobe. However, compression, resizing, or screen capture can neutralize these markers, highlighting the need for multi-layer verification.

AI Audio and Voice Detectors

| Tool | Accuracy | Method | Notes |
|---|---|---|---|
| Resemble Detect | 93–95% | Spectral analysis | Reliable API for studios and media |
| Deepware Scanner | 90–94% | Frequency-based machine learning | Good balance for general users |
| FakeCatcher Audio | 92–96% | Temporal correlation | Effective on political speeches |
| Loki LibrAI | ~90% | Open source / fact-checking | Useful for journalistic verification |

Modern models capable of mimicking breathing, accent, and hesitation make detection harder, which is why contextual verification systems are emerging (analyzing source, metadata, and recording date).

Structural Limits and Biases

Bias Against Non-Native Writers

The bias against non-native speakers remains the most serious ethical flaw in AI text detection. A Stanford study found that 61.3% of TOEFL essays written by non-native students were wrongly flagged as AI-generated, versus only 5.1% for native English writers (Stanford HAI, 2023). This happens because linguistic simplicity (shorter sentences, limited vocabulary) lowers a text's perplexity, making it statistically closer to AI language patterns.
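Figures like 61.3% versus 5.1% are per-group false-positive rates: among texts that were genuinely human-written, the share a detector flagged as AI. A minimal way to audit a detector for this bias, assuming you have your own records of `(group, is_ai, was_flagged)` (the rows below are hypothetical), is:

```python
from collections import defaultdict


def false_positive_rates(records):
    """FPR per group: share of human-written texts that the detector flagged as AI."""
    flagged = defaultdict(int)
    human = defaultdict(int)
    for group, is_ai, was_flagged in records:
        if not is_ai:  # only human-written texts can produce false positives
            human[group] += 1
            flagged[group] += was_flagged
    return {g: flagged[g] / human[g] for g in human}


if __name__ == "__main__":
    records = [
        ("non-native", False, True),
        ("non-native", False, True),
        ("non-native", False, False),
        ("native", False, False),
        ("native", False, False),
        ("native", True, True),  # correctly flagged AI text: ignored for FPR
    ]
    print(false_positive_rates(records))
```

A large gap between groups is the warning sign; overall accuracy can look excellent while one group absorbs most of the false accusations.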

Copyleaks, in contrast, claims to have corrected this bias with new multilingual datasets, reaching 99.84% accuracy and <1% false positives on non-native texts (Copyleaks, 2025).

Cross-Model and Cross-Domain Generalization

Most detectors perform well only in-distribution, that is, when evaluating text similar to their training data. When facing new domains (creative, scientific, technical) or unseen models (e.g., Claude 4.5, Gemini 2.5 Pro), performance often drops below 60% accuracy. Newer research such as DP-Net and RADAR introduces dynamic perturbations and adversarial training to maintain 86% average accuracy across seven unseen domains (ACL Anthology, 2025).

Vulnerability to Paraphrasing and Humanizers

Paraphrasing remains the most effective evasion method. Tools like Quillbot, Undetectable AI, and BypassGPT can reduce detection accuracy from 90% to less than 15% (ACL Anthology, 2025). A 2025 benchmark called DAMAGE confirmed that 19 commercial humanizers successfully bypassed nearly every existing AI detector.

Researchers note that even sophisticated watermarking can be erased by recursive paraphrasing or translation loops through multiple languages (Arxiv, 2025).

Ethical and Legal Implications

Risks of False Accusations

False positives can have serious human consequences. In 2024, several universities falsely accused students of AI plagiarism based solely on detection scores. One autistic student was marked as cheating because of her unique writing style (Tandfonline, 2024). Such cases led many institutions to adopt hybrid verification policies combining automated detection with human review.

Data Privacy and Model Transparency

Watermarking and detection raise new privacy concerns. Since watermarks can act as identifiers, they might allow tracing user outputs through legal requests or data leaks. The EU AI Act now requires traceability, auditability, and clear documentation for all high-risk AI systems (Arxiv, 2024). Frameworks like the AI Model Passport help standardize these audit processes.

Academic and Journalistic Use

Academic institutions now emphasize AI literacy rather than prohibition. For example, American University requires attribution for AI assistance, while the University of Pittsburgh forbids disciplinary action based solely on detector results (Teaching Pitt, 2024). In journalism, AI detection assists fact-checking, especially for deepfakes and synthetic news articles (Digital Content Next, 2024).

Future Directions and Research in AI Detection

The arms race between AI generators and AI detectors is accelerating. Each breakthrough in generation, such as ChatGPT 5’s dynamic reasoning, Claude 4.5’s contextual coherence, or Gemini 2.5 Pro’s multimodal synthesis, forces researchers to invent new detection paradigms. The future of AI detection depends on integrating watermarking, adversarial training, and explainable models.

Watermarking and Cryptographic Signatures

Embedding native watermarks directly into model outputs is considered the most promising approach. Google, OpenAI, Meta, and Anthropic have begun deploying systems like SynthID, which insert statistical or cryptographic signatures into generated text and images.

The World Economic Forum (2025) lists generative watermarking among the ten most transformative technologies of the year. However, for watermarking to truly scale, researchers must solve key issues:

- Cross-model robustness (preserving signals even after paraphrasing or translation)

- Anti-spoofing protection (preventing false watermarks in human text)

- Cross-platform verification standards to allow interoperable checks across tools and vendors

Semantic watermarking, an emerging variant, encodes hidden statistical patterns conditioned on meaning rather than raw tokens. It achieves near-perfect detection within defined semantic domains while remaining invisible to the user (Arxiv, 2025).

Built-In Detectors and Model Fingerprinting

Future LLMs could include native self-identification: the ability for a model to signal whether it generated a given text. Combined with fingerprinting (tracking the unique statistical behavior of each model), this would enable reliable authorship attribution even after fine-tuning or quantization.

The AI Model Passport initiative (Arxiv, 2024) standardizes documentation of datasets, training, validation, and deployment. This ensures that models remain traceable throughout their lifecycle, a key requirement of the EU AI Act and upcoming U.S. regulations.

Adversarial Training and Robust Detection

Research in adversarial learning shows that detectors trained alongside generative adversaries, like DP-Net or RADAR, learn to anticipate evasion strategies such as paraphrasing or re-prompting. These models apply dynamic perturbations during training, improving cross-domain generalization and resilience to humanizers.

Next-generation approaches will likely rely on meta-learning (fast adaptation to unseen models) and self-supervised learning (reduced dependence on labeled data), combined with multi-signal fusion (watermarking + linguistic + neural).

Multilingual and Multimodal Detection

Most detectors still perform best in English. Expanding to other languages is a crucial research frontier. Pangram AI (2025) claims 81.46% average F1-score across nine languages using LLaMA3-1B, thanks to multilingual datasets and balanced sampling.

The next challenge is multimodal detection: identifying AI not just in text, but in combinations of text, image, audio, and video. Tools like SynthID now cover both image and text, while Meta’s VideoSeal adds video watermarking (Arxiv, 2025). Researchers aim to unify these capabilities into cross-modal frameworks capable of verifying entire multimedia narratives, from scripts to soundtracks.

Practical Recommendations for 2025

Use AI Detectors Responsibly

No AI detector should ever be treated as definitive proof of misconduct. Institutions and professionals should apply multi-layered protocols:

- Require human review for any flagged case

- Compare with previous writing samples or stylistic baselines

- Conduct follow-up interviews to confirm authorship

Tools like Scribbr Premium, Copyleaks, and Originality.ai Turbo currently offer the most documented reliability (false positive rate below 2%), but human interpretation remains essential.

Define Confidence Thresholds

Set clear thresholds for interpretation:

- >90%: strong indicator of AI generation, requires investigation

- 50–90%: ambiguous, needs contextual review

- <50%: likely human

Short texts (<300 tokens) produce weaker signals, while technical or academic texts are 9% harder to classify. In testing, GPT-4 and newer models like ChatGPT 5 evade detection more frequently than GPT-3.5.
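The thresholds and the short-text caveat can be encoded so that every reviewer applies them the same way. This is a minimal sketch; the function name and the wording of the labels are hypothetical, and it assumes the detector reports its AI probability as a percentage and that the caller supplies the token count.

```python
def interpret_score(ai_probability, n_tokens, min_tokens=300):
    """Map a detector's AI probability (0-100) to the bands above; short texts get no verdict."""
    if not 0 <= ai_probability <= 100:
        raise ValueError("score must be a percentage between 0 and 100")
    if n_tokens < min_tokens:
        return "insufficient text: treat any score as weak evidence"
    if ai_probability > 90:
        return "strong indicator: investigate (human review required)"
    if ai_probability >= 50:
        return "ambiguous: contextual review needed"
    return "likely human"


if __name__ == "__main__":
    for score, tokens in [(95, 800), (70, 800), (20, 800), (99, 120)]:
        print(score, tokens, interpret_score(score, tokens))
```

Checking length before the score is a deliberate choice: a 99% score on a 120-token paragraph should not trigger the same response as the same score on a full essay.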

Combine Automated and Human Judgment

Using multiple detectors in parallel, and flagging a text only when all of them agree, drastically reduces false positives. A triad such as Copyleaks + GPTZero + Originality.ai lowers the combined false-positive probability to below 0.01% (Physiology Journal, 2024).
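The arithmetic behind this is a product of probabilities, but only under two assumptions worth stating: a text is flagged only when every detector flags it, and the detectors' errors are statistically independent. Real detectors likely share training data and failure modes, so their errors are correlated and the true combined rate will be higher. The rates below are hypothetical values chosen for illustration.

```python
from math import prod


def all_agree_fpr(rates):
    """Combined FPR when a text is flagged only if every detector flags it (independence assumed)."""
    return prod(rates)


def any_flags_fpr(rates):
    """Combined FPR when a single detector's flag is enough (independence assumed)."""
    return 1 - prod(1 - r for r in rates)


if __name__ == "__main__":
    rates = [0.01, 0.05, 0.02]  # hypothetical per-detector false-positive rates
    print(f"all must agree: {all_agree_fpr(rates):.5%}")  # 0.00100%
    print(f"any one flags:  {any_flags_fpr(rates):.3%}")  # 7.831%
```

The second rule shows why the agreement requirement matters: running three detectors and acting on any single flag raises the false-positive rate instead of lowering it.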

Experts bring qualitative insights, recognizing inconsistencies in argumentation, unnatural coherence, or stylistic breaks that algorithms miss.

Reinvent Evaluation Methods

Rather than policing AI use, educators and organizations can design AI-resilient assessments:

- Oral defenses and live demonstrations of understanding

- Iterative projects with checkpoints that track progress

- Adaptive questions preventing reuse of pre-generated answers

These human-centered methods not only discourage plagiarism but also enhance authentic learning and creativity.

Promote AI Literacy

Training users and students to understand how generative models work is more effective than punishment. Workshops should cover ethical use, correct attribution, and the limits of detection tools. Institutions that adopt AI literacy programs report higher compliance and reduced misconduct (Frontiers in Education, 2024).

Conclusion

In 2025, reliably detecting AI-generated content remains an open problem. Accuracy ranges from 52% to 99% depending on model, dataset, and domain. The race favors generators, not detectors.

The most promising direction combines native watermarking, semantic fingerprinting, and adversarially trained detectors, supported by strong human oversight.

Ultimately, the goal is not to eliminate AI but to ensure transparency, fairness, and accountability in its use. Hybrid systems, where algorithms assist but humans decide, represent the only viable path forward for ethical, robust, and verifiable AI detection.
