Hugging Face Transformers: which graphical interfaces make it easier to use?

Since its launch, Hugging Face Transformers has become a key reference for leveraging natural language processing (NLP) models, as well as multimodal models (text, image, audio). However, for many users, the absence of a native graphical interface makes the first steps intimidating.

The library is primarily aimed at Python developers, and the standard usage requires writing code, managing environments, and installing dependencies. But that doesn’t mean you always have to dive into scripts to take advantage of the power of Hugging Face models. Today, several simplified interfaces and graphical tools offer an accessible experience, both for experienced researchers and for curious beginners who just want to quickly test AI models.

In this article, we’ll explore the main solutions available in 2025: Pipeline API, Hugging Face Spaces, OpenWebUI, Gradio, and LM Studio. Each has its strengths, limitations, and ideal use cases.

Hugging Face has no native graphical interface

First clarification: unlike other solutions such as Ollama, LM Studio, or Text Generation WebUI, the Transformers library does not provide a ready-to-use graphical interface.

This means that if you install Transformers with pip install transformers, you get no GUI or chat window by default. Everything goes through Python code. The Hugging Face ecosystem is designed primarily for developers, with a strong focus on flexibility and customization.

Still, this doesn’t prevent you from using external graphical interfaces. Hugging Face has built a rich ecosystem around its library, and the community has developed many tools to make the experience more accessible.

Pipeline API: the simplified entry point for developers

The first “plug-and-play” solution provided by Hugging Face is the Pipeline API.

With just a few lines of code, you can call a pretrained model for tasks such as:

- text generation,

- sentiment classification,

- translation,

- document summarization,

- question answering.

Minimal example:

    from transformers import pipeline

    generator = pipeline("text-generation", model="gpt2")
    print(generator("Hello Hugging Face", max_length=30))

For someone who doesn’t want to set up a complex environment, this approach is ideal. It still involves code, but the simplicity makes it accessible without advanced programming knowledge.

As highlighted by the Milvus Blog, the Pipeline API is the best entry point for discovering the ecosystem without diving into the architecture details of the models.
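The same pattern applies to the other tasks listed above. The sketch below runs sentiment classification on two sentences and prints one line per input. The model name and the `format_predictions` helper are illustrative assumptions, not something this article or the library prescribes; any sentiment model from the Hub should work in the same way.

```python
def format_predictions(texts, predictions):
    """Pair each input text with its predicted label and score."""
    lines = []
    for text, pred in zip(texts, predictions):
        lines.append(f"{pred['label']} ({pred['score']:.2f}): {text}")
    return lines


def main():
    # Imported here so the helper above can be used without transformers installed.
    from transformers import pipeline

    # Assumption: this model is an illustrative choice, not one named in the article.
    classifier = pipeline(
        "sentiment-analysis",
        model="distilbert/distilbert-base-uncased-finetuned-sst-2-english",
    )
    texts = ["I love this library.", "The setup was frustrating."]
    # For a list of inputs, the pipeline returns one dict per input
    # with a "label" and a "score" key.
    predictions = classifier(texts)
    for line in format_predictions(texts, predictions):
        print(line)


# Call main() to run the demo; the first call downloads the model weights.
```

Only the task name and the model change compared with the text-generation example; the calling convention stays the same.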

Hugging Face Spaces: a web-based graphical interface

If writing code is not an option, there’s an even simpler alternative: Hugging Face Spaces.

Spaces is a collaborative platform hosted by Hugging Face. It allows the community to publish interactive applications built around models. These GUIs usually rely on Gradio or Streamlit and are accessible directly from the browser.

For example, you can test:

- an image generation model,

- an audio transcription AI,

- a text-based chatbot,

- or even complex pipelines combining multiple models.

The advantage? Zero local installation. Just open a browser, choose a Space, and interact with the model. As explained by Data Scientist Guide, this approach democratizes model access by removing the technical barrier.

Note: free Spaces run on shared hardware with usage limits, so some apps may be slow, queued, or temporarily unavailable depending on server load.

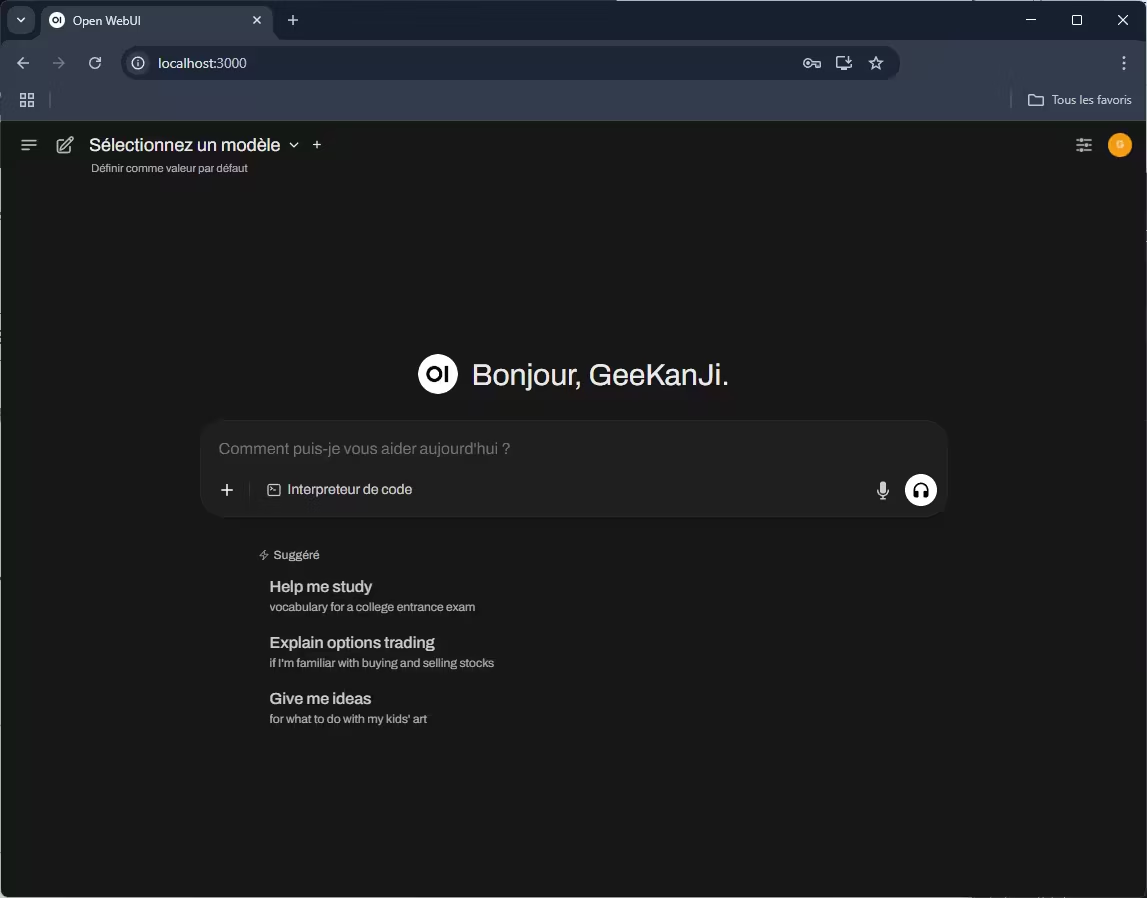

OpenWebUI: a local integration option

For those who prefer a local graphical experience, one option is to combine Hugging Face Transformers with OpenWebUI, a self-hosted web interface that looks and behaves much like ChatGPT, Claude, or other chat systems.

With the command:

    transformers serve --enable-cors

you can deploy a local HTTP server that OpenWebUI can query. As detailed in the Hugging Face documentation, this enables running models locally and accessing them via a modern graphical interface.

This scenario is particularly suited for:

- audio transcription (e.g., with openai/whisper-large-v3),

- text generation,

- other NLP tasks supported by Transformers.

The OpenWebUI + Transformers integration is less known than Spaces or Gradio, but it offers a serious alternative for users who want to keep their data local.
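The same local server can also be queried from a script, which is a quick way to check that it is running before connecting OpenWebUI. The sketch below uses only the standard library and rests on several assumptions to verify against your installed version: that the server listens on `http://localhost:8000`, that it exposes an OpenAI-style `/v1/chat/completions` route, and that it returns a non-streaming JSON response. The helper names are hypothetical.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt):
    """Build an OpenAI-style chat completion request for a local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        # Assumption: the server exposes an OpenAI-compatible route at this path.
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url, model, prompt):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    # Assumption: the response follows the OpenAI chat completion schema.
    return body["choices"][0]["message"]["content"]
```

With the server running, a call such as `ask("http://localhost:8000", "Qwen/Qwen2.5-0.5B-Instruct", "Hello")` would return the reply as a string; the model name here is only an example.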

Gradio: the go-to graphical tool for Hugging Face

Gradio plays a central role in the Hugging Face ecosystem.

This tool allows you to create an interactive web interface in just a few lines of code. For example:

    import gradio as gr
    from transformers import pipeline

    # Load the model once, when the script starts.
    generator = pipeline("text-generation", model="gpt2")

    def generate_text(prompt):
        # The pipeline returns a list of dicts; keep only the generated string.
        return generator(prompt, max_length=50)[0]["generated_text"]

    demo = gr.Interface(fn=generate_text, inputs="text", outputs="text")
    demo.launch()

By running this script, you instantly get a mini web application accessible in your browser, without needing to build a full interface.

As explained by Deep Learning Nerds, Gradio has become the de facto standard for quickly testing models. It’s also the core technology behind many Spaces.
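A small variation shows how little code it takes to expose a model parameter in the interface. The sketch below adds a slider for the maximum length next to the prompt box. The function names, labels, and slider range are illustrative assumptions, and the pipeline is passed in as an argument so the text-generation logic can be tried separately from the UI.

```python
def generate_text(generator, prompt, max_length):
    """Run a text-generation pipeline and return only the generated string."""
    # Sliders may deliver floats, so cast before passing the value on.
    outputs = generator(prompt, max_length=int(max_length))
    return outputs[0]["generated_text"]


def build_demo():
    # Imported here so generate_text can be used without gradio or transformers.
    import gradio as gr
    from transformers import pipeline

    generator = pipeline("text-generation", model="gpt2")

    return gr.Interface(
        fn=lambda prompt, max_length: generate_text(generator, prompt, max_length),
        inputs=[
            gr.Textbox(label="Prompt"),
            # Assumption: the 10 to 100 range is an arbitrary choice for the demo.
            gr.Slider(minimum=10, maximum=100, value=50, step=1, label="Max length"),
        ],
        outputs=gr.Textbox(label="Generated text"),
    )


# build_demo().launch() starts the local web app.
```

Each entry in `inputs` maps to one argument of the function, in order, so adding another control means adding one component and one parameter.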

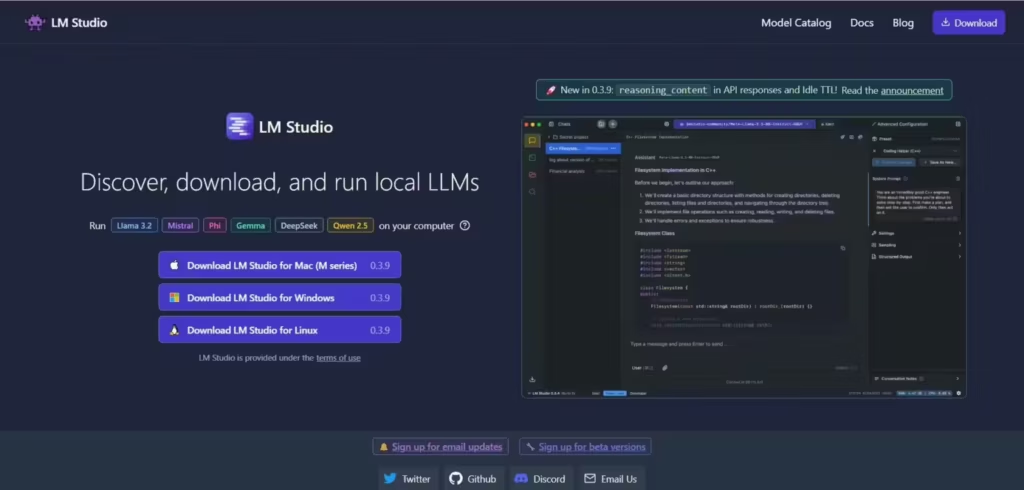

LM Studio: the desktop alternative

Finally, for those who want a 100% graphical and local experience without relying on Python, LM Studio is an option worth considering, especially for anyone taking their first steps with local AI.

Available on Windows, macOS, and Linux, LM Studio lets you:

- download models from the Hugging Face Hub (typically quantized GGUF builds rather than raw Transformers checkpoints),

- run them locally via a chat interface,

- use a local API server to interact with other applications.

LM Studio is similar to tools like Ollama or Text Generation WebUI, but with built-in search and download of models from the Hugging Face Hub. As noted by CodeWatchers, it’s currently one of the simplest ways to run a model from Hugging Face without writing a single line of code.

Note: LM Studio is primarily text-focused and is not designed as a general-purpose tool for audio or vision models.
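The local API server mentioned above can be checked from Python once it is started in LM Studio. The sketch below lists the models the server exposes. It assumes the server runs at `http://localhost:1234/v1` (adjust this to the address shown in the app) and that it answers with an OpenAI-style model list; the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: the local server runs on this address; adjust it to your setup.
LM_STUDIO_BASE_URL = "http://localhost:1234/v1"


def parse_model_ids(payload):
    """Extract model identifiers from an OpenAI-style model list response."""
    return [entry["id"] for entry in payload.get("data", [])]


def list_local_models(base_url=LM_STUDIO_BASE_URL):
    """Ask the local server which models it currently exposes."""
    with urllib.request.urlopen(f"{base_url}/models") as response:
        return parse_model_ids(json.loads(response.read().decode("utf-8")))
```

Calling `list_local_models()` while the server is running returns a list of model identifiers, which other applications can then use in their own requests.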

Conclusion: which interface should you choose?

The choice depends on your needs:

- Pipeline API: best for beginner developers.

- Spaces: perfect for testing models without local installation.

- OpenWebUI + Transformers Serve: local solution for keeping full control over your data.

- Gradio: flexible tool for demos and prototypes.

- LM Studio: turnkey desktop app, no Python required.

In short, even though Transformers does not offer a native graphical interface, the ecosystem is full of tools that simplify its use, whether through the web, a desktop app, or a local API.
