Install vLLM with Docker Compose on Linux (compatible with Windows WSL2)

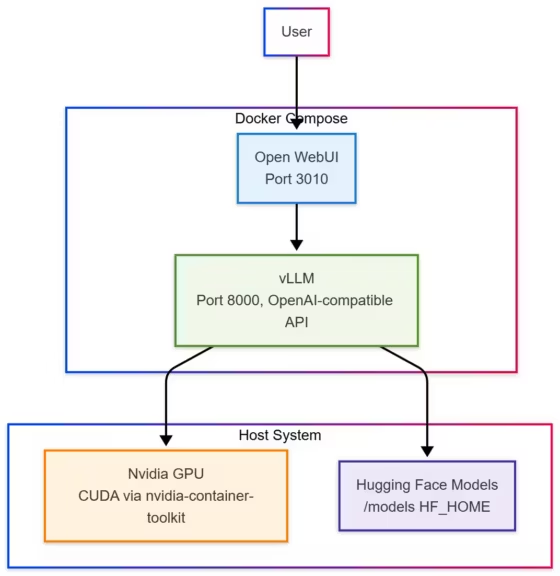

Installing vLLM with Docker Compose on Linux is one of the most efficient and reliable methods to run a local AI inference server with NVIDIA GPU acceleration. This open source inference engine, fully compatible with the OpenAI API, allows you to run Hugging Face models locally with exceptional performance and optimized parallel computation.

Thanks to Docker Compose, vLLM’s setup becomes reproducible, portable, and easy to maintain. Whether you use a standard Linux distribution (Ubuntu, Debian, Fedora) or Windows 11 through WSL2, you’ll achieve the same speed and flexibility.

This guide explains how to create a full local environment: installing the prerequisites, building the docker-compose.yml file, configuring the Hugging Face cache, integrating a web interface (Open WebUI), and troubleshooting common errors like GPU memory limits or model loading failures.

The goal: a local AI server that is fast, stable, and production-ready, capable of running your favorite open source language models without relying on the cloud.

Why install vLLM with Docker Compose

vLLM is an open source inference engine designed to fully utilize GPU acceleration for language model inference. It runs OpenAI-compatible LLMs like Qwen, Mistral, Llama, and DeepSeek locally with far greater efficiency than traditional Python servers.

Combined with Docker Compose, it offers a modular and maintainable architecture:

- each service (vLLM, Open WebUI, storage) is isolated,

- configurations can be versioned and replicated,

- compatibility across Linux, WSL2, and macOS (with virtual GPU) is ensured.

This method avoids complex CUDA or PyTorch installations. Everything runs inside a pre-built Docker image, minimizing dependency errors and software conflicts.

In short, Docker Compose provides a stable and portable framework for deploying vLLM on any GPU-enabled machine, while keeping the setup modular enough to add more AI services like Open WebUI or local vector databases.

System requirements and environment setup

Before starting the installation, make sure your system meets the requirements for running containers with GPU pass-through. This procedure works identically on Linux (Ubuntu, Debian, Fedora, Arch) and Windows 11 with WSL2.

Hardware and software requirements

- NVIDIA GPU with CUDA support

- Latest NVIDIA drivers installed

- Docker Engine and Docker Compose

- nvidia-container-toolkit for GPU pass-through inside containers

Installing the NVIDIA toolkit

On Ubuntu / Debian:

sudo apt update

sudo apt install -y nvidia-container-toolkit

sudo systemctl restart dockerChecking GPU access inside Docker

Run the following command:

docker run --rm --runtime=nvidia --gpus all nvidia/cuda:12.3.1-base nvidia-smiYou should see your GPU details (name, memory, drivers). If this fails, check your driver and toolkit configuration before proceeding.

Creating the .env file

At the root of your project, create a .env file to store your Hugging Face token, required for downloading models:

HF_TOKEN=your_huggingface_tokenOnce validated, your environment is ready to install vLLM using Docker Compose.

Docker Compose configuration

Next, create the docker-compose.yml file to orchestrate the vLLM service. This file defines the Docker image, mounted volumes, environment variables, and GPU resources.

Here’s a complete and working example that automatically downloads a model from Hugging Face:

services:

vllm:

image: vllm/vllm-openai:latest

container_name: vllm

ports:

- "8000:8000"

environment:

HF_TOKEN: ${HF_TOKEN}

HF_HOME: /models

volumes:

- ./models:/models

deploy:

resources:

reservations:

devices:

- capabilities: [gpu]

runtime: nvidia

shm_size: "16g"

command: >

--model openai/gpt-oss-20b

--max-model-len 32768

--gpu-memory-utilization 0.9

--disable-log-stats

Key parameters explained

- image: pulls the latest official GPU-ready vLLM image

- HF_TOKEN: authenticates access to Hugging Face models

- HF_HOME: redirects Hugging Face cache to ./models

- volumes: preserves models even if the container is removed

- runtime: nvidia: enables GPU pass-through

- shm_size: increases shared memory for large models

- command: defines the model and runtime parameters

Launching the service

Place both docker-compose.yml and .env files in the same directory, then start the container:

docker compose up -dvLLM will automatically download and launch the model. To follow the logs:

docker logs -f vllmOnce ready, access the local OpenAI-compatible API at:

http://localhost:8000/v1You can interact with vLLM through any OpenAI client (Python, cURL, or GUI tools).

Managing Hugging Face models

When first launched, vLLM downloads the model specified in docker-compose.yml from Hugging Face. Because HF_HOME=/models, all files are stored in ./models, making them easy to manage.

Directory structure

After the first run, your folder should look like this:

./models/

├── models--openai--gpt-oss-20b/

│ ├── config.json

│ ├── model.safetensors

│ ├── tokenizer.json

│ └── ...Each model stays cached for future reuse.

Deleting a model

To free disk space:

rm -rf ./models/models--openai--gpt-oss-20bThe model will re-download automatically if the service restarts.

Using a local model

You can also load a local model directly from disk:

command: >

--model /models/Qwen3-7B-InstructThis allows offline inference without Internet access or Hugging Face Hub connection.

Switching models

Update the –model line:

--model Qwen/Qwen2.5-7B-InstructThen restart:

docker compose down && docker compose up -dAll models remain cached under ./models for easy management.

Add Open WebUI to interact with vLLM

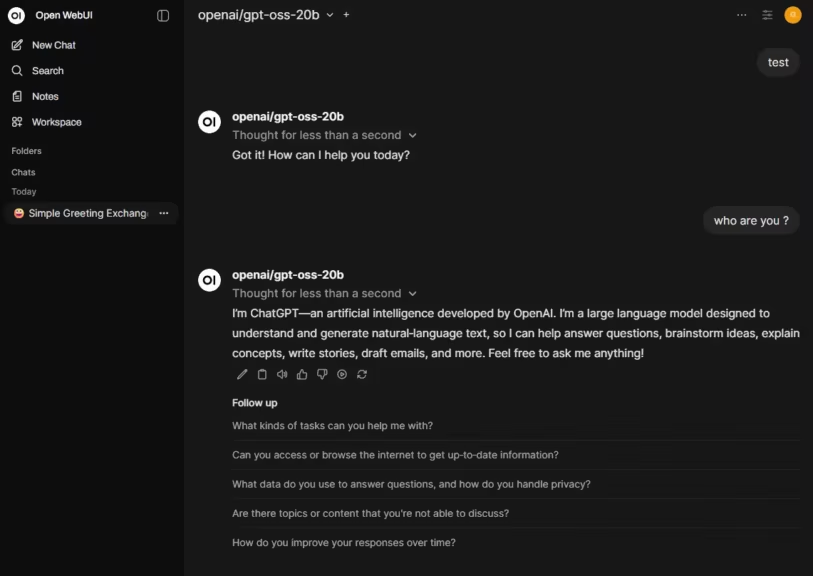

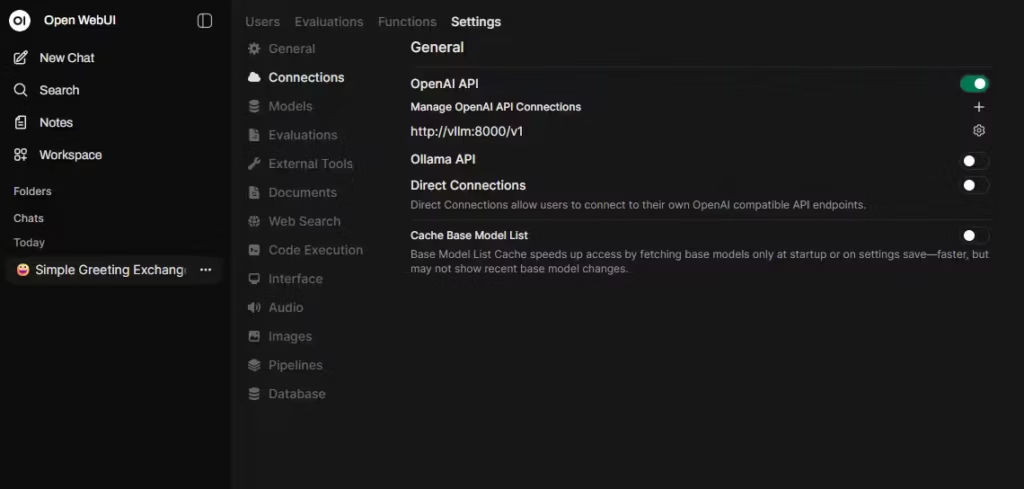

To get a graphical interface similar to ChatGPT, add Open WebUI. It connects to vLLM’s OpenAI-compatible API (port 8000), letting you send prompts, track sessions, and run local chat completions.

Example configuration

Append this to your docker-compose.yml:

openwebui_vllm:

image: ghcr.io/open-webui/open-webui:main

container_name: openwebui_vllm

ports:

- "3010:8080"

environment:

- OPENAI_API_BASE_URL=http://vllm:8000/v1

- OPENAI_API_KEY=none

depends_on:

- vllm

volumes:

- openwebui_data_vllm:/app/backend/dataThen add this at the end:

volumes:

openwebui_data_vllm:Launch everything:

docker compose up -dAccessing the web interface

Once running, open your browser at:

http://localhost:3010

You’ll get a modern web UI to interact directly with your vLLM API server. All requests go through the local OpenAI API endpoint, ensuring privacy and low latency.

Useful notes

- If you already use Open WebUI for Ollama or another API, make sure ports differ. Using port 3010 avoids conflicts.

- All data and chat history are saved in openwebui_data_vllm.

- Unlike Ollama, vLLM runs one model at a time, defined at startup.

Open WebUI provides the best of both worlds: the power of vLLM with a friendly chat interface for testing, comparing, or developing prompts.

Common issues and troubleshooting

Even with a stable Docker Compose setup, several issues may arise depending on your GPU configuration or the selected model. Below are the most frequent vLLM errors and their fixes.

| Problem | Likely cause | Solution |

|---|---|---|

| “out of memory” error on startup | Model too large for available VRAM | Choose a lighter model (for example Qwen3-7B instead of 30B) or use a quantized version (4-bit, 8-bit, mxfp4). |

| Incomplete download or “Model not found” | Experimental or missing Hugging Face files | Use a stable release or verify the model files on the Hub. |

| No models visible in the API | Wrong –model path | Check your ./models directory and correct the path. |

| GPU not detected | Missing drivers or NVIDIA toolkit | Reinstall nvidia-container-toolkit and test with docker run –gpus all nvidia/cuda:12.3.1-base nvidia-smi. |

| Shared memory (shm) errors | Insufficient shared memory | Increase shm_size to “16g” or “32g” in Docker Compose. |

| Port conflict with another Open WebUI instance | Port already in use | Change port mapping, e.g. 3011:8080. |

Useful diagnostic commands

- Follow vLLM container logs: docker logs -f vllm

- List loaded models:curl http://localhost:8000/v1/models

- Monitor GPU memory usage: nvidia-smi

- Remove problematic cached models: rm -rf ./models/models–ModelName

These quick checks solve most vLLM runtime issues without needing to rebuild containers.

Complete Docker Compose file (vLLM + Open WebUI)

services:

vllm:

image: vllm/vllm-openai:latest

container_name: vllm

ports:

- "8000:8000"

environment:

HF_TOKEN: ${HF_TOKEN}

volumes:

- ~/.cache/huggingface:/root/.cache/huggingface

- ./models:/models

deploy:

resources:

reservations:

devices:

- capabilities: [gpu]

runtime: nvidia

shm_size: "16g"

command: >

--model openai/gpt-oss-20b

--max-model-len 32768

--gpu-memory-utilization 0.9

--disable-log-stats

openwebui_vLLM:

image: ghcr.io/open-webui/open-webui:main

container_name: openwebui_vLLM

ports:

- "3010:8080"

environment:

- OPENAI_API_BASE_URL=http://vllm:8000/v1

- OPENAI_API_KEY=none

depends_on:

- vllm

volumes:

- openwebui_data_vllm:/app/backend/data

volumes:

openwebui_data_vllm:Optionally, you can define a dedicated Docker network:

networks:

default:

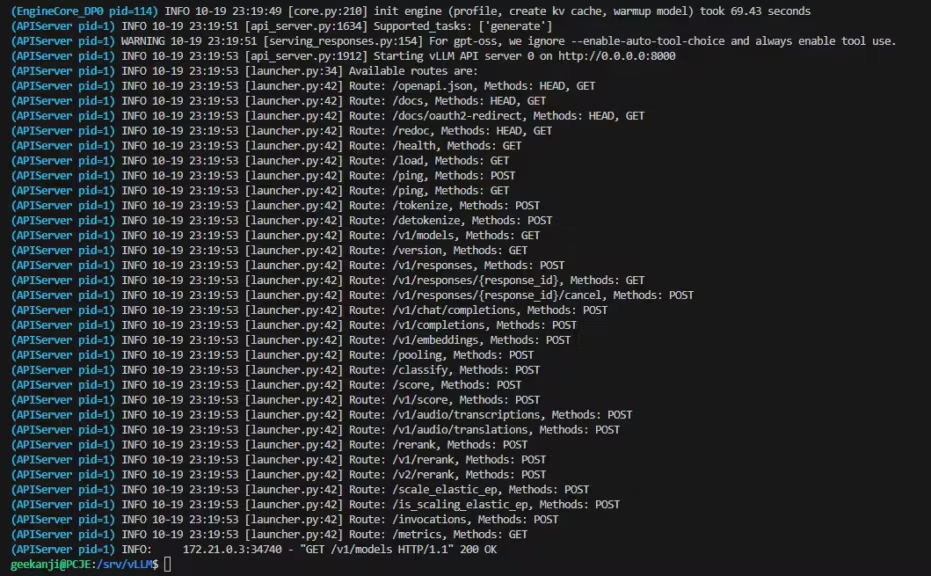

name: vllm_netChecking that vLLM runs correctly

After launching your containers, verify that vLLM is operational and that the OpenAI-compatible API responds properly.

API verification

Run this command:

curl http://localhost:8000/v1/modelsIf installation succeeded, the response will include your model’s name:

{

"object": "list",

"data": [

{

"id": "openai/gpt-oss-20b",

"object": "model",

"owned_by": "vllm"

}

]

}That confirms the model is active and ready to process requests.

Test a completion request

curl http://localhost:8000/v1/completions

-H "Content-Type: application/json"

-d '{

"model": "openai/gpt-oss-20b",

"prompt": "Explain the difference between CPU and GPU in one sentence.",

"max_tokens": 50

}'A text response should appear in the terminal.

Test from Open WebUI

Open http://localhost:3010, type your prompt, and submit. If the interface freezes, verify that:

- the vLLM container is running (docker ps)

- the API base URL is correct (http://vllm:8000/v1)

- the vLLM log shows a valid request line like INFO: “GET /v1/models HTTP/1.1” 200 OK

- no local firewall blocks port 8000

Once confirmed, your local inference server is fully operational.

Conclusion

Running vLLM with Docker Compose on Linux (or Windows 11 via WSL2) is a simple, reproducible, and high-performance way to deploy AI models locally. This method combines vLLM’s GPU-optimized inference engine, compatible with the OpenAI API, and Docker Compose’s modular management for services, volumes, and dependencies.

Beyond local execution, vLLM stands out as a production-ready inference solution. It supports optimized tensor formats like NVFP4, enabling faster loading and better memory efficiency. While slightly more technical to configure than Ollama, it provides precise parameter control, strong scalability, and stability suited to professional environments. For high-performance, optimized, and controllable AI deployments, vLLM is a benchmark solution.

To explore differences, see Ollama vs vLLM: which local LLM server should you choose?

With this setup, you now have:

- vLLM configured with GPU acceleration

- centralized Hugging Face model management

- Open WebUI interface for interaction

- full compatibility across Linux and WSL2

This architecture suits both developers and AI researchers who want to test open source models (Qwen, Mistral, Llama, DeepSeek, etc.) without cloud dependency. It can also serve as the foundation for a local AI infrastructure, extendable with tools like Ollama, LangChain, or vector databases.

In summary, vLLM + Docker Compose is among the most efficient ways to run AI locally: fast to deploy, stable, and perfectly suited for both professional and experimental use.

Additional resources

For deeper customization or advanced integration, see these resources:

Official documentation

- vLLM: https://docs.vllm.ai → Full API reference, GPU optimizations, and configuration details.

- Docker Compose: https://docs.docker.com/compose/ → YAML syntax, networking, and dependency management.

- Open WebUI: https://github.com/open-webui/open-webui → Interface customization and backend integration guide.

Recommended tools and articles

- Hugging Face Hub: Explore and download open source models like Qwen, Mistral, Llama, and DeepSeek.

- NVIDIA Container Toolkit: Official guide to GPU support and CUDA configuration.

- Ollama: A practical alternative for multi-model setups.

- LM Studio: Desktop app for local inference benchmarking.

Handy commands

# Launch all services

docker compose up -d

# View vLLM logs

docker logs -f vllm

# List available models

curl http://localhost:8000/v1/models

# Delete a cached model

rm -rf ./models/models--ModelName

Next steps

- Integrate a local vector database (ChromaDB or Qdrant) for embeddings

- Combine vLLM with an agent framework (LangChain, LlamaIndex, n8n) to build autonomous AI assistants

This setup forms a solid foundation for a local AI infrastructure: performant, modular, and easy to maintain, whether on a Linux server or a Windows machine using WSL2.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!