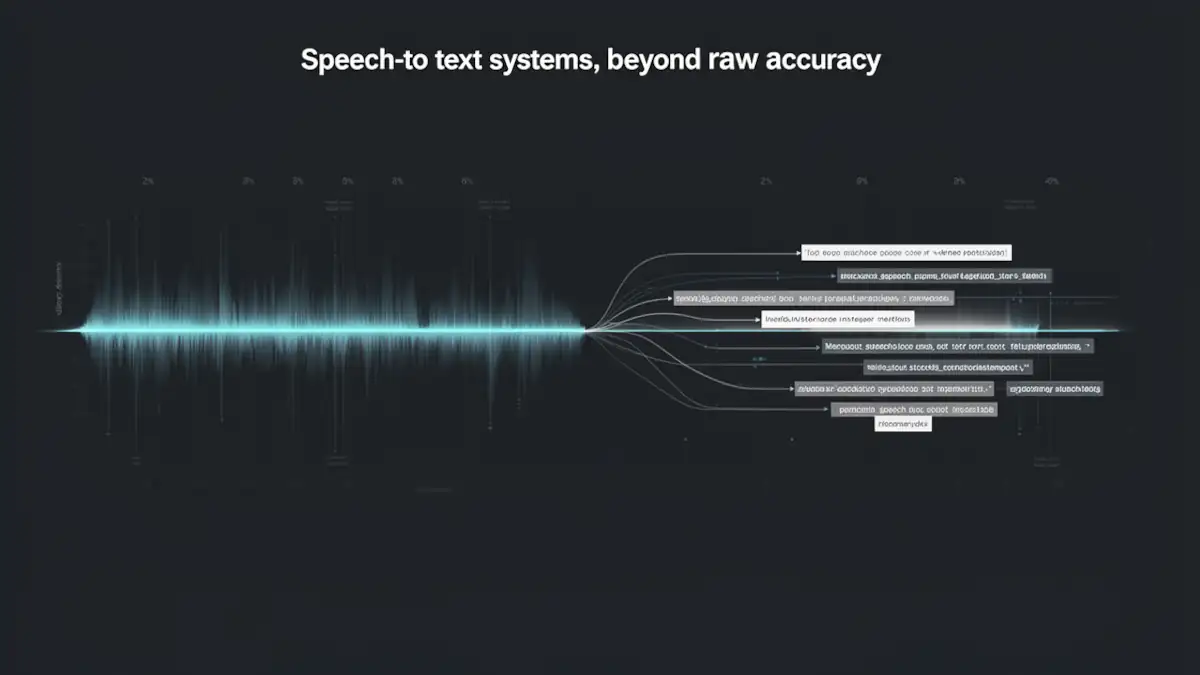

Why raw accuracy is a trap in speech-to-text evaluation

In recent months, several publications have suggested that YouTube’s automatic captions can outperform Whisper, even in its largest variants. One widely shared Medium post argues that scaling Whisper models yields diminishing returns, while YouTube’s ASR pipeline remains more accurate in practice.

At first glance, this conclusion is surprising. Whisper large-v3 is a state-of-the-art open-weight model, while YouTube captions are primarily designed for accessibility. Yet both claims (that Whisper is state of the art, and that YouTube captions are more accurate in practice) can be true, depending on how accuracy is defined, measured, and ultimately used.

This article explains why comparisons between Whisper and YouTube often lead to conflicting conclusions, and why raw metrics alone are insufficient when transcription serves as an editorial or analytical source.

The Medium article: a valid result in a narrow frame

The experiment presented in the Medium article relies on a clear protocol: a single real-world video, a comparison between multiple Whisper model sizes, a word-level accuracy estimate, and a practical conclusion suggesting that if YouTube captions exist, they should be used.

Within this specific frame, the result is consistent. YouTube benefits from a closed-source ASR pipeline that may include domain-specific optimizations, historical corrections, and contextual signals unavailable to standalone models. On certain content, especially well-produced videos in major languages, YouTube captions can indeed appear highly accurate. The problem is not the result itself, but what is inferred from it.

What accuracy actually means in transcription

Accuracy is not a single metric

In transcription evaluation, the term accuracy is often used loosely. In practice, it can refer to word-level match percentage, Word Error Rate (WER), readability, or perceived usefulness for a specific task. Two systems can achieve similar surface accuracy while producing very different editorial outcomes.

Why WER is necessary but insufficient

The Word Error Rate (WER) measures lexical distance by counting substitutions, insertions, and deletions relative to the number of words in the reference transcript. While it is a useful baseline, it does not measure semantic impact, argumentative distortion, or the reliability of the text as a source. A trivial error on a grammatical article ("a" instead of "the") and a meaning-reversing substitution each count as exactly one error. From an editorial perspective, they are not equivalent.
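To make this concrete, here is a minimal sketch of WER computed as a word-level edit distance divided by the reference length. The function name `word_error_rate` and the example sentences are illustrative assumptions, not taken from the Medium article or from our study, and the normalization (lowercasing and whitespace splitting) is deliberately simplistic.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            current.append(min(
                previous[j] + 1,          # deletion
                current[j - 1] + 1,       # insertion
                previous[j - 1] + cost,   # match or substitution
            ))
        previous = current
    return previous[-1] / len(ref)


# Invented example sentences (illustration only).
reference = "the treaty was not ratified by the senate"
trivial = "a treaty was not ratified by the senate"       # article swapped
reversing = "the treaty was now ratified by the senate"   # "not" became "now"

print(word_error_rate(reference, trivial))    # 0.125
print(word_error_rate(reference, reversing))  # 0.125
```

Both hypotheses contain a single substitution over eight reference words, so they receive the same score, even though only the second one reverses the meaning of the sentence.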

YouTube is not just another ASR model

A fundamentally different objective

YouTube captions are optimized for real-time consumption and accessibility, featuring continuous text flow, a tolerance for insertions, and segmentation aligned with video display. Whisper, by contrast, is optimized for faithful offline transcription, even if that results in pauses, hesitations, or a less fluid text.

Structural advantages

YouTube’s pipeline can leverage channel-level context, previous human corrections, and proprietary post-processing. Comparing YouTube to Whisper as if they were both isolated models ignores these structural differences. When YouTube “wins,” it is often because it is playing a different game.

Insights from our methodological study

To move beyond anecdotes, we conducted a deep-dive analysis on a defined corpus, specifically a long narrative discourse in French intended for editorial use. You can find the full technical breakdown in our Whisper large-v3 vs YouTube Auto-captions study.

- The importance of time alignment: Comparing subtitle files is misleading if timestamps diverge. In our case, YouTube exhibited a significant initial offset, making re-alignment a prerequisite for any meaningful metric.

- Global WER hides local failures: By calculating WER over 10-second windows, we observed local WER spikes exceeding 30% on conceptually dense passages, exactly where fidelity matters most.

- The hierarchy of errors: A human audit showed that Whisper errors were mostly trivial orthographic issues, while YouTube errors more often involved substitutions that altered the argumentative structure.
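The first two points above can be sketched together: shift the caption timestamps to correct the offset, then compute WER per time window. This is a simplified illustration under stated assumptions, not the code used in our study. The function names, the `(start_seconds, word)` input format, the 8-second offset, and the toy data are all hypothetical.

```python
from collections import defaultdict


def edit_distance(ref, hyp):
    """Word-level Levenshtein distance between two lists of words."""
    previous = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        current = [i]
        for j, h in enumerate(hyp, start=1):
            current.append(min(
                previous[j] + 1,              # deletion
                current[j - 1] + 1,           # insertion
                previous[j - 1] + (r != h),   # match or substitution
            ))
        previous = current
    return previous[-1]


def windowed_wer(reference, hypothesis, window=10.0, offset=0.0):
    """Return {window_start_seconds: WER} for each window with reference words.

    reference, hypothesis: lists of (start_seconds, word) pairs.
    offset: seconds added to every hypothesis timestamp before bucketing.
    """
    ref_bins = defaultdict(list)
    hyp_bins = defaultdict(list)
    for start, word in reference:
        ref_bins[int(start // window)].append(word.lower())
    for start, word in hypothesis:
        hyp_bins[int((start + offset) // window)].append(word.lower())

    result = {}
    # Windows without reference words are skipped: WER is undefined there.
    for index in sorted(ref_bins):
        ref_words = ref_bins[index]
        errors = edit_distance(ref_words, hyp_bins.get(index, []))
        result[index * window] = errors / len(ref_words)
    return result


# Toy data: the captions arrive 8 seconds late and contain one substitution.
reference = [(1.0, "the"), (2.0, "model"), (3.0, "is"), (4.0, "accurate"),
             (11.0, "but"), (12.0, "not"), (13.0, "always"), (14.0, "reliable")]
captions = [(9.0, "the"), (10.0, "model"), (11.0, "is"), (12.0, "accurate"),
            (19.0, "but"), (20.0, "now"), (21.0, "always"), (22.0, "reliable")]

unaligned = windowed_wer(reference, captions)
aligned = windowed_wer(reference, captions, offset=-8.0)
print(unaligned)
print(aligned)  # {0.0: 0.0, 10.0: 0.25}
```

In this toy case the unaligned scores are inflated by the timing shift alone, while the aligned scores isolate the single real substitution in the second window. Bucketing words by start time is a crude choice: words near a window boundary can land in different windows for the two transcripts, so a more careful implementation would align the word sequences first and then assign windows.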

Rethinking high-fidelity transcription

The Medium article and our study do not contradict each other; they answer different needs. The former seeks the fastest tool for viewing a video, while the latter seeks a reliable textual source for analysis or RAG pipelines.

Without clarifying the objective, accuracy numbers become misleading. A system optimized for accessibility can outperform a research-grade model on the surface while being unsuitable for editorial reuse. For critical projects, the most robust workflow remains hybrid: using Whisper as a base, followed by targeted human review on complex segments and audio verification for key passages.

This distinction between reading comfort and analytical rigor changes how we should evaluate voice AI. Which metrics will be able to measure semantic fidelity rather than simple word matching?
