NVFP4: Everything You Need to Know About NVIDIA’s New 4-Bit Format for AI

Artificial intelligence is moving fast, with new announcements every week, and behind some of them are technical innovations with major potential impact. In 2025, one of the formats drawing the most attention is NVFP4, a 4-bit floating-point format introduced by NVIDIA. It is designed to train and run large language models (LLMs) faster and with fewer resources. 4-bit quantization itself is not a new idea, but NVFP4 is optimized in a way that could reshape the landscape for researchers, developers, and enterprises.

Why did NVIDIA launch NVFP4? How does it differ from well-known formats like FP8 or BF16? Could it become the standard for 4-bit quantization, ahead of other approaches such as Unsloth's?

What is NVFP4?

NVFP4 is a proprietary 4-bit floating-point format from NVIDIA. Each numerical value is represented using only 4 bits:

- 1 bit for the sign

- 2 bits for the exponent

- 1 bit for the mantissa (E2M1 format)

At first glance, this looks extremely minimalistic. And it is: moving from 16-bit (BF16) to 8-bit (FP8) and then down to 4-bit (NVFP4) is like going from a four-lane highway to a narrow country road. But NVIDIA has found a way to keep the traffic flowing.
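To make the E2M1 layout concrete, the short sketch below decodes a 4-bit code into its value. It assumes the exponent bias of 1 and the subnormal rule used by the E2M1 element type in the OCP Microscaling (MX) specification, which NVFP4 shares; `decode_e2m1` is a hypothetical helper name, not an NVIDIA API.

```python
def decode_e2m1(code: int) -> float:
    """Decode a 4-bit E2M1 code laid out as sign | 2-bit exponent | 1-bit mantissa."""
    if not 0 <= code <= 0xF:
        raise ValueError("E2M1 codes are 4 bits wide")
    sign = -1.0 if code & 0b1000 else 1.0
    exponent = (code >> 1) & 0b11  # the 2 exponent bits
    mantissa = code & 0b1          # the single mantissa bit
    bias = 1                       # assumed E2M1 exponent bias
    if exponent == 0:
        # Subnormal: no implicit leading 1, exponent fixed at 1 - bias
        magnitude = (mantissa / 2) * 2.0 ** (1 - bias)
    else:
        magnitude = (1 + mantissa / 2) * 2.0 ** (exponent - bias)
    return sign * magnitude


# The eight non-negative codes (sign bit = 0)
print(sorted({decode_e2m1(c) for c in range(8)}))  # [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
```

Every NVFP4 element is therefore one of just 15 distinct values between -6 and +6, which is why the scaling scheme described next matters so much.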

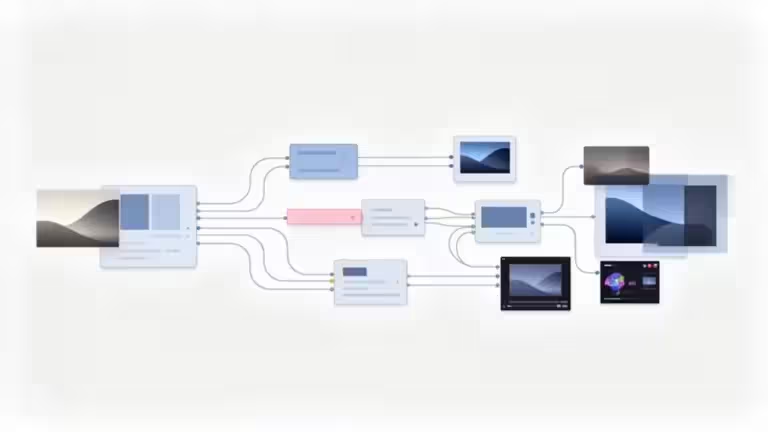

The secret lies in a multi-scale system:

- Each block of 16 consecutive values shares an FP8 (E4M3) scale factor, which limits local precision loss.

- A second, per-tensor FP32 scale brings the whole tensor into the range that the FP8 block scales can represent.

The result: the numerical accuracy remains close to FP8, while memory consumption and compute time are drastically reduced (NVIDIA Developer Blog).
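The two-level scheme can be sketched in pure Python as follows. This is a simplified illustration under stated assumptions, not NVIDIA's implementation: the per-tensor scale is chosen as amax / (6 × 448) so that block scales fit in E4M3 (one common recipe), elements are kept as decoded floats rather than packed 4-bit codes, E2M1 rounding is plain round-to-nearest, and all function names are hypothetical.

```python
import math

E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]  # non-negative E2M1 values
E2M1_MAX = 6.0    # largest E2M1 magnitude
E4M3_MAX = 448.0  # largest FP8 E4M3 magnitude
BLOCK_SIZE = 16   # values sharing one block scale


def round_to_e4m3(x: float) -> float:
    """Round a non-negative scale to the nearest FP8 E4M3 value, saturating at 448."""
    if x <= 0.0:
        return 0.0
    x = min(x, E4M3_MAX)
    exponent = max(math.floor(math.log2(x)), -6)  # -6 is the smallest normal exponent
    step = 2.0 ** (exponent - 3)                  # 3 mantissa bits per binade
    return min(round(x / step) * step, E4M3_MAX)


def round_to_e2m1(x: float) -> float:
    """Round to the nearest E2M1 value, clamping to +/-6 (ties go to the smaller magnitude)."""
    magnitude = min(abs(x), E2M1_MAX)
    nearest = min(E2M1_GRID, key=lambda g: abs(g - magnitude))
    return math.copysign(nearest, x)


def quantize(values):
    """Two-level scaling: one FP32 scale per tensor, one E4M3 scale per 16-value block."""
    amax = max((abs(v) for v in values), default=0.0)
    # Assumed recipe: map the tensor's largest magnitude to E2M1_MAX * E4M3_MAX
    global_scale = amax / (E2M1_MAX * E4M3_MAX) if amax > 0 else 1.0
    blocks = []
    for start in range(0, len(values), BLOCK_SIZE):
        block = values[start:start + BLOCK_SIZE]
        block_amax = max(abs(v) for v in block)
        block_scale = round_to_e4m3(block_amax / E2M1_MAX / global_scale)
        if block_scale == 0.0:
            elements = [0.0] * len(block)
        else:
            elements = [round_to_e2m1(v / (block_scale * global_scale)) for v in block]
        blocks.append((block_scale, elements))
    return global_scale, blocks


def dequantize(global_scale, blocks):
    """Multiply each 4-bit element back by its block scale and the global scale."""
    out = []
    for block_scale, elements in blocks:
        out.extend(e * block_scale * global_scale for e in elements)
    return out


weights = [math.sin(i) * (1 + i % 5) for i in range(64)]
g, blocks = quantize(weights)
restored = dequantize(g, blocks)
```

Because each block gets its own scale, a block of small values is not forced onto the coarse grid dictated by the largest value in the whole tensor; the per-tensor scale only exists so the block scales stay inside E4M3's range.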

Why NVIDIA created NVFP4

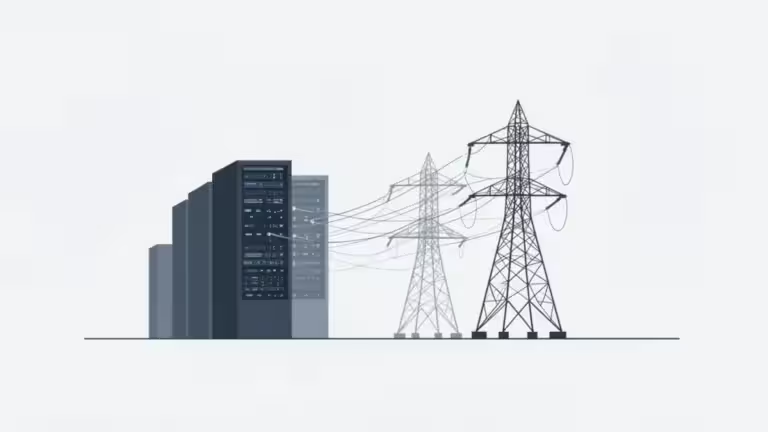

The goal is clear: train and serve larger AI models faster and with less energy, at a time when cloud giants and research labs face skyrocketing AI costs.

- Reduced memory footprint: NVFP4 roughly halves memory use compared to FP8 (about 4.5 bits per value once the FP8 block scales are counted, versus 8 bits).

- Higher speed: On Blackwell GPUs, NVFP4 can be 4 to 6 times faster than BF16 (Tom’s Hardware).

- Energy efficiency: some analyses estimate up to 50× higher inference efficiency compared to heavier formats.

In short, NVFP4 is a strategic lever for NVIDIA, addressing the massive demands of generative AI while also reassuring enterprises facing exploding energy bills.
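The memory claim is simple arithmetic. The sketch below counts storage per value, amortizing one 8-bit E4M3 scale over each block of 16 NVFP4 elements, and applies it to the weights of a 12-billion-parameter model (an illustrative size). It ignores per-tensor scales (negligible), activations, and the KV cache; the function names are hypothetical.

```python
def bits_per_value(element_bits: int, block_size: int = 0, scale_bits: int = 0) -> float:
    """Average storage per element, amortizing one scale factor over each block."""
    if block_size == 0:
        return float(element_bits)
    return element_bits + scale_bits / block_size


def weight_memory_gb(num_params: float, bits: float) -> float:
    """Weight storage in gigabytes (10**9 bytes)."""
    return num_params * bits / 8 / 1e9


FORMATS = {
    "BF16": bits_per_value(16),
    "FP8": bits_per_value(8),                                   # per-tensor scale ignored
    "NVFP4": bits_per_value(4, block_size=16, scale_bits=8),    # + one E4M3 scale per 16 values
}

for name, bits in FORMATS.items():
    print(f"{name:>5}: {bits:4.1f} bits/value, {weight_memory_gb(12e9, bits):6.2f} GB for 12B weights")
```

This prints 24.00 GB for BF16, 12.00 GB for FP8 and 6.75 GB for NVFP4: the block scales add 0.5 bit per value, so NVFP4 lands at about 56% of FP8 rather than exactly half.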

NVFP4 vs FP8 vs BF16: Key differences

To understand the value of NVFP4, we need to compare it to the most widely used formats:

- BF16: long the reference for training, the most precise of the three, but costly in both memory and compute.

- FP8: adopted around 2022–2023, much lighter than BF16 with only minor precision losses.

- NVFP4: even more compact, yet thanks to its two-level scaling and stabilization techniques such as stochastic rounding, it delivers accuracy close to FP8.
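Stochastic rounding, mentioned in the NVFP4 bullet above, is easiest to see on a single value. The sketch below contrasts round-to-nearest with stochastic rounding onto the E2M1 grid: nearest rounding maps 2.4 to 2.0 every time, while stochastic rounding picks 2.0 or 3.0 with probabilities chosen so that the average equals 2.4. It only illustrates the unbiasedness property; the function names are hypothetical, and the study applies the technique to gradients inside training, not to isolated scalars like this.

```python
import math
import random

E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def nearest_e2m1(x: float) -> float:
    """Deterministic round-to-nearest onto the E2M1 grid (clamped to +/-6)."""
    magnitude = min(abs(x), 6.0)
    return math.copysign(min(E2M1_GRID, key=lambda g: abs(g - magnitude)), x)


def stochastic_e2m1(x: float, rng: random.Random) -> float:
    """Round up or down at random so that the expected result equals x (within +/-6)."""
    magnitude = min(abs(x), 6.0)
    # Find the two grid points that bracket the magnitude
    lo, hi = next((a, b) for a, b in zip(E2M1_GRID, E2M1_GRID[1:]) if a <= magnitude <= b)
    p_up = (magnitude - lo) / (hi - lo)  # the closer to hi, the more likely to round up
    return math.copysign(hi if rng.random() < p_up else lo, x)


rng = random.Random(0)
x, n = 2.4, 100_000
nearest = nearest_e2m1(x)  # always 2.0: a bias of -0.4 on every call
mean_stochastic = sum(stochastic_e2m1(x, rng) for _ in range(n)) / n
print(nearest, mean_stochastic)
```

Each individual stochastic result is noisier, but over many gradient updates the errors average out instead of accumulating in one direction.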

According to a study published on arXiv in September 2025, a 12-billion-parameter model trained on 10 trillion tokens in NVFP4 reached a validation loss within 1–1.5% of its FP8 counterpart. In practice, this means almost no noticeable difference in final results.

How NVIDIA solved the stability challenge

Training a model with as few as 4 bits is not trivial. Without safeguards, calculations quickly become unstable. To solve this, researchers combined several techniques:

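- High-precision layer selection: a small number of critical layers, mostly the final ones, stay in higher precision (FP8 or FP16) instead of NVFP4.

- Random Hadamard transforms: an orthogonal rotation that spreads outliers across a block, so the block scale is no longer dictated by a single extreme value.

- 2D quantization and stochastic rounding: 2D block scaling keeps the quantized weights consistent between the forward and backward passes, while stochastic rounding avoids systematic rounding bias in the gradients.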

These methods were validated in the study by Abecassis et al., Pretraining Large Language Models with NVFP4, which has quickly become a key academic reference on the topic (arXiv).
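Why a Hadamard rotation helps can be shown on one 16-value block containing a single outlier. The sketch below applies a random sign flip followed by an orthonormal Walsh-Hadamard transform, then measures the crest factor (peak magnitude divided by RMS). Since the block scale is set by the peak, a lower crest factor means the remaining values use more of the E2M1 grid. Because the normalized Hadamard matrix H is orthogonal, applying it to both operands of a matrix product leaves the result unchanged. This is a hedged illustration with hypothetical names; the study applies the transform to selected matrix-multiplication inputs, not to a toy block like this.

```python
import math
import random


def fwht(vec):
    """Fast Walsh-Hadamard transform, normalized so the transform is orthonormal."""
    v = list(vec)
    n = len(v)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    h = 1
    while h < n:
        for i in range(0, n, 2 * h):
            for j in range(i, i + h):
                a, b = v[j], v[j + h]
                v[j], v[j + h] = a + b, a - b
        h *= 2
    norm = math.sqrt(n)
    return [x / norm for x in v]


def random_hadamard(vec, signs):
    """Random diagonal sign flip D, then orthonormal Hadamard H: computes H D x."""
    return fwht([s * x for s, x in zip(signs, vec)])


def inverse_random_hadamard(vec, signs):
    """H is symmetric and orthogonal (its own inverse), so D H undoes H D."""
    return [s * x for s, x in zip(signs, fwht(vec))]


def crest_factor(vec):
    """Peak magnitude divided by root-mean-square value."""
    rms = math.sqrt(sum(x * x for x in vec) / len(vec))
    return max(abs(x) for x in vec) / rms


rng = random.Random(42)
signs = [rng.choice((-1.0, 1.0)) for _ in range(16)]
block = [0.1] * 15 + [8.0]  # one large outlier among small values
rotated = random_hadamard(block, signs)
restored = inverse_random_hadamard(rotated, signs)
print(crest_factor(block), crest_factor(rotated))  # the crest factor shrinks sharply after rotation
```

The outlier's energy is spread evenly over all 16 positions while the total energy is preserved, and the inverse transform recovers the original block exactly up to floating-point error.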

Adoption across the ecosystem

NVFP4 is not just a theoretical idea. It is already supported in multiple tools and projects:

- Transformer Engine and TensorRT-LLM (open-source NVIDIA frameworks).

- Open-weight models such as Nemotron or Gemma SEA-LION 27B NVFP4, available on Hugging Face.

- Real-world deployments such as DeepSeek-R1, which tested NVFP4 to boost throughput (Introl Tech Blog).

- vLLM already supports the NVFP4 format.

- At the time of writing, Ollama does not support NVFP4, and its release page makes no announcement about it.

- Many open-weight models are available in NVFP4 on Hugging Face, including Mistral, Qwen, Llama, and Apertus (Hugging Face search).

Also read: Install vLLM with Docker Compose on Linux (compatible with Windows WSL2)

What this means for developers and enterprises

For developers and researchers, NVFP4 opens the door to:

- training larger models on local hardware than was previously possible,

- reducing energy consumption on compute clusters,

- accelerating experimentation cycles by cutting training costs.

For enterprises, this translates into a way to reduce overall AI costs, whether by running their own Blackwell GPUs or by relying on cloud providers as they adopt this format at scale.

Limitations and open questions

NVFP4 looks promising, but there are still questions to address:

- Precision loss on certain tasks? → Early tests show minor drops on coding tasks, but it is unclear whether this holds across more models.

- Wide compatibility? → At present, NVFP4 is mostly tied to Blackwell GPUs, with earlier architectures unable to fully benefit.

- Competing formats? → Will rivals like MXFP4 or other open FP4 standards find their place?

Conclusion

NVFP4 is a bit like moving from a fuel-hungry SUV to a high-performance electric car: nearly the same power, with much lower consumption. By roughly halving memory compared to FP8 and running up to 6× faster than BF16 on Blackwell GPUs, NVIDIA has introduced a tool designed for the era of generative AI.

Whether NVFP4 will become the new industry standard or remain confined to Blackwell GPUs and NVIDIA-driven projects remains to be seen. But one thing is certain: anyone interested in the future of LLM training will need to keep an eye on NVFP4.

NVFP4 article series

- NVFP4: Everything You Need to Know About NVIDIA’s New 4-Bit Format for AI ← You are here

- NVFP4 vs FP8 vs BF16 vs MXFP4: Comparing Low Precision Formats for AI

- NVFP4 Stability and Benchmarks: What Academic Studies and Tests Show

- Why NVFP4 Matters for Businesses: Cost, Speed, and Adoption in AI

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!