Ollama by default in Q4: why your AI models lose accuracy

When installing an AI model through Ollama, it’s easy to believe you’re running the full version with all its accuracy and capabilities. In reality, things are different: by default, Ollama distributes most of its models in 4-bit quantization (Q4_K_M), a compression method designed to reduce memory usage and speed up execution. While convenient, this optimization leads to a loss of quality and precision compared to full precision versions (FP16 or FP32).

In this article, we’ll explore why Ollama made this choice, what the limitations are, and most importantly how users can work around this compromise when they need higher fidelity results and have the right hardware.

What is quantization in Ollama?

Quantization means reducing the numeric precision used to represent a model’s parameters. Instead of storing weights in 16 or 32 bits, Ollama often uses 4-bit formats like Q4_K_M, or sometimes Q4_0 for older releases (Hugging Face).

- Main advantage: a Q4-compressed model requires much less memory and can run on consumer hardware, even with GPUs limited in VRAM or on CPUs.

- Downside: part of the model’s nuance and precision is lost, which can result in less nuanced answers or more frequent mistakes.

As explained by Dataiku, quantization is always a trade-off between speed and quality: the smaller the numbers, the faster the execution, but at the cost of the model’s reasoning performance.

Why does Ollama default to Q4?

Ollama’s stated goal is simple: democratize local AI models. By offering default variants in Q4_K_M, the platform enables users without high-end GPUs to run models like LLaMA 2, Gemma, or DeepSeek directly on their machines.

This approach focuses on accessibility:

- A 7B model in FP16 may need more than 16 GB of VRAM, while in Q4 it only requires 4–5 GB.

- Inference speed is significantly improved, making the user experience smooth even on modest systems.

In other words, Ollama prioritizes compatibility and speed over raw accuracy. This explains why many users later discover their models are less precise than expected.

The limits of Q4: when accuracy fails

Even if Q4_K_M quantization is seen as one of the best current compromises (Towards AI), it comes with limitations:

- Loss of nuance: answers may sound more generic, with reduced reasoning depth.

- Subtle errors: sensitive tasks (precise calculations, complex logic) may return wrong results.

- Weakened abilities: in highly compressed models, coding or advanced reasoning can degrade.

As one user pointed out on Stack Overflow, the same model can produce less reliable answers in Ollama compared to its non-quantized Hugging Face version.

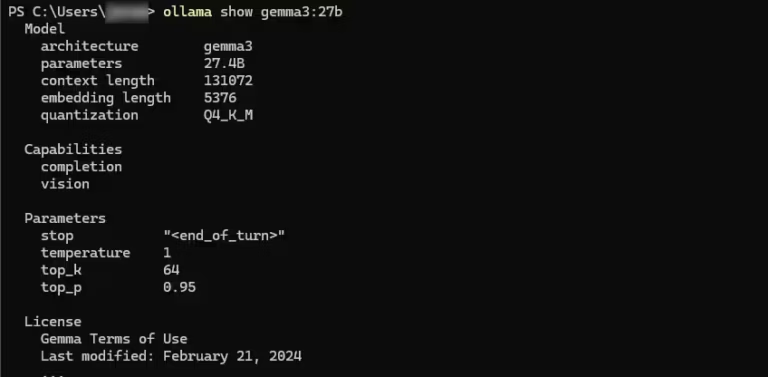

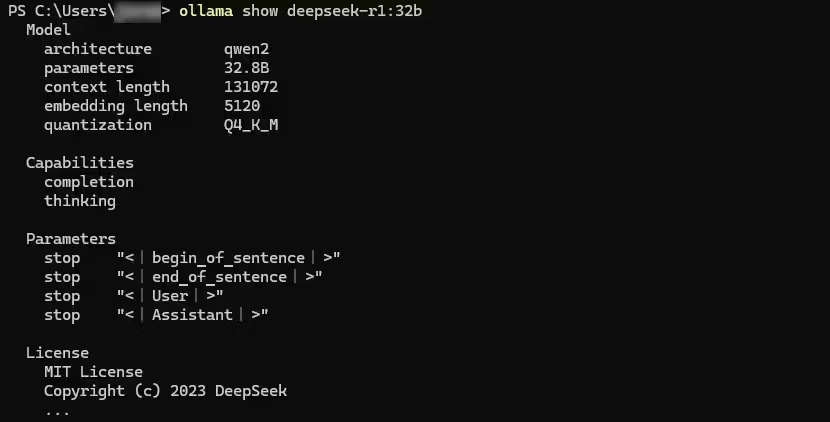

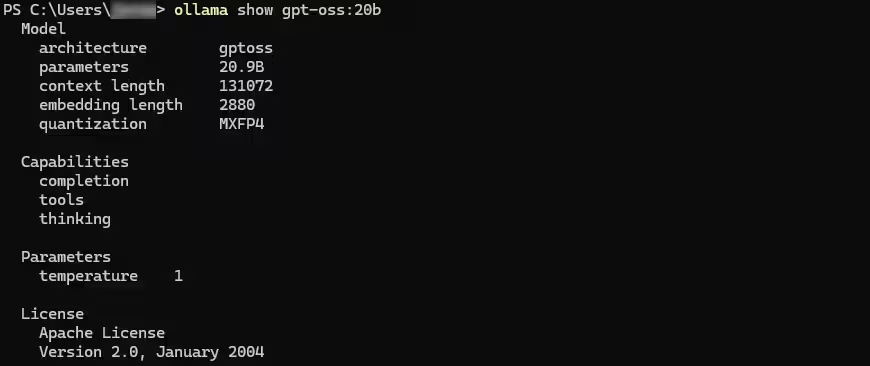

How to check your model’s quantization?

A common trap is assuming the ollama list command shows quantization. It doesn’t. It only displays the model’s name, ID, and size.

To see the exact quantization level, you need to use:

ollama show <model-name>For example:

ollama show gemma3:27bThe output will display detailed information, including the line:

quantization: Q4_K_M

This is the most reliable way to know if your model runs in Q4, Q5, Q6, or Q8.

Are there alternatives for better accuracy?

Fortunately, Ollama is not limited to Q4. You can:

- Download higher-precision variants like Q6_K or Q8_0, suited for more powerful GPUs.

- Import your own models from Hugging Face with the quantization of your choice (Ollama Docs).

- Mix approaches: use a Q4 model for lightweight tasks and a Q8 model for critical workloads.

When Q4 is enough… and when it becomes a problem

The Q4 choice isn’t inherently bad. It depends on the use case:

- Use cases suited for Q4:

- Basic conversational chat

- Quick summaries

- General questions

- Prototyping on laptops or modest machines

- Cases where Q4 is limiting:

- Complex code analysis

- Advanced math or logic reasoning

- Demanding academic research

- Professional critical applications

In these scenarios, default quantization becomes a bottleneck, and it’s better to choose a higher-precision variant even if it requires more resources.

Conclusion: a strength that is also a weakness

Ollama’s decision to enforce Q4_K_M quantization by default aligns with its mission: making local AI accessible to as many users as possible without requiring a multi-thousand-euro GPU. Thanks to this approach, powerful models like LLaMA, Gemma, and DeepSeek can run on laptops or modest desktops.

However, this democratization comes at a price: loss of precision and reliability. For daily or exploratory usage, this trade-off is acceptable. But when dealing with sensitive applications (development, research, data analysis), the limitation becomes obvious.

In short, Ollama is a great entry point into local AI, but users who want to unlock the full richness of models must quickly consider other quantizations or alternative solutions.

See also: Ollama vs vLLM: which solution should you choose for serving local LLMs?

Practical tips: how to choose the right quantization?

Here are some simple guidelines to adapt your usage:

1. Evaluate your hardware

- Less than 8 GB VRAM (or CPU only) → stick with Q4_K_M. It’s the only realistic option for smooth usage.

- Between 12 and 24 GB VRAM → go for Q6_K, which balances better accuracy while staying accessible.

- 32 GB VRAM and above (RTX 5090, dedicated servers, Pro GPUs) → choose Q8_0 or even FP16 if available, to keep maximum quality.

Note that BF16 models are not currently supported by Ollama. Even though they can be imported, they are converted to FP16. A future update of Ollama could eventually improve this.

2. Match the use case

- General chat, summaries, prototyping → Q4 is more than enough.

- Development, code generation, mathematical logic → Q6 is recommended.

- Critical research, production AI, scientific generation → Q8 or FP16 to avoid reliability issues.

3. Check quantization before use

Always run:

ollama show <model>This ensures you know the exact format your model is running. Many users realize too late that their results are biased by overly aggressive quantization.

4. Import your own variants

If Ollama doesn’t provide the quantization you want, you can import a Hugging Face model and convert it to the right format (Ollama Docs).

Final word

Ollama isn’t trying to trick its users: its default choice reflects a pragmatic vision where accessibility and speed matter more than perfection. But understanding the implications of quantization is crucial for any advanced user.

With this knowledge, you can decide whether to:

- stick with Q4 to enjoy a lightweight and fast local AI,

- or invest in higher-precision quantization to unlock the full potential of modern models.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!