Ollama vs vLLM: which solution should you choose to serve local LLMs?

The rise of local LLM inference is reshaping how developers, researchers, and companies deploy artificial intelligence. Two names dominate most conversations: Ollama and vLLM. These two local LLM servers embody very different philosophies. One prioritizes simplicity, the other focuses on performance and scalability. So, Ollama vs vLLM, which solution should you choose to serve your language models locally? These solutions are sometimes presented as similar, yet they differ on many points. Choosing the right tool for your use case is essential, whether to maintain output quality or fit hardware constraints.

Why install a local LLM server?

Historically, using a large language model meant running in the cloud. That creates issues, high costs, constant network dependency, privacy risks for data confidentiality. Today, with tools like Ollama and vLLM, you can run an LLM on a PC or GPU server.

The advantages are clear:

- Privacy: prompts and data remain in house.

- Responsiveness: no network latency, ideal for interactive use.

- Cost control: no subscriptions, no per-request billing.

- Customization: add RAG, Retrieval-Augmented Generation, fine-tuning, or system prompts tailored to your context.

- Hardware optimization: fully leverage a powerful GPU like an RTX 4090, an RTX 5090, or a professional card such as Quadro, A100, H100.

Overview: Ollama vs vLLM

Ollama: simplicity first

Ollama has become the most accessible way to try local LLM inference.

- Fast install on Windows, macOS, and Linux.

- Built-in model hub with ready-to-run variants, LLaMA, Mistral, Qwen, Gemma, DeepSeek.

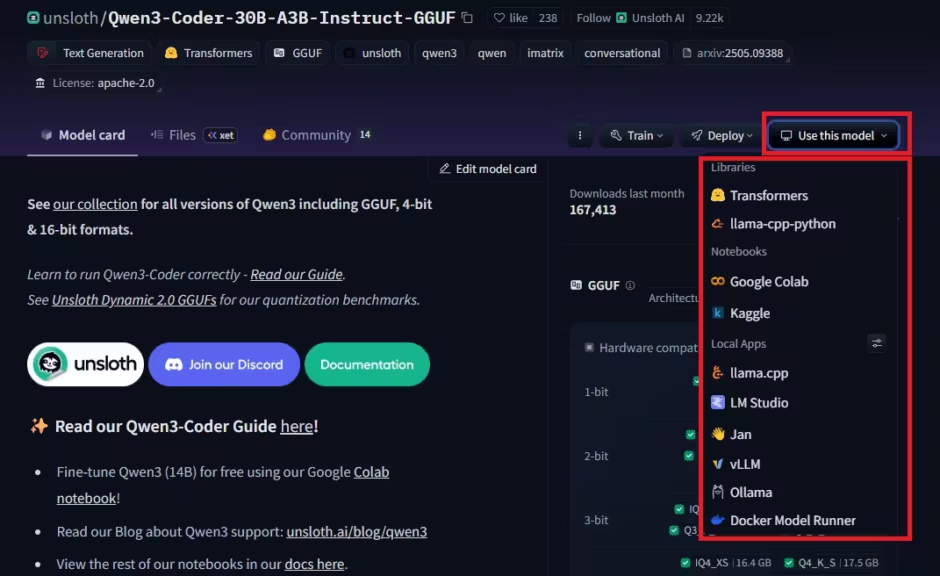

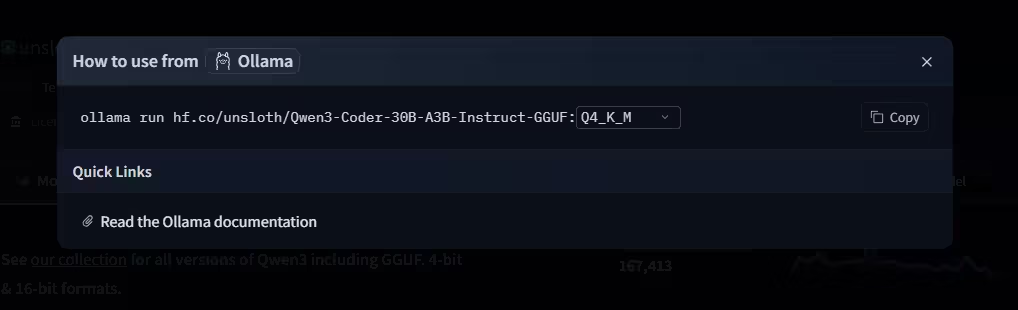

- Compatibility with GGUF formats, Unsloth dynamic quantization is supported. By default you get Q4-quantized models from the Ollama library. You can also pull models from Hugging Face, including Unsloth models. The Hugging Face UI shows commands to run models in Ollama and other tools.

- CPU and GPU support (NVIDIA, AMD, Apple Silicon).

- OpenAI-compatible API, easy integration with Open WebUI, LangChain, or your Python scripts.

There are trade-offs:

- By default, Ollama prioritizes Q4_K_M quantization. Result, easier startup, but lower accuracy than FP16 or BF16.

- Hard to saturate high-end GPUs like an RTX 5090 with 32 GB VRAM. Even with large models, Ollama may not fully load the GPU. The server also plateaus and latency climbs quickly, as many reports on Reddit and Hacker News show.

- Limited performance once you exceed about a dozen concurrent connections.

In short, Ollama is ideal for quick model trials, offline work, or exploring the LLM ecosystem with minimal effort. The simplicity comes with a cost, lower precision.

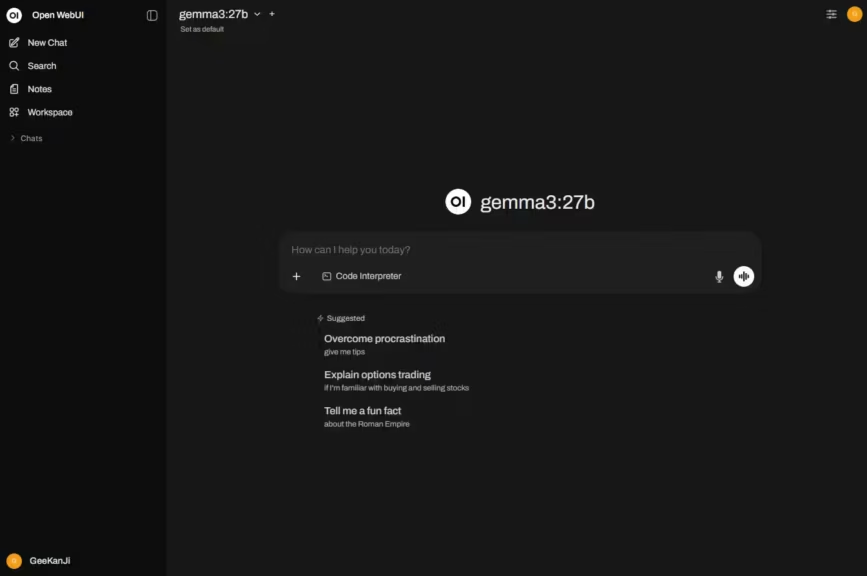

If you prefer not to use the command line, you can layer a web interface similar to ChatGPT. Open WebUI is one such option and also installs very easily.

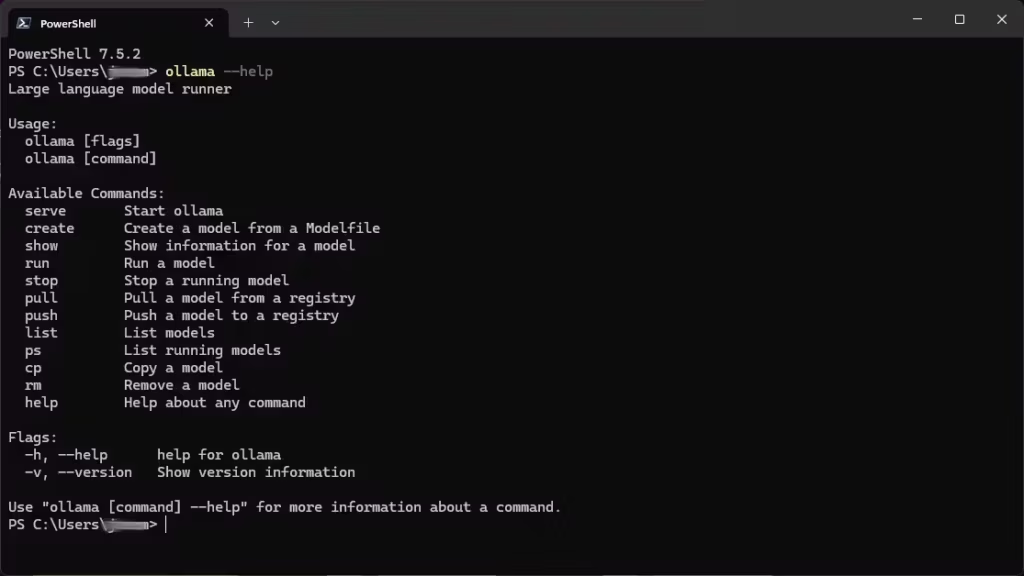

Installation and first steps

Setup is accessible to everyone, download Ollama from the official site, or use a package manager, then run a single command, for example ollama run mistral. Ollama downloads, configures, and serves the model without advanced configuration. The minimal CLI lets you:

- List models (ollama list)

- Find a model on Ollama’s Search page and download it. The exact commands are shown on each model page.

- Start or stop an instance

- Check server status

- Manage multiple local models

How to use Hugging Face models with Ollama

As noted, Ollama defaults to Q4-quantized models, which supports wide accessibility. If you want more accuracy and control, you can download models from Hugging Face and run them in Ollama.

Hugging Face provides a simple helper to streamline this process:

Copy the command line and run it in your environment:

Here is the translated and SEO-optimized version in English, with the requested adaptation:

Ollama and BF16 support: what really happens

A frequent question in the AI community is whether Ollama truly runs models in BF16 when you execute a command like:

ollama run hf.co/unsloth/Qwen3-30B-A3B-Instruct-2507-GGUF:BF16In practice, Ollama does download the model in BF16 format (either safetensors or GGUF). However, during the import pipeline, all tensors are automatically converted to FP16. This means that even if the model file is labeled BF16, inference inside Ollama is always performed in FP16.

The implications are clear:

- No native BF16 hardware acceleration on modern GPUs such as NVIDIA RTX 40-series or H100.

- Loss of the BF16 advantage in balancing memory usage and accuracy, since the model is stored and executed in FP16.

This behavior has been confirmed in multiple GitHub discussions:

- Importing BF16/FP32 models converts to FP16 (#9944)

- BF16 models run as FP16 without native BF16 support (#4670)

- Bug when importing BF16 GGUF models (#9343)

As of today, Ollama offers no option to preserve a model in native BF16 or FP32. If you aim to maximize your performance and AI accuracy, this limitation is important to keep in mind.

vLLM: power for professional environments

vLLM targets a different audience, demanding developers, researchers, and enterprises. It is built for production deployment on servers, yet it also works well on a local workstation.

- CUDA-optimized: specialized GPU kernels, advanced memory management.

- Supports Hugging Face Transformers (LLaMA, Mistral, Falcon, Qwen, Gemma, DeepSeek), efficient FP16 and BF16 loading.

- Better long context handling (128k tokens and beyond), which enables advanced use cases.

- High throughput: handles hundreds or thousands of concurrent requests.

- Multi-GPU and clustering via Ray: ideal for heavy workloads.

There are constraints:

- More complex setup (Linux or Windows via WSL, Python, up-to-date CUDA).

- NVIDIA GPUs only: no official support for CPU inference, AMD GPUs, or Apple Silicon.

- High default VRAM usage, about 90 percent of the GPU allocated.

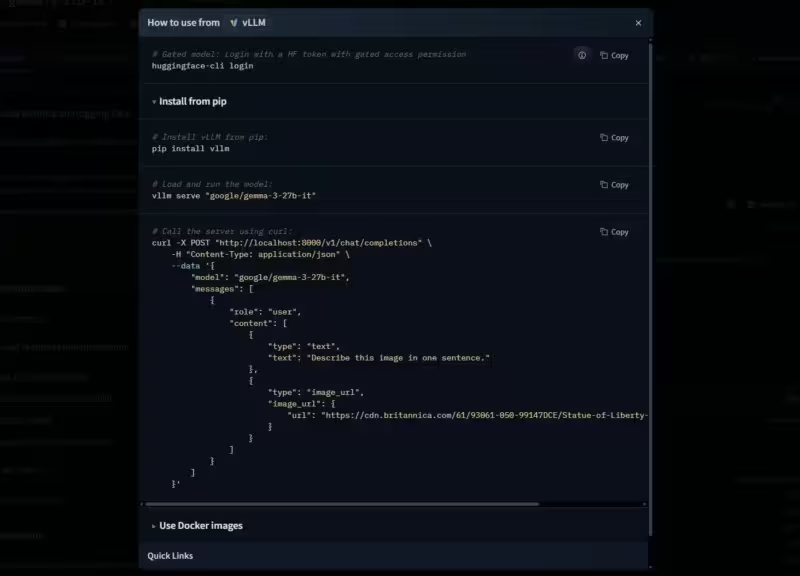

- No integrated model hub: you fetch models from Hugging Face. To simplify this, Hugging Face shows how to install models with vLLM and other tools such as Ollama, Llama.cpp, LM Studio, Docker Model Runner.

In summary, vLLM is the right choice to fully utilize a GPU workstation, RTX 4090 or 5090, A100, H100, and to deploy LLMs in production. In professional environments it is a common production engine, so learning vLLM will be more useful if you are a developer, consultant, or ML engineer.

Example workflow: download a Hugging Face model, configure vLLM to use two GPUs, connect the API to an interface like Open WebUI or LangChain, and serve hundreds of parallel requests without saturating the machine.

vLLM is the turbo engine to deploy when the highway is open and you want to go faster.

Installation and configuration

To use vLLM, you need:

- Linux, or WSL on Windows

- A modern Python environment, ideally Python 3.10 or 3.12

- At least one recent NVIDIA GPU with an up-to-date CUDA driver

- Install vLLM via pip (pip install vllm) or with a manager like UV, for environment isolation

- Download a compatible model, for example Gemma, Mistral, Llama, DeepSeek, from Hugging Face, then serve it with

vllm serve <model_name> --host 127.0.0.1 --port 8000 - Tune settings to your hardware, multi-GPU, VRAM control with –gpu-memory-utilization, etc.

Installation requires more technical comfort than Ollama, yet remains accessible if you are used to Python and managing AI servers.

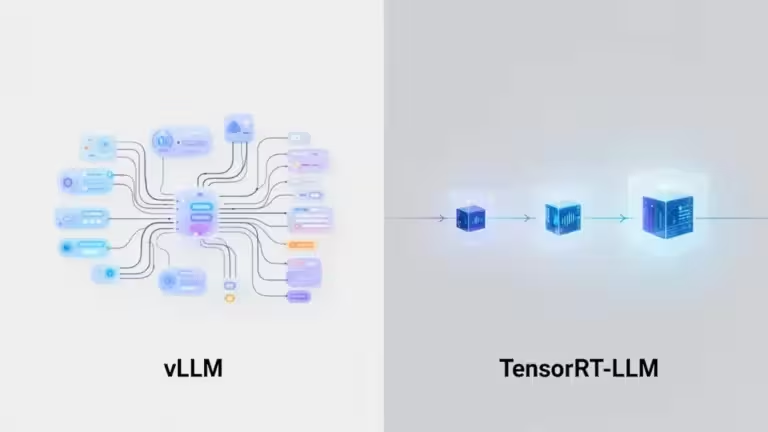

Alternatives to Ollama and vLLM

Even if Ollama and vLLM dominate local LLM inference, other backends are worth mentioning:

- Hugging Face Transformers: flexible and central to the ecosystem, but slower and less scalable without an optimized backend.

- Text Generation Inference, TGI: Hugging Face’s production-ready serving stack.

- TensorRT-LLM, NVIDIA: highly optimized for CUDA, more complex to set up.

- LM Studio: user-friendly cross-platform alternative, personal use focus.

Benchmarks Ollama vs vLLM: performance and latency

To understand the real-world gap between Ollama and vLLM, you need a like-for-like benchmark on the same hardware and model. Robert McDermott presents such a test focusing on latency, scalability, and throughput. It does not cover vLLM’s ability to run larger models while preserving accuracy, which is a key criterion even for local inference.

Throughput and scalability

- Ollama hits limits fast, about 22 requests per second with a 14B model on GPU.

- vLLM keeps scaling and delivers up to 3.2x more requests per second with 128 concurrent connections.

Latency

- Ollama feels smooth for a few users, but latency spikes above 10 to 20 connections.

- vLLM keeps latency low under heavy load.

VRAM usage

- Ollama uses VRAM conservatively, which limits efficiency on high-end GPUs.

- vLLM aggressively allocates VRAM, configurable, to maximize throughput.

Stability

- Ollama can become unstable during very long sessions or intensive workloads.

- vLLM is widely recognized for production-grade robustness.

Recommended use cases

When to choose Ollama

- Quick trial of an LLM.

- Personal or exploratory use, chatbots, summarization, content generation.

- Offline work on laptops, PCs without a GPU, or Mac M1, M2, M3.

- Scenarios where simplicity matters more than raw performance.

When to choose vLLM

- Enterprise deployment, internal API, team chatbot, SaaS.

- Preserve model accuracy.

- Need scalability with hundreds of users.

- Optimal usage of RTX 5090 or professional GPUs, A100, H100.

- Use cases requiring long context and long-running stability.

Comparative summary Ollama vs vLLM

| Criterion | Ollama | vLLM |

|---|---|---|

| Installation | Simple, cross-platform | Complex, Linux or WSL |

| Supported hardware | CPU, NVIDIA or AMD GPU, Apple Silicon | NVIDIA GPU only |

| Performance | Very good under 10 users, plateaus fast | Extreme scalability, 1000+ users |

| Models | Packaged GGUF Import from Hugging Face | Hugging Face Transformers, safetensors, PyTorch partial GGUF support |

| Accuracy | Q4_K_M by default Other formats possible via import | FP16, BF16, quantized |

| VRAM management | Conservative | Optimized, 90 percent VRAM |

| Multi-GPU | Basic | Advanced, Ray and clustering |

| Stability | Good for light use | Excellent in production |

| API | OpenAI compatible | OpenAI compatible |

| Use cases | Tests, personal projects, limited users | Production, SaaS, research |

Conclusion: Ollama vs vLLM, which solution should you choose?

The duel Ollama vs vLLM captures two visions of local LLM inference.

- Ollama: the mainstream tool, simple and fast, perfect to explore and test.

- vLLM: the race engine, built for performance, accuracy, scalability, and professional environments.

If you are starting out or need a local chatbot that launches quickly, Ollama is hard to beat. If you want to fully utilize a high-end GPU, keep large-model accuracy, and serve hundreds of parallel users, vLLM is the reference.

In practice, the best advice is to test both on your hardware and real use cases. You will quickly see whether your needs fit Ollama’s simplicity or vLLM’s power.

Also read : Install vLLM with Docker Compose on Linux (compatible with Windows WSL2)

FAQ Ollama vs vLLM: frequent questions answered

Does vLLM run on Windows or CPU?

No, vLLM runs on Linux or on Windows via WSL, with one or more NVIDIA CUDA GPUs. There is no official support for macOS, AMD GPUs, Apple Silicon, or CPU-only execution. For cross-platform or no-GPU scenarios, Ollama is recommended.

Is Ollama limited to quantized models?

Yes, by default Ollama favors quantized models (Q4_K_M, Q5, Q6) in GGUF format to reduce memory usage and run on modest PCs. This makes 7B or 13B models feasible on consumer GPUs. The trade-off is lower accuracy than FP16 or BF16 models typically used with vLLM.

Can you use multiple GPUs with Ollama or vLLM?

- Ollama supports multiple GPUs, but control is limited and not very transparent.

- vLLM natively handles multi-GPU and multi-node clustering with Ray, with settings like –tensor-parallel-size or CUDA_VISIBLE_DEVICES. This is a strong point for enterprise scalability.

Does vLLM handle long context better than Ollama?

Yes. vLLM supports extended context windows up to 128k tokens, useful for RAG, long document analysis, or applications that require a lot of context. Ollama is more limited. It works well for classic prompts, but does not offer the same context depth.

What are the advantages of local inference over the cloud?

- Privacy: prompts and data remain on your infrastructure, no potential leaks.

- Responsiveness: no network latency or internet dependency.

- Cost control: once you own the hardware, for example an RTX 5090, you do not pay per request like with a cloud API.

- Customization: fine-tuning, system prompts, integration into internal workflows.

Which solution should you choose for professional use?

- Ollama: sufficient for a small team, quick tests, or offline use.

- vLLM: essential for large-scale professional environments, SaaS, internal APIs, research labs, enterprise chatbots, thanks to stability and the ability to serve hundreds of users in parallel.

Can Ollama and vLLM integrate with Open WebUI or other interfaces?

Yes, both expose an OpenAI-compatible API. You can use them with Open WebUI, LangChain, Python scripts, or even no-code apps. Switching backends is a matter of changing the API URL.

How do you limit VRAM consumption with vLLM?

At startup you can set GPU memory usage with:

--gpu-memory-utilization 0.5This caps vLLM at 50 percent of VRAM, useful when you want to keep resources for other applications.

Should you choose FP16, BF16, or a quantized model?

- FP16 or BF16: best generation quality, but heavy VRAM needs

- Quantized, Q4, Q5, Q6: enables larger models on modest GPUs, with a small quality loss. Ollama ships many packaged quantized models, vLLM is more oriented to FP16 or BF16.

What about licensing for Ollama and vLLM?

Both are open source under permissive licenses, MIT or similar. Note that usage rights depend on the LLM model itself, for example LLaMA 3 has usage restrictions.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!