Google Antigravity on Windows 11: Strategic Installation Guide for Agentic Workflows

The emergence of Google Antigravity redefines software development standards by introducing the concept of the agentic IDE. Unlike legacy environments, this…

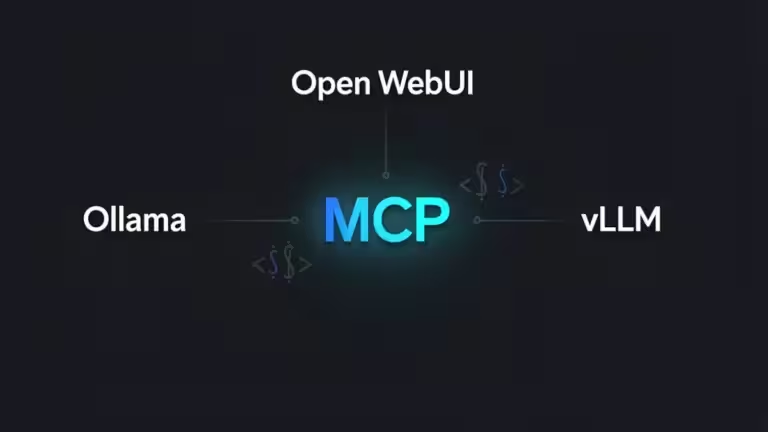

The Model Context Protocol (MCP) is an open standard initiated by Anthropic in November 2024, currently undergoing rapid adoption within the LLM…

AI Inference Economics 2026 defines the real cost structure behind agentic AI systems, long-context scaling, and hyperscaler infrastructure expansion. Between…

This Weekly AI News edition covers January 28 – February 17, 2026, and maps the period through four connected lenses:…

Agentic AI 2026 marks a structural transition from conversational copilots to workflow-executing systems embedded directly into enterprise infrastructure. Hyperscalers are…

The evolution toward AI-assisted development in 2026 marks the end of the “pure executor” coder, giving rise to the agentic engineer…