TPU v6e vs v5e/v5p: How Trillium Delivers Real AI Performance Gains

TPU Trillium, also known as TPU v6e, delivers more than the 4.7 times peak compute uplift Google highlights on the Google Cloud Blog. Real workloads show around 4 times faster dense LLM training, nearly 3 times higher SDXL throughput, and roughly 2.8 to 2.9 times higher LLM inference rates. This article explains how those gains emerge at the architecture, compiler, and pod scale. It also clarifies when v6e is the right choice over v5e or v5p and which tuning steps help teams unlock the expected performance. You can also explore our detailed overview of the architecture in Understanding Google TPU Trillium: How Google’s AI Accelerator Works.

Executive Summary: Real Gains at a Glance

Trillium’s uplift comes from improvements in compute density, memory bandwidth, interconnect behavior, and execution pipelines. Google’s announcement of general availability on the Google Cloud Blog describes 4.7 times BF16 compute and 2 times HBM and ICI bandwidth compared to v5e. More importantly, these architectural changes translate into stable and repeatable performance gains under real workloads, from Llama 2 70B training to SDXL inference.

Inference workloads benefit from improvements in JetStream scheduling and Pathways multi host serving, described in the AI Hypercomputer inference update. Meanwhile, training workloads see higher FLOP utilization thanks to larger MXU tiles and improved XLA kernels.

| Specification | TPU v5e | TPU v5p (incertain*) | TPU v6e (Trillium) |

|---|---|---|---|

| Peak compute per chip (BF16) | ~197 TFLOPs | ~459 TFLOPs* | ~918 TFLOPs |

| HBM capacity per chip | 16 GB | ~95 GB* | 32 GB |

| HBM bandwidth | ~800 GB/s | ~2765 GB/s* | ~1600 GB/s |

| Inter-chip interconnect BW | ~1600 Gbps | ~4800 Gbps* | ~3200 Gbps |

| Typical pod size (chips) | 256 | up to 8960 chips* | 256 |

| Approx. BF16 peak per pod | ~50.6 PFLOPs | ~1.77 EFLOPs* (scaled estimation) | ~234.9 PFLOPs |

| Energy efficiency | baseline | high bandwidth, higher power* | improved perf/W (public GA claims) |

| Performance / cost (relative) | cost-efficient | premium tier* | 1.8×–2.5× perf/$ uplift (typical workloads) |

*Données incertaines ou partielles : Les caractéristiques du TPU v5p ne sont pas toutes documentées publiquement dans le détail (HBM capacity, HBM bandwidth, interconnect, pod size complet). Les valeurs indiquées ici proviennent de sources secondaires, d’analyses industrielles ou d’observations indirectes, et peuvent varier selon les configurations réelles ou non divulguées. Les chiffres sont fournis à titre d’indication comparative uniquement et ne doivent pas être considérés comme des valeurs exactes ou contractuelles.

These gains also translate into 1.8 to 2.5 times better performance per dollar depending on workload and pricing, making v6e compelling for both large scale pretraining and high traffic inference deployments.

Why TPU v6e Is Faster: Architecture Changes That Matter

Trillium’s uplift reflects deep hardware changes rather than incremental tuning. Teams migrating from v5e or v5p benefit from improvements across numerical throughput, memory behavior, interconnect performance, and embedding workloads.

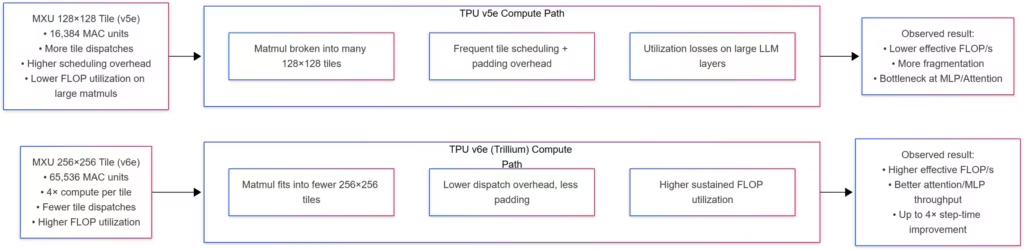

MXU Expansion: 256×256 Tiling and Compute Scaling

Trillium doubles MXU tile size from 128×128 to 256×256, significantly improving FLOP utilization during dense matrix multiplications. This improves step time for transformer workloads. Google highlights the MXU redesign in its Trillium introduction article, explaining how it contributes directly to the 4 times reduction in step time seen in dense LLM training.

(click to enlarge)

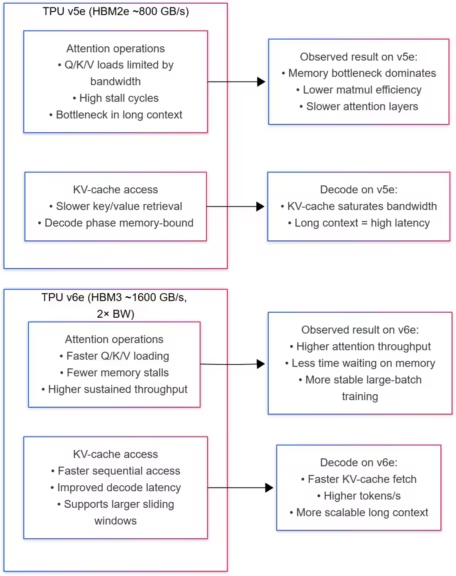

HBM3 Bandwidth and Capacity: Memory Pressure and Batch Size

Each v6e chip features 32 GB of HBM3 with 1600 GB per second bandwidth, double that of v5e. This reduces attention stalls and improves KV cache stability during inference. Google outlines HBM behavior in its TPU v6e documentation, highlighting how bandwidth improvements benefit attention-heavy layers.

How HBM Bandwidth Impacts Attention and KV-Cache Performance

An attention operation is the mechanism that allows a transformer model to determine which parts of a sequence are relevant to the current token. It does this by loading and processing three matrices, Q (queries), K (keys), and V (values), then computing their interactions through large matrix multiplications. These operations are heavily memory-bound, meaning their speed is limited not by the available compute, but by how fast Q, K, and V can be fetched from high-bandwidth memory.

The KV-cache plays a critical role during the decode phase, when a model generates tokens one by one. Instead of recomputing all keys and values for the previous tokens, the model stores them in a cache and reuses them at each step. This drastically improves efficiency, but it also turns generation into a memory-intensive process. As sequence length grows, the KV-cache becomes a bottleneck because each new token requires accessing more stored keys and values.

This is where TPU v6e’s doubled HBM bandwidth makes a measurable difference. Moving from roughly 800 GB/s on v5e to about 1600 GB/s on v6e reduces stall cycles, the moments when compute units wait for data. Attention layers load Q/K/V faster and maintain stability even with long sliding-window configurations, while KV-cache reads during decoding become significantly faster. In practical terms, this means higher sustained throughput, more tokens per second, and lower latency, especially for long-context models and high-traffic inference workloads.

Interconnect and All Reduce: Scaling Across Pods

Trillium doubles inter chip interconnect bandwidth, reducing synchronization overhead during gradient all reduce operations. According to the AI Hypercomputer inference update, higher interconnect bandwidth also improves stability at high pod counts, which is crucial for multi pod LLM pretraining.

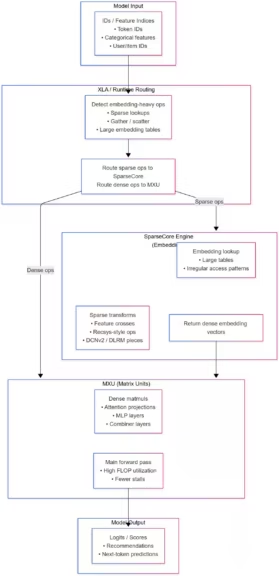

SparseCore Improvements: Embedding and Recsys Workloads

The new SparseCore significantly accelerates embedding-heavy workloads. Models such as DLRM DCNv2 benefit from faster scatter gather patterns. Google references sharper SparseCore performance in its training solutions documentation, which helps explain why some recsys pipelines see improvements approaching 5 times.

(Click to enlarge)

Training Performance: Dense and MoE LLMs

Large language model training is where Trillium’s improvements are most visible, with gains driven by wider MXU tiles, higher HBM bandwidth, and improved interconnect behavior.

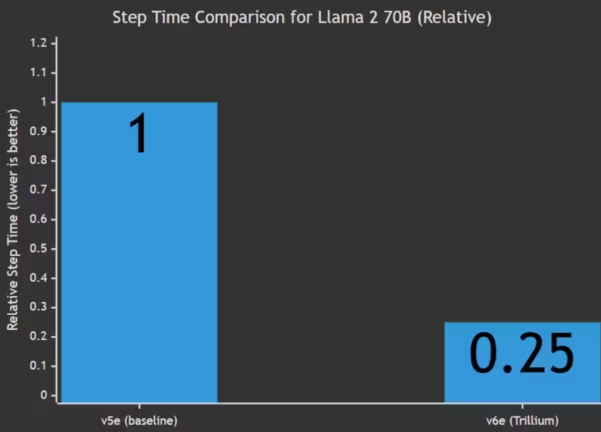

Dense LLMs (Llama 2, Llama 3, GPT class): 4× Faster Step Time

Using MaxText as a reference implementation, dense LLMs achieve about 4 times faster step time on Trillium compared to v5e. Better FLOP utilization and reduced attention stalls help explain these improvements. Google’s MLPerf benchmark analysis confirms similar uplift trends.

*This chart compares the relative step time of Llama 2 70B when trained on TPU v5e versus TPU v6e. Values are normalized to v5e (1.0). A score of 0.25 on v6e indicates a four-fold reduction in step time, meaning each training step completes roughly four times faster thanks to Trillium’s larger MXU tiles, doubled HBM bandwidth, and improved interconnect performance.

In large language model training, step time refers to the duration required to complete a single forward and backward pass over a batch of data. It captures all the computation needed to update the model’s parameters, including matrix multiplications, attention operations, gradient calculations, and inter-device communication. Because step time directly determines how long it takes to train a model, even small improvements translate into substantial reductions in total training time and cost. On TPUs, step time is influenced not only by raw compute throughput, but also by memory bandwidth, interconnect efficiency, and compiler optimizations. As a result, architectural changes in TPU v6e—especially the 256×256 MXU tiles and the doubled HBM bandwidth—lead to much higher FLOP utilization and significantly shorter step times compared to TPU v5e.

Mixture of Experts: Why MoE Scales Differently

MoE models reach around 3.8 times faster step time. Router balance and expert distribution prevent fully matching dense model gains, but SparseCore changes and faster interconnect reduce routing instability.

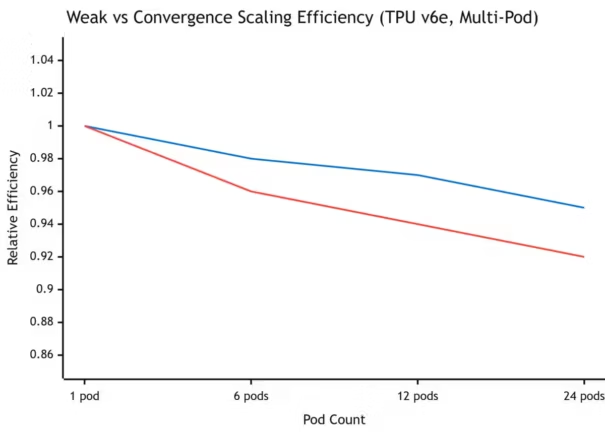

Scaling Efficiency at Thousands of Chips

Trillium maintains 94 to 99 percent weak scaling efficiency at multi pod scale. Google’s AI Hypercomputer update explains how integrated scheduling and faster interconnect bandwidth help maintain scaling stability even beyond 3,000 chips.

Weak scaling measures how well training throughput increases as more TPU chips are added. TPU v6e maintains between 94% and 99% weak scaling even across multi-pod clusters. Convergence scaling reflects how the total number of training steps changes with larger batch sizes. It remains slightly lower but still highly efficient, indicating that v6e preserves training stability even at extreme cluster sizes.

Cost to Convergence and Perf per Dollar

v6e improves performance per dollar by 1.8 to 2.5 times depending on workload. These gains reflect more efficient convergence, higher throughput, and lower energy consumption at large batch sizes. Teams training large LLMs benefit most because convergence time dominates cost.

Inference Performance: LLM Serving at Scale

Trillium improves both throughput and latency for LLM inference, particularly when using JetStream and Pathways.

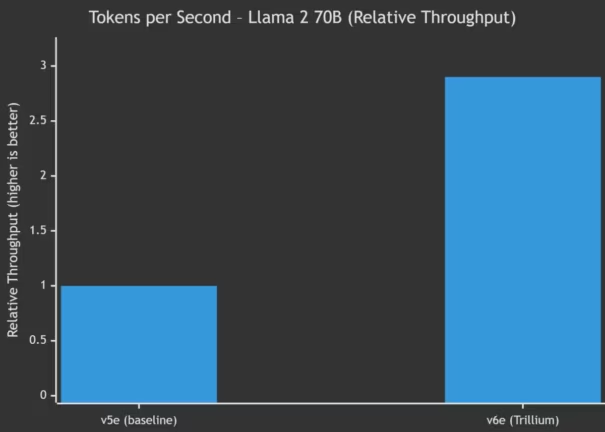

Throughput: 2.8 to 2.9× Higher on Llama 2 70B

JetStream’s continuous batching and sliding window attention methods raise throughput significantly. Google details these runtime improvements in the AI Hypercomputer inference update, which explains how both hardware and scheduling contribute to higher token throughput.

TPU v6e achieves around 2.8× to 2.9× higher token throughput than v5e for Llama 2 70B inference. This improvement comes from doubled HBM bandwidth, a faster interconnect, larger MXU tiles, and JetStream’s optimized batching and scheduling pipeline.

Latency: Prefill and Decode with Pathways

Pathways splits prefill and decode across TPU slices, reducing time to first token by up to 7 times and decode latency by about 3 times. These gains matter for chat interfaces, real time assistants, and interactive workloads where responsiveness is essential.

KV Cache, Sliding Window, and Continuous Batching

Higher HBM bandwidth improves KV cache performance under load. Sliding window attention reduces memory pressure for long context models, and JetStream increases throughput by merging varied requests into unified batches.

Diffusion Workloads: SDXL and MaxDiffusion

Diffusion pipelines benefit from Trillium’s improved compute throughput and doubled memory bandwidth.

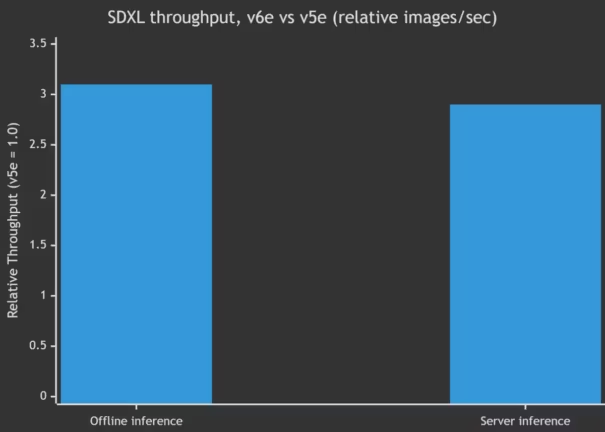

SDXL Offline and Server Inference vs v5e

Google’s MaxDiffusion framework, available on GitHub, pairs with v6e to deliver 3.1 times faster SDXL offline inference and nearly 3 times faster server inference throughput. MLPerf v5.0 results referenced on the MLCommons website show around 3.5 times uplift in optimized conditions.

*This chart shows the relative throughput improvement of TPU v6e over v5e when running SDXL inference. Values are normalized to v5e (1.0). In offline mode, TPU v6e delivers roughly 3.1 times more images per second, while server-mode inference reaches about 2.9 times higher throughput. These gains reflect improvements in HBM bandwidth, MXU tile size, and end-to-end diffusion optimizations within the MaxDiffusion pipeline.

Stable Diffusion XL is a model that combines heavy memory access patterns with large convolutional and transformer components, making it especially sensitive to bandwidth constraints and compute utilization. On TPU v5e, SDXL inference is often limited by the rate at which feature maps and attention tensors can be moved through the memory hierarchy. TPU v6e addresses these bottlenecks through doubled HBM bandwidth, larger 256×256 MXU tiles for more efficient matmul execution, and a faster interconnect for multi-stage diffusion pipelines. In offline workloads, these architectural changes translate into about 3.1 times more images per second, while server-mode inference—typically bound by scheduling, batching, and continuous serving constraints—still achieves close to a 2.9× improvement. Together, these gains illustrate how Trillium significantly reduces end-to-end latency and increases throughput for modern diffusion models.

MLPerf v5.0 Results: What They Indicate

MLPerf captures Trillium’s strengths under optimized conditions but does not represent long context workloads or multi pod inference. Developers should treat MLPerf as a baseline rather than a complete predictor of production behavior.

Practical Considerations: Batch Size, Chip Count, and I/O

Smaller batch configurations remain memory bound, while larger ones become interconnect or I/O bound. Profiling helps expose these inflection points and guides scaling decisions.

When to Use v6e vs v5e vs v5p

Choosing hardware depends on workload requirements and operational constraints.

Where v6e Wins: LLMs, Diffusion, MoE, Perf per Dollar

Trillium outperforms previous generations across dense LLM training, diffusion workloads, long context inference, and MoE routing. Gains stem from balanced improvements across compute, memory, and interconnect subsystems.

Where v5p Still Makes Sense

v5p remains viable for models requiring exceptional memory bandwidth and for architectures deeply tuned to v5p’s behavior. Some large models may need retuning to realize full v6e performance.

v5e for Cost Constrained or Legacy Workloads

v5e continues to serve legacy deployments and cost sensitive projects. Its lower price and wide availability make it appropriate for smaller scale inference or research use cases.

Implementation Notes for Developers

Practical considerations often determine whether teams realize v6e’s promised uplift.

Frameworks: MaxText, PyTorch XLA, MaxDiffusion

MaxText is the most efficient framework for LLM workloads on TPUs. PyTorch XLA support is strong, but developers must avoid dynamic shapes that trigger XLA recompilation. MaxDiffusion on GitHub is the recommended choice for SDXL pipelines. OpenXLA’s documentation on GitHub clarifies compilation pathways and helps diagnose bottlenecks.

Pathways and Multi Host Serving: Common Pitfalls

Pathways requires careful slice mapping, KV cache transfer control, and topology alignment. Google’s GKE tutorial on multi host model serving outlines best practices to avoid degraded latency and uneven load distribution.

Migration Checklist: v5e or v5p to v6e

Migration involves adjusting batch sizes, enabling sliding window attention, enabling INT8 inference paths when compatible, and tuning MaxText for larger MXU tiles. Profiling helps expose kernel level bottlenecks early, preventing scaling instability at multi pod size.

2025 Updates and Evolving Benchmarks

Trillium’s performance profile continues to improve as Google updates its compiler stack, frameworks, and serving runtimes. Since mid 2024, the company has released a series of enhancements to XLA kernels, JetStream scheduling, MaxDiffusion pipelines, and SparseCore behavior. These updates narrow the gap between peak and realized performance across LLM training, inference, and diffusion workloads.

Google’s early MLPerf submissions, summarized in the TPU v6e MLPerf benchmarks, confirm efficiency gains for Llama class models, with improved utilization and more stable pipelines. For inference, continued updates to the AI Hypercomputer runtime, highlighted in Google’s article on inference updates for TPU and GPU, improve both latency and throughput by refining prefill and decode scheduling.

These updates are particularly relevant for long context models, MoE architectures, and diffusion workloads such as SDXL, Flux, and SD3, where KV cache pressure, routing patterns, and bandwidth utilization traditionally limit scaling. As frameworks like MaxText and MaxDiffusion evolve and compiler optimizations stabilize, teams should expect incremental but meaningful gains throughout 2025. Regular profiling remains essential because many improvements appear only at large batch sizes, multi host configurations, or multi pod scales.

Summary and Forward View

TPU Trillium offers a substantial performance upgrade over v5e and v5p, with improvements across MXU design, HBM bandwidth, interconnect throughput, and SparseCore efficiency that consistently translate into real gains for dense and MoE LLMs, long context inference, and SDXL pipelines. These improvements also enhance performance per dollar, making v6e one of Google’s most competitive accelerators for both training at scale and high demand inference.

Teams evaluating 2025 hardware strategy should benchmark their workloads directly on v6e, focusing on FLOP utilization, memory pressure, interconnect limits, and KV cache behavior. Profiling reveals whether enabling INT8 inference paths, adjusting batch sizes, or tuning MaxText configuration can unlock additional gains. With Google deploying Trillium broadly across its AI Hypercomputer platform, the surrounding ecosystem will continue to mature, and new kernels, routing strategies, and serving optimizations will likely extend Trillium’s advantage throughout 2025.

Also read : Understanding Google TPU Trillium: How Google’s AI Accelerator Works

Sources and references

Companies

- Google Cloud, Introducing Trillium 6th Gen TPUs (2024), https://cloud.google.com/blog/products/compute/introducing-trillium-6th-gen-tpus

- Google Cloud, Trillium TPU is GA (2024), https://cloud.google.com/blog/products/compute/trillium-tpu-is-ga

- Google Cloud, AI Hypercomputer inference updates (2024), https://cloud.google.com/blog/products/compute/ai-hypercomputer-inference-updates-for-google-cloud-tpu-and-gpu

- Google Cloud, TPU v6e documentation, https://cloud.google.com/tpu/docs/v6e

- Google Cloud, MLPerf v4.1 training benchmarks (2024), https://cloud.google.com/blog/products/compute/trillium-mlperf-41-training-benchmarks

Institutions

- MLCommons, MLPerf Inference v5.0, https://mlcommons.org/en/inference-edge-50/

Frameworks and tools

- MaxText, https://github.com/google/maxtext

- MaxDiffusion, https://github.com/google/maxdiffusion

- OpenXLA project, https://github.com/openxla/openxla

- vLLM Project, https://github.com/vllm-project/vllm

- vLLM TPU backend, https://github.com/vllm-project/tpu-inference

Tutorials and guides

- Google Cloud, Multi host model serving tutorial, https://cloud.google.com/kubernetes-engine/docs/tutorials/multi-host-model-serving

- Google Cloud, Llama 2 multi host serving tutorial, https://docs.cloud.google.com/kubernetes-engine/docs/tutorials/serve-multihost-tpu-jetstream

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!