vLLM vs TensorRT-LLM: Inference Runtime Guide

Developers comparing vLLM and TensorRT-LLM are usually evaluating how each runtime handles scheduling, KV cache efficiency, quantization, GPU utilization, and production deployment. This guide provides a concise, architecture-aware overview of both engines, using verified documentation such as the official vLLM scheduling design notes and NVIDIA’s TensorRT-LLM developer resources. It highlights the practical differences that matter for real workloads running on H100, A100, and ROCm clusters.

As of late 2025, NVIDIA’s new RTX 5000 series, including the 32 GB RTX 5090, brings datacenter-level inference capabilities to consumer workstations. This shift makes the comparison between vLLM and TensorRT-LLM increasingly relevant not only for server deployments but also for developers running large LLMs locally on high-end RTX hardware.

For ongoing updates on inference runtimes, GPU kernels, quantization formats, and developer tooling, see our Latest AI Developer News Coverage.

Quick comparison: where the runtimes diverge

vLLM emphasizes flexible dynamic batching, a paged KV cache, and broad hardware support including AMD ROCm. TensorRT-LLM focuses on maximum efficiency on NVIDIA GPUs, using fused kernels, optimized FP8/INT8 paths, and deep integration with Triton, as documented in the TensorRT-LLM user guide.

| Dimension | vLLM | TensorRT-LLM |

|---|---|---|

| Batching model | Multi-step scheduling, dynamic batching, chunked prefill | In-flight batching, separate encoder/decoder batching |

| KV cache architecture | Paged KV cache with copy-on-write prefix sharing | Dual KV cache (self-attention + cross-attention) |

| Quantization modes | GPTQ, AWQ, bitsandbytes, FP8 via ROCm Quark, INT8/INT4 (model-dependent) | FP8, INT8, INT4 integrated into engine build; Tensor Core optimized |

| Hardware support | NVIDIA + native AMD ROCm (MI-series), multi-vendor clusters | Optimized for NVIDIA H100/A100, requires TensorRT + CUDA stack |

| CUDA Graphs | Supported but limited by batch variability | Strong integration via static engines and fused kernels |

| Multimodal / enc-dec | Supported but still maturing, varies by operator | Highly optimized, fused cross-attention, strong enc-dec performance |

| Deployment stack | Lightweight server, Kubernetes friendly, multi-backend serving | Deep integration with Triton Inference Server + Kubernetes autoscaling |

| Best suited workloads | Long-context, RAG, heterogeneous clusters, cost-optimized inference | Latency-critical chat, translation, multimodal, NVIDIA-centric production |

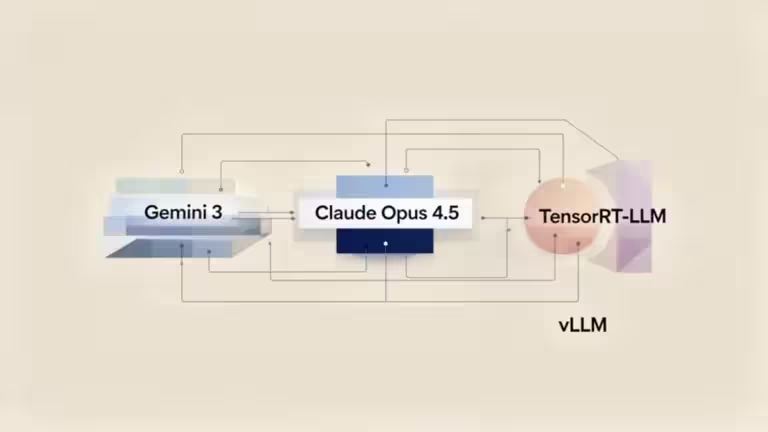

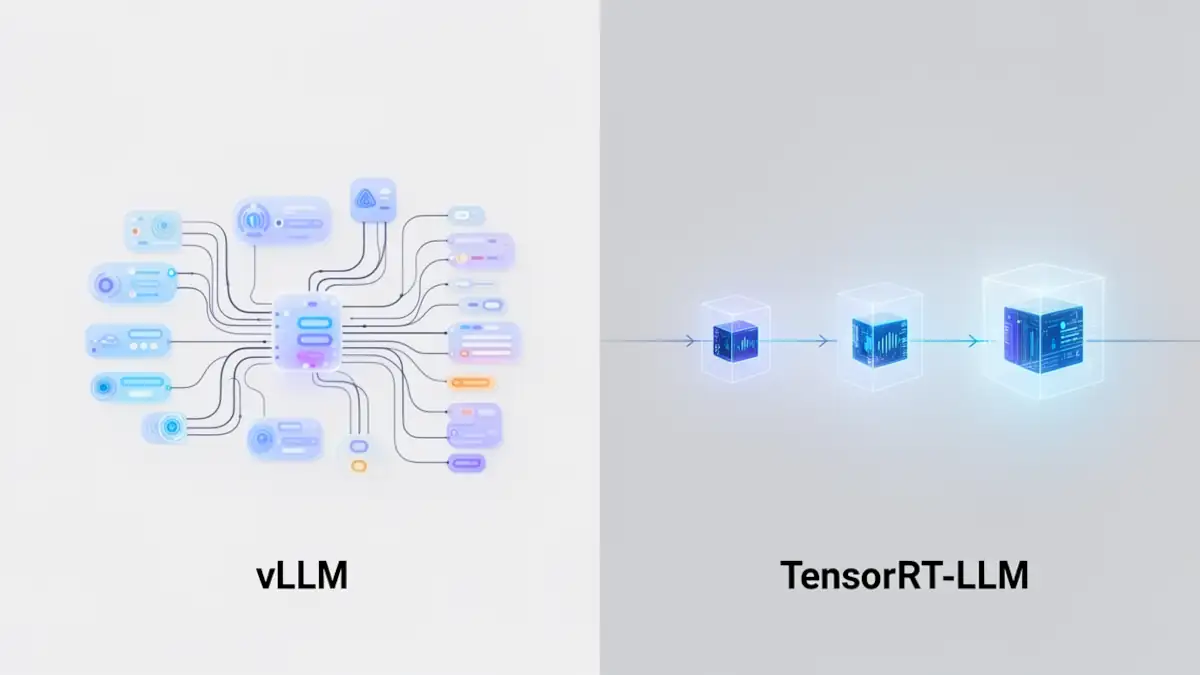

Core differences in one view

vLLM handles workloads with variable prompt lengths and long-context RAG pipelines gracefully thanks to its paged KV cache and multi-step scheduler. TensorRT-LLM, built on TensorRT engines, delivers stable latency and high throughput on NVIDIA GPUs through graph execution and operator fusion.

When to choose vLLM or TensorRT-LLM

Choose vLLM for mixed hardware fleets, long-context inference, and flexibility across models. Choose TensorRT-LLM for latency-sensitive chat workloads, encoder–decoder applications, and NVIDIA-centric production environments relying on Triton.

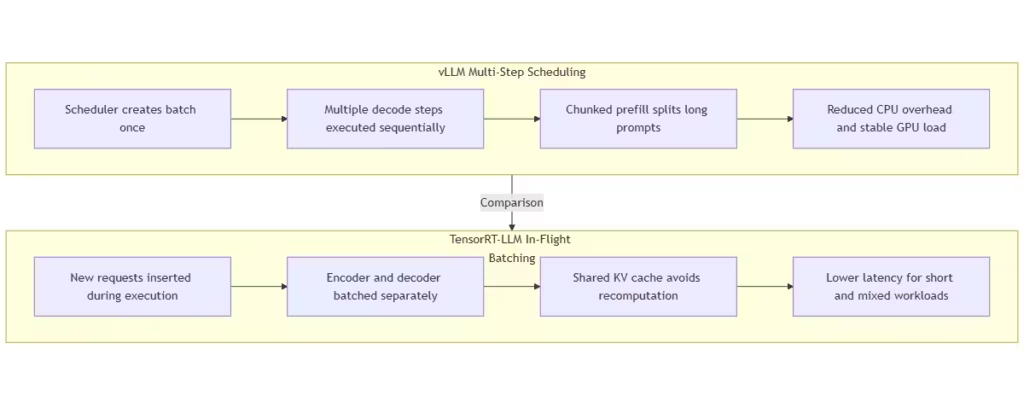

Scheduling and batching: how each runtime maximizes GPU efficiency

vLLM multi-step scheduling and chunked prefill

According to the vLLM architecture documentation, multi-step scheduling reduces CPU overhead by planning multiple decode iterations at once. Chunked prefill divides long inputs into segments, allowing parallelism and better GPU utilization for long-context tasks and RAG pipelines.

TensorRT-LLM in-flight batching and encoder–decoder flow

The TensorRT-LLM batching guide explains how in-flight batching admits new requests during execution without waiting for batch synchronization. Combined with separate encoder and decoder batching using shared KV caches, it improves responsiveness for translation, summarization, and VLM workloads.

KV cache architecture and its performance impact

vLLM paged KV cache with prefix sharing

vLLM uses a paged KV cache that allows efficient copy-on-write prefix sharing. This design, highlighted in the KV cache documentation, reduces memory duplication and improves throughput for workloads dominated by repeated prompts, such as chat and agentic systems.

TensorRT-LLM dual-paged KV cache for encoder–decoder models

TensorRT-LLM maintains separate self-attention and cross-attention KV caches, as detailed in the attention mechanism documentation. This structure enables more efficient use of encoder states and reduces redundant computation in translation and multimodal flows.

Quantization strategies: FP16, FP8, INT8, INT4

vLLM quantization options

vLLM supports GPTQ, AWQ, bitsandbytes, and FP8 execution via AMD’s ROCm Quark stack, described in the ROCm FP8 documentation. Its flexibility makes it suitable for heterogeneous clusters, though operator coverage varies by hardware.

TensorRT-LLM quantization with Tensor Core acceleration

TensorRT-LLM integrates FP8, INT8, and INT4 directly into its engine-building process. NVIDIA’s quantization guide explains how calibration and kernel fusion enable predictable improvements in latency and throughput on H100 and A100 GPUs.

New: INT4 and FP8 acceleration on RTX 5000

The RTX 5000 series introduces a new generation of FP8 and INT4 acceleration, enabling substantial speed-ups for Transformer workloads. TensorRT-LLM fully exploits these kernels through its engine-building process, delivering consistent improvements in token throughput and latency. vLLM supports these formats as well, but TensorRT-LLM benefits more directly from NVIDIA’s hardware-specific fusion and graph optimizations, especially on Blackwell GPUs such as the RTX 5090 and 5080.

If you want a deeper technical look at emerging precision formats, you can read our explainer on DFloat11, a lossless BF16 compression technique designed to accelerate LLM inference without sacrificing accuracy.

Handling encoder–decoder and multimodal workloads

TensorRT-LLM advantages in encoder–decoder workloads

TensorRT-LLM excels in encoder–decoder batching and fused cross-attention kernels, making it well suited for translation, speech processing, and VLM pipelines. These capabilities are described in NVIDIA’s encoder–decoder examples.

vLLM’s developing multimodal support

vLLM continues to expand multimodal and encoder–decoder support, but kernel optimization on NVIDIA hardware is less mature. Its roadmap in the vLLM GitHub issues highlights ongoing improvements.

GPU utilization on H100, A100, and ROCm clusters

NVIDIA-focused performance behavior

TensorRT-LLM benefits from fused kernels, CUDA Graph integration, and FP8 acceleration, enabling high token throughput and stable latency on H100 and A100. NVIDIA’s performance guides emphasize these optimizations.

vLLM on AMD ROCm and multi-vendor clusters

vLLM supports AMD Instinct GPUs natively through ROCm. As documented in the ROCm LLM optimization notes, FP8 execution via Quark improves efficiency on MI-series hardware. This makes vLLM appealing for hybrid clusters and cost-sensitive deployments.

CUDA Graphs and kernel execution behavior

vLLM graph capture constraints and scheduling considerations

vLLM supports CUDA Graph capture for stable batch geometries, which reduces CPU overhead and improves decode latency. However, as noted in the vLLM performance discussions, workloads with variable sequence lengths limit graph reuse. When batch structures shift, vLLM reverts to standard kernel launches, affecting predictability.

TensorRT-LLM engine autotuning and fused operator consistency

According to NVIDIA’s TensorRT-LLM engine overview, TensorRT-LLM compiles optimized static graphs with fused attention kernels and preselected memory layouts. These engines integrate naturally with CUDA Graphs, delivering consistent execution patterns for short prompts, translation workloads, and multimodal pipelines.

GPU utilization on RTX 5000 series (RTX 5090, 5080, 5070 …)

The RTX 5000 generation significantly changes local inference performance. With 32 GB of VRAM on the RTX 5090 and next-generation Blackwell Tensor Cores, developers can run larger models locally, benefit from faster FP8 execution, and achieve lower latency under load.

vLLM benefits from the increased memory capacity, enabling longer context windows and more efficient paged KV cache reuse. TensorRT-LLM gains even more from this architecture, since Blackwell’s upgraded Tensor Cores and sparsity-aware INT4 kernels align closely with TensorRT’s fused-operator design. As a result, TensorRT-LLM on RTX 5000 GPUs often delivers the highest single-GPU throughput for optimized models.

The RTX 5090 32 GB dramatically improves local LLM capabilities, but it still cannot host a full 70B model, even with aggressive quantization. With TensorRT-LLM and the NVFP4 format, the largest dense model that fits on a single 32 GB GPU is currently around 49B parameters, assuming a short context and batch size of one. NVFP4 reduces VRAM usage to roughly 0.5 GB per billion parameters, which allows 30B–49B models to run locally while leaving just enough capacity for the KV cache and runtime overhead. In practice, 8B–32B models remain the sweet spot on RTX 5090 hardware, benefiting the most from Blackwell’s upgraded Tensor Cores and INT4/NVFP4 acceleration.

At the time of writing, NVFP4 is not exclusive to TensorRT-LLM. TensorRT-LLM offers the most mature and stable NVFP4 support on RTX 5090 and other Blackwell GPUs, with native handling of NVFP4 weights and activations through the TensorRT Model Optimizer. vLLM provides early and conditional NVFP4 support for specific models, although compatibility is still evolving and not universally available across architectures. Other frameworks are also progressing: SGLang has NVFP4 support planned, while llama.cpp does not yet support NVFP4 natively, with open discussions regarding kernel and conversion support. NVIDIA’s tooling, including the TensorRT Model Optimizer and LLM Compressor, can export NVFP4-quantized checkpoints compatible with multiple ecosystems, but TensorRT-LLM remains the most performant and reliable choice on RTX 5090 as of today.

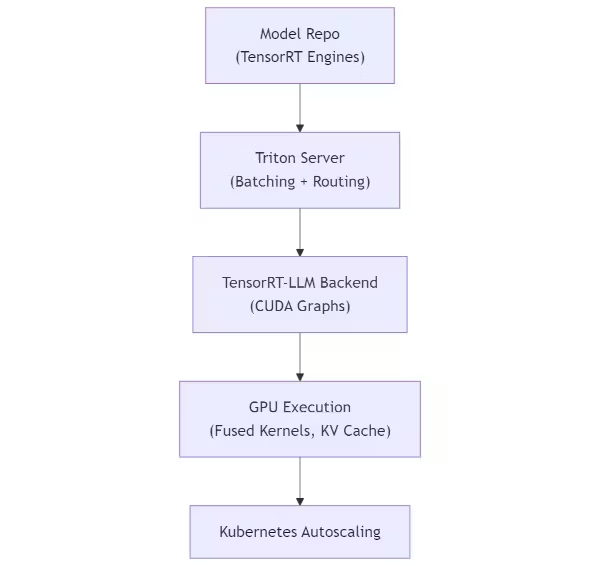

Deployment strategies with Triton, Kubernetes, and production MLOps

TensorRT-LLM with Triton Inference Server

The NVIDIA Triton documentation describes how Triton manages model repositories, batching, and GPU scheduling. TensorRT-LLM connects to Triton seamlessly, enabling production-grade autoscaling via Kubernetes and rich observability through Prometheus and Grafana. This integration reduces operational overhead for teams standardizing on NVIDIA infrastructure.

Local inference on RTX 5000 workstations

The arrival of the RTX 5000 series makes local LLM inference more viable than ever. Developers running models on RTX 5090 or 5080 GPUs can replicate near-production behavior, experiment with quantization, and test scheduling performance without needing a datacenter cluster.

vLLM is ideal for multi-model iteration, rapid prototyping, long-context RAG workflows, and development environments where flexibility matters more than maximum throughput. Its paged KV cache benefits directly from the large VRAM capacity of the RTX 5090.

If you want to experiment with vLLM locally on a workstation or laptop, you can follow our step-by-step guide on installing vLLM with Docker Compose, compatible with Linux and Windows WSL2.

TensorRT-LLM, however, delivers the highest performance for a single optimized model, making it the preferred choice for engineers who want to prototype a production-ready engine locally. With FP8 and INT4 acceleration on RTX 5000 hardware, TensorRT-LLM produces latency and throughput characteristics that closely match Triton deployments on NVIDIA datacenter GPUs.

This duality makes RTX 5000 workstations a powerful environment to evaluate and refine inference pipelines before scaling to the cloud.

Also read: NVFP4 vs FP8 vs BF16 vs MXFP4: comparison of low-precision formats for AI

vLLM in Kubernetes and multi-backend environments

vLLM’s lightweight server integrates cleanly with Kubernetes. Developers can combine vLLM with other inference backends, route traffic through service meshes, and scale horizontally with standard deployment patterns. Its flexibility is documented throughout the vLLM GitHub repository, making it suitable for hybrid-cloud or multi-vendor GPU fleets.

To illustrate this flexibility, developers can spin up a fully compatible OpenAI API server locally in seconds. Unlike runtimes requiring complex compilation steps, vLLM allows you to serve a model immediately for rapid prototyping:

# Quick start: Launching an OpenAI-compatible vLLM server

docker run --runtime nvidia --gpus all

--ipc=host

-v ~/.cache/huggingface:/root/.cache/huggingface

-p 8000:8000

vllm/vllm-openai:latest

--model "mistralai/Mistral-7B-Instruct-v0.3"Benchmarking methodology for real workloads

Prompt mixes and workload modeling

Realistic benchmarking requires more than static throughput tests. According to best practices, evaluations should include short chat queries, long-context RAG prompts, batch summarization, and quantized inference. Mixed QPS patterns reveal scheduler reactivity and KV cache behavior under shifting loads.

Metrics: throughput, TTFT, TPOT, and stability

Throughput remains a core metric, but time-to-first-token and time-per-output-token better reflect user-perceived latency. Stability at p95 and p99 under load indicates scheduler efficiency and GPU utilization balance. The SqueezeBits benchmarking work illustrates how quantization modes influence latency and tail behavior.

Decision matrix for selecting the right inference runtime

Criteria by workload category

vLLM is best for long-context inference, retrieval-augmented workloads, and services with high prompt diversity. Its paged KV cache and multi-step scheduling offer good stability when context lengths fluctuate.

TensorRT-LLM is preferred for encoder–decoder tasks, multimodal workloads, and latency-sensitive applications. Its fused kernels, optimized quantization paths, and in-flight batching provide consistent advantage on NVIDIA GPUs.

Criteria by hardware and cluster design

TensorRT-LLM delivers maximum value on H100 and A100 clusters, where FP8 acceleration, CUDA Graphs, and fused operators yield substantial performance gains. vLLM is ideal for organizations using heterogeneous clusters or running AMD Instinct GPUs, taking advantage of ROCm and Quark FP8 execution as documented in AMD’s official ROCm guides.

The broader outlook: how inference runtimes will evolve

Inference runtimes are converging toward longer context windows, more aggressive quantization (especially FP8), and deeper multimodal integration. Both vLLM and TensorRT-LLM are investing in improved attention kernels, better memory efficiency, and more robust deployment workflows. Increased support for tool-use models and distributed execution frameworks will further shape runtime evolution. Teams should benchmark regularly with realistic workloads and consider total cost per million tokens rather than raw tokens per second.

| Scenario Type | vLLM Recommended When… | TensorRT-LLM Recommended When… |

|---|---|---|

| Workload: Long-context / RAG | You need efficient paged KV cache, chunked prefill, flexible scheduling. | Less optimal for long prompts unless fully NVIDIA optimized. |

| Workload: Chat / Short prompts | You want cross-hardware flexibility and simpler deployment. | Low-latency decode is critical, benefits from fused kernels + CUDA Graphs. |

| Workload: Encoder–Decoder (NMT, speech, VLM) | Supported but still maturing for some operators. | Highly optimized encoder/decoder batching + fused cross-attention. |

| Workload: Multimodal pipelines | Early-stage support, evolving operator coverage. | Strong VLM performance, optimized cross-attention and multi-stage engines. |

| Hardware: NVIDIA H100/A100 | Works but not as optimized at kernel level. | Peak performance with FP8, fused kernels, Tensor Core acceleration. |

| Hardware: AMD ROCm / MI-series | Fully supported, FP8 via ROCm Quark, ideal for heterogeneous fleets. | Not supported, restricted to NVIDIA CUDA stack. |

| Hardware: Mixed vendor clusters | Best choice for unified serving across GPU vendors. | Not suitable for mixed environments. |

| Deployment: Lightweight / flexible | Simple to run, Kubernetes friendly, good for multi-backend setups. | Requires Triton for optimal results, heavier but production-focused. |

| Deployment: Enterprise MLOps | Useful for hybrid setups, custom routing, or cost-optimized clusters. | Tight Triton integration offers autoscaling, monitoring, and stable runtimes. |

| Primary Goal: Throughput | Strong for long-context workloads and varied prompt shapes. | Best for NVIDIA-centric batch workloads with consistent shapes. |

| Primary Goal: Latency | Good but depends on batch variability and scheduling patterns. | Industry-leading on NVIDIA GPUs thanks to fused kernels + CUDA Graphs. |

| Hardware: RTX 5000 (5090/5080) | Best for flexible multi-model testing, long-context RAG, rapid iteration. | Best for maximum single-model throughput using Blackwell FP8/INT4 acceleration. |

The introduction of the RTX 5000 generation accelerates a shift toward local inference becoming a first-class deployment target. With up to 32 GB of VRAM and Blackwell Tensor Core improvements, consumer GPUs now offer enough memory and throughput to run optimized LLMs locally with performance previously limited to smaller datacenter nodes. This trend will continue pushing runtimes like vLLM and TensorRT-LLM to refine their support for consumer hardware, hybrid clusters, and mixed local-cloud workflows.

Developers interested in comparing GPU and TPU inference trends can also explore our analysis of TPU v6e vs v5e/v5p and what Google’s Trillium architecture means for future large-scale inference.

Sources and references

Companies

- NVIDIA TensorRT-LLM documentation, engine design, batching guides: https://github.com/NVIDIA/TensorRT-LLM

- NVIDIA Triton Inference Server documentation: https://github.com/triton-inference-server/server

- NVIDIA developer blogs and technical posts: https://developer.nvidia.com/blog

- AMD ROCm official documentation including FP8 and kernel coverage: https://rocm.docs.amd.com

- AMD Quark FP8 execution stack: https://rocm.docs.amd.com/projects/rccl/en/latest/

Official sources and project documentation

- vLLM official documentation (scheduler, KV cache, multimodal roadmap): https://docs.vllm.ai

- vLLM GitHub repository and discussions: https://github.com/vllm-project/vllm

- TensorRT-LLM GitHub repository including quantization, engine building, encoder–decoder examples: https://github.com/NVIDIA/TensorRT-LLM

- SqueezeBits quantization and benchmarking resources: https://github.com/SqueezeAILab/SqueezeBits

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!