Why AI Models Are Slower in 2025: Inside the Compute Bottleneck

AI models like ChatGPT, Claude, and Gemini feel slower in 2025 because cloud providers are running out of the GPU capacity required for real-time inference. This global shortage affects H100, H200, and Blackwell-class accelerators, which forces queueing, throttling, and strict prioritization of enterprise workloads. This article details why the slowdown occurs, how modern architectures increase compute pressure, and what to expect as shortages continue into 2026.

What Is Really Behind the Slowdown in 2025

Many users first notice longer response times, pauses, or degraded interactivity across AI services. These effects appear even with a stable connection and modern hardware because the bottleneck is not local, it is entirely cloud-side. The core issue is that the demand for GPU compute is now far higher than the available supply in major data centers.

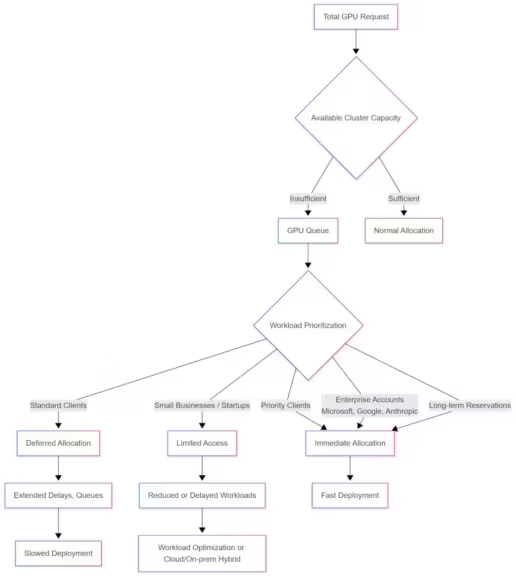

Large-scale inference requires immediate access to high-end accelerators. When several thousand requests arrive concurrently, cloud clusters cannot serve them all at once. Providers must queue, defer, or throttle workloads, and consumer tiers are the first to feel the impact during peak hours. The result is inconsistent responsiveness and the perception that AI models are “slower” than before.

(Clic to enlarge)

The underlying cause is straightforward, the volume and complexity of queries have grown faster than the GPU resources needed to process them. This mismatch drives the slowdown observed across ChatGPT, Claude, Gemini, and other major systems.

How Cloud Congestion Drives AI Latency

Behind every prompt, cloud platforms must allocate GPUs to process the request. When inference clusters reach their limits, performance drops as the system delays scheduling or reduces throughput to maintain overall stability. This congestion directly affects token generation speed, responsiveness, and interaction flow.

This dynamic is closely related to the broader compute crisis described in GPU Shortage: Why Data Centers Are Slowing Down in 2025. For a deeper look at how providers attempt to absorb these spikes, our piece OpenAI scales up: toward a global multi-cloud AI infrastructure examines the shift toward distributed multi-cloud deployments.

How Internal GPU Queueing Works

Inference runs on shared pools of advanced accelerators. When these pools are saturated, incoming requests enter internal queues, adding several seconds or more before processing begins. This queueing happens silently in the background. For developers and researchers, it appears as intermittent lag or brief pauses in output.

Some platforms also apply inference throttling to avoid instability when capacity is strained. These mechanisms ensure uptime but contribute to the slowdown perceived by end users.

Regional Saturation and Multi-Cloud Distribution

AI providers distribute workloads across AWS, Azure, Google Cloud, and other infrastructures to reduce bottlenecks. However, each cloud region has its own resource profile, and heavy traffic in one zone cannot always be shifted to another. Regional saturation amplifies latency spikes, especially during overlapping peak usage periods.

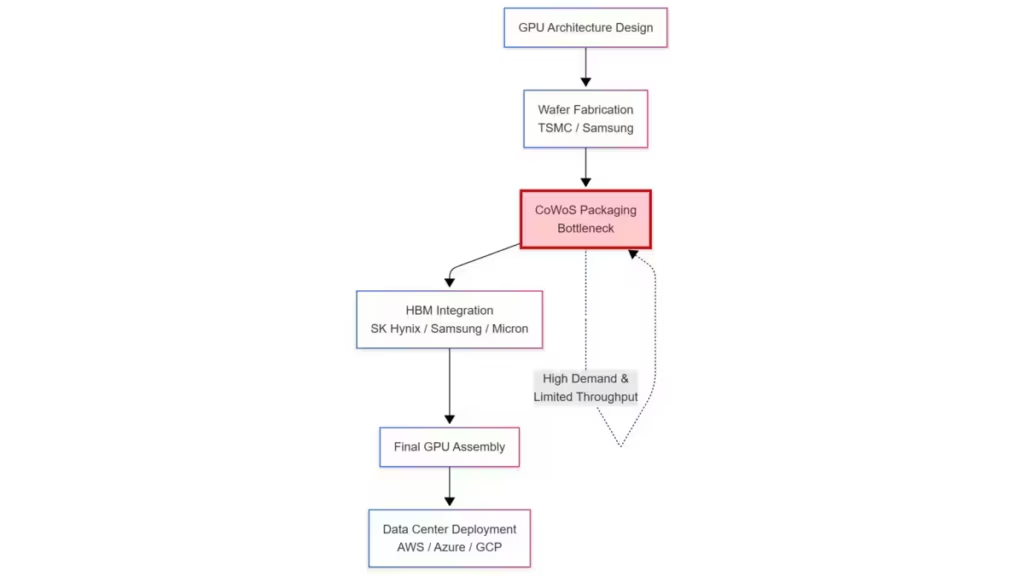

The GPU Shortage: CoWoS, HBM, and Supply-Chain Limits

The compute crisis ultimately originates in the supply chain. Modern accelerators depend on advanced packaging technologies like CoWoS and high-bandwidth memory (HBM), which remain extremely limited. Even with additional manufacturing lines coming online, throughput is far below the pace required by global AI demand.

This constraint has become more visible as the industry transitions to Nvidia’s Blackwell architecture. Blackwell GPUs require even more packaging bandwidth, further tightening the supply chain and slowing deployment of new compute clusters.

CoWoS Packaging and Global Output Constraints

CoWoS (Chip-on-Wafer-on-Substrate) is essential for assembling high-performance GPU modules. TSMC’s CoWoS production capacity is finite, and significant portions of it are already reserved by hyperscalers through long-term commitments. This bottleneck means that GPU availability depends less on design cycles and more on packaging throughput.

HBM Memory and Its Impact on AI Availability

HBM3 and HBM3E memory require specialized stacking and testing processes that only a few manufacturers can deliver at scale. These constraints affect both training clusters and inference fleets, slowing the rollout of new GPU nodes and constraining the expansion of existing deployments.

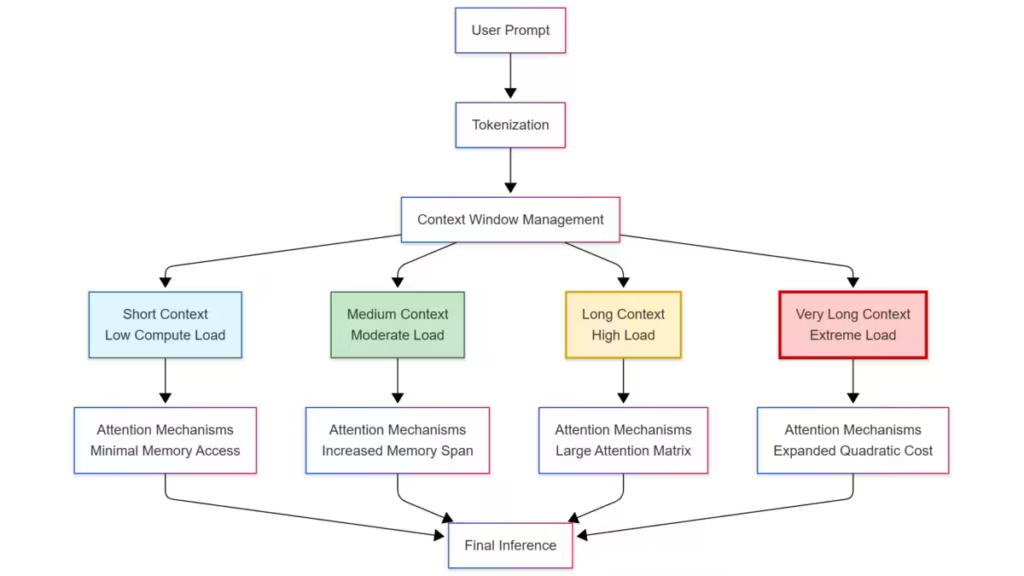

Why Modern Models Consume More Compute

Even if GPU supply were abundant, today’s AI models require far more compute per request than earlier generations. Long-context windows, multimodal processing, and agentic workflows increase the number of operations needed for every query.

The Hidden Cost of Long-Context Windows

Supporting hundreds of thousands or millions of tokens increases the workload of attention mechanisms, even for short user prompts. These long-context features must track and manage far more internal state, adding to the compute cost of each inference cycle.

Multimodal and Agent Workflows Add More Layers of Processing

Text-only models are no longer the norm. AI systems now combine text, image, audio, and code processing alongside tool calls and agent chains. Each additional modality or step adds its own compute path, multiplying GPU usage per query.

How Enterprise Prioritization Shapes Consumer Latency

Compute is not allocated equally across user tiers. Enterprises pay for guaranteed access to GPU resources, while free or low-cost consumer traffic relies on leftover capacity. When demand spikes, these contracts reduce the supply available for public use, increasing latency and reducing stability.

Priority Tiers in Cloud Deployment

Enterprise SLAs provide immediate access to compute regardless of cluster load. When GPUs are scarce, these workloads bypass queues entirely. Consumer traffic, however, may experience throttling, delayed execution, or restricted throughput when capacity tightens.

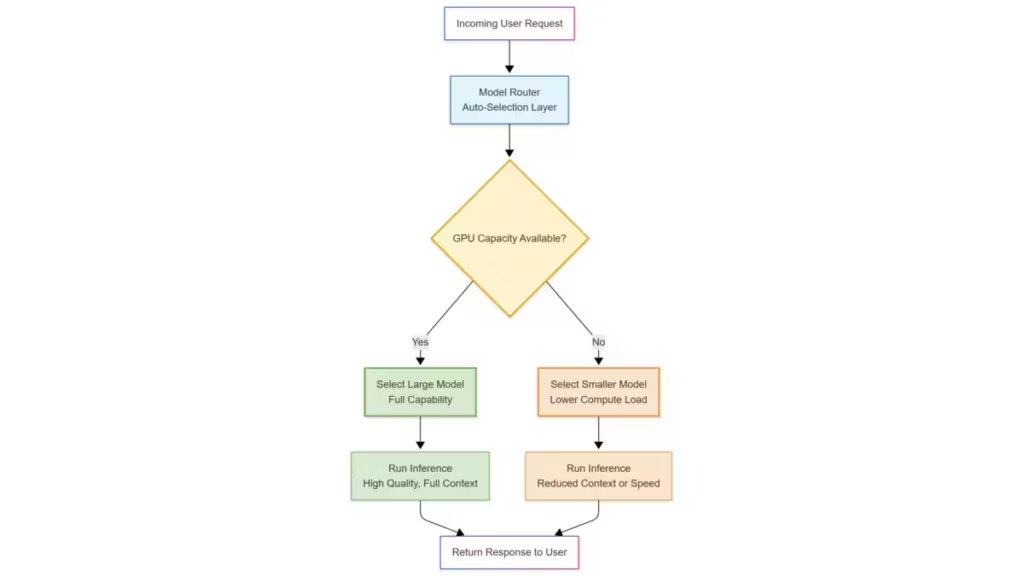

Model Downgrading and Automatic Variant Selection

Some platforms use automatic selection systems that switch to smaller or more efficient models when GPU availability drops. These transitions preserve uptime but can reduce response speed and consistency. Users sometimes notice that answers feel less direct or take longer to appear.

What Users Can Actually Do

Because the slowdown originates in the cloud, user-side optimizations have limited effect. Still, certain adjustments can reduce complexity and improve responsiveness in some situations.

When User Optimizations Help

Shortening prompts, limiting output length, or avoiding unnecessary multimodal content can reduce compute needs. These changes shorten inference paths and improve the likelihood of faster responses.

| Action | Effect on Compute | Expected Benefit |

|---|---|---|

| Reduce prompt length | Fewer tokens to process | Faster initial response |

| Avoid unnecessary multimodal inputs | Removes extra inference paths | Lower GPU load |

| Limit output length | Smaller generation window | More stable latency |

| Remove redundant context | Reduced memory and attention cost | Better consistency |

| Use concise instructions | Simplifies model routing | Higher speed and accuracy |

When User Actions Cannot Overcome Cloud Saturation

During regional or global peak periods, no local adjustments can bypass GPU scarcity. If the GPUs required for inference are unavailable, the platform must queue or throttle requests until more capacity becomes free.

What to Watch Next

Over the next year, several factors may ease the pressure on AI services. Expanded CoWoS packaging, increased HBM production, and next-generation accelerators such as Blackwell and Vera Rubin will gradually expand global compute capacity. However, scaling advanced packaging and memory fabrication takes time. Demand from enterprises, research labs, and new AI-enabled applications continues to grow faster than supply.

Emerging optimizations, including more efficient attention mechanisms, quantization strategies, and distributed inference workflows, will help stretch available compute. Yet slowdowns will likely remain common until the supply chain stabilizes and data centers significantly expand GPU fleets.

Real improvements will depend on long-term investment in manufacturing and infrastructure, not user-side workarounds.

Also read : DFloat11 : Lossless BF16 Compression for Faster LLM Inference

Sources and references

Tech media

- As reported by CNN, 19 November 2025, analysis of GPU demand and infrastructure strain, https://edition.cnn.com/2025/11/19/tech/nvidia-earnings-ai-bubble-fears

- As reported by The Wall Street Journal in 2025, analysis of Nvidia earnings and Blackwell demand, https://www.wsj.com/tech/ai/nvidia-earnings-q3-2025-nvda-stock-9c6a40fe

- According to TechCrunch, 18 November 2025, coverage of Gemini 3 Pro and compute implications, https://techcrunch.com/2025/11/18/google-launches-gemini-3-with-new-coding-app-and-record-benchmark-scores/

Companies

- According to Microsoft, 18 November 2025, details on Blackwell and Vera Rubin partnerships, https://blogs.microsoft.com/blog/2025/11/18/microsoft-nvidia-and-anthropic-announce-strategic-partnerships/

- The Anthropic announcement detailing Claude’s integration into Foundry, https://www.anthropic.com/news/claude-in-microsoft-foundry

- OpenAI’s GPT-5.1 Codex Max introduction, https://openai.com/index/gpt-5-1-codex-max/

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!