Highlights

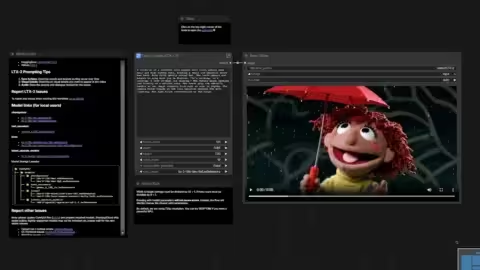

LTX-2 Technical Optimization: Benchmarking FP8, NVFP4, and Schedulers

While our initial LTX-2 technical guide covers the deployment fundamentals, getting professional results from Lightricks’ model requires a granular understanding of quantization formats. With an asymmetric 19-billion-parameter architecture (14B video, 5B audio),…

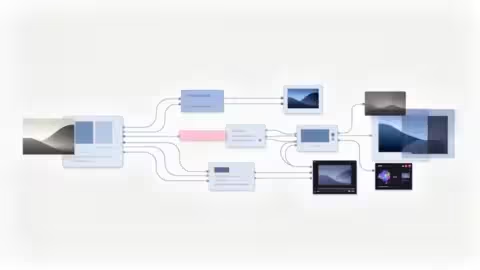

Complete guide to ComfyUI upscale models: performance and use cases

Optimizing images in ComfyUI depends on choosing the right upscale model, since each architecture, whether ESRGAN-based or the more recent DAT-based designs, handles pixels and textures differently. To integrate these…

ComfyUI GGUF: how and why to use this format in 2026?

The year 2026 marks a turning point in which the GGUF format is no longer an experimental alternative but an absolute necessity for anyone who wants to run cutting-edge models, such as…

ComfyUI: where to find and download the best models and workflows in 2026

The year 2026 marks a major transformation in how we consume local generative AI, as downloading a model is no longer just about choosing the largest file but about finding the…

ComfyUI: the best information sources and ecosystem resources in 2026

The year 2026 represents a major turning point for ComfyUI, as the interface has evolved from a niche tool for local AI enthusiasts into an industry standard for creative production…

GA4 and AdSense: The Ultimate Guide to Verifying Consent Mode V2 (G111)

Since Google introduced Consent Mode V2 (GCM V2) and made the two new parameters (ad_user_data and ad_personalization) mandatory for EEA/UK traffic, many website owners have seen a sharp drop in…

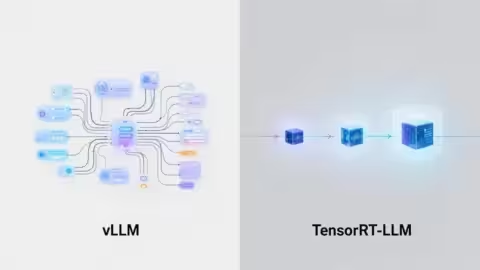

Developer AI News: Latest Updates for Engineers

The AI ecosystem is entering a phase of rapid structural change. Models are updating faster, runtimes are fragmenting across increasingly specialized hardware, and agent frameworks are evolving from experimental prototypes…

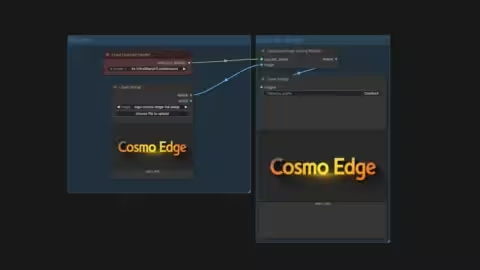

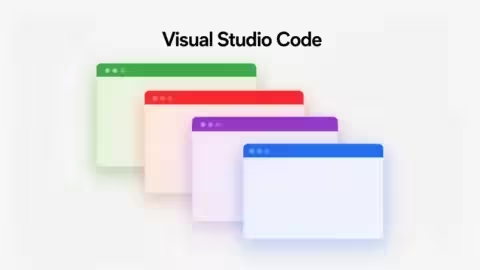

How to Color-Code VS Code Windows Per Project Using .code-workspace

When several VS Code windows are open, it becomes easy to lose track of which project you are actually working in. Using a .code-workspace file, you can assign a unique,…

AI News December 1–6: Chips, Agents, Key Oversight Moves

This weekly briefing highlights the AI developments that matter most, from new accelerator hardware and enterprise agent pivots to global oversight milestones shaping deployment in 2026. Covering December 1 to…

TPU v6e vs v5e/v5p: How Trillium Delivers Real AI Performance Gains

TPU Trillium, also known as TPU v6e, delivers more than the 4.7-fold peak compute uplift that Google highlights on the Google Cloud Blog. Real workloads show around 4 times faster dense…

Understanding Google TPU Trillium: How Google’s AI Accelerator Works

Google’s TPU Trillium, also known as TPU v6e, is the latest generation of Google’s custom silicon for large-scale AI. At a time when the industry faces a global GPU shortage…

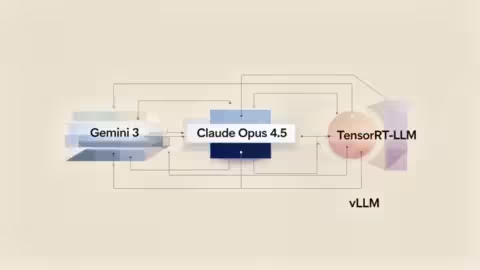

Weekly AI highlights for November 24–29: Claude 4.5 reasoning leap, FLUX 2 4-MP imaging, DeepSeek Math V2, and GPT-5.1 now in Windows 11 Copilot.

The pace of artificial intelligence continues to accelerate, and the week of November 24 to 29, 2025 highlights a striking contrast between rapid innovation and mounting pressure on the infrastructure that powers…

ChatGPT Timeline Explained: Key Releases from 2022 to 2025

ChatGPT’s evolution from 2022 to 2025 is not just a sequence of model releases, but the story of how modern AI systems adapt to growing global demand, hardware limitations, and…

DFloat11: Lossless BF16 Compression for Faster LLM Inference

DFloat11 compresses BF16 model weights by about 30 percent while preserving bit-perfect accuracy, enabling faster and more memory-efficient LLM inference on GPU-constrained systems. Its GPU-native decompression kernel reaches roughly 200…

Why AI Models Are Slower in 2025: Inside the Compute Bottleneck

AI models like ChatGPT, Claude, and Gemini feel slower in 2025 because cloud providers are running out of the GPU capacity required for real-time inference. This global shortage affects H100,…

GPU Shortage: Why Data Centers Are Slowing Down in 2025

In 2025, the world’s largest cloud providers are hitting a severe GPU shortage that is slowing down the entire AI ecosystem, from startups to major enterprises. Queue delays, rationed compute,…

AI News – Highlights from November 14–21: Models, GPU Shifts, and Emerging Risks

This week’s AI news highlights major shifts across frontier models, GPU infrastructure, autonomous agents, and global regulation. Readers gain a clear understanding of the technologies shaping 2025 and how they…

Disable VBS in Windows 11 for Real Gaming Performance Gains

Windows 11’s Virtualization-Based Security, often enabled by default, can quietly reduce performance in CPU-bound games by 10 to 15 percent. This guide explains how to check whether VBS is active,…

WordPress URL Structure: 4 SEO Mistakes You Must Avoid in 2025

The structure of your WordPress URLs directly affects SEO performance, long-term stability, and overall site visibility. And yes, I know, you might be thinking that in the age of AI,…

GPT-5.1 Redefines Professional AI Workflows

GPT-5.1 marks a new milestone in the adoption of artificial intelligence across organizations. Faster, more compact, and more reliable, OpenAI’s new model significantly boosts the productivity of developers, researchers, and…