Agentic AI 2026: Capital Repricing, Long-Context Scaling and China’s Acceleration

Agentic AI 2026 marks a structural transition from conversational copilots to workflow-executing systems embedded directly into enterprise infrastructure. Hyperscalers are committing more than $600 billion to AI-related capital expenditure, software markets are repricing under multi-step agent pressure, and Chinese open-weight ecosystems are scaling rapidly. Understanding this inflection point requires linking infrastructure density, inference economics, governance signals, and long-context scaling into a single architectural analysis.

The Structural Break: From Copilots to Execution-Layer Agents

Agentic AI 2026 is not a user interface enhancement. It represents a systems-layer transformation in which models move from assisting users inside tools to executing workflows across tools. That distinction matters because execution requires persistent state, tool invocation, structured memory, and integration into enterprise runtimes.

Traditional copilots generate text or code within bounded prompts. Execution-layer agents coordinate tasks, call APIs, chain reasoning steps, and interact with external systems. This architectural shift alters infrastructure requirements, pricing models, and competitive positioning across the AI stack.

Agentic AI as Architecture, Not Interface

Agentic systems operate as orchestration layers. They integrate retrieval-augmented generation, tool execution, memory persistence, and multi-step planning into cohesive workflows. The structural change is from response generation to process automation.
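The orchestration pattern described above can be sketched as a small control loop: plan a step, invoke a tool, record the observation, repeat. This is a minimal illustration under stated assumptions, not any specific framework's API. `AgentState`, `run_agent`, the scripted planner and the two stub tools are hypothetical, and a production agent would ask a model to choose each next step instead of following a script.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

Tool = Callable[[str], str]  # hypothetical tool signature: text in, text out
Step = Tuple[str, str]       # (tool name, argument)


@dataclass
class AgentState:
    """Persistent state carried across steps: the goal plus an observation log."""
    goal: str
    memory: List[Tuple[str, str, str]] = field(default_factory=list)


def run_agent(
    state: AgentState,
    planner: Callable[[AgentState], Optional[Step]],
    tools: Dict[str, Tool],
    max_steps: int = 10,
) -> AgentState:
    """Plan -> invoke tool -> record observation, until the planner stops or the step cap is hit."""
    for _ in range(max_steps):
        step = planner(state)
        if step is None:  # planner judges the goal complete
            break
        name, arg = step
        if name not in tools:
            raise KeyError(f"planner requested unknown tool: {name}")
        observation = tools[name](arg)
        state.memory.append((name, arg, observation))  # structured memory for later steps
    return state


# Hypothetical scripted planner standing in for a model call.
def scripted_planner(state: AgentState) -> Optional[Step]:
    script = [("retrieve", "checkout outage"), ("create_ticket", "checkout outage")]
    i = len(state.memory)
    return script[i] if i < len(script) else None


tools: Dict[str, Tool] = {
    "retrieve": lambda q: f"runbook for {q}",
    "create_ticket": lambda t: f"ticket opened: {t}",
}
final = run_agent(AgentState(goal="triage checkout outage"), scripted_planner, tools)
for name, arg, obs in final.memory:
    print(f"{name}({arg}) -> {obs}")
```

The `max_steps` cap is one design choice worth keeping even in a sketch: an execution loop needs an explicit bound that a single prompt-response call never did.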

Engineering patterns already visible in production coding agents and orchestration frameworks illustrate this shift. Our prior analysis of agentic engineering and the “Vibe Coder” transition traced how structured orchestration replaced improvised prompt chaining (see Agentic engineering guide). In 2026, that engineering evolution intersects directly with capital allocation and enterprise software economics.

Why February 2026 Signals an Inflection Point

Between late January and mid-February 2026, three signals converged. First, according to Reuters reporting on hyperscaler AI capex projections, combined AI-related capex plans exceeded $600 billion. Second, software equities declined amid concerns that AI agents could erode traditional SaaS pricing power, as described in Reuters coverage of the software selloff. Third, Chinese firms accelerated open-weight and multi-step model releases, covered in Reuters reporting on the Spring Festival model wave.

Taken together, these developments indicate a structural shift. Infrastructure scale enables agent execution. Agents compress SaaS margins. Open-weight ecosystems challenge closed API dominance. The alignment of these signals suggests that agentic AI 2026 reflects a capital-backed transition rather than a feature cycle.

Hyperscaler AI Capex 2026: The $600B Infrastructure S-Curve

The first structural layer of agentic AI 2026 is infrastructure expansion. Multiple reports indicate that a small group of hyperscalers plan more than $600 billion in combined capital and operating expenditure related to AI infrastructure in 2026, see Reuters on capex projections. The scale itself defines a new phase in the infrastructure S-curve.

Verified Capex Breakdown

| Company | 2026 Projected Capex (USD) | AI-Related Share (if disclosed) | Source |
|---|---|---|---|
| Amazon (AWS) | ~$125B+ | Significant AI infrastructure allocation | Reuters (Feb 6, 2026) |
| Microsoft | ~$100B+ | AI data centers and accelerators emphasized | Reuters (Feb 6, 2026) |
| Alphabet | ~$75B+ | Increased AI cluster deployment | Reuters (Feb 6, 2026) |
| Meta | ~$60B+ | AI compute and training expansion | Reuters (Feb 6, 2026) |
| Alibaba | Not fully disclosed | Qwen-related AI expansion highlighted | Reuters (Feb 16, 2026) |

The figures above come from publicly reported projections and financial disclosures, attributed to the Reuters coverage listed in the Source column. Values marked "+" are lower bounds. The four US lower bounds sum to about $360 billion, so the table indicates per-company order of magnitude rather than reconstructing the $600 billion aggregate.

The key variable is magnitude, not single-digit precision. When infrastructure spending crosses this threshold, it alters compute availability, cluster density, and model deployment feasibility. Agentic workloads require sustained inference throughput rather than sporadic chatbot queries.

Infrastructure Density and Accelerator Scaling

Agent execution depends on dense GPU clusters with high interconnect bandwidth and optimized scheduling. Scaling multi-agent systems increases memory requirements, inter-node communication, and inference concurrency. These dynamics place pressure on accelerator supply, power distribution, and data center topology.

Infrastructure S-curve dynamics matter because once sufficient density is reached, new classes of workloads become economically viable. Multi-step agents with persistent context and tool invocation loops are computationally heavier than prompt-response interactions.

Capex to Control: Why Infrastructure Enables Agents

Capital reallocation toward AI infrastructure directly enables agent execution at enterprise scale. When hyperscalers deploy additional clusters, they increase available inference throughput and reduce marginal cost per token.

Lower marginal inference cost supports continuous workflow automation. Enterprises can run agents persistently rather than episodically. The link between hyperscaler AI capex 2026 and agentic AI 2026 is therefore structural and operational.
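The link between marginal token cost and persistent operation is simple arithmetic. The sketch below uses hypothetical figures (50,000 tokens per multi-step agent run, $2 per million tokens, and one run per minute for the persistent case); none of them come from the reporting cited in this article.

```python
def daily_inference_cost(tokens_per_run: int, runs_per_day: int, usd_per_million_tokens: float) -> float:
    """Daily spend for one agent: total tokens processed times the per-token price."""
    return tokens_per_run * runs_per_day * usd_per_million_tokens / 1_000_000


TOKENS_PER_RUN = 50_000  # hypothetical multi-step run (context + tool outputs)
PRICE = 2.0              # hypothetical USD per million tokens

episodic = daily_inference_cost(TOKENS_PER_RUN, 20, PRICE)                  # 20 on-demand runs
persistent = daily_inference_cost(TOKENS_PER_RUN, 24 * 60, PRICE)           # one run per minute
persistent_cheaper = daily_inference_cost(TOKENS_PER_RUN, 24 * 60, PRICE / 2)

print(f"episodic:   ${episodic:,.2f}/day")
print(f"persistent: ${persistent:,.2f}/day")
print(f"persistent at half price: ${persistent_cheaper:,.2f}/day")
```

In this example persistent operation multiplies token volume by 72, so the per-token price, not the per-query experience, decides whether always-on agents are affordable.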

This massive deployment of capital reveals a major strategic contradiction: companies are preparing to spend hundreds of billions of dollars on infrastructure to automate business processes whose marginal value is collapsing. In reality, 2026 capex is not an investment in efficiency but a technological sovereignty tax. We are not paying for AI to work better than a human; we are paying to avoid depending on a competitor’s infrastructure once execution becomes the market’s only commodity.

SaaS Repricing Under Agent Pressure

The second structural layer is economic. Multi-step agents reduce the need for human-mediated coordination inside SaaS platforms, creating margin compression in seat-based pricing models.

When an agent can draft documents, manage tickets, generate code, and coordinate tasks across systems, it challenges traditional value capture. The result is SaaS repricing under agent pressure.

From Seat-Based Pricing to Workflow Automation

Seat-based SaaS pricing assumes humans as primary operators. Agentic AI 2026 challenges that assumption. If a single agent can perform tasks equivalent to multiple users across tools, pricing models based on human seats become unstable.
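To make the instability concrete, the sketch below compares seat revenue before and after agent adoption. All inputs are hypothetical: a $50 monthly seat price, a 40-person team, and three agents that each absorb the work of five users.

```python
def seat_revenue(seats: int, price_per_seat: float) -> float:
    """Monthly revenue under per-seat pricing."""
    return seats * price_per_seat


def seats_after_agents(users: int, agents: int, users_absorbed_per_agent: int) -> int:
    """Seats still needed once agents take over part of the human workload."""
    return max(users - agents * users_absorbed_per_agent, 0)


PRICE_PER_SEAT = 50.0  # hypothetical USD per user per month
USERS = 40             # hypothetical team size

before = seat_revenue(USERS, PRICE_PER_SEAT)
remaining = seats_after_agents(USERS, agents=3, users_absorbed_per_agent=5)
after = seat_revenue(remaining, PRICE_PER_SEAT)

print(f"seats: {USERS} -> {remaining}")
print(f"monthly seat revenue: ${before:,.0f} -> ${after:,.0f}")
```

Assuming the agents still operate on the vendor's platform, the work performed is unchanged while seat revenue falls by 37.5 percent. That gap between delivered work and billed seats is what pushes pricing toward usage- or workflow-based models.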

This does not eliminate SaaS platforms, but it shifts value from interface access to infrastructure integration, orchestration reliability, and execution quality. Software margin compression follows when execution layers become more standardized.

Anthropic Trigger and Market Signal

According to Reuters coverage of the market reaction to AI agents, part of the software equity selloff was attributed to fears that AI agents could erode pricing power in traditional software markets. This attribution reflects market interpretation rather than confirmed displacement.

It remains important to separate reporting from extrapolation. However, market repricing often anticipates structural change before it appears in revenue statements.

Disintermediation of Coordination Layers

Agents disintermediate coordination tasks. They aggregate information, synthesize context, and execute workflows without requiring manual transitions between tools. This affects project management, customer support, legal drafting, and coding workflows.

Production deployments of AI coding agents already illustrate these dynamics beyond benchmark comparisons, see AI coding agents: the reality on the ground beyond benchmarks. The economic implications are now emerging at market scale.

China’s Acceleration: Open-Weight Strategy at Scale

The third structural layer involves ecosystem divergence. Chinese firms are scaling open-weight and enterprise-deployable models alongside hosted services, while major US providers continue to emphasize API-centric delivery.

This divergence is visible in Alibaba’s Qwen 3.5 release, ByteDance’s Doubao 2.0, and DeepSeek’s long-context upgrades, as summarized in Reuters reporting on China’s model launches.

| Dimension | Open-Weight Models (e.g. Qwen, DeepSeek) | Closed API Models (e.g. OpenAI, Anthropic) |
|---|---|---|
| Deployment Model | Self-hosted or hybrid | Fully managed cloud API |
| Infrastructure Responsibility | Enterprise-managed | Provider-managed |
| Customization Depth | High (fine-tuning, system control) | Limited to API parameters |
| Data Sovereignty | Local control possible | Data processed via provider infrastructure |
| Capex Requirement | Higher internal infrastructure investment | Lower upfront, usage-based Opex |
| Operational Complexity | High (DevOps + MLOps required) | Lower (abstracted operations) |
| Strategic Control | Greater architectural autonomy | Vendor dependency |

Alibaba Qwen 3.5: Claims vs Verified Benchmarks

Alibaba described Qwen 3.5 as optimized for the agentic AI era, offering both hosted services and downloadable weights, as covered by Reuters and CNBC. Company statements cited cost reductions of up to 60 percent and greater workload capacity compared with previous internal models.

These figures are company-reported. Independent benchmark comparisons against leading US systems remain limited. Any evaluation must clearly distinguish between vendor-provided metrics and third-party validation.

ByteDance Doubao 2.0 and Multi-Step Agents

According to Reuters reporting on Doubao 2.0, ByteDance positioned the model as tailored for multi-step task execution. The release was framed as an upgrade focused on workflow capability rather than conversational refinement.

The emphasis is execution. This aligns with the broader framing of agentic AI 2026 as an execution-layer transformation affecting enterprise automation.

DeepSeek and the 1M Token Window

DeepSeek upgraded its chatbot context window from 128,000 to 1,000,000 tokens, as reported in Reuters coverage of the Spring Festival model wave. A 1-million-token context window enables book-length inputs in a single interaction but introduces significant hardware and memory constraints.

Long context is not simply a product feature. It reshapes inference economics, GPU memory requirements, and system design decisions for production deployments.

Open-Weight vs Closed API: Strategic Matrix

Chinese firms increasingly release open weights alongside hosted services. US firms predominantly emphasize managed API access. The strategic difference affects enterprise deployment flexibility, data sovereignty considerations, and customization depth.

Open-weight models enable local deployment but require internal infrastructure investment. Closed APIs offer managed scaling and operational abstraction but limit architectural control.
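The capex-versus-opex trade-off in the matrix reduces to a break-even volume. The sketch assumes a hypothetical fixed monthly cost for self-hosting (amortized GPUs plus a share of MLOps staffing), a hypothetical API price, and a hypothetical marginal self-hosting cost per million tokens; real figures vary widely with model size and utilization.

```python
import math


def breakeven_million_tokens(fixed_monthly_usd: float,
                             api_usd_per_m: float,
                             selfhost_usd_per_m: float) -> float:
    """Monthly volume (millions of tokens) above which self-hosting beats the API.

    Self-host cost: fixed + selfhost_usd_per_m * v
    API cost:       api_usd_per_m * v
    Break-even:     v = fixed / (api - selfhost)
    """
    margin = api_usd_per_m - selfhost_usd_per_m
    if margin <= 0:
        return math.inf  # self-hosting never saves per token, so there is no break-even
    return fixed_monthly_usd / margin


v = breakeven_million_tokens(fixed_monthly_usd=30_000, api_usd_per_m=3.0, selfhost_usd_per_m=0.5)
print(f"break-even: {v:,.0f} million tokens per month")
```

Below roughly 12 billion tokens a month in this example the managed API is cheaper; above it the fixed investment pays off. Data sovereignty and customization depth sit outside this arithmetic and can justify self-hosting on their own.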

Long-Context Scaling: Technical Constraints Behind 1M Tokens

Long-context LLM scaling is often framed as capability expansion. In practice, it is a hardware and memory constraint problem.

The expansion of context windows to one million tokens also introduces a reliability paradox. “Needle in a haystack” tests measure whether a model can retrieve a single fact buried in a long input, yet an agent can be neutralized without any direct malicious command, simply by injecting massive amounts of semantic “noise” that saturate the attention mechanism. This bypass resembles a cognitive denial-of-service (DoS) attack and shows that reading capacity does not guarantee discernment. For the engineer, the challenge is therefore not only memory size but also how robustly the model weights relevant content against intentionally polluted data.

KV Cache and Memory Scaling Math

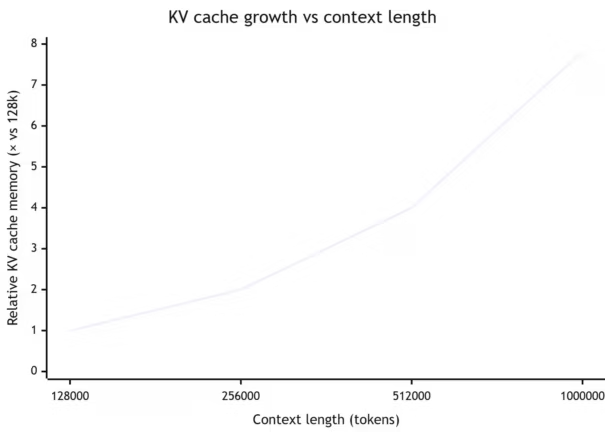

Transformer architectures cache a key vector and a value vector for every token in the context window, at every layer. KV cache memory therefore grows linearly with context length: with model dimensions held fixed, moving from 128,000 to 1,000,000 tokens multiplies per-sequence cache memory by about 7.8 (1,000,000 / 128,000).
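For standard multi-head or grouped-query attention without cache compression, the per-sequence cache size can be written as follows, where L is the number of layers, H_kv the number of key-value heads, d_head the head dimension, b the bytes per cached element, T the context length in tokens, and the factor 2 counts keys and values. Architectures that compress or share the cache typically change the constants, not the linear dependence on T for full-attention layers.

```latex
M_{\text{KV}}(T) = 2 \, L \, H_{\text{kv}} \, d_{\text{head}} \, b \, T,
\qquad
\frac{M_{\text{KV}}(10^{6})}{M_{\text{KV}}(1.28 \times 10^{5})}
  = \frac{10^{6}}{1.28 \times 10^{5}} = 7.8125
```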

This affects GPU memory allocation, cluster scheduling, and inference strategy. Large context windows require high-memory accelerators or distributed inference approaches. For ML engineers, the constraint is physical and budgetary.
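A short calculator makes the scaling concrete. The model shape below (80 layers, 8 key-value heads, head dimension 128, a BF16 cache) is a hypothetical 70B-class configuration, not any specific vendor's model, and the 40 GB of free memory per GPU is likewise an assumption. The size formula is the standard one for multi-head or grouped-query attention.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelShape:
    """Hypothetical dense transformer using grouped-query attention."""
    layers: int
    kv_heads: int
    head_dim: int
    bytes_per_elem: int = 2  # BF16/FP16 cache entries


def kv_cache_bytes(shape: ModelShape, context_tokens: int, batch: int = 1) -> int:
    """2 (key + value) x layers x kv_heads x head_dim x bytes x tokens x batch."""
    return (2 * shape.layers * shape.kv_heads * shape.head_dim
            * shape.bytes_per_elem * context_tokens * batch)


def gpus_needed(cache_bytes: int, free_bytes_per_gpu: int) -> int:
    """GPUs required just to hold the cache, ignoring weights and activations."""
    return math.ceil(cache_bytes / free_bytes_per_gpu)


shape = ModelShape(layers=80, kv_heads=8, head_dim=128)
FREE_PER_GPU = 40 * 10**9  # hypothetical: 40 GB left for cache on each accelerator

for tokens in (128_000, 1_000_000):
    size = kv_cache_bytes(shape, tokens)
    print(f"{tokens:>9,} tokens: {size / 1e9:6.1f} GB cache, "
          f"{gpus_needed(size, FREE_PER_GPU)} GPU(s) for one sequence")
```

Under these assumptions a single 1-million-token sequence needs roughly 328 GB of cache, which is why long-context serving leans on high-memory accelerators, cache quantization, or distributed inference.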

Throughput vs Context Length Trade-Offs

Increasing context length typically reduces throughput. Larger KV caches increase memory bandwidth pressure and reduce tokens processed per second. Inference density per GPU declines unless compensated by architectural optimization.

This trade-off directly affects agentic workloads. Multi-agent orchestration with persistent long context can saturate memory and degrade concurrency if not carefully managed.
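The concurrency side of the trade-off can be estimated the same way. The sketch assumes a hypothetical node with 500 GB of accelerator memory left for KV cache after loading weights, and a hypothetical 327,680 bytes of cache per token (an 80-layer model with 8 KV heads of dimension 128 in BF16). It ignores paging overhead, activations and prefix sharing, all of which shift real numbers.

```python
def max_concurrent_sessions(kv_budget_bytes: int, bytes_per_token: int, context_tokens: int) -> int:
    """How many sessions fit if each one holds a full-length KV cache."""
    per_session = bytes_per_token * context_tokens
    return kv_budget_bytes // per_session


KV_BUDGET = 500 * 10**9     # hypothetical bytes free for cache on one node
BYTES_PER_TOKEN = 327_680   # 2 * 80 layers * 8 KV heads * 128 dims * 2 bytes

for ctx in (32_000, 128_000, 1_000_000):
    n = max_concurrent_sessions(KV_BUDGET, BYTES_PER_TOKEN, ctx)
    print(f"{ctx:>9,}-token context: {n} concurrent agent sessions")
```

Moving from 32,000 to 1,000,000 tokens of persistent context drops concurrency from 47 sessions to 1 in this example, which is how multi-agent systems that each carry full context can saturate a node.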

RAG and Multi-Agent Orchestration Implications

Long context interacts with retrieval-augmented generation strategies. Rather than relying exclusively on expanded context windows, many production systems combine moderate context lengths with retrieval pipelines to reduce memory overhead while preserving relevance.
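The retrieval-first pattern can be sketched as selecting the most relevant chunks that fit a fixed token budget instead of sending the whole corpus. This is a deliberately simple stand-in: it scores chunks by query-term overlap and counts words as a proxy for tokens, whereas production pipelines typically use embedding search and a real tokenizer. `build_context` and the sample chunks are hypothetical.

```python
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase word tokens; a crude proxy for model tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query_terms: List[str], chunk: str) -> int:
    """Count occurrences of distinct query terms in the chunk."""
    counts = Counter(tokenize(chunk))
    return sum(counts[t] for t in set(query_terms))


def build_context(query: str, chunks: List[str], token_budget: int) -> List[str]:
    """Greedily pack the highest-scoring chunks into the budget."""
    terms = tokenize(query)
    ranked = sorted(chunks, key=lambda c: overlap_score(terms, c), reverse=True)
    selected, used = [], 0
    for chunk in ranked:
        if overlap_score(terms, chunk) == 0:
            break  # ranked descending, so every remaining chunk is irrelevant
        cost = len(tokenize(chunk))
        if used + cost > token_budget:
            continue  # skip chunks that would overflow; a smaller one may still fit
        selected.append(chunk)
        used += cost
    return selected


chunks = [
    "refund policy allows returns within 30 days",
    "shipping times vary by region",
    "refund requests need an order id",
    "office holiday schedule",
]
print(build_context("how do refund requests work", chunks, token_budget=12))
```

The budget, not the model's maximum window, bounds memory per request, which is the overhead reduction the paragraph above describes.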

In multi-agent systems, context partitioning becomes critical. Agents must share state without duplicating full context windows. Structured memory, controlled tool invocation, and orchestration discipline are essential to keep inference costs predictable, see Agentic engineering guide.
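Context partitioning can be sketched as a shared store that hands each agent a scoped view rather than a copy of the full history. The class, topic labels and agent roles below are hypothetical; they illustrate the discipline, not a particular framework's memory API.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, Tuple


@dataclass
class SharedMemory:
    """Single source of truth for multi-agent state, keyed by entry name."""
    entries: Dict[str, Tuple[str, str]] = field(default_factory=dict)  # key -> (topic, text)

    def write(self, key: str, topic: str, text: str) -> None:
        self.entries[key] = (topic, text)

    def view(self, topics: Iterable[str]) -> Dict[str, str]:
        """Return only the entries an agent is scoped to, so no agent copies the full context."""
        allowed = set(topics)
        return {k: text for k, (topic, text) in self.entries.items() if topic in allowed}


# Hypothetical agent scopes: each role sees only the topics it needs.
SCOPES = {
    "planner": {"plan", "status"},
    "coder": {"plan", "code"},
    "reviewer": {"code", "status"},
}

mem = SharedMemory()
mem.write("task", "plan", "migrate billing service to v2")
mem.write("diff", "code", "patch for billing client")
mem.write("ci", "status", "tests passing")

for agent, topics in SCOPES.items():
    print(agent, sorted(mem.view(topics)))
```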

OpenAI Governance Signal: Mission Alignment Restructuring

Organizational changes can influence external trust and strategic interpretation.

Verified Reporting: What Changed

Multiple outlets, including TechCrunch and Platformer, reported that OpenAI disbanded its Mission Alignment team in February 2026, redistributed its members internally, and appointed a former lead to a futurist role. Reporting characterized the team as small and focused on articulating mission and long-term impact.

These details are derived from mainstream technology and financial reporting.

Interpretation Boundaries: Signal vs Strategy

Interpretation must remain bounded. The restructuring does not, by itself, demonstrate reduced commitment to safety or alignment. However, governance signals matter when enterprise clients evaluate long-term reliability and oversight posture.

For CTOs assessing agentic AI 2026 deployment risk, organizational transparency and continuity of safety processes remain relevant variables.

2026–2028: Monitoring the Structural Transition

Agentic AI 2026 represents the early phase of a multi-year structural transition.

What CTOs and Infra Teams Should Track

Infrastructure density and accelerator availability will determine feasible agent workloads. Inference cost per token and memory efficiency will shape deployment economics. Open-weight adoption will influence internal capability building versus API dependence.

Security models for agent orchestration, including controlled tool execution and environment isolation, remain central to stable deployment of execution-layer systems, see Securing agentic AI: the MCP ecosystem and smolagents.
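Controlled tool execution can be sketched as a gateway that checks a per-agent allowlist and records every attempt before dispatching. This is a minimal hypothetical pattern, not the MCP or smolagents API; environment isolation (sandboxed processes, network restrictions) would sit beneath it and is not shown.

```python
from typing import Callable, Dict, List, Set, Tuple


class ToolPolicyError(PermissionError):
    """Raised when an agent requests a tool outside its allowlist."""


class ToolGateway:
    def __init__(self, tools: Dict[str, Callable[[str], str]], allowlist: Dict[str, Set[str]]):
        self._tools = tools
        self._allowlist = allowlist  # agent name -> permitted tool names
        self.audit_log: List[Tuple[str, str, bool]] = []

    def call(self, agent: str, tool_name: str, arg: str) -> str:
        # Deny by default: unknown agents get an empty permission set.
        allowed = tool_name in self._allowlist.get(agent, set()) and tool_name in self._tools
        self.audit_log.append((agent, tool_name, allowed))  # log denials as well as successes
        if not allowed:
            raise ToolPolicyError(f"{agent!r} is not permitted to call {tool_name!r}")
        return self._tools[tool_name](arg)


gateway = ToolGateway(
    tools={
        "read_ticket": lambda tid: f"ticket {tid}: login fails on mobile",
        "delete_repo": lambda name: f"deleted {name}",
    },
    allowlist={"support_agent": {"read_ticket"}},
)

print(gateway.call("support_agent", "read_ticket", "4812"))
try:
    gateway.call("support_agent", "delete_repo", "billing-service")
except ToolPolicyError as err:
    print("blocked:", err)
print(gateway.audit_log)
```

Logging before the permission check raises means blocked attempts remain visible for review, which matters more for autonomous agents than for human-driven tools.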

Where Structural Risk Remains

Risks include overreliance on vendor benchmark claims, underestimation of memory constraints in long-context deployments, and premature assumptions about SaaS displacement. Infrastructure scaling enables agents, but workflow integration remains a systems engineering challenge.

Agentic AI 2026 is best understood as a capital-backed execution-layer shift. Through 2028, competitive advantage will depend on aligning infrastructure investment, inference economics, governance posture, and secure orchestration design into coherent production systems.

Sources and references

1. Tech media

- As reported by CNBC on Qwen 3.5, February 17, 2026.

- As reported by Reuters on Alibaba Qwen 3.5, February 16, 2026.

- As reported by Reuters on ByteDance Doubao 2.0, February 14, 2026.

- As reported by Reuters on Chinese AI models around Spring Festival, February 14, 2026.

- As reported by TechCrunch on OpenAI’s Mission Alignment restructuring, February 11, 2026.

- As reported by Platformer on the same restructuring, February 2026.

- As reported by Reuters on hyperscaler AI capex projections, February 6, 2026.

- As reported by Reuters on software stocks and agent disruption fears, February 4, 2026.

- As reported by Reuters feature on China’s low-cost model wave, February 12, 2026.

2. Companies

- According to Alibaba, Qwen 3.5 model family announcement as covered by Reuters and CNBC.

- According to ByteDance, Doubao 2.0 release statements as covered by Reuters.

- According to DeepSeek, long-context upgrade reporting as covered by Reuters.

3. Institutions

- Hyperscaler financial disclosures and earnings projections referenced by Reuters, early 2026.

4. Official sources

- Public statements from OpenAI regarding internal restructuring as covered by TechCrunch and Platformer, February 2026.
