AI Inference Economics 2026: The Real Cost of Agents, Long Context and Infrastructure Scale

AI Inference Economics 2026 defines the real cost structure behind agentic AI systems, long-context scaling, and hyperscaler infrastructure expansion. Between unprecedented capital expenditure exceeding $600 billion and vendor claims of lower inference costs, the economic model of AI is shifting from experimental deployment to sustained execution at scale. This article separates confirmed reporting from company-reported performance and examines how agents, 1M-token context windows, and infrastructure density reshape enterprise AI economics.

Hyperscaler Capex and the Infrastructure S-Curve

In early February 2026, Reuters reported that major hyperscalers are preparing more than $600 billion in AI-related capital and operating expenditure for the year. This level of investment marks a structural transition in AI infrastructure, shifting from experimental scaling to sustained, production-grade deployment.

According to Reuters coverage of hyperscaler AI spending, the projected spending spans both capital expenditure for data centers and operational costs tied to compute, networking, and energy. The scale of this commitment reframes AI infrastructure as a long-term strategic asset rather than a short-cycle innovation budget.

$600B in AI Capex: What Is Actually Confirmed

The confirmed figure reported by Reuters aggregates projected AI-related spending across a small group of leading cloud providers. While precise company-level breakdowns vary by reporting source, the key signal is directional: AI infrastructure investment is entering a capital-intensive phase comparable to previous cloud expansion waves.

| Company / Institution | Reported Amount (2026) | Source | Date | Strategic Implication |

|---|---|---|---|---|

| Major hyperscalers (aggregate) | >$600B AI-related capex/opex | Reuters | Feb 6, 2026 | Structural AI infrastructure expansion |

| Selected cloud leaders | Company-specific multi-billion AI budgets | Earnings calls cited by Reuters | Q1 2026 | Increased accelerator deployment and cluster density |

It is important to separate confirmed reporting from extrapolation. Reuters attributes the $600B figure to aggregate projected spending, not a single entity. There is no confirmation in the reporting that all funds are dedicated exclusively to inference, but the implication is clear: sustained GPU deployment and data center expansion will support persistent AI workloads.

For infrastructure architects, this matters because inference economics are directly shaped by cluster density, interconnect bandwidth, and memory configuration. When spending crosses this magnitude, the bottleneck shifts from model novelty to compute allocation efficiency.

Why Inference Density, Not Model Size, Drives Cost

In 2026, the primary economic driver is no longer just model parameter count. It is inference density, defined by how many tokens per second can be processed per accelerator while maintaining acceptable latency.

Agentic AI systems, which execute multi-step workflows rather than single prompts, generate longer sessions and higher sustained token throughput. Persistent agent workloads increase average GPU utilization, reducing idle cycles but raising energy and cooling demands.

From an economic standpoint, the cost of inference depends on:

- GPU hourly cost or amortized hardware cost

- Memory footprint, including KV cache usage

- Tokens processed per second

- Session length and concurrency

When hyperscalers invest at this scale, they are implicitly betting that inference workloads will remain high enough to justify cluster expansion. This dynamic links capital expenditure directly to AI inference economics.

For a broader macro view of how infrastructure expansion intersects with agentic execution, see our structural analysis in Agentic AI 2026: Capital Repricing, Long-Context Scaling and China’s Acceleration.

AI Inference Cost: Claims vs Verified Economics

AI Inference Economics 2026 cannot be understood through headline claims alone. The critical distinction is between vendor-reported efficiency improvements and independently verifiable cost structures.

In February 2026, multiple announcements referenced cost reductions and workload scaling improvements. However, these figures often originate from company statements rather than third-party benchmark audits. For technical decision-makers, separating pricing from total cost of ownership, TCO, is essential.

Cost Per Token: Vendor Pricing vs Real TCO

API providers typically publish pricing per million tokens, MTok, which offers a visible reference point for developers. These prices reflect usage fees, not the underlying hardware, energy, and infrastructure amortization costs.

In contrast, open-weight deployment shifts cost structure toward hardware acquisition, GPU utilization, storage, networking, and engineering overhead. While per-token cost may appear lower at scale, the effective TCO depends on:

- Hardware amortization period

- Average GPU utilization rate

- Energy cost per kWh

- Operational staffing and orchestration complexity

For example, pricing pages from major API providers such as OpenAI and Anthropic provide transparent token pricing tiers. However, those prices do not directly reveal cluster-level operating margins or memory overhead associated with long-context usage.

The key economic difference is this: API pricing externalizes infrastructure complexity, whereas open-weight deployment internalizes it. The inference cost comparison must therefore include hardware efficiency and sustained throughput, not just token rates.

The “60% Cheaper” and “8x Workload” Problem

On February 16, Alibaba introduced Qwen 3.5 and stated that the model could operate at up to 60 percent lower cost and support up to eight times higher workload capacity compared to its previous flagship, according to Reuters coverage of the launch and additional financial reporting.

These figures are company-reported. The reporting period does not include independent, standardized benchmarks comparing Qwen 3.5 against US-based frontier models under identical workload conditions.

The economic ambiguity lies in the definition of “cost” and “workload.” Cost may refer to internal inference efficiency improvements, while workload capacity could reflect higher concurrency under specific conditions. Without standardized evaluation criteria, these metrics cannot be directly compared across ecosystems.

| Model | Claimed Improvement | Verified Data | Source | Notes |

|---|---|---|---|---|

| Alibaba Qwen 3.5 | Up to 60% lower cost | No independent benchmark in reporting window | Reuters, Feb 16, 2026 | Company-reported |

| Alibaba Qwen 3.5 | Up to 8x workload capacity | Not independently validated | Reuters, Feb 16, 2026 | Definition of workload unspecified |

For CTOs and ML engineers, the relevant question is not whether cost improvements are possible, but whether they are reproducible under enterprise-scale workloads. AI inference economics depend on stable throughput under realistic session lengths and concurrency constraints.

The broader risk is benchmarking opacity. When vendors publish performance improvements without standardized context, cross-model economic comparison becomes speculative. Inference cost must therefore be analyzed at the system level, including memory footprint and hardware constraints, not just token pricing.

Long Context Scaling and KV Cache Constraints

Long-context scaling has become one of the most visible performance signals in early 2026. In mid-February, Reuters reported that DeepSeek expanded its chatbot context window from 128,000 tokens to 1,000,000 tokens, allowing book-length inputs within a single session.

From a user perspective, a one million token window suggests fewer retrieval steps and more coherent long-form reasoning. From an engineering perspective, it fundamentally alters memory requirements and inference cost dynamics.

Why 1M Tokens Is Not Just a Feature

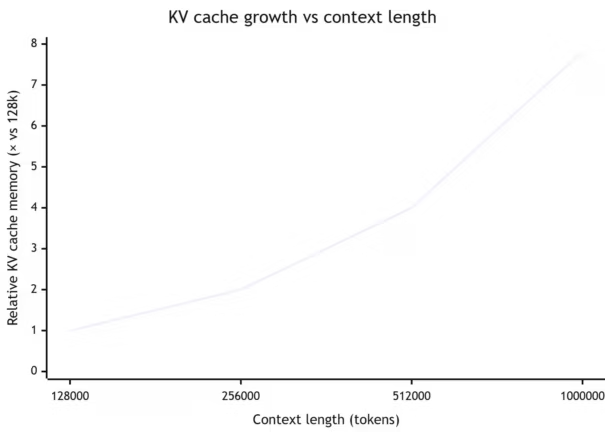

Transformer-based models store key and value tensors for each processed token in the KV cache during inference. As context length increases, KV cache memory grows approximately linearly with the number of tokens retained in attention.

Moving from 128K to 1M tokens represents nearly an eightfold increase in stored context. Even with quantization and memory optimization techniques, the VRAM requirement per active session increases substantially. This directly affects:

- Maximum batch size per GPU

- Concurrent session capacity

- Latency stability under load

The engineering trade-off is straightforward. Larger context windows reduce the need for aggressive document chunking or external retrieval in some workflows. However, they increase memory pressure and may reduce tokens per second if hardware constraints are reached.

In AI Inference Economics 2026, long context is therefore not a marketing feature. It is a cost multiplier tied to GPU memory configuration and session concurrency.

Throughput Trade-Offs and Multi-Agent Workflows

Agentic AI systems often operate as multi-step workflows rather than single exchanges. An agent may retrieve data, generate intermediate reasoning steps, call tools, and produce structured outputs. Each step consumes tokens and may extend session length.

When long context is combined with multi-agent orchestration, two constraints emerge:

- Sustained token throughput per GPU

- Memory allocation per concurrent workflow

Higher context ceilings can simplify reasoning chains, but they also reduce effective concurrency if VRAM becomes the limiting factor. In high-density inference clusters, this trade-off influences cost per task and overall infrastructure efficiency.

For teams evaluating coding agents and orchestration pipelines, the practical question becomes whether to prioritize long context or retrieval-augmented generation, RAG, strategies. We explore real-world execution patterns and trade-offs in AI coding agents: the reality on the ground beyond benchmarks.

In short, context length, KV cache scaling, and multi-agent concurrency form a tightly coupled economic system. Long context can improve capability, but it must be budgeted at the infrastructure level.

Open-Weight vs Closed API: Strategic Divergence

In 2026, AI inference economics are increasingly shaped by a structural divergence between open-weight deployment and closed API consumption. This divergence affects cost visibility, compliance control, and infrastructure burden.

Open-weight models, such as those distributed by Alibaba with Qwen 3.5, can be deployed on internal infrastructure. Closed API models, typical of major US providers, abstract infrastructure behind managed services. The economic comparison extends beyond token pricing into governance, scaling, and operational complexity.

Control, Compliance, and Infrastructure Burden

Open-weight deployment offers direct control over data residency, logging, and model behavior tuning. Enterprises operating in regulated sectors may prefer this approach to maintain auditability and minimize external data transfer.

However, this control shifts responsibility to internal teams. Organizations must manage:

- GPU provisioning and maintenance

- Memory allocation and scaling

- Security hardening and access controls

- Model updates and compatibility

Closed API models reduce operational complexity but increase dependency on vendor pricing, availability, and policy changes. The cost per token is transparent, yet the underlying infrastructure decisions remain opaque.

| Dimension | Open-Weight Deployment | Closed API Model |

|---|---|---|

| Infrastructure Control | Full internal control | Vendor-managed |

| Data Residency | Configurable on-prem or private cloud | Dependent on provider |

| Cost Structure | Hardware + operations | Usage-based token pricing |

| Scalability | Limited by internal cluster capacity | Scales with provider infrastructure |

| Operational Complexity | High | Lower for integration teams |

This matrix illustrates that AI inference economics are not purely numerical. They are organizational and architectural.

China’s Acceleration: Qwen, Doubao, DeepSeek

Recent reporting by Reuters highlights rapid iteration across Chinese model ecosystems, including Alibaba’s Qwen 3.5 and ByteDance’s Doubao 2.0. These releases emphasize open-weight availability combined with hosted services, creating a hybrid distribution strategy.

DeepSeek’s expansion to a 1M token context window reinforces this positioning. Larger context combined with downloadable weights increases enterprise flexibility, particularly for organizations prepared to invest in infrastructure.

This acceleration does not automatically imply superior performance. Company-reported cost and throughput claims require independent validation. However, it demonstrates a strategic emphasis on deployability and scale, aligning open-weight economics with agentic workflow growth.

Security and orchestration concerns remain central in both deployment models. For a detailed analysis of containment and agent governance frameworks, see Securing agentic AI: the MCP ecosystem and smolagents against terminal chaos.

Market Repricing and the Economics of Agents

In early February 2026, Reuters reported a significant selloff in global software stocks tied to concerns that AI agents could disrupt traditional SaaS revenue models. The reaction reflects investor expectations that agentic AI will alter workflow economics, not just user interfaces.

According to Reuters coverage of the software market reaction, fears center on the idea that multi-step agents may absorb tasks previously monetized through per-seat subscriptions. This market signal is not proof of revenue decline, but it is evidence of repricing risk.

Agentic systems differ from conversational copilots because they execute coordinated sequences of actions. An agent can draft, revise, retrieve, and integrate outputs across tools in a single workflow. This reduces friction between applications and potentially reduces dependency on intermediate coordination software.

From an economic perspective, the shift introduces three pressures:

- Reduced marginal value of seat-based pricing

- Increased importance of API-level integration

- Greater emphasis on orchestration reliability and execution traceability

It is essential to distinguish confirmed reporting from extrapolation. Reuters documents equity movement and investor commentary, not verified declines in SaaS adoption or earnings.

For enterprises, the structural question is how to reposition value. If execution shifts toward agent-driven automation, differentiation may move toward domain specialization, workflow customization, and compliance-layer tooling rather than general-purpose productivity interfaces.

This repricing dynamic connects directly to AI inference economics. If agents increase token throughput per user while reducing seat counts, cost structures must be recalibrated around compute consumption rather than user licensing.

What AI Inference Economics 2026 Means for Decision-Makers

AI Inference Economics 2026 is not a theoretical debate about model quality. It is a structural question about capital allocation, memory constraints, and workflow automation economics.

For CTOs and infrastructure leaders, the >$600B hyperscaler spending reported by Reuters signals sustained expansion of accelerator clusters and data center capacity. Planning must therefore account for long-context memory pressure, persistent agent workloads, and sustained GPU utilization rather than short interactive bursts.

For ML engineers, the key constraint is no longer only model accuracy. It is throughput under realistic concurrency. KV cache growth, batch size trade-offs, and context-length decisions directly shape cost per task. Long context may reduce retrieval complexity, but it increases memory and hardware demands.

For product and enterprise strategists, the repricing of software markets reflects a deeper shift. If agents execute workflows across multiple systems, value migrates toward orchestration reliability, domain adaptation, and governance layers. Token pricing alone does not determine competitiveness, execution efficiency does.

AI Inference Economics 2026 therefore sits at the intersection of infrastructure scale, cost transparency, and deployment architecture. As agentic systems move from assistive tools to execution layers, the economic model of AI becomes inseparable from hardware density and systems engineering discipline.

The next 18 to 24 months will test whether vendor-reported cost reductions and long-context expansions translate into reproducible enterprise performance. Until independent benchmarks and sustained production metrics are widely available, inference economics must be evaluated cautiously, at the system level rather than the marketing layer.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!