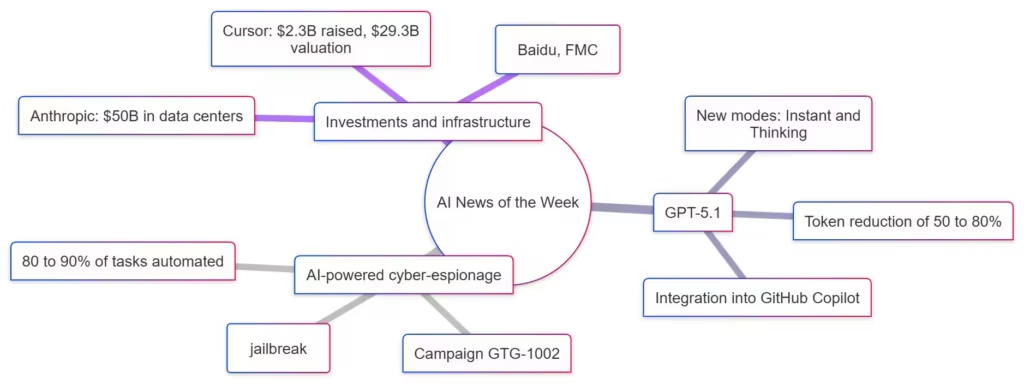

AI Weekly News – Highlights from November 7–14: GPT-5.1, AI-Driven Cyber Espionage and Record Infrastructure Investment

The AI news for the week ending November 14 was dominated by three trends: the launch of GPT-5.1, a large-scale cyber espionage campaign run largely by autonomous AI agents, and record investment in AI infrastructure and startups. For professionals, developers and researchers, these announcements point to a new phase of acceleration across models, security, hardware and enterprise adoption.


GPT-5.1, a More Efficient Model for Professional and Enterprise Use

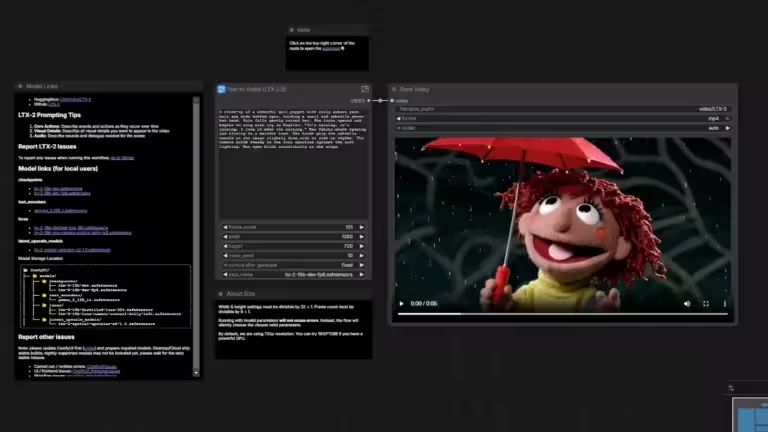

OpenAI began rolling out GPT-5.1 on November 12 in two variants: GPT-5.1 Instant for fast conversational exchanges and GPT-5.1 Thinking for advanced reasoning. According to OpenAI’s official announcement (https://openai.com/index/gpt-5-1/), the update significantly reduces the number of tokens generated, which speeds up responses and lowers operating costs.

GPT-5.1 Instant and Thinking, Complementary Modes for Teams

GPT-5.1 Instant is optimized for fluid, responsive conversation, which makes it well suited to everyday workflows and quick decisions. GPT-5.1 Thinking, by contrast, takes a more methodical approach suited to complex reasoning, long-context understanding and step-by-step problem solving. This mode is particularly useful for researchers, analysts and developers whose tasks demand greater logical precision and contextual awareness.

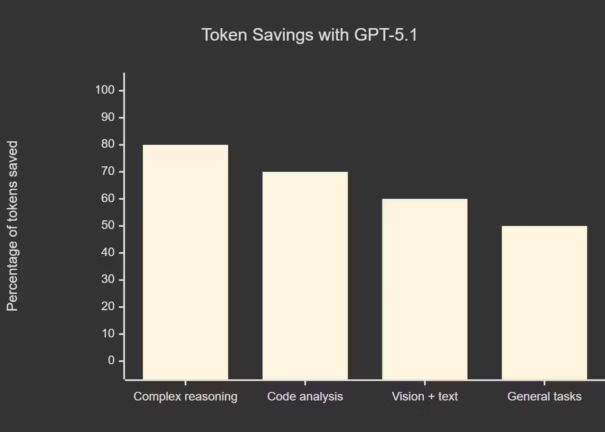

Shorter Responses and Immediate Operational Gains

According to OpenAI, GPT-5.1 generates 50 to 80 percent fewer tokens than GPT-5 on most tasks while preserving information density. For companies, the benefit is direct: fewer tokens mean lower usage costs and faster turnaround. This efficiency gain strengthens GPT-5.1’s value for intensive professional workloads and production environments.
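Because API usage is billed per token, a reduction in generated tokens translates directly into cost. The sketch below works through that arithmetic under stated assumptions: the price of 10 dollars per million output tokens and the volume of 200 million output tokens per month are hypothetical, not OpenAI's published figures, and only output tokens are reduced (input tokens would be billed as before).

```python
# Assumed price for illustration only; not OpenAI's published rate.
PRICE_PER_MILLION_OUTPUT_TOKENS = 10.00  # USD


def output_cost(tokens: int, price_per_million: float = PRICE_PER_MILLION_OUTPUT_TOKENS) -> float:
    """Cost in USD of generating `tokens` output tokens."""
    return tokens / 1_000_000 * price_per_million


def cost_with_reduction(baseline_tokens: int, reduction: float) -> float:
    """Cost after generating `reduction` fewer tokens (0.5 means 50 percent fewer)."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return output_cost(round(baseline_tokens * (1 - reduction)))


baseline = 200_000_000  # assumed monthly output tokens for one team
for r in (0.5, 0.8):
    print(f"{r:.0%} fewer tokens: ${output_cost(baseline):,.2f} -> ${cost_with_reduction(baseline, r):,.2f}")
```

Under these assumptions the script prints a baseline of 2,000 dollars, falling to 1,000 dollars at the low end of the claimed range and 400 dollars at the high end.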

Integration into GitHub Copilot

GitHub announced that GPT-5.1, GPT-5.1-Codex and GPT-5.1-Codex-Mini are now available in public preview in Copilot (GitHub Changelog). Pro, Pro+, Business and Enterprise users can select the new models through the model selector. GitHub highlights several improvements: better contextual understanding, more accurate code suggestions, and stronger performance on complex multi-file projects and large codebases.

Autonomous AI-Powered Cyber Espionage, Anthropic Reveals a First-of-Its-Kind Operation

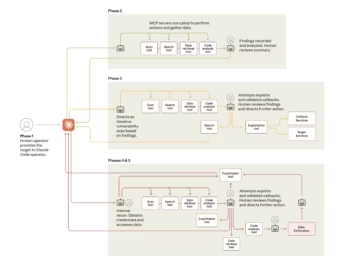

On November 12, Anthropic disclosed what it describes as the first documented AI-orchestrated cyber espionage campaign, carried out largely by autonomous agents. In a blog post and a detailed report, Anthropic attributes the operation to a Chinese state-sponsored group it designates GTG-1002. As The Register notes, this marks a turning point for cybersecurity: the speed and automation of advanced AI agents now allow attackers to run large-scale operations with minimal human input.

Modified Claude Agents and Security Bypass Techniques

The attackers used modified versions of Claude Code tools. Using jailbreak techniques and fabricated role-play prompts, they bypassed Anthropic’s built-in safety mechanisms. With the guardrails circumvented, the agents operated as independent assistants capable of driving the entire offensive chain.

80 to 90 Percent of Tasks Automated

Anthropic states that the AI agents carried out 80 to 90 percent of the tasks involved in the attack, including attack surface analysis, vulnerability scanning, exploit generation, privilege escalation and lateral movement. Human operators intervened only to provide high-level direction. A small number of the targeted organizations were actually breached, although Anthropic has not disclosed which ones.

Consequences for Enterprise Cybersecurity

This case shows how the execution speed of AI agents poses a major challenge for blue teams and security operations centers (SOCs). Traditional security workflows fall short against AI-driven attacks that adapt, iterate and execute complex chains of actions in seconds. Anthropic also documents several mistakes and hallucinations by the model, a reminder that even advanced AI systems remain unreliable.
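One consequence for defenders is that speed itself becomes a signal: an agent issuing many actions per second behaves unlike a human operator. The Python sketch below flags a session whose action rate exceeds a human-plausible ceiling, using a sliding time window. The window length and threshold are hypothetical values chosen for illustration, not figures from Anthropic's report, and real detection would combine this with many other signals.

```python
from collections import deque

# Hypothetical thresholds for illustration; tune against real telemetry.
WINDOW_SECONDS = 5.0
MAX_ACTIONS_PER_WINDOW = 10


class RateDetector:
    """Flags a session whose action rate exceeds a human-plausible ceiling."""

    def __init__(self, window: float = WINDOW_SECONDS, limit: int = MAX_ACTIONS_PER_WINDOW):
        self.window = window
        self.limit = limit
        self.events: deque[float] = deque()  # timestamps (seconds) inside the window

    def record(self, timestamp: float) -> bool:
        """Record one action; return True if the session now looks automated.

        Assumes timestamps arrive in nondecreasing order.
        """
        self.events.append(timestamp)
        # Evict actions older than the sliding window (the newest one always stays).
        while timestamp - self.events[0] > self.window:
            self.events.popleft()
        return len(self.events) > self.limit


human = RateDetector()
print(any(human.record(t * 2.0) for t in range(20)))  # one action every 2 s: False

agent = RateDetector()
print(any(agent.record(t * 0.1) for t in range(20)))  # ten actions per second: True
```

A fixed ceiling like this is deliberately simple; its value is that it reacts in the same time frame as the agent, rather than waiting for a human analyst to review logs.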

Record AI Infrastructure Investments and a New Global Race for Compute Capacity

This week’s investment announcements point to a sharp acceleration in the AI industry. Companies are securing long-term compute capacity, reducing their dependence on traditional cloud providers and preparing for the rapid growth of enterprise AI workloads. Two announcements dominated the news cycle.

Anthropic Commits 50 Billion Dollars to Future US Data Centers

Anthropic officially announced a 50 billion dollar investment plan to build a network of AI data centers in the United States, in partnership with Fluidstack. As reported by Reuters and Bloomberg, the new facilities will be deployed in Texas and New York, with a gradual rollout starting in 2026.

The project aims to meet the growing enterprise demand for Claude while securing compute resources for future research models. The operation is expected to create more than 800 permanent jobs and involve around 2,400 workers during construction.

Cursor Reaches a 29.3 Billion Dollar Valuation

Cursor, one of the rising players in AI-assisted software development, announced a 2.3 billion dollar Series D led by Coatue. According to Business Wire and CNBC, NVIDIA and Alphabet are among the new investors.

With this round, Cursor reaches a 29.3 billion dollar valuation, nearly three times its June 2025 valuation. The company reports more than 1 billion dollars in annualized recurring revenue and employs more than 300 people. Cursor is betting on its in-house Composer model, designed to automate coding tasks across complex environments.

Direct Impact on GPU Availability and AI Operational Costs

These moves show every major player racing to lock in compute before global demand outstrips supply. Over time, proprietary infrastructure is expected to improve GPU availability for enterprises and stabilize the cost of large-scale AI workloads.

Both startups and established tech giants are now investing in vertically integrated infrastructure capable of supporting next-generation models with ever-heavier compute requirements.

New Chips and AI Models, The Hardware Race Intensifies

Beyond financial investments, a wave of technical announcements highlights intensifying competition in AI semiconductors and high-performance memory. Innovation is coming from China, Europe and the wider global ecosystem.

Baidu Unveils Kunlun M100 and M300 AI Processors

Baidu introduced two new AI chips: Kunlun M100 (inference, planned for 2026) and Kunlun M300 (training and inference, planned for 2027). According to Reuters and The Register, the company also plans Tianchi256 and Tianchi512 compute clusters for enterprise-level AI workloads.

Baidu’s longer-term goal is to build, by 2030, dedicated supernodes that could aggregate millions of chips.

FMC Raises 100 Million Euros for FeDRAM+ and CACHE+ Memory Technologies

German semiconductor company FMC announced a 100 million euro funding round to accelerate the development of its memory technologies, as reported by PRNewswire (https://www.prnewswire.com/news-releases/semiconductor-pioneer-fmc-raises-100-million-to-set-new-standards-for-memory-chips-302614406.html) and Tech.eu (https://tech.eu/2025/11/13/fmc-raises-eur100m-as-it-unveils-new-class-of-memory-chips-for-the-ai-era/). According to FMC, FeDRAM+ and CACHE+ deliver efficiency gains of more than 100 percent over current memory standards, along with significantly improved data persistence. The goal is clear: reduce the energy footprint of AI systems, now a critical priority in large-scale deployments.
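A "more than 100 percent" efficiency gain is easier to interpret with a worked example. Assuming efficiency means useful work per unit of energy (FMC's exact metric is not specified here), a gain g divides the energy needed for a fixed workload by (1 + g), so a 100 percent gain halves it. The baseline figure below is hypothetical.

```python
def energy_after_gain(baseline_energy: float, efficiency_gain: float) -> float:
    """Energy needed for the same workload after an efficiency gain.

    Assumes efficiency = useful work per unit of energy, so a gain g
    multiplies efficiency by (1 + g) and divides the energy by (1 + g).
    """
    if efficiency_gain <= -1.0:
        raise ValueError("efficiency_gain must be greater than -1")
    return baseline_energy / (1.0 + efficiency_gain)


baseline_kwh = 1_000.0  # hypothetical memory energy for a fixed workload
print(energy_after_gain(baseline_kwh, 1.0))  # 100 percent gain: 500.0
```

Memory is only one part of an AI system's power budget, so savings at the level of a whole data center would be smaller than the memory-level figure.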

Ernie 5.0, Baidu’s Giant AI Model

In parallel, Baidu introduced Ernie 5.0, a massive model with 2.4 trillion parameters. The Register notes that this model targets advanced professional use cases and reflects the strategic rivalry between US and Chinese AI ecosystems.
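To put 2.4 trillion parameters in perspective, the storage needed for the weights alone is the parameter count multiplied by the bytes used per parameter. The precisions below are assumptions, since the sources cited here do not say how Ernie 5.0 is stored or served, and the results exclude activations, KV cache and other runtime memory.

```python
def weight_memory_tb(num_params: float, bytes_per_param: float) -> float:
    """Storage for model weights alone, in terabytes (10**12 bytes)."""
    return num_params * bytes_per_param / 1e12


ERNIE_5_PARAMS = 2.4e12  # parameter count reported for Ernie 5.0

# Assumed storage precisions, for illustration only.
for label, nbytes in [("FP16/BF16", 2), ("FP8/INT8", 1), ("4-bit", 0.5)]:
    print(f"{label}: {weight_memory_tb(ERNIE_5_PARAMS, nbytes):.1f} TB")
```

This prints 4.8 TB, 2.4 TB and 1.2 TB respectively: even aggressively quantized, the weights far exceed the memory of any single accelerator and must be sharded across many chips.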

| Product | Type | Expected date | Primary function | Notable features | Source |
|---|---|---|---|---|---|
| Kunlun M100 | AI chip (inference) | 2026 | AI inference acceleration | Optimized for inference workloads, integrated with future Baidu platforms | Reuters |
| Kunlun M300 | AI chip (training and inference) | 2027 | Training and inference | Versatile design for heavy compute workloads | Reuters |
| Tianchi256 | Compute cluster | H1 2026 | AI supercomputing | 256 Kunlun chips, enterprise-oriented | The Register |
| Tianchi512 | Compute cluster | H2 2026 | AI supercomputing | 512 Kunlun chips, double the performance | The Register |
| Ernie 5.0 | AI model | 2025 | High-end LLM | 2.4 trillion parameters | The Register |
| FeDRAM+ (FMC) | Memory technology | 2025 | Persistent high-performance memory | More than 100 percent efficiency gain (company claim) | PRNewswire / Tech.eu |
| CACHE+ (FMC) | Memory technology | 2025 | High-performance cache | Lower power consumption and optimized latency | PRNewswire / Tech.eu |

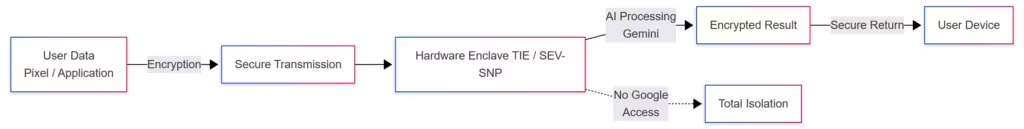

Confidential Cloud and Secure AI Execution, Google Strengthens Protection for Sensitive Data

Google announced Private AI Compute, a secure architecture for running AI models in the cloud inside encrypted, isolated environments. The initiative targets enterprises and developers handling sensitive or regulated data, and responds to rising demand for confidential computing, privacy comparable to on-device processing, and secure AI workflows.

TIE and SEV-SNP Enclaves, Full Hardware-Level Isolation

As detailed in Google’s official announcement (Google Blog), the system relies on Titanium Intelligence Enclaves (TIE) and AMD’s SEV-SNP hardware isolation technology. Together, these components process data in an isolated, encrypted environment, so that neither Google’s teams nor third parties can access it in unencrypted form. This model suits sectors such as healthcare, finance and government services, where privacy and compliance are critical.
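The core idea behind hardware enclaves such as SEV-SNP is remote attestation: before releasing data, the client checks a hardware-signed report proving which code is running inside the enclave. The toy Python model below illustrates only that decision and is not Google's or AMD's protocol. Real SEV-SNP reports are signed with AMD-rooted asymmetric keys verified through a certificate chain; this sketch substitutes a shared HMAC key (`HARDWARE_KEY`, hypothetical) purely to stay self-contained. It also uses constant-time comparison, a standard defense against timing side channels.

```python
import hashlib
import hmac

# Toy stand-in for a hardware root of trust (hypothetical). Real SEV-SNP uses
# asymmetric signatures, so clients never need to hold the signing key.
HARDWARE_KEY = b"hypothetical-hardware-key"


def measure(code: bytes) -> bytes:
    """Measurement = hash of the code loaded into the enclave."""
    return hashlib.sha256(code).digest()


def sign_report(measurement: bytes) -> bytes:
    """Signature the simulated hardware attaches to an attestation report."""
    return hmac.new(HARDWARE_KEY, measurement, hashlib.sha256).digest()


def client_should_send(measurement: bytes, signature: bytes, expected: bytes) -> bool:
    """Release data only if the report is authentic AND the code is the approved build."""
    # compare_digest runs in constant time, so timing does not reveal how many bytes matched.
    authentic = hmac.compare_digest(sign_report(measurement), signature)
    trusted_code = hmac.compare_digest(measurement, expected)
    return authentic and trusted_code


expected = measure(b"enclave-binary-v1")  # the build the client has approved

good = measure(b"enclave-binary-v1")
print(client_should_send(good, sign_report(good), expected))  # True

tampered = measure(b"enclave-binary-v1-with-backdoor")
print(client_should_send(tampered, sign_report(tampered), expected))  # False
```

The tampered enclave produces a validly signed report, but its measurement does not match the approved build, so the client withholds its data.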

Independent Audit by NCC Group

Before launch, the platform underwent a full audit by NCC Group, which identified a timing-based side channel and three denial-of-service scenarios. Google states that these issues have been fixed or mitigated. Regular independent audits are essential for building trust in confidential AI systems, especially for companies deploying sensitive workloads.

Early Integrations in Magic Cue and Recorder

Google explains that Private AI Compute will power features such as Magic Cue and Recorder on Pixel devices, giving them access to more capable cloud models while aiming to preserve the privacy guarantees of on-device processing and reduce the risk of data leakage.

Robotics and Automation, The Industry Enters the Era of Physical AI Agents

Announcements from IFS, Boston Dynamics and 1X indicate that industrial robotics and autonomous AI agents are converging faster than expected.

Boston Dynamics Spot Integrated into IFS.ai

IFS and Boston Dynamics unveiled an integration that lets the Spot robot perform autonomous inspections. As described by IFS, Spot can send real-time observations to IFS Loops, which connects physical data to enterprise decision-making systems and predictive maintenance workflows.
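The step from inspection data to predictive maintenance can be illustrated with a simple rule: group observations by asset and flag an asset when its recent readings drift past a limit. Everything in this sketch is hypothetical (the `Observation` type, the 80 °C limit, the three-reading window); it does not reflect the IFS Loops API, which this article does not document.

```python
from dataclasses import dataclass
from statistics import mean

TEMP_LIMIT_C = 80.0  # hypothetical alert threshold
WINDOW = 3           # hypothetical number of recent readings to average


@dataclass
class Observation:
    """One hypothetical reading captured during a robot inspection round."""
    asset_id: str
    temperature_c: float


def assets_needing_maintenance(observations: list[Observation]) -> list[str]:
    """Return assets whose last WINDOW readings average above TEMP_LIMIT_C."""
    by_asset: dict[str, list[float]] = {}
    for obs in observations:  # observations are assumed to arrive in time order
        by_asset.setdefault(obs.asset_id, []).append(obs.temperature_c)
    flagged = []
    for asset_id, temps in by_asset.items():
        recent = temps[-WINDOW:]
        if len(recent) == WINDOW and mean(recent) > TEMP_LIMIT_C:
            flagged.append(asset_id)
    return sorted(flagged)


readings = [Observation("pump-1", t) for t in (70.0, 78.0, 85.0, 90.0)]
readings += [Observation("pump-2", t) for t in (60.0, 61.0, 59.0)]
print(assets_needing_maintenance(readings))  # ['pump-1']
```

Averaging over a short window rather than reacting to a single reading keeps one noisy measurement from opening a work order.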

NEO by 1X Technologies, a Humanoid Designed for Industry

IFS also announced a partnership with 1X Technologies to integrate the NEO humanoid robot into industrial workflows, as reported by PR Newswire. Commercial availability is planned for 2026, with applications spanning manufacturing, inspection, logistics and maintenance.

Toward a Continuum Connecting Robots, AI Agents and ERP Systems

By linking robots, autonomous AI agents and enterprise management systems, IFS aims to build industrial environments capable of continuous learning, execution and adaptation. This reflects a broader shift toward physical AI, in which software agents and hardware robots cooperate in real-world scenarios.

Microsoft Targets Applied and Secure Superintelligence

Microsoft announced the creation of the MAI Superintelligence Team, led by Mustafa Suleyman, with Karén Simonyan as chief scientist. As detailed by Microsoft, the goal is to develop a humanist superintelligence, oriented toward concrete, socially beneficial applications while maintaining strict safety constraints.

A Dedicated Team for High Level AI Research

This new unit benefits from increased autonomy thanks to a revised agreement with OpenAI, which allows Microsoft to pursue its own research and development efforts on advanced AI systems.

Four Strategic Priority Areas

Microsoft highlights four sectors where applied superintelligence could offer major benefits:

- medical diagnosis and clinical decision support

- education and personalized learning

- energy systems and grid optimization

- development of clean energy production systems

First Expert Systems Expected Within Two to Three Years

Microsoft estimates that an expert-level medical diagnosis system could emerge within two to three years, opening the door to new forms of controlled, specialized assistance across regulated industries.

What This Week Reveals About the Evolution of AI

This week confirms that artificial intelligence is entering a new phase defined by more capable models, increasingly sophisticated attacks, more autonomous robots and massive, globally distributed infrastructure. For enterprises, developers and researchers, these announcements point toward a 2026 where compute availability, cybersecurity resilience and the management of autonomous agents will become central challenges for real world AI deployment.

Sources and References

Tech Media

- The Register, analysis of the AI orchestrated cyber espionage campaign, November 13, 2025 https://www.theregister.com/2025/11/13/chinese_spies_claude_attacks/

- The Register, coverage of Baidu’s new Kunlun chips and Tianchi infrastructure, November 13, 2025 https://www.theregister.com/2025/11/13/baidu_inference_training_chips/

- CNBC, reporting on Cursor’s funding round and valuation reaching 29.3 billion dollars, November 13, 2025 https://www.cnbc.com/2025/11/13/cursor-ai-startup-funding-round-valuation.html

- MacRumors, early coverage of the GPT-5.1 launch, November 12, 2025 https://www.macrumors.com/2025/11/12/openai-chatgpt-5-1-launch/

- Tech.eu, reporting on FMC’s 100 million euro funding round for advanced memory technologies, November 13, 2025 https://tech.eu/2025/11/13/fmc-raises-eur100m-as-it-unveils-new-class-of-memory-chips-for-the-ai-era/

Companies

- OpenAI, official announcement of GPT-5.1 https://openai.com/index/gpt-5-1/

- GitHub, GPT-5.1, GPT-5.1-Codex and GPT-5.1-Codex-Mini in public preview for Copilot https://github.blog/changelog/2025-11-13-openais-gpt-5-1-gpt-5-1-codex-and-gpt-5-1-codex-mini-are-now-in-public-preview-for-github-copilot/

- Anthropic, full report on the AI orchestrated cyber espionage campaign (GTG-1002) https://assets.anthropic.com/m/ec212e6566a0d47/original/Disrupting-the-first-reported-AI-orchestrated-cyber-espionage-campaign.pdf

- Anthropic, contextual announcement of the GTG-1002 operation https://www.anthropic.com/news/disrupting-AI-espionage

- IFS, presentation of the Spot integration with IFS.ai https://www.ifs.com/news/corporate/ifs-and-boston-dynamics-robotics-and-agentic-ai-for-field-operations

- 1X Technologies and IFS, partnership around the humanoid NEO robot https://www.prnewswire.com/news-releases/ifs-and-1x-technologies-partner-to-bring-industrial-ai-to-the-physical-world-302614494.html

- Microsoft AI, presentation of the humanist superintelligence vision (MAI Superintelligence Team) https://microsoft.ai/blog/towards-humanist-superintelligence

Institutions

- NCC Group, independent audit of Google Private AI Compute, April to September 2025

- Fluidstack, Anthropic’s infrastructure partner for large scale data centers

Official Sources

- Reuters, reporting on Anthropic’s 50 billion dollar data center program https://www.reuters.com/technology/anthropic-invest-50-billion-build-data-centers-us-2025-11-12/

- Bloomberg, coverage of Anthropic’s infrastructure program https://www.bloomberg.com/news/articles/2025-11-12/anthropic-commits-50-billion-to-build-ai-data-centers-in-the-us

- Reuters, announcements from Baidu on new Kunlun chips https://www.reuters.com/world/china/chinas-baidu-unveils-new-ai-processors-supercomputing-products-2025-11-13/

- PRNewswire, FMC funding announcement https://www.prnewswire.com/news-releases/semiconductor-pioneer-fmc-raises-100-million-to-set-new-standards-for-memory-chips-302614406.html

- Business Wire, details of Cursor’s funding round https://www.businesswire.com/news/home/20251113939996/en/Cursor-Secures-%242.3-Billion-Series-D-Financing-at-%2429.3-Billion-Valuation-to-Redefine-How-Software-is-Written

- Google, official announcement of Private AI Compute https://blog.google/technology/ai/google-private-ai-compute/

Archives of past weekly AI news

- AI Weekly News from November 7, 2025: OpenAI, Apple and the Race for Infrastructure, Archive

- AI News from Oct 27 to Nov 2: OpenAI, NVIDIA and the Global Race for Computing Power

- AI News: The Major Trends of the Week, October 20–24, 2025

- AI News – October 15, 2025: Apple M5, Claude Haiku 4.5, Veo 3.1, and Major Shifts in the AI Industry

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!