Claude Haiku 4.5 and the new wave of efficient AI

Artificial intelligence is no longer the domain of massive, expensive frontier models. With Claude Haiku 4.5, Anthropic opens a new chapter in AI development: the era of efficient AI models, designed to deliver high-level performance at a fraction of the cost.

In a world where AI agents, automation, and LLM-assisted programming are multiplying, the need to balance power, speed, and cost efficiency has become crucial. The challenge is no longer just accuracy, but economic efficiency in large-scale AI operations.

What is “efficient AI”?

An efficient AI model is not necessarily the most powerful one, but the one that does more with fewer resources. It combines execution speed, reasoning quality, and optimized cost per request.

Performance, cost, and latency: finding the new balance

Historically, AI models have followed a simple trajectory: bigger and more expensive. But that approach has reached its limits. Each call to a large model like GPT-5 or Claude 4.5 can consume hundreds of tokens, or even more when dealing with autonomous AI agents performing iterative tasks.

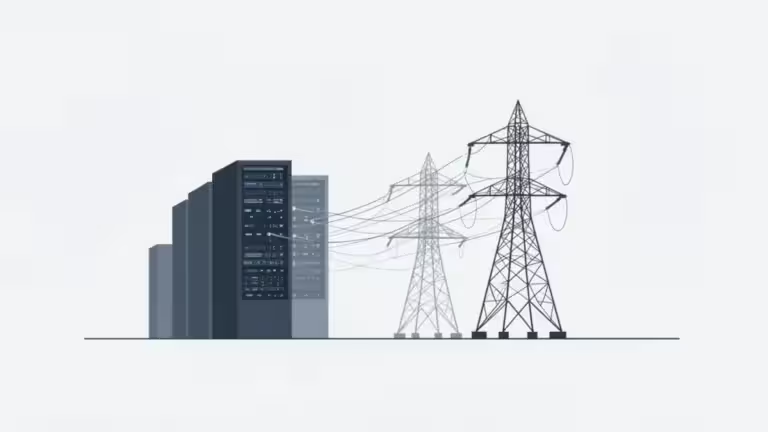

With the explosion of use cases, personal assistants, chatbots, code generation, automated research, the number of API calls has skyrocketed. Companies deploying large-scale automation workflows through such models see their cloud bills spiral upward, prompting a growing focus on AI cost efficiency: reducing expenses without sacrificing quality.

From heavyweight to optimized models

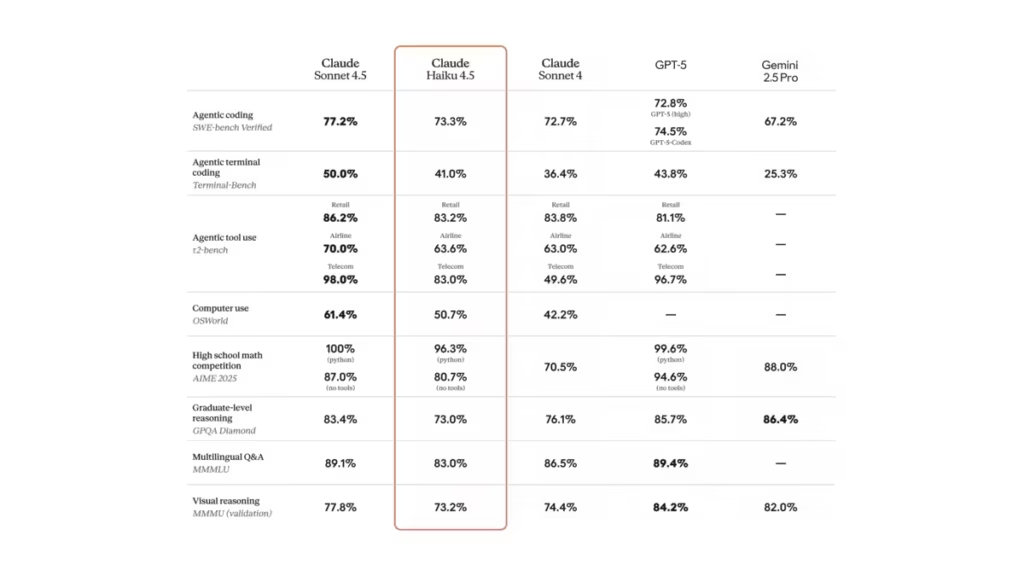

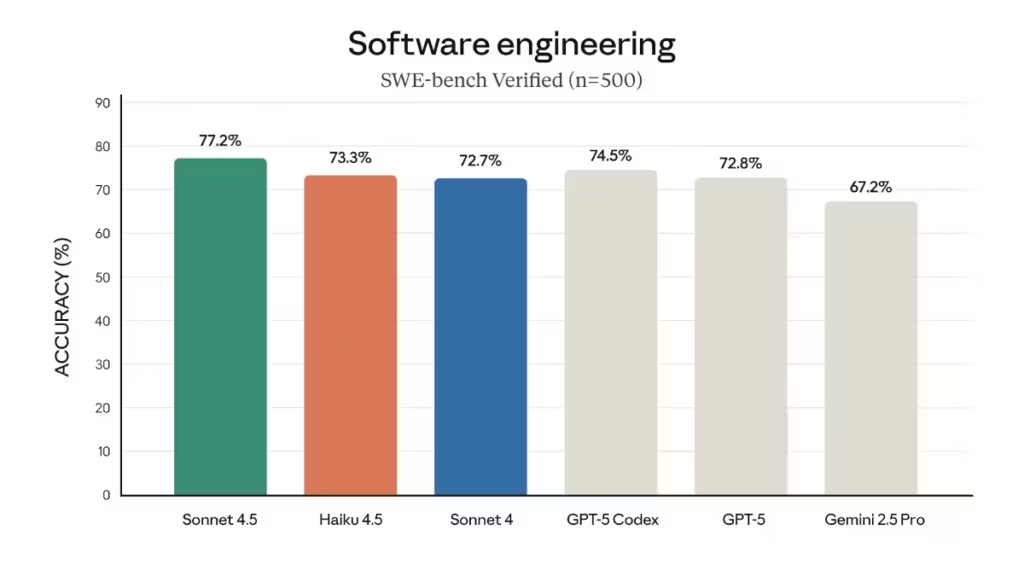

A new generation of “small but powerful” models has emerged: compact architectures that perform nearly as well as top-tier models while being faster and cheaper. Claude Haiku 4.5 perfectly illustrates this trend, offering 90% of Claude Sonnet 4.5’s performance at one-third of the cost and twice the speed (Anthropic, 2025).

Why efficiency matters for businesses and developers

For companies, this evolution is strategic. An efficient model allows teams to automate more tasks, summarization, monitoring, code analysis, or content generation, while maintaining control over budgets. It also helps reduce dependency on the cloud, as more organizations migrate toward open-source LLMs hosted on-premise for greater autonomy and data privacy.

Claude Haiku 4.5: performance and positioning

Launched in October 2025, Claude Haiku 4.5 is the fastest and most capable model in Anthropic’s Haiku line. It stands out for its near-frontier intelligence, record-breaking speed, and exceptional energy efficiency.

Frontier-level performance at lower cost

According to Anthropic, Haiku 4.5 scores 73.3% on the SWE-bench Verified benchmark, a key indicator of real-world programming ability. This places it just behind Claude Sonnet 4.5, widely considered the best coding model available. It also runs four to five times faster than Sonnet on reasoning and editing tasks, while costing one-third as much ($1/$5 per million tokens).

This balance makes Haiku 4.5 an ideal solution for developers and high-volume workloads such as pair programming, rapid prototyping, code analysis, and multi-agent workflows.

Built for real-time and low-latency tasks

Haiku 4.5 is optimized for interactive and low-latency use cases. In Claude for Chrome or Claude Code, users experience smoother sessions and instant responsiveness. Its integration into GitHub Copilot confirms its purpose: a fast and cost-effective AI model for everyday coding.

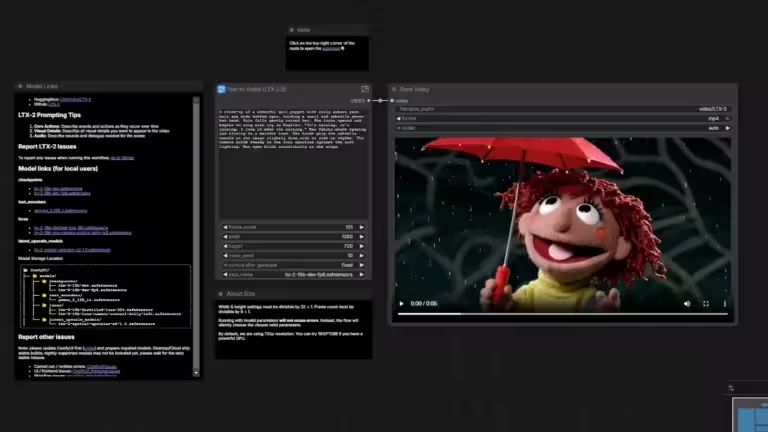

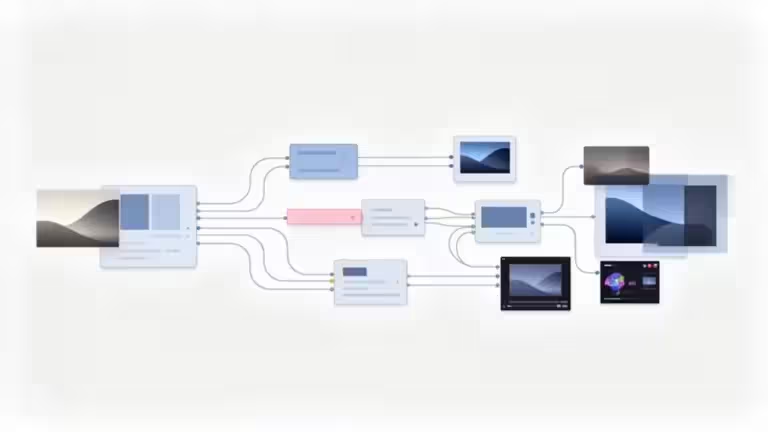

Designed for multi-agent architectures

Anthropic is also pioneering multi-agent orchestration, where Sonnet 4.5 acts as a planner delegating subtasks to multiple Haiku 4.5 instances running in parallel (Anthropic, 2025). This approach is central to modern AI systems, enabling faster workflows at lower cost. Instead of one large model handling everything, a cluster of smaller Haiku 4.5 agents can execute in parallel, delivering results more quickly and economically.

Why “small but powerful” AI models are shaping the future

The optimal trade-off between cost and latency

Efficiency is no longer just about accuracy; it’s about achieving the right balance between performance, cost, and speed. Haiku 4.5 embodies this principle, offering sufficient intelligence for most use cases while remaining extremely affordable. These mid-sized models are becoming the default choice for operational AI, powering internal assistants, automation pipelines, document analysis, and code refactoring at scale.

Scalability and industrialization of AI

In an ecosystem where AI agents proliferate, every additional task adds cost. A model that’s three times cheaper makes large-scale deployments far more feasible. For AI platforms, this unlocks massive applications such as customer support, automated testing, content generation, and sub-agent orchestration.

Smarter and lighter architectures

This wave of efficient AI is driven by techniques such as distillation, quantization, and hybrid architectures (Mixture of Experts). The goal is to reduce model size without sacrificing reasoning ability. Haiku 4.5 represents this philosophy perfectly, a lightweight model capable of outperforming premium systems in specific tasks (Reddit).

This pursuit of efficiency extends beyond AI models to the hardware level. NVIDIA, for example, introduced the NVFP4 format, a 4-bit precision standard designed to accelerate inference and training while reducing energy consumption and memory usage. This kind of innovation reflects the same mindset as Claude Haiku 4.5: accepting a small loss in precision for massive gains in speed and cost-efficiency. It’s a defining trend across the industry, where raw performance is slowly giving way to computational efficiency.

The advantages, limits, and risks of Claude Haiku 4.5

Advantages: speed, cost, and versatility

Early feedback from Reddit and GitHub users is almost unanimous: Claude Haiku 4.5 excels where it matters, speed and affordability. Developers report noticeably smoother sessions, even on complex tasks, with significantly lower token consumption (Reddit).

This gain in efficiency translates to:

- lower latency, ideal for real-time agents

- three times lower cost than Sonnet 4 with similar output quality

- scalability across APIs and production environments

- an outstanding price-to-performance ratio for high-volume workloads

For many companies, this combination is a turning point, making it possible to automate hundreds of requests per minute without exploding the budget. In coding, customer service, or AI-driven monitoring, Haiku 4.5 stands out as a cost-effective production model.

Limitations: complex reasoning and long-context handling

However, efficiency comes with trade-offs. Claude Haiku 4.5 remains a “small” model: excellent for single tasks, but weaker at long-term reasoning or projects requiring deep contextual memory. Some users report loss of coherence during extended sessions or multi-file coding projects, where Sonnet 4.5 maintains a better global perspective (Reddit).

In addition, testers have noticed context instability in large multi-agent systems where several Haiku 4.5 instances interact simultaneously. These cases are rare but highlight the challenge of orchestrating many fast models without proper supervision.

Risks: hidden costs and standardization

The irony is that a “cheaper” AI can actually cost more, by encouraging overuse. In automated systems where agents run continuous loops, even a low-cost model can generate significant expenses. This apparent efficiency requires careful governance: token quotas, task prioritization, and a balance between lightweight and powerful models.

Another risk is market uniformity. If all providers converge toward similar mid-sized architectures, innovation could stagnate. The industry may end up with a generation of fast yet homogenized models, reducing creative diversity in AI design.

Perspectives: toward more efficient and distributed AI

The rise of hybrid architectures

The future looks hybrid. Anthropic is already demonstrating it: Sonnet 4.5 plans tasks, while a swarm of Haiku 4.5 models executes them. This combination of a strategic brain and operational arms may become the new norm. Organizations will build modular systems where each AI plays a distinct role, planning, execution, or verification, according to cost and capability.

Toward local and distributed AI

Amid cost pressure and growing privacy concerns, open-source models are gaining traction. LLMs like Qwen 3 Next, DeepSeek V3.2, and Mistral Large are increasingly deployed on in-house servers, providing control and independence from cloud providers.

Claude Haiku 4.5, thanks to its API efficiency and modularity, fits naturally into this shift toward distributed infrastructure, an AI that’s fast, adaptable, and deployable almost anywhere.

A new era of computational sobriety

We are entering an age where computational sobriety becomes a key innovation metric. Models like Haiku 4.5 prove that progress is no longer about size, but about optimizing every token. This “efficient AI” mindset could shape the next generation of models, practical, specialized assistants rather than monolithic encyclopedic systems.

How to use Claude Haiku 4.5 effectively

Choose the right model for each task

- Haiku 4.5 for reactive workloads, prototyping, pair programming, or fast agents

- Sonnet 4.5 for deep analysis, complex reasoning, or multi-file coding

- A hybrid approach to balance speed and accuracy

Think in terms of architecture

A simple workflow: Sonnet 4.5 plans → Haiku 4.5 executes → Sonnet verifies. This multi-model strategy reduces overall cost while maintaining output quality.

Monitor and control costs

Set token limits per agent, centralize billing, and adjust the reasoning depth (thinking tokens) as needed. Anthropic already provides fine-grained control via the Claude API for real-time consumption tracking (Claude Docs).

Conclusion

Claude Haiku 4.5 is more than a faster, cheaper model. It represents a shift in how artificial intelligence evolves: useful, cost-efficient, and accessible, moving away from scale for the sake of scale toward measured efficiency.

In a landscape where every AI request has a cost, where agents flood APIs with calls, and where speed is strategic, Haiku 4.5 paves the way for a smarter, more modular, and efficient future. The era of efficient AI has only just begun, but as open-source and open-weight models rise, one question remains: will efficiency alone be enough to slow their unstoppable momentum?

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!