Claude Opus 4.6 vs GPT-5.3 Codex: the agentic AI duel of 2026

The market for AI coding agents in 2026 is defined by fierce competition for control of the terminal. The duel pits two distinct philosophies against each other: on one side, Anthropic’s context-driven ecosystem integration with Claude Code; on the other, OpenAI’s raw power and massive user base with GPT-5.3 Codex. Independent analyses from Gigazine estimate that Claude Code may already be involved in approximately 4% of public GitHub commits, a figure that illustrates the scale of this shift. Choosing between these tools is no longer just a question of performance; it is a question of operational reliability across a real-world development lifecycle.

Performance and benchmarks: beyond static code

The war of numbers has reached new heights. Claude Opus 4.6 now posts a score of approximately 80.8% on SWE-bench Verified, establishing itself as the reference point for resolving complex, multi-layered problems. GPT-5.3 Codex remains highly competitive in isolated tasks, and community feedback often describes it as feeling smoother for real-time interactive use within Integrated Development Environments (IDEs).

Context window and user perception

Claude’s strategic advantage lies in its massive context window and multi-file consistency. Where Codex may lose the thread in distributed architectures, Claude maintains a holistic view of the project. Codex, however, retains a responsiveness that is highly valued for real-time pair programming, despite documented instabilities during prolonged sessions.

Real-world friction: the terminal test

Beyond marketing promises, intensive usage reveals disparities in tool reliability. The ability to maintain a stable session is the new deciding factor.

- Destructive file management: GPT-5.3 Codex suffers from a recurring defect known as the “CAT pattern”, where the AI rewrites an entire file for a minor modification. This approach significantly increases the risk of corruption and token waste. In contrast, Claude Opus 4.6 prioritizes structured diffs, targeting only the specific lines of code affected.

- Surgical corrections: OpenAI is attempting to address these criticisms by introducing dedicated patch tools to limit massive rewrites.

- System instabilities: Claude Code is not without its own friction, notably reported bugs in the Bash tool under macOS and context loss during long-running tasks.
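The difference between a whole-file rewrite and a structured diff can be sketched with Python's standard `difflib` module. This is an illustration only: the 200-line file, the `app.py` path and the `unified_patch` helper are hypothetical, and the snippet does not reproduce how either agent edits files internally.

```python
import difflib


def unified_patch(original: str, modified: str, path: str = "app.py") -> str:
    """Return a unified diff that covers only the changed lines."""
    return "".join(
        difflib.unified_diff(
            original.splitlines(keepends=True),
            modified.splitlines(keepends=True),
            fromfile=f"a/{path}",
            tofile=f"b/{path}",
            n=1,  # one line of context around each change
        )
    )


# Hypothetical 200-line file in which a single line changes.
original = "".join(f"line_{i} = {i}\n" for i in range(200))
modified = original.replace("line_100 = 100\n", "line_100 = 101\n")

patch = unified_patch(original, modified)
print(patch)
print(f"full rewrite: {len(modified)} chars, patch: {len(patch)} chars")
```

For a one-line change, the patch stays a handful of lines while the rewrite resends the entire file, which is where the extra token cost and corruption risk come from.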

For a deeper dive into these technical failures, see our complete audit of agentic bugs.

Architecture and strategy: the MCP opening

The real fault line lies in interoperability. Anthropic is actively pushing the Model Context Protocol (MCP) as an open standard for agentic orchestration.

By turning the AI into an orchestrator that connects natively to data sources or monitoring tools, this protocol allows for more tightly bounded code execution. The architecture makes it possible to govern AI actions rather than grant unlimited, unsupervised access. While Codex CLI supports MCP via configuration, OpenAI currently favors a more vertically integrated and proprietary approach, which is more opaque for enterprise security audits.
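The idea of governing agent actions instead of granting blanket access can be sketched as a small allowlist gate. This is not the MCP SDK or any vendor's API: `ToolPolicy`, the tool names and the three decisions (`allow`, `ask`, `deny`) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ToolPolicy:
    """Hypothetical allowlist: which tools an agent may call, and which need sign-off."""

    allowed: frozenset[str]
    needs_approval: frozenset[str] = field(default_factory=frozenset)

    def decide(self, tool_name: str) -> str:
        if tool_name not in self.allowed:
            return "deny"  # unknown tools are rejected by default
        if tool_name in self.needs_approval:
            return "ask"  # a human must confirm before the call runs
        return "allow"


policy = ToolPolicy(
    allowed=frozenset({"read_file", "run_tests", "apply_patch"}),
    needs_approval=frozenset({"apply_patch"}),
)

for tool in ("read_file", "apply_patch", "delete_branch"):
    print(tool, "->", policy.decide(tool))
```

The deny-by-default branch is the design choice that matters here: anything not explicitly listed never reaches execution, which is also what makes the setup easier to audit.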

We have analyzed this architectural shift in detail in our guide on securing via MCP and smolagents.

The agentic economy: TCO and token burn

In 2026, the cost of AI is no longer calculated by isolated requests but by the work session. Decision-makers must choose between Anthropic’s “depth premium” and OpenAI’s “volume premium”.

Cost comparison and pricing structures (est. Feb. 2026)

| Cost Metric | Claude Opus 4.6 (Public) | GPT-5.3 Codex (est.) |
|---|---|---|
| Input (per M tokens) | $5.00 | $1.75 |
| Output (per M tokens) | $25.00 | $14.00 |
| Economic Model | Hybrid (Seat + Usage) | SaaS / API Subscription |
| Team Access | Included from $30/month | Included in Teams/Enterprise |

Note: Claude rates are based on public Anthropic data. GPT-5.3 Codex rates are estimates based on GPT-5.1 benchmarks and partner communication.

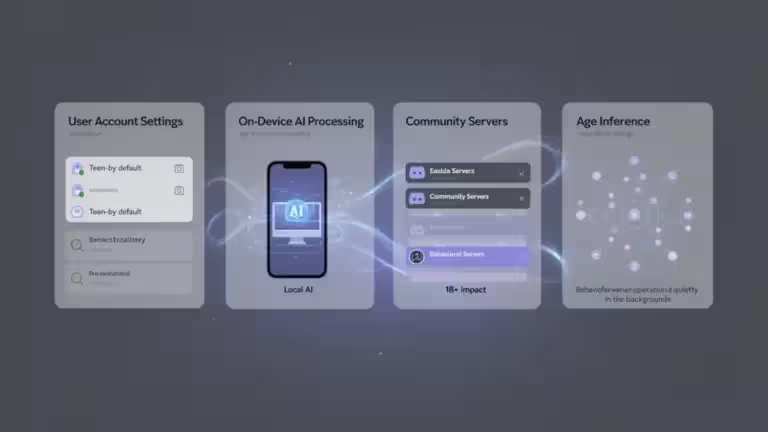

The Accenture inflection point: from speed to governance

The massive adoption of these technologies by industry leaders such as Accenture, which has deployed Claude Code to tens of thousands of its developers, marks a structural shift in the software economy. In sectors such as financial services and healthcare, the priority is no longer just “vibe coding” speed, but the ability to integrate agents into a secure and compliant SDLC.

Accenture’s partnership with Anthropic highlights a move toward transforming engineers into “supervisors” of specialized agent teams. In this model, the human developer focuses on specifying business intent and auditing diffs, while the agent handles the heavy lifting of legacy modernization and compliance mapping.

Token burn and the $20,000 session paradox

As we highlighted in our report on the ground reality of agents, one of the most significant operational challenges in the 2026 agentic landscape remains “token burn”. Autonomous agents engaged in multi-day, complex reasoning cycles can consume massive volumes of context, which makes costs highly unpredictable.

A definitive case study in this architectural overhead was documented during the development of a C compiler written in Rust by a swarm of 16 parallel Claude Opus 4.6 agents. This project, aimed at engineering a compiler capable of building the Linux 6.9 kernel from source, required nearly 2,000 Claude Code sessions over a two-week sprint. The operation reached a staggering scale, consuming 2 billion input tokens and 140 million output tokens, resulting in a total inference cost of $20,000.

For enterprise architects and lead engineers, this experiment serves as a critical proof of concept (PoC) for agentic capabilities at the 100k LoC scale. However, it also highlights that the marginal cost of inference remains the primary friction point for the economic viability of fully autonomous large-scale software engineering.
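To put the token volumes in perspective, the sketch below multiplies them by the per-million-token rates from the pricing table above. It is a naive list-price estimate only: the `list_price_cost` helper is hypothetical, the Codex rates are the article's own estimates, and real billing factors that the table does not capture are ignored.

```python
from decimal import Decimal

# Per-million-token rates copied from the pricing table in this article (USD).
RATES = {
    "claude-opus-4.6": {"input": Decimal("5.00"), "output": Decimal("25.00")},
    "gpt-5.3-codex (est.)": {"input": Decimal("1.75"), "output": Decimal("14.00")},
}
MILLION = Decimal(1_000_000)


def list_price_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Naive estimate: token counts times list rates, nothing else."""
    rate = RATES[model]
    input_cost = Decimal(input_tokens) / MILLION * rate["input"]
    output_cost = Decimal(output_tokens) / MILLION * rate["output"]
    return input_cost + output_cost


# Token volumes reported for the compiler experiment.
for model in RATES:
    cost = list_price_cost(model, 2_000_000_000, 140_000_000)
    print(f"{model}: ${cost:,.2f}")
```

At the Opus list rates this comes to $13,500, below the reported total of $20,000. The source does not break down the difference, so the figure is best read as a rough floor, not a reconstruction of the actual bill.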

Strategic ROI: from seat to outcome

The ROI of agentic AI is no longer a projection but a reality, with some integrators reporting a 40% increase in velocity after migrating to Opus 4.6 workflows. As organizations move toward Edge AI for sensitive code, using frameworks like ECCC to anonymize data before cloud processing, the economic focus is shifting from simple monthly subscriptions to the value of “successful outcomes”.

For a complete guide on managing these new architectural requirements, refer to our analysis on securing the terminal with MCP and smolagents.

FAQ: Essential B2B insights for 2026

Which model is better for rapid engineering execution?

GPT-5.3 Codex generally finishes coding tasks about 25% faster and excels in raw coding ability for isolated fixes and rapid prototyping.

Which model should be used for large-scale codebase refactoring?

Claude Opus 4.6 is preferred for deep reasoning over large project contexts, thanks to its 1 million token context window and its ability to spawn sub-agents for specialized tasks.

Is GPT-5.3 Codex as reliable as Claude in production?

Claude tends to be more structured, avoiding the “CAT pattern” (massive, destructive rewriting) often criticized in Codex, which significantly reduces regression risks in legacy code.

How do I control the cost of autonomous agents?

The key is architecture: using the Model Context Protocol (MCP) to limit tool access and implementing strict Task Supervisor modes in the SDK to prevent infinite, costly loops.
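The loop-prevention part of this answer can be sketched as a session budget that stops an agent once it exceeds a step or token limit. The names (`SessionBudget`, `run_supervised`, `looping_agent`) and the limits are hypothetical, and this is not the API of any particular SDK.

```python
from dataclasses import dataclass
from typing import Callable


class BudgetExceeded(RuntimeError):
    """Raised when a session goes past its step or token limit."""


@dataclass
class SessionBudget:
    max_steps: int
    max_tokens: int
    steps: int = 0
    tokens: int = 0

    def charge(self, tokens_used: int) -> None:
        """Record one agent step and fail fast if a limit is crossed."""
        self.steps += 1
        self.tokens += tokens_used
        if self.steps > self.max_steps:
            raise BudgetExceeded(f"step limit {self.max_steps} reached")
        if self.tokens > self.max_tokens:
            raise BudgetExceeded(f"token limit {self.max_tokens} reached")


def run_supervised(agent_step: Callable[[], tuple[bool, int]], budget: SessionBudget) -> str:
    """Call agent_step until it reports completion or the budget trips."""
    try:
        while True:
            done, tokens_used = agent_step()
            budget.charge(tokens_used)
            if done:
                return "completed"
    except BudgetExceeded as exc:
        return f"stopped: {exc}"


# Stand-in for an agent that never finishes and burns 40k tokens per step.
def looping_agent() -> tuple[bool, int]:
    return False, 40_000


result = run_supervised(looping_agent, SessionBudget(max_steps=50, max_tokens=500_000))
print(result)  # stopped: token limit 500000 reached
```

Because the budget is checked on every step, a runaway loop is cut off at a known ceiling instead of running until someone notices the bill.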
