ComfyUI: where to find and download the best models and workflows in 2026

The year 2026 marks a major shift in how we use local generative AI. Downloading a model is no longer about choosing the largest file, but about finding the one that best fits your hardware. With the emergence of massive models like Flux 2 and high-fidelity video solutions, curation has become the essential step for getting good performance without saturating your video memory.

Top platforms for next-generation models

The search for ComfyUI models now revolves around a few major platforms that have adapted to the technical demands of 2026. Civitai remains the main reference, particularly for the Flux.1 Kontext ecosystem, built around a 12-billion-parameter model that continues to lead the image editing market. According to community reports, this hub offers the widest variety of certified LoRAs, which are indispensable for personalizing your generations.

For video models, Hugging Face has established itself as the primary repository. It hosts the official weights for Tencent’s HunyuanVideo-1.5, which generates remarkably fluid clips thanks to its advanced temporal and spatial compression. Technical analyses suggest that this platform is also the preferred source for Wan 2.2 models, which now rival closed cloud solutions in temporal consistency. Finally, for specific image enhancement needs, OpenModelDB remains a valuable niche, offering specialized upscaling models like the latest versions of SUPIR or Real-ESRGAN.
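As a minimal sketch of fetching a single weight file from Hugging Face, the snippet below builds a direct download URL and streams it to disk using only Python's standard library. It assumes the `resolve/<revision>/<filename>` URL layout that Hugging Face uses for file downloads. The repository ID, filename, and destination folder in the usage comment are placeholders, not real model locations, and gated repositories also require an access token that this sketch does not handle.

```python
from pathlib import Path
from urllib.parse import quote
from urllib.request import urlopen
import shutil


def hf_resolve_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build the direct-download URL for one file in a Hugging Face repo."""
    # Keep "/" unescaped in the filename so nested paths in the repo still work.
    return (
        f"https://huggingface.co/{repo_id}/resolve/"
        f"{quote(revision, safe='')}/{quote(filename, safe='/')}"
    )


def download(url: str, dest: Path) -> Path:
    """Stream a URL to dest, creating parent folders as needed."""
    dest.parent.mkdir(parents=True, exist_ok=True)
    with urlopen(url) as response, open(dest, "wb") as out:
        shutil.copyfileobj(response, out)
    return dest


# Placeholder usage (hypothetical repo and file names):
# url = hf_resolve_url("some-org/some-video-model", "model.safetensors")
# download(url, Path("ComfyUI/models/diffusion_models/model.safetensors"))
```

Streaming with `shutil.copyfileobj` avoids holding a multi-gigabyte file in memory while it downloads.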

Flux 2 and the new standards of realism

The major innovation of early 2026 is the arrival of Flux 2, particularly its Dev (Open Weights) version, which redefines standards for extreme prompt adherence and commercial photorealism. According to NVIDIA, this model specifically benefits from massive optimizations that drastically reduce its memory footprint without perceptible visual loss.

Experts state that Flux 2 stands out due to its increased parameter density, making the use of quantization formats like GGUF or NVFP4 almost mandatory for users without professional workstations. Its ability to handle complex typography and fine details makes it the designated successor for demanding production pipelines.

Choosing the right format: the key to performance in 2026

A major shift in 2026 is the diversification of formats: downloading a model in native FP16 has become a luxury. For users with 8 GB to 16 GB of VRAM, the GGUF format has become essential for loading massive models like Flux 2.
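To see why FP16 is a luxury, a back-of-the-envelope estimate helps: the size of the weights is roughly the parameter count multiplied by the bits stored per weight. The sketch below uses nominal bit widths only, which is a simplifying assumption. Real GGUF or 4-bit files carry extra scale and metadata overhead, and the estimate ignores text encoders, the VAE, and activation memory.

```python
# Nominal bits per weight; real quantized files add some overhead for
# scales and metadata, so treat the results as lower bounds.
BITS_PER_WEIGHT = {"FP16": 16, "FP8": 8, "4-bit": 4}


def weight_size_gb(num_params: float, fmt: str) -> float:
    """Approximate size of the weights alone, in decimal gigabytes."""
    return num_params * BITS_PER_WEIGHT[fmt] / 8 / 1e9


if __name__ == "__main__":
    params = 12e9  # a 12-billion-parameter model, the size cited for Flux.1 Kontext
    for fmt in BITS_PER_WEIGHT:
        print(f"{fmt:>5}: {weight_size_gb(params, fmt):.1f} GB")
```

For a 12-billion-parameter model this gives roughly 24 GB in FP16, 12 GB in FP8, and 6 GB at 4 bits, which is why only the quantized variants fit comfortably on an 8 GB to 16 GB card.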

As reported by NVIDIA, RTX 50 Series card owners now benefit from native support for the NVFP4 format, which reduces the memory footprint by 60% while tripling inference speed. If your hardware is older, adopting the FP8 format via the quantization framework introduced in late 2025 remains the best alternative for saving 30% to 40% of VRAM on your workflows.
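The percentages above are easier to compare once applied to a concrete baseline. The sketch below simply applies the quoted savings (60% for NVFP4, 30% to 40% for FP8) to a hypothetical 24 GB FP16 workflow. The baseline figure is an assumption chosen for illustration, not a measurement.

```python
def footprint_after_saving(baseline_gb: float, saving: float) -> float:
    """Memory left after removing a fractional saving (0.6 means 60%)."""
    if not 0.0 <= saving < 1.0:
        raise ValueError("saving must be in [0, 1)")
    return round(baseline_gb * (1.0 - saving), 2)


baseline = 24.0  # hypothetical FP16 workflow footprint in GB
nvfp4 = footprint_after_saving(baseline, 0.60)
fp8_best = footprint_after_saving(baseline, 0.40)
fp8_worst = footprint_after_saving(baseline, 0.30)
print(f"NVFP4: {nvfp4} GB, FP8: {fp8_best} to {fp8_worst} GB")
```

Under these assumptions, the same workflow would need about 9.6 GB with NVFP4 and between 14.4 GB and 16.8 GB with FP8.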

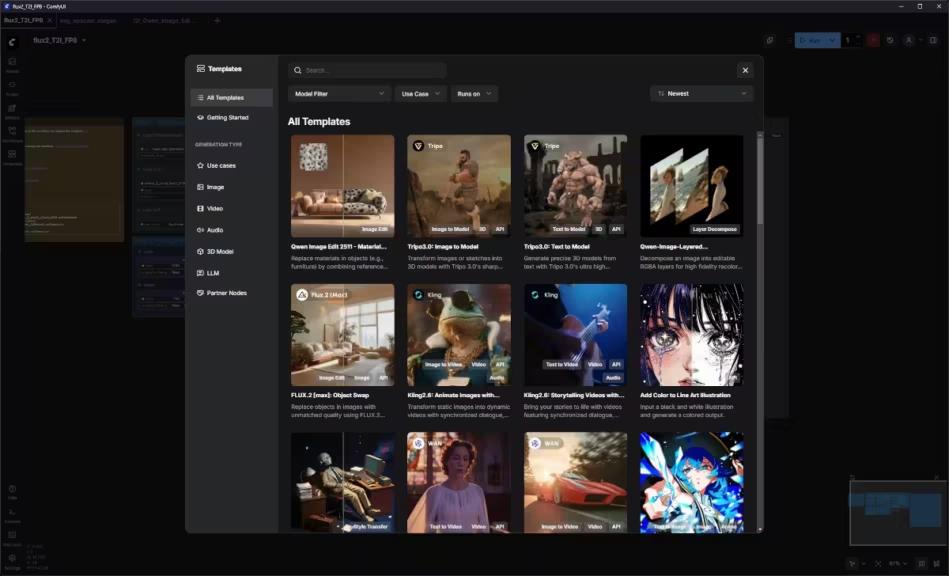

Automation and management with ComfyUI Manager V2

Manual file installation is giving way to secure automation. ComfyUI-Manager V2 now lets you search for and install models directly from the interface, with automatic dependency resolution. According to release notes, this manager includes a security pipeline that blocks access to high-risk system features by default.

This version integrates with ComfyUI Desktop, which ensures compatibility with new node schemas. According to the project's GitHub page, using these official tools significantly reduces errors when importing complex workflows from sites like OpenArt.

Local organization optimization

To maintain a responsive system, your library organization must follow recent architectures. It is recommended to use optimization nodes like Temporal Rolling VAE for video, which reduces peak memory consumption.
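Keeping the library organized starts with knowing what is on disk. As a small sketch, the function below totals the file sizes under each immediate subfolder of a models directory. The `ComfyUI/models` path in the usage comment is an assumption about where your installation lives.

```python
from pathlib import Path


def folder_sizes_gb(models_dir: Path) -> dict[str, float]:
    """Total size of files under each immediate subfolder, in decimal GB."""
    sizes = {}
    for sub in sorted(p for p in models_dir.iterdir() if p.is_dir()):
        # rglob walks nested folders, so grouped LoRAs or variants are counted too.
        total = sum(f.stat().st_size for f in sub.rglob("*") if f.is_file())
        sizes[sub.name] = total / 1e9
    return sizes


# Example usage (path is an assumption; adjust to your installation):
# for name, gb in folder_sizes_gb(Path("ComfyUI/models")).items():
#     print(f"{name:<20} {gb:8.2f} GB")
```

A quick inventory like this makes it easier to spot duplicate formats of the same model that can be removed.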

In case of GPU saturation, the new Weight Streaming features allow for intelligent offloading to system RAM, preventing crashes. As suggested by Cosmo Edge technical guides, choosing the right model size for your VRAM remains the surest way to guarantee a fast workflow.
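The idea of matching model size to VRAM can be sketched as a simple selection rule: among the available variants of a model, pick the largest file that still fits once some headroom is reserved. The variant names, file sizes, and the 2 GB headroom below are hypothetical values chosen for illustration.

```python
from typing import Optional


def pick_variant(
    variants: dict[str, float], vram_gb: float, headroom_gb: float = 2.0
) -> Optional[str]:
    """Return the largest variant whose file size fits in VRAM minus headroom."""
    budget = vram_gb - headroom_gb
    fitting = {name: size for name, size in variants.items() if size <= budget}
    if not fitting:
        return None  # nothing fits; offloading to system RAM would be needed
    return max(fitting, key=fitting.get)


# Hypothetical file sizes in GB for one model published in several formats.
variants = {"fp16": 24.0, "fp8": 12.0, "q4": 6.5}
print(pick_variant(variants, vram_gb=16.0))  # fp8
print(pick_variant(variants, vram_gb=12.0))  # q4
print(pick_variant(variants, vram_gb=6.0))   # None
```

The headroom leaves space for activations, the VAE, and other nodes in the workflow. How much you actually need depends on resolution and on whether you are generating images or video.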

Towards a lighter model library?

The technological maturity of 2026 shows that power no longer lies in raw file size, but in how intelligently models are compressed. Thanks to GGUF formats and adaptive quantization, high-quality AI like Flux 2 is now within reach of almost any computer equipped with a modern GPU. This trend toward lighter, more efficient models raises questions about the future of hardware and, more broadly, the local use of generative AI. Will we soon see peak-performance models running on our personal computers or workstations?

For beginners, experts, developers, or even creative studios, we recommend reading our guide: ComfyUI: best information sources and ecosystem resources. The ecosystem is evolving fast, and so are creation methods.
