How to use conversational AI for predictive trend analysis in 2026

Predictive analysis is no longer the exclusive domain of data science teams equipped with complex stacks and custom-built models. In 2026, conversational AI platforms such as ChatGPT, Claude, and Gemini are empowering non-technical leaders to explore trends, stress-test hypotheses, and project scenarios directly from raw datasets.

However, the term “predictive analysis with conversational AI” can be misleading. These tools do not “predict” the future in a deterministic sense. Instead, they orchestrate existing statistical methods, enrich them with qualitative context, and translate them into actionable insights for decision-making. This guide establishes a clear, methodological, and realistic framework for leveraging these tools without falling into the traps of over-promising or interpretative bias.

In this article, “predictive analysis” does not refer to autonomous forecasting models, but to scenario-based projections and statistical reasoning orchestrated through conversational AI.

Understanding the mechanics of conversational AI in predictive analytics

The shift from traditional predictive modeling to AI interfaces

Traditional predictive analytics relies on explicit statistical or probabilistic models: regression, time-series models such as ARIMA, and supervised machine learning. These approaches require deep technical expertise, specialized computing environments, and careful interpretation of the results.

Conversational AI does not replace these foundations. It acts as an intelligent interface:

- It interprets complex instructions provided in natural language.

- It generates and executes statistical code (e.g., Python) in a sandboxed environment.

- It bridges the gap between quantitative data and qualitative context (industry reports, market sentiment, business hypotheses).

- It reframes technical outputs into strategic narratives.

The mathematical core remains grounded in classical statistics; the added value lies in accessibility and contextual synthesis.

Capabilities vs. limitations

A conversational AI agent can:

- Analyze imported time-series data (CSV, Excel, BigQuery exports).

- Identify structural trends, seasonality, and cyclical patterns.

- Execute standard statistical projections.

- Compare multiple hypothetical “what-if” scenarios.

- Synthesize signals from provided research papers or market reports.

Conversely, it cannot:

- Access private internal data or real-time streams on its own, without an explicit integration such as RAG (Retrieval-Augmented Generation).

- Guarantee the accuracy of a forecast if the underlying data is biased or sparse.

- Replace professional judgment or the accountability of a strategic decision.

Projection, prediction, and probabilistic scenarios

A critical distinction must be made between three often-confused concepts:

- Projection: The mathematical extrapolation of past trends into the future.

- Prediction: A conditional estimate based on a specific model and set of variables.

- Scenarios: The exploration of multiple possible futures based on explicit parameter shifts.

Conversational AI is most powerful in probabilistic scenario modeling. It allows leaders to test hypotheses (“What happens if…”) and observe potential outcomes rather than seeking a single, deceptively precise “correct” value.
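To make the three concepts concrete, here is a minimal Python sketch on a hypothetical six-month sales series. The linear trend model, the price elasticity of -0.8, and the scenario names are illustrative assumptions. They are not real figures or a method prescribed by any platform.

```python
# Projection vs. prediction vs. scenarios on hypothetical data.

def fit_linear_trend(values):
    """Ordinary least-squares fit of y = intercept + slope * t, with t = 0, 1, 2, ..."""
    n = len(values)
    t_mean = (n - 1) / 2
    y_mean = sum(values) / n
    cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(values))
    var = sum((t - t_mean) ** 2 for t in range(n))
    slope = cov / var
    return y_mean - slope * t_mean, slope


monthly_sales = [100, 104, 107, 112, 115, 119]  # hypothetical history

# 1. Projection: extrapolate the past trend one month ahead, nothing more.
intercept, slope = fit_linear_trend(monthly_sales)
projection = intercept + slope * len(monthly_sales)


# 2. Prediction: a conditional estimate from an explicit model and variable.
#    Hypothetical model: each +1% price change moves sales by elasticity * 1%.
def predict(price_change_pct, elasticity=-0.8):
    return projection * (1 + elasticity * price_change_pct / 100)


# 3. Scenarios: the same model evaluated under explicit parameter shifts.
scenarios = {"price cut 5%": -5, "status quo": 0, "price rise 5%": 5}
results = {name: round(predict(change), 1) for name, change in scenarios.items()}
print(round(projection, 1), results)
```

The projection yields a single number. The scenario dictionary shows how an explicit assumption (the price change) shifts the outcome, and that range is the output a decision-maker should discuss.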

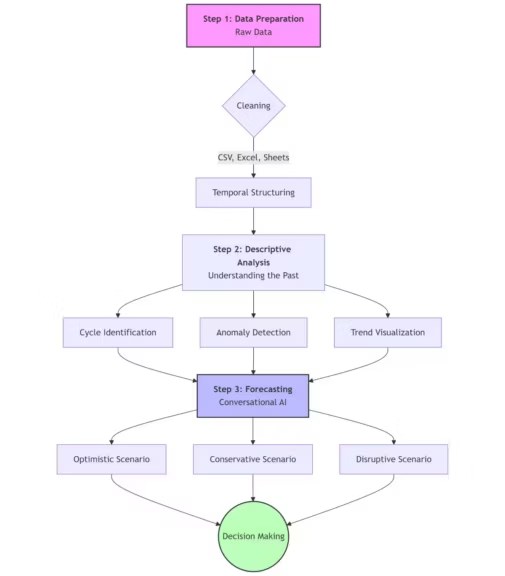

A universal framework for reliable AI-driven predictive analysis


Step 1 – Data preparation and structuring

Predictive analysis is only as good as its input. AI cannot compensate for poor data quality.

Core prerequisites include:

- Structured formats (CSV, JSON, or clean Excel sheets).

- Explicit temporal dimensions (consistent date-time indexing).

- Historical depth (sufficient data points to identify non-random patterns).

- Basic data hygiene (handling missing values and flagging outliers for review rather than deleting them automatically).

Without these prerequisites, the AI will produce results that appear plausible but are fundamentally fragile.
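You can check these prerequisites before any data reaches a conversational AI. The sketch below uses only Python's standard library, and the `date,value` column layout and sample rows are hypothetical. It builds a continuous daily index, keeps the first of any duplicated dates, and reports gaps. It flags outliers rather than deleting them, using a median-based robust z-score with the commonly cited 0.6745 scaling constant and 3.5 cut-off.

```python
import csv
import io
import statistics
from datetime import date, timedelta

RAW_CSV = """date,value
2025-01-01,120
2025-01-02,
2025-01-03,118
2025-01-03,118
2025-01-05,950
2025-01-06,121
2025-01-07,119
"""


def load_daily_series(text):
    """Parse a date,value CSV into a sorted, gap-free daily series (None = missing)."""
    parsed = {}
    for row in csv.DictReader(io.StringIO(text)):
        day = date.fromisoformat(row["date"])
        value = float(row["value"]) if row["value"].strip() else None
        parsed.setdefault(day, value)  # keep the first occurrence of a duplicated date
    start, end = min(parsed), max(parsed)
    days = [start + timedelta(days=n) for n in range((end - start).days + 1)]
    return [(d, parsed.get(d)) for d in days]  # dates absent from the file become None


def flag_outliers(series, threshold=3.5):
    """Return dates whose robust z-score (median/MAD based) exceeds the threshold."""
    values = [v for _, v in series if v is not None]
    med = statistics.median(values)
    mad = statistics.median(abs(v - med) for v in values)
    if mad == 0:
        return []
    return [d for d, v in series
            if v is not None and 0.6745 * abs(v - med) / mad > threshold]


series = load_daily_series(RAW_CSV)
missing = [d for d, v in series if v is None]
outliers = flag_outliers(series)
print(f"{len(series)} days, {len(missing)} missing, outliers: {outliers}")
```

Flagged points are then reviewed by a person: a spike may be a data-entry error or a genuine event the forecast must account for.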

Step 2 – Descriptive analysis and contextualization

Before projecting the future, one must master the past. This stage is often bypassed, yet it dictates the validity of the entire process.

Ask the AI to:

- Summarize the global trajectory of key performance indicators (KPIs).

- Isolate seasonal cycles from noise.

- Identify historical “inflection points” or disruptions.

- Compare performance across various time-blocks or segments.

Visualizations (moving averages, decomposition plots, period-over-period comparisons) serve as a cognitive safeguard. They ensure the “story” the AI tells aligns with the observed data.
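The following sketch shows one way to separate trend from seasonality, using classical additive decomposition. A centered moving average estimates the trend, and averaging the detrended values by quarter gives seasonal indices. The quarterly figures are hypothetical. An AI assistant might instead choose other techniques, such as STL or a multiplicative model.

```python
# Classical additive decomposition sketch on hypothetical quarterly sales.

def centered_moving_average(values, period):
    """Trend estimate; uses a 2 x period average when the period is even.
    Returns None where the window would run past either end of the series."""
    half = period // 2
    trend = [None] * len(values)
    for t in range(half, len(values) - half):
        window = values[t - half:t + half + 1]
        if period % 2 == 0:
            # Even period: half weight on the two end points of a (period + 1) window.
            total = 0.5 * window[0] + sum(window[1:-1]) + 0.5 * window[-1]
        else:
            total = sum(window)
        trend[t] = total / period
    return trend


def additive_seasonal_indices(values, period):
    """Average detrended value for each position in the cycle, centered on zero."""
    trend = centered_moving_average(values, period)
    buckets = [[] for _ in range(period)]
    for t, (y, level) in enumerate(zip(values, trend)):
        if level is not None:
            buckets[t % period].append(y - level)
    raw = [sum(b) / len(b) for b in buckets]
    offset = sum(raw) / period
    return [r - offset for r in raw]


quarterly_sales = [80, 95, 90, 130, 88, 104, 99, 142, 96, 113, 108, 155]  # Q1..Q4 x 3 years
indices = additive_seasonal_indices(quarterly_sales, period=4)
print([round(i, 1) for i in indices])  # one index per quarter, Q1 first
```

Subtracting these indices from the raw series gives a seasonally adjusted view. That view makes genuine inflection points easier to distinguish from the usual Q4 peak.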

Why calling this “predictive analysis” is (almost) a misuse of language

In its strict data science meaning, predictive analysis relies on well-defined foundations: an explicit predictive model (statistical or machine learning), trained on historical data, evaluated using formal metrics (error rates, backtesting, validation sets), and designed to produce measurable conditional estimates.
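For reference, these are the standard error metrics behind that kind of evaluation. They assume a model fitted on observations $y_1, \dots, y_T$ and scored on the next $h$ held-out periods, with forecasts $\hat{y}_t$. Backtesting repeats this for several forecast origins $T$ and averages the results. MAPE is undefined whenever an actual value $y_t$ equals zero.

```latex
\mathrm{MAE} = \frac{1}{h}\sum_{t=T+1}^{T+h} \lvert y_t - \hat{y}_t \rvert
\qquad
\mathrm{RMSE} = \sqrt{\frac{1}{h}\sum_{t=T+1}^{T+h} \left( y_t - \hat{y}_t \right)^2}
\qquad
\mathrm{MAPE} = \frac{100\%}{h}\sum_{t=T+1}^{T+h} \left\lvert \frac{y_t - \hat{y}_t}{y_t} \right\rvert
```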

From that perspective, using the term “predictive analysis” to describe conversational AI tools may appear overstated or even misleading. These systems do not, on their own, build end-to-end predictive pipelines. They do not train dedicated models, optimize loss functions, or guarantee predictive performance in a formal, reproducible sense.

However, reducing their role to mere conversational assistance would also be inaccurate. Modern conversational AIs can orchestrate classical statistical methods, contextualize user-provided data, and support scenario exploration based on explicit assumptions. They do not replace predictive modeling, but they democratize exploratory predictive reasoning, which was previously limited to technically specialized roles.

In this article, the term “predictive analysis” is therefore used in a deliberately broader and explicit sense. It refers to scenario-based, statistically assisted projection aimed at supporting decision-making, rather than to the implementation of a predictive model trained and validated according to data science standards.

This framing is not a marketing shortcut, but a matter of intellectual clarity. It draws a clear distinction between the tool (conversational AI) and the discipline (predictive analytics), while acknowledging an emerging intermediate space: AI-augmented planning.

2026 Toolstack: Choosing your predictive engine

In the current landscape, “one-size-fits-all” is a relic of the past. Strategic leaders now select their conversational AI based on the specific nature of their data architecture.

| Platform | Core Strength | Predictive Sweet Spot |
|---|---|---|
| ChatGPT (Advanced Data Analysis) | Python execution in sandbox | Quantitative forecasting & statistical modeling |
| Claude (Anthropic) | Nuanced reasoning & long context | Qualitative signal detection & strategy auditing |
| Gemini (Google DeepMind) | Multimodal & Workspace integration | Large-scale research & real-time sheet analysis |

ChatGPT: The statistical powerhouse

With its native ability to write and execute code, ChatGPT is a strong choice for reproducible, calculation-based analysis. It doesn’t just “guess” the next number; it can build a regression model in code and compute the value from it.

- Best for: Processing large .csv exports, generating correlation matrices, and running Monte Carlo simulations.
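Here is a minimal Python sketch of a Monte Carlo simulation like the one listed above. The starting revenue, 1% mean monthly growth, 4% volatility, and normally distributed shocks are hypothetical assumptions chosen for illustration. The output is a range of outcomes (10th, 50th and 90th percentiles) rather than a single number.

```python
import random
import statistics


def simulate_annual_revenue(base_monthly, growth=0.01, volatility=0.04,
                            n_runs=10_000, seed=42):
    """Simulate 12 months of compounding revenue with normally distributed
    monthly growth shocks; returns one annual total per simulated run."""
    rng = random.Random(seed)  # seeded so results are reproducible
    totals = []
    for _ in range(n_runs):
        level, total = base_monthly, 0.0
        for _ in range(12):
            level *= 1 + rng.gauss(growth, volatility)
            total += level
        totals.append(total)
    return totals


totals = simulate_annual_revenue(base_monthly=100_000)
deciles = statistics.quantiles(totals, n=10)  # 9 cut points: 10th..90th percentiles
p10, p50, p90 = deciles[0], deciles[4], deciles[8]
print(f"P10 {p10:,.0f} | median {p50:,.0f} | P90 {p90:,.0f}")
```

Changing `growth` or `volatility` and rerunning is the code equivalent of a “what-if” question. Fixing the seed keeps each run comparable.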

Claude: The strategic auditor

Claude’s strength lies in its long context window and nuanced reasoning. When analyzing 500-page industry reports alongside internal datasets, Claude can help surface “weak signals” that purely quantitative models might miss.

- Best for: Scenario planning, cross-referencing market trends with internal audits, and “red-teaming” your own strategic assumptions.

Gemini: The research librarian

Gemini 1.5/3 Pro shines when predictive data is scattered across the Google ecosystem. Its massive context window allows it to “read” an entire repository of past performance files to find long-term cyclicality.

- Best for: Real-time web-grounded research and collaborative forecasting within Google Sheets.

Advanced workflows: Beyond the basic prompt

Basic prompting is not enough to reach a higher level of precision. Enterprises are now moving toward agentic workflows and RAG (Retrieval-Augmented Generation).

RAG-enhanced forecasting

The biggest limitations of general-purpose models are their “knowledge cutoff” and their lack of access to private data. RAG addresses both by retrieving relevant documents from your proprietary data lake and passing them to the model as context. Instead of asking the AI what it knows about the market, you ask it to analyze your specific Q3 logs against current market benchmarks.

- The result: highly specific, grounded forecasts that cite internal sources and are less prone to hallucination.
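A toy example can illustrate the retrieval step of RAG. This sketch is a heavily simplified stand-in. It scores hypothetical internal snippets by bag-of-words cosine similarity, whereas production systems typically use embedding models and a vector store. It then assembles a prompt that asks the model to answer only from the retrieved sources and to cite them. No model API is called, and the document IDs and texts are invented for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical internal snippets standing in for a proprietary data lake.
DOCUMENTS = {
    "q3_sales_log": "Q3 sales fell 4% in the EMEA region after the price increase.",
    "q3_supply_note": "Supplier delays in Q3 reduced stock for the premium line.",
    "hr_policy": "Remote work policy updated for all employees in 2025.",
}


def tokenize(text):
    return re.findall(r"[a-z0-9%]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query, k=2):
    """Return the ids of the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(DOCUMENTS,
                    key=lambda doc_id: cosine(q, Counter(tokenize(DOCUMENTS[doc_id]))),
                    reverse=True)
    return ranked[:k]


def build_prompt(question, k=2):
    """Ground the question in retrieved sources and ask for citations by id."""
    context = "\n".join(f"[{doc_id}] {DOCUMENTS[doc_id]}" for doc_id in retrieve(question, k))
    return ("Answer using only the sources below and cite them by id.\n"
            f"{context}\n\nQuestion: {question}")


question = "Why did Q3 sales drop, and what supply issues affected Q3?"
prompt = build_prompt(question)
print(prompt)
```

The irrelevant HR document never reaches the model. This is the mechanism behind “grounded” answers: the model is asked to reason over retrieved evidence rather than its training data alone.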

Agentic analysis loops

In 2026, many teams no longer rely on a single prompt. Instead, they use AI agents that follow an iterative loop:

- Agent A (The Cleaner) identifies outliers in your data.

- Agent B (The Analyst) runs three different statistical models.

- Agent C (The Critic) challenges the models and looks for logical fallacies.
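The loop above can be sketched with three plain Python functions standing in for the agents. In a real agentic workflow, each role would typically be an LLM call with its own instructions. Here they are deterministic stand-ins so that the control flow is visible. The function names, thresholds, sample data, and the choice of naive, mean and linear-trend models are all hypothetical.

```python
import statistics


def cleaner(series, threshold=3.5):
    """Agent A stand-in: replace robust-z-score outliers with the series median."""
    med = statistics.median(series)
    mad = statistics.median(abs(v - med) for v in series) or 1e-9  # avoid division by zero
    return [med if 0.6745 * abs(v - med) / mad > threshold else v for v in series]


def analyst(train, horizon):
    """Agent B stand-in: three simple models, each forecasting `horizon` steps."""
    n = len(train)
    t_mean, y_mean = (n - 1) / 2, statistics.fmean(train)
    slope = (sum((t - t_mean) * (y - y_mean) for t, y in enumerate(train))
             / sum((t - t_mean) ** 2 for t in range(n)))
    return {
        "naive": [train[-1]] * horizon,  # next value equals the last one
        "mean": [y_mean] * horizon,  # historical average
        "linear_trend": [y_mean + slope * (n + h - t_mean) for h in range(horizon)],
    }


def critic(forecasts, actual, max_spread=0.25):
    """Agent C stand-in: score each model on held-out data and flag disagreement."""
    mae = {name: statistics.fmean(abs(a - f) for a, f in zip(actual, fc))
           for name, fc in forecasts.items()}
    finals = [fc[-1] for fc in forecasts.values()]
    spread = (max(finals) - min(finals)) / statistics.fmean(finals)
    warnings = [] if spread <= max_spread else ["models disagree strongly; revisit assumptions"]
    return min(mae, key=mae.get), mae, warnings


history = [50, 52, 55, 400, 58, 61, 63, 66, 68, 71]  # hypothetical; 400 mimics an entry error
clean = cleaner(history)
train, holdout = clean[:-3], clean[-3:]
best, mae, warnings = critic(analyst(train, len(holdout)), holdout)
print(best, {name: round(err, 2) for name, err in mae.items()}, warnings)
```

A full loop would send the critic's warnings back to agents A and B, for example to re-examine the cleaning rule or to add a seasonal model. It would repeat until the critic raises no objections or a round limit is reached.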

Structural limitations and strategic safeguards

Even the most advanced LLM is subject to algorithmic drift and contextual bias. Professional predictive analysis must account for the following:

- The “Hallucination of Precision”: An AI might provide a decimal-point-perfect number that is fundamentally wrong. Always treat outputs as probability ranges, not absolute truths.

- Data Silos: Conversational AI is only as good as the context it is fed. If your supply chain data is missing from the prompt, the sales forecast can be badly misleading.

- Privacy & Compliance: Ensure your stack uses enterprise-grade privacy layers in which your data is not used for model training, to support GDPR and EU AI Act compliance.
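One practical way to apply the “probability ranges” rule is to attach an empirical range to every point forecast. The sketch below swaps in a deliberately simple method: a naive forecast (next value equals the last one) whose 80% range comes from the quantiles of that rule's past one-step errors. The weekly order figures are hypothetical. The range assumes future errors resemble past ones, so treat it as a rough guide rather than a guarantee.

```python
import statistics


def naive_forecast_with_range(values, coverage=0.80):
    """Naive one-step forecast (next = last) plus an empirical range built
    from the quantiles of past one-step errors of that same rule.
    Intended for coverage values up to 0.98."""
    errors = [nxt - cur for cur, nxt in zip(values, values[1:])]
    cuts = statistics.quantiles(errors, n=100, method="inclusive")  # 1st..99th percentiles
    low_pct = round((1 - coverage) / 2 * 100)   # e.g. 10 for 80% coverage
    high_pct = round((1 + coverage) / 2 * 100)  # e.g. 90 for 80% coverage
    point = values[-1]
    return point + cuts[low_pct - 1], point, point + cuts[high_pct - 1]


weekly_orders = [210, 215, 208, 222, 230, 226, 235, 241, 238, 247, 252, 249]  # hypothetical
low, point, high = naive_forecast_with_range(weekly_orders)
print(f"Next week: {point} orders (80% range roughly {low:.0f} to {high:.0f})")
```

Reporting the range alongside the point value makes the uncertainty explicit. This counters the false confidence of a decimal-perfect single number.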

Conclusion: From forecasting to decision intelligence

Conversational AI has successfully demystified predictive analytics, turning it into a structured dialogue between human intuition and machine processing power. In 2026, the competitive advantage belongs not to those who have the most data, but to those who know how to interrogate it. By moving from static dashboards to dynamic, scenario-based conversations, leaders can navigate market volatility with unprecedented agility.

The future of prediction isn’t about finding a single “right” answer; it’s about being prepared for all the ones that are likely to happen. Are your current data foundations ready to support a conversation about your company’s future?
