Discord’s Edge AI rollout: ultimate privacy or technical black box?

Starting March 2026, Discord will implement a global age verification mandate. Driven by the UK Online Safety Act and Australian eSafety regulations, this shift forces millions of users to verify their age to maintain full access. However, beyond the policy changes lies a massive technical feat: the deployment of on-device facial age estimation (Edge AI) across a highly fragmented hardware ecosystem.

For Cosmo-edge, we’ve dissected the underlying architecture. Is this the future of privacy-preserving verification, or a new technical bottleneck for the open web?

1. On-device inference: the “local-first” shift

In an era of frequent data breaches—including a notable leak from a Discord vendor in October 2025—Discord is moving away from centralized biometric databases. By shifting the “intelligence” to the user’s local hardware, the platform aims to meet strict GDPR and DSA requirements for data minimization.

The Stack: WebAssembly and Lightweight CNNs

Discord utilizes WebAssembly (WASM) to execute high-performance code within its Electron-based desktop client and web browsers.

- The Model: Analysis suggests a lightweight Convolutional Neural Network (CNN), likely a MobileNetV2 variant. With INT8 quantization, the model is compressed to under 10 MB, allowing it to run efficiently without a dedicated GPU.

- Liveness Detection: To thwart spoofing attempts (photos or deepfakes), the system leverages facial landmarks via frameworks like Google’s MediaPipe to require real-time head movement. A notable bug during the beta phase, where Death Stranding’s photo mode successfully fooled the AI, has reportedly been patched through more rigorous skin texture and depth analysis.
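
To see why INT8 quantization can bring a MobileNetV2-class model well under 10 MB, the sketch below does the size arithmetic and applies a toy affine quantizer to a handful of weights. The parameter count (roughly 3.4 million for the reference MobileNetV2) is an assumption borrowed from the public architecture, not a confirmed detail of Discord's model, and the weights and helper names are made up for illustration.

```python
# Illustrative only: size arithmetic plus a toy affine INT8 quantizer.
# PARAMS approximates the reference MobileNetV2 (width 1.0); Discord's
# actual model and parameter count are not public.

PARAMS = 3_400_000


def model_size_mb(params: int, bytes_per_weight: int) -> float:
    """Raw weight storage in megabytes (1 MB = 10**6 bytes)."""
    return params * bytes_per_weight / 1_000_000


def quantize(weights: list[float]) -> tuple[list[int], float, int]:
    """Map floats to unsigned 8-bit codes using a scale and a zero point."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 for a constant tensor
    zero_point = round(-lo / scale)
    codes = [max(0, min(255, round(w / scale) + zero_point)) for w in weights]
    return codes, scale, zero_point


def dequantize(codes: list[int], scale: float, zero_point: int) -> list[float]:
    """Recover approximate floats from the 8-bit codes."""
    return [(c - zero_point) * scale for c in codes]


weights = [-0.42, -0.07, 0.0, 0.13, 0.58]  # hypothetical weights
codes, scale, zero_point = quantize(weights)
restored = dequantize(codes, scale, zero_point)

print(f"FP32: {model_size_mb(PARAMS, 4):.1f} MB")
print(f"INT8: {model_size_mb(PARAMS, 1):.1f} MB")
print("codes:", codes)
print("restored:", [round(r, 3) for r in restored])
```

At four bytes per weight the raw storage comes to about 13.6 MB; at one byte per weight it drops to about 3.4 MB, at the cost of a small rounding error on each weight.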

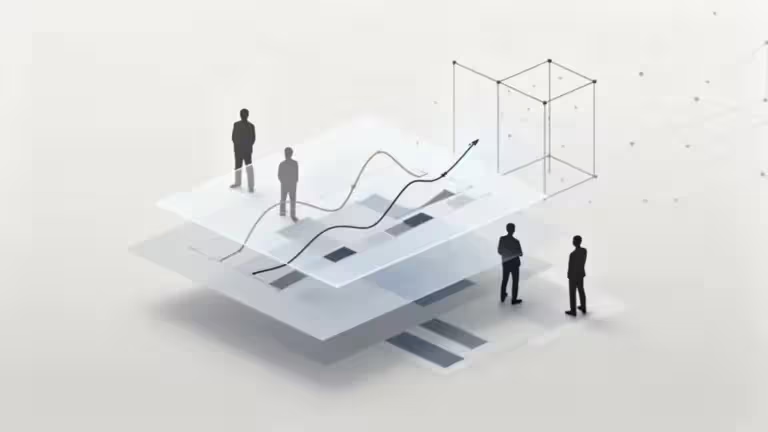

Estimated performance by hardware tier

| Hardware Tier | Inference Time | CPU Impact |
| --- | --- | --- |
| Gaming PC (RTX/GTX GPU) | < 1 s | Negligible |
| Modern Desktop (i5/Ryzen 5) | 2–4 s | Brief thread spike |
| Entry-level Smartphone | 5–10 s | Noticeable thermal load |
| Legacy PC (< 2018) | 8–12 s | Potential UI lag |

2. The reliability gap: MAE and algorithmic bias

While the privacy promise is compelling, the technical reality of “apparent age estimation” remains fraught with margin-for-error challenges.

The MAE (Mean Absolute Error) problem

According to NIST's Face Analysis Technology Evaluation (FATE) benchmarks, age estimation models typically carry an MAE of 2 to 4 years.
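
For readers less familiar with the metric, MAE is simply the average of the absolute differences between estimated and true ages. A minimal sketch, using made-up values that are not drawn from NIST or any real evaluation:

```python
def mean_absolute_error(true_ages, estimated_ages):
    """Average absolute gap between true and estimated ages, in years."""
    if len(true_ages) != len(estimated_ages) or not true_ages:
        raise ValueError("need two non-empty sequences of equal length")
    total = sum(abs(t - e) for t, e in zip(true_ages, estimated_ages))
    return total / len(true_ages)


# Hypothetical sample, for illustration only.
true_ages = [19, 24, 31, 17, 45]
estimated_ages = [16, 27, 30, 21, 41]

mae = mean_absolute_error(true_ages, estimated_ages)
print(f"MAE: {mae} years")
```

In this toy sample the MAE is 3.0 years, squarely within the typical range, yet the 19-year-old estimated at 16 would still fail an 18+ check.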

The Critical Stat: Pilot tests in Australia revealed a false negative rate (adults classified as minors) between 10% and 28% for the 18–22 age bracket. Factors like poor lighting, glasses, or “youthful” facial features often trigger AI failures, forcing users back to traditional government ID uploads.
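
A back-of-the-envelope model shows why young adults are so exposed. The sketch below assumes estimation errors are unbiased and normally distributed, which is a simplification and not something any vendor documents about its model. Under that assumption, a given MAE corresponds to a standard deviation of MAE × √(π/2), and the chance of an adult being estimated below 18 follows from the normal CDF.

```python
import math


def false_negative_probability(true_age: float, mae: float,
                               threshold: float = 18.0) -> float:
    """P(estimate < threshold) for an adult, assuming zero-mean Gaussian error."""
    sigma = mae * math.sqrt(math.pi / 2)  # Gaussian: E|X| = sigma * sqrt(2/pi)
    z = (threshold - true_age) / sigma
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))  # standard normal CDF


for age in (18, 20, 22, 25):
    p = false_negative_probability(age, mae=3.0)
    print(f"true age {age}: {p:.0%} chance of being estimated under 18")
```

With an MAE of 3 years, this toy model rejects a 20-year-old roughly 30% of the time, falling to a few percent by age 25. The numbers are illustrative, but they show how a "small" average error translates into frequent failures right above the threshold.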

The transparency deficit

Unlike competitors such as Yoti, which publish independent audits and accuracy reports, Discord keeps its code proprietary. While it checks the boxes for GDPR compliance on paper, there is no public third-party audit (such as PVID certification) confirming that the on-device biometric vectors never leave the device. For experts, this remains a technical “black box”: users must take the platform’s privacy claims at face value.

3. Why independent creators are left behind

At Cosmo-Edge.com and Cosmo-Games.com, we have long advocated for local AI as a tool for personalization, privacy protection, and cost reduction.

However, for independent publishers running a forum or a website, replicating Discord’s model is currently almost impossible.

- Lack of Plug-and-Play standards: There is currently no production-ready, certified, and easy-to-integrate open-source model (on Hugging Face or elsewhere) for small-scale developers.

- The burden of responsibility: Implementing custom Edge AI exposes small platforms to legal risks regarding algorithmic bias. For instance, evaluations on datasets like UTKFace suggest that models tend to underestimate the age of women, with makeup a commonly cited factor. Independent creators lack the resources to retrain around such a bias or mitigate it.
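
To make the bias risk concrete, a basic audit compares the mean signed error (estimate minus true age) across demographic groups; a clearly negative value for one group points to systematic underestimation. The records and group labels below are hypothetical placeholders, so this is only a sketch of the bookkeeping, not evidence about UTKFace or any particular model.

```python
from collections import defaultdict


def signed_error_by_group(records):
    """Mean of (estimated - true) age per group.

    records: iterable of (group, true_age, estimated_age) tuples.
    """
    totals = defaultdict(lambda: [0.0, 0])  # group -> [error sum, count]
    for group, true_age, estimated_age in records:
        totals[group][0] += estimated_age - true_age
        totals[group][1] += 1
    return {group: s / n for group, (s, n) in totals.items()}


# Hypothetical audit records, for illustration only.
records = [
    ("group_a", 22, 19), ("group_a", 30, 27), ("group_a", 41, 40),
    ("group_b", 22, 23), ("group_b", 30, 29), ("group_b", 41, 42),
]

bias = signed_error_by_group(records)
for group, error in sorted(bias.items()):
    print(f"{group}: mean signed error {error:+.2f} years")
```

Even this simple check requires labeled, demographically balanced test data, which is exactly what small publishers tend not to have.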

Edge AI: privacy savior or corporate privilege?

Discord’s pivot to on-device AI is a powerful signal: the cloud is no longer the default for sensitive data. However, until these tools are open-sourced and independently audited, they remain a privilege of tech giants. The challenge for 2026 will be democratizing these models so every creator can protect their users with the same technical rigor.

Edge AI protects us, but it also shifts the burden onto our own hardware, and requires a leap of faith in the proprietary code running on it.
