How to Build AI Agents Independent from Any LLM Provider

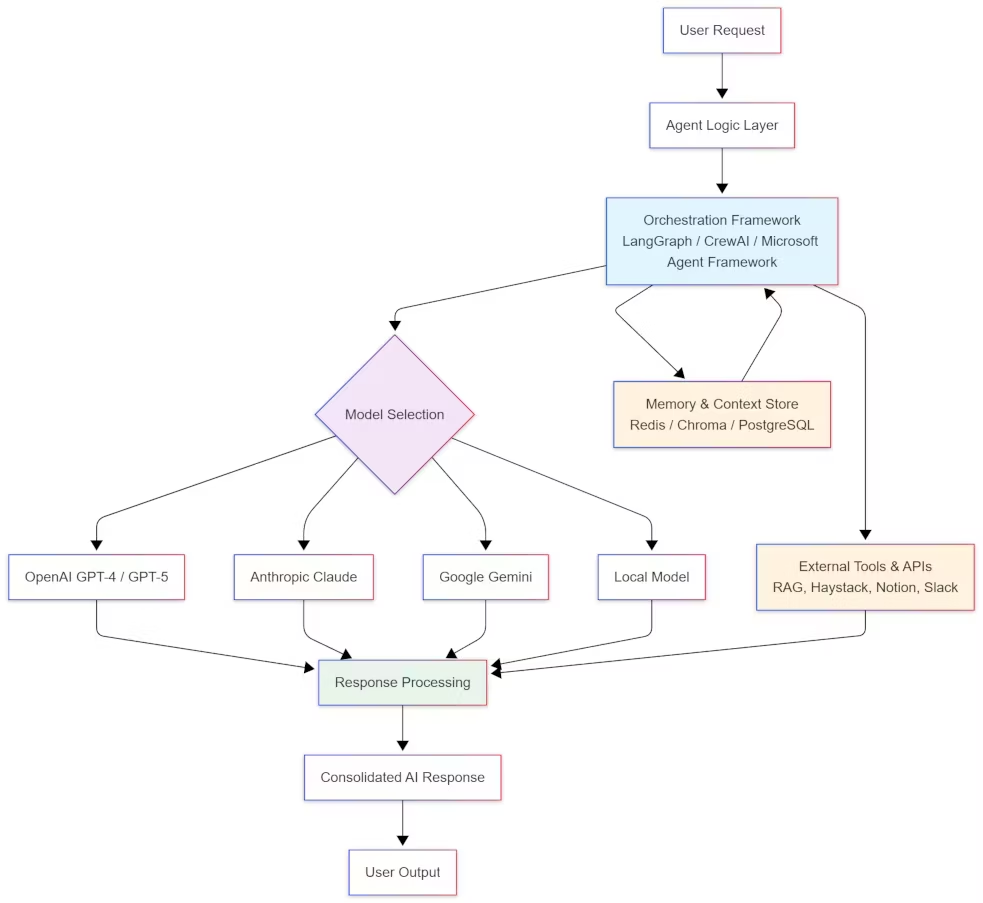

In 2025, technological independence in artificial intelligence has become a central issue. Both companies and developers are now looking to build independent AI agents capable of running without relying on a single large language model (LLM) provider. This marks a clear shift from the early generations of agents tied exclusively to OpenAI or Anthropic and opens a new era of autonomous AI.

Behind this movement lies one key idea: regaining control. The LLM market evolves rapidly, with fluctuating costs, shifting access policies, and a growing risk of vendor lock-in that directly threatens data sovereignty and long-term application stability. Building on an open-source AI framework capable of interacting with multiple models in parallel is no longer optional. It is essential to ensure resilience, compliance, and cost efficiency.

This LLM-agnostic approach is embodied by frameworks such as LangGraph, CrewAI, and the Microsoft Agent Framework, which represent the forefront of AI agent orchestration. These tools allow the same agentic logic to be executed across different models including OpenAI, Claude, Gemini, Mistral, or others, even when deployed locally via vLLM or Ollama. The goal is to combine performance, independence, and sovereignty without sacrificing user experience quality.

A strategic evolution: from single-model to multi-LLM architectures

Until recently, most AI agents depended on a single LLM provider. This centralization simplified development but trapped systems in proprietary logic. By 2025, the situation has reversed: major platforms (OpenAI, Anthropic, Google) are frequently adjusting their pricing and APIs, while the open-source ecosystem has gained momentum with alternatives such as Llama, Mistral, Qwen, GPT-OSS, and DeepSeek.

The multi-LLM paradigm has now become standard. By relying on open-source AI frameworks, developers can integrate multiple models within a single pipeline based on specific needs like speed, cost, precision, or context. For example, a conversational agent might use Claude for comprehension, ChatGPT for code generation, and a local Mistral model for fast text summarization.

As highlighted in the LangGraph documentation, this design decouples the agent’s logic from the underlying model, enabling seamless provider switching without rewriting code. It marks a step toward truly interoperable agentic architectures that can adapt to shifting market and compliance constraints.

Why LLM independence is becoming critical

Resilience and data sovereignty

Technological resilience is a cornerstone of modern architectures. A provider outage, API modification, or pricing change can instantly paralyze a production system. By making AI agents LLM-agnostic, organizations shield themselves from these risks.

The second dimension is data sovereignty. As noted in Microsoft’s introduction of the Microsoft Agent Framework, enterprises must be able to deploy their agents on-premises or in private or hybrid clouds to comply with regulations such as GDPR or FedRAMP. The hybrid model and the BYOC (Bring Your Own Cloud) paradigm have become key guarantees for compliance and control.

Economic optimization and adaptive performance

The multi-LLM strategy also introduces a new form of economic arbitrage. Simple tasks can be delegated to lightweight open-source models, while more demanding queries can leverage premium commercial LLMs. Both CrewAI and LangGraph provide this dynamic routing through built-in conditional policies, as detailed in their official documentation.

This strategy improves both performance and cost efficiency: an agent can automatically switch from a paid model to a local model when quotas are reached or response quality drops. A custom fine-tuned corporate model can also be integrated. This approach, already used by Azure teams through the Microsoft Agent Framework, is reshaping how large-scale AI workloads are managed.

The three key frameworks: LangGraph, CrewAI, and Microsoft Agent Framework

| Criterion | LangGraph | CrewAI | Microsoft Agent Framework |
|---|---|---|---|
| Origin / Publisher | Developed by LangChain | Independent open-source project | Microsoft (merger of AutoGen and Semantic Kernel) |
| Architecture | Based on an execution graph (each node = step, tool, or sub-agent) | Organized into teams of specialized agents (“crews”) | Modular, centralized infrastructure designed for production |
| Philosophy | Robust orchestration and persistent memory | Multi-agent collaboration, distributed approach | Enterprise governance, compliance, and observability |
| State persistence / Memory | Yes, via Redis, PostgreSQL, or Chroma (durable external memory) | Not native, depends on external integrations | Yes, integrated with Azure (storage and context resumption) |
| Multi-LLM | Indirect support via LangChain | Yes, a different LLM per agent is possible | Yes, compatible with multiple LLMs via Azure connectors |
| Interoperability | Compatible with LangChain, LangSmith, Qdrant, Chroma | Integration with 100+ tools (Gmail, Notion, Slack, etc.) plus MCP | Connected to the Microsoft ecosystem (Azure, Entra ID, Power Platform) |
| Observability / Monitoring | Yes, via LangSmith (self-hosted or cloud) | Basic, oriented toward logs or external monitoring | Built in (Azure Monitor, Log Analytics) |
| Typical use case | Orchestration of complex agents, long-term memory, multi-LLM projects | AI collaboration between specialized roles, automation of varied tasks | Enterprise deployments requiring compliance and traceability |
| Maturity level | Very stable, proven in production | Under active development, flexible but less standardized | Recent but backed by Microsoft, rapid adoption |
| Primary audience | Developers and AI architects | Automation teams and makers | Enterprises subject to security and compliance standards |
| License / Open source | Open source (LangChain ecosystem) | Open source | Open source (MIT, Microsoft GitHub) |

LangGraph: robust orchestration and persistent memory

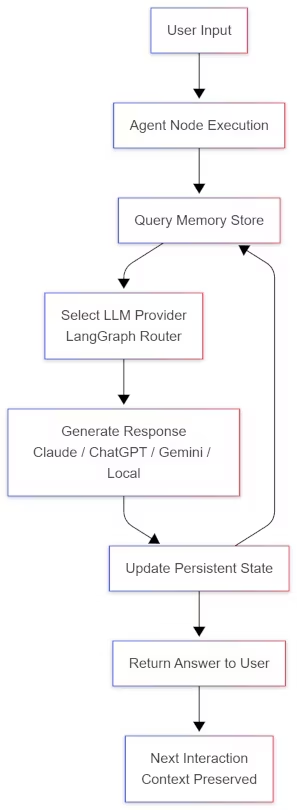

Developed by LangChain, LangGraph has become a reference among open-source AI orchestration frameworks for building complex and durable agents. It is based on a graph architecture, where each node represents a step, a tool, or a sub-agent. This transparent structure enables parallel decision flows and simplifies maintenance and debugging.

LangGraph stands out for its state persistence. Through integration with vector databases and external storage systems such as Redis, PostgreSQL, or Chroma, it preserves long-term context and memory across sessions, so an agent can resume its work after an interruption without losing context. When combined with LangSmith, it provides full observability of workflows, which is essential for multi-LLM production deployments.

Also read: LangGraph: the open-source backbone of modern AI agents

CrewAI: multi-agent collaboration and operational flexibility

CrewAI takes a more collaborative multi-agent approach. It enables the creation of AI agent teams (or “crews”), each with a distinct role such as researcher, writer, developer, or reviewer. Every agent can use a different LLM according to its role, a multi-LLM-per-agent design well described in the official documentation.

This model mirrors the structure of modern workflows: a decentralized task organization managed by specialized AI roles. CrewAI integrates with more than 100 external tools (Gmail, Notion, Slack, HubSpot) and supports the Model Context Protocol (MCP), an interoperability standard introduced by Anthropic and since adopted by both Microsoft and OpenAI.

Microsoft Agent Framework: governance and enterprise compliance

A more recent yet highly influential player, the Microsoft Agent Framework unifies the AutoGen and Semantic Kernel projects into a single open-source foundation. Its purpose is to deliver a production-ready agent orchestration engine compliant with SOC2 and FedRAMP standards.

This framework targets organizations with strict governance needs, featuring built-in identity management via Entra ID, role-based access control (RBAC), and native observability through Azure Monitor and Log Analytics. Its rapid adoption confirms that AI governance and compliance are becoming as critical as performance for enterprise-grade AI deployments.

Technical architecture: the foundations of an independent AI agent

Decoupling logic from the model

The foundation of any multi-LLM framework lies in separating business logic from the language model configuration. This setup is handled through environment variables:

AGENT_LLM_PROVIDER=openai

AGENT_LLM_MODEL=gpt-5

This decoupling makes it possible to switch models without refactoring code. Both LangGraph and CrewAI rely on this modular approach, ensuring full portability across any compatible provider or infrastructure.
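
As a minimal sketch of this decoupling, the Python snippet below reads the two variables shown above and dispatches to a provider adapter. The `LLMConfig` class, the `PROVIDERS` registry, and the echo adapters are hypothetical names introduced for illustration. Real adapters would wrap a vendor SDK or a local inference server.

```python
import os
from dataclasses import dataclass
from typing import Callable, Dict, Mapping, Optional


@dataclass(frozen=True)
class LLMConfig:
    provider: str
    model: str


def load_llm_config(env: Optional[Mapping[str, str]] = None) -> LLMConfig:
    """Read provider and model from the environment instead of hard-coding them."""
    env = os.environ if env is None else env
    return LLMConfig(
        provider=env.get("AGENT_LLM_PROVIDER", "openai"),
        model=env.get("AGENT_LLM_MODEL", "gpt-5"),
    )


# Hypothetical adapters: each takes (model, prompt) and returns text.
# They only echo their input so the sketch runs without network access.
def _echo_openai(model: str, prompt: str) -> str:
    return f"[openai:{model}] {prompt}"


def _echo_anthropic(model: str, prompt: str) -> str:
    return f"[anthropic:{model}] {prompt}"


PROVIDERS: Dict[str, Callable[[str, str], str]] = {
    "openai": _echo_openai,
    "anthropic": _echo_anthropic,
}


def complete(prompt: str, config: LLMConfig) -> str:
    """Agent-facing entry point: it never names a vendor directly."""
    adapter = PROVIDERS.get(config.provider)
    if adapter is None:
        raise ValueError(f"Unknown provider: {config.provider!r}")
    return adapter(config.model, prompt)
```

With this layout, moving from one provider to another means changing two environment variables, while `complete` and the agent logic that calls it stay untouched.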

Semantic search and local RAG

Independent AI agents often rely on a Retrieval-Augmented Generation (RAG) strategy. By integrating an engine such as Haystack or FAISS, the agent can retrieve information from local vector databases, reducing reliance on external APIs. As explained in the Haystack documentation, this technique enhances precision while strengthening data privacy.
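
To illustrate the retrieval step, here is a deliberately simplified Python sketch that runs entirely locally. It is not the Haystack or FAISS API: it assumes a toy bag-of-words "embedding" and a linear scan in place of a real embedding model and vector index, and the function names (`embed`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k local documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Assemble the augmented prompt that would be sent to whichever LLM is configured."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

Only the final prompt leaves the machine, and only if the selected model is remote. With a local model behind it, the whole loop stays on-premises.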

Persistent memory and long-term context

A functional agentic AI architecture must preserve decision history. LangGraph and CrewAI offer persistent memory systems backed by vector stores like Chroma, as well as Redis or PostgreSQL. These components ensure continuity of reasoning even after a restart, a crucial feature for achieving true AI autonomy.
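
The idea of resuming after a restart can be sketched with nothing more than Python's standard `sqlite3` module. This is an illustration under stated assumptions, not the persistence layer of LangGraph or CrewAI: the `StateStore` class, the `checkpoints` table, and the `thread_id` key are hypothetical, and SQLite stands in for Redis or PostgreSQL.

```python
import json
import sqlite3


class StateStore:
    """Minimal checkpoint store: one JSON state blob per conversation thread."""

    def __init__(self, path: str = ":memory:"):
        # Pass a file path instead of ":memory:" to survive process restarts.
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS checkpoints ("
            "thread_id TEXT PRIMARY KEY, state TEXT NOT NULL)"
        )
        self.conn.commit()

    def save(self, thread_id: str, state: dict) -> None:
        """Overwrite the latest checkpoint for this thread."""
        self.conn.execute(
            "INSERT OR REPLACE INTO checkpoints (thread_id, state) VALUES (?, ?)",
            (thread_id, json.dumps(state)),
        )
        self.conn.commit()

    def load(self, thread_id: str) -> dict:
        """Return the last saved state, or an empty history for a new thread."""
        row = self.conn.execute(
            "SELECT state FROM checkpoints WHERE thread_id = ?", (thread_id,)
        ).fetchone()
        return json.loads(row[0]) if row else {"history": []}
```

An agent loop would call `load` at startup, append each decision to `history`, and call `save` after every step, so that a crash loses at most the step in progress.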

Security, governance, and auditability

Multi-LLM agents often handle sensitive corporate data. Secret management relies on vault systems like HashiCorp Vault or Azure Key Vault, while the Microsoft Agent Framework integrates Entra ID authentication by default.

To enhance compliance, Microsoft is already experimenting with prompt shields and automatic PII detection, mechanisms that open-source frameworks can replicate via middleware such as Guardrails AI or Rebuff.

This alignment between security and compliance makes it possible to design governable and auditable agent architectures suited for enterprise environments.

Observability and traceability

Observability is a core requirement in multi-LLM architectures. LangGraph and LangSmith provide detailed tracking of API calls, latency, and error logs. Execution traces can be exported to analytical dashboards or linked with business intelligence tools.

CrewAI Studio includes a visual workflow monitor that allows developers to replay interactions, fine-tune prompts, or adjust routing policies dynamically. These tools make AI performance optimization measurable and continuous.

Multi-LLM routing: flexibility, cost control, and resilience

Multi-LLM routing transforms dependency into a strength. Depending on workload, cost, or latency, an agent can dynamically select the optimal model.

LangGraph offers straightforward configuration:

AGENT_LLM_PROVIDER=anthropic

AGENT_LLM_MODEL=claude-opus

Each node can therefore use a different model. This modularity enables intelligent arbitration: Claude for analytical synthesis, ChatGPT for generation tasks, Gemini for contextual retrieval, and a local model for internal queries.

The most effective routing policies rely on a balance between cost, latency, and quality:

- Heuristic: fixed model selection per task

- Adaptive learning: dynamic switching based on response quality

- Automatic failover: fallback to a secondary model in case of outage or quota limits
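
The heuristic and failover policies listed above can be combined in a few lines of Python. The sketch below is framework-independent and rests on assumptions: the `ROUTES` table, the model names, and the `ProviderError` exception are hypothetical, and each model is represented by a plain callable rather than a real client.

```python
from typing import Callable, Dict, List, Tuple


class ProviderError(Exception):
    """Raised by a model adapter on outage, timeout, or quota exhaustion."""


ModelFn = Callable[[str], str]

# Heuristic policy: a fixed, ordered list of preferred models per task type.
# The first entry is the primary model, the rest are fallbacks.
ROUTES: Dict[str, List[str]] = {
    "summarize": ["local-mistral", "claude"],
    "code": ["chatgpt", "local-mistral"],
}


def route(
    task: str,
    prompt: str,
    models: Dict[str, ModelFn],
    routes: Dict[str, List[str]] = ROUTES,
) -> Tuple[str, str]:
    """Try each model configured for the task in order and return (model_name, answer)."""
    errors = []
    for name in routes[task]:
        try:
            return name, models[name](prompt)
        except ProviderError as exc:
            # Automatic failover: record the failure and move to the next model.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all models failed: " + "; ".join(errors))
```

Returning the name of the model that actually answered makes it straightforward to log the fallback rate. An adaptive policy would reorder `ROUTES` over time based on measured response quality instead of keeping it fixed.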

The Microsoft Agent Framework already documents such model arbitration strategies in Azure AI Foundry. When combined with observability tools such as LangSmith or Power BI, these strategies allow fine-grained cost monitoring and transparent decision tracking across providers.

Deployment models: cloud, on-prem, and hybrid infrastructures

LLM independence also depends on deployment flexibility. Modern frameworks adapt to any environment:

- Managed cloud: via Azure AI Foundry for quick deployment with integrated supervision

- On-prem or BYOC: full data control and predictable costs. LangGraph runs fully self-hosted with monitoring through LangSmith

- Hybrid: combining both approaches. CrewAI can connect local models through Ollama or vLLM, while using remote ones depending on agent roles

Hybrid architectures provide the best balance between performance and data sovereignty. Intensive computation stays in the cloud, while sensitive data remains on-site. This approach is increasingly favored by European organizations seeking to reconcile innovation and compliance.

Continuous evaluation and best practices

The efficiency of an independent AI agent is not defined solely by its response speed. Frameworks like LangGraph and the Microsoft Agent Framework include quality benchmarking and automated QA features:

- Exact match, F1 score, human evaluation, p50/p95 latency

- Fallback rate, cost per task, error rate

- A/B testing and canary releases to compare models in production
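
Several of these metrics can be derived from plain call logs. The following Python sketch assumes a hypothetical `CallRecord` structure with one entry per agent task and uses the nearest-rank method for percentiles. It is one possible way to compute them, not a feature of any framework named here.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CallRecord:
    latency_ms: float
    cost_usd: float
    used_fallback: bool
    failed: bool


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    if not values:
        raise ValueError("percentile of empty list")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records: List[CallRecord]) -> Dict[str, float]:
    """Aggregate latency, fallback, error, and cost metrics over a batch of tasks."""
    if not records:
        raise ValueError("no records to summarize")
    n = len(records)
    latencies = [r.latency_ms for r in records]
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "fallback_rate": sum(r.used_fallback for r in records) / n,
        "error_rate": sum(r.failed for r in records) / n,
        "cost_per_task": sum(r.cost_usd for r in records) / n,
    }
```

Running `summarize` separately on the records of two models gives the side-by-side numbers needed for an A/B comparison.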

The emerging best practices are consistent across frameworks:

- Externalize configuration (never hard-code models)

- Document every tool and make it idempotent, so that repeated executions always produce the same final state

- Establish clear AI governance: encryption, anonymization, and audit trails

- Maintain constant observability using tools such as LangSmith or Azure Monitor
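
Idempotency matters because agents retry: a failover or a resumed run may call the same tool twice. One common way to get it, sketched below in Python with a hypothetical `TicketStore` tool, is to have the caller pass an idempotency key and to return the existing result when that key has already been seen.

```python
from typing import Dict


class TicketStore:
    """Hypothetical agent tool that creates support tickets."""

    def __init__(self) -> None:
        self._tickets: Dict[str, dict] = {}

    def create_ticket(self, idempotency_key: str, title: str) -> dict:
        """Create a ticket once; repeated calls with the same key return it unchanged."""
        if idempotency_key not in self._tickets:
            self._tickets[idempotency_key] = {
                "id": len(self._tickets) + 1,
                "title": title,
            }
        return self._tickets[idempotency_key]

    def count(self) -> int:
        """Number of distinct tickets actually created."""
        return len(self._tickets)
```

The key would typically be derived from the task or conversation step, so that a retried step maps to the same ticket rather than a duplicate.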

These practices, once exclusive to cloud setups, are now essential for on-prem or hybrid AI deployments.

Toward true AI autonomy: 2025 market trends

The rise of independent AI agents signals a paradigm shift. After years of centralized LLM dominance, the industry is now embracing decentralized intelligence.

This shift aligns with broader ecosystem trends:

- OpenAI and Anthropic are refocusing on premium, closed services

- Microsoft is expanding its agentic model through Azure AI Foundry

- The open-source ecosystem is growing around frameworks like LangGraph, CrewAI, and vLLM, which make AI agent development more accessible and less dependent on proprietary solutions. This also simplifies prototyping through hybrid infrastructures and on-prem model execution

In the medium term, multi-LLM architectures are expected to become the default standard, with frameworks integrating proprietary, customized, and community-driven models.

AI autonomy does not mean isolation but the ability to orchestrate multiple intelligences depending on context, confidentiality, and cost.

The LLM-agnostic movement therefore represents the natural extension of the open-source philosophy: finding the right balance between performance and independence, innovation and sustainable governance.

Conclusion: building resilience through openness

Building an AI agent independent from any LLM provider is no longer just a technical challenge, but a strategic necessity. Frameworks like LangGraph, CrewAI, and the Microsoft Agent Framework already provide all the tools needed to create LLM-agnostic systems that can combine multiple models, run locally or in the cloud, and meet the strictest compliance requirements.

This approach ensures three key advantages:

- Freedom of technological choice, without dependency on a single provider

- Optimized performance and cost through multi-LLM routing

- Sovereignty and compliance via auditable hybrid deployments

AI autonomy is no longer a theoretical goal. It is a present reality for organizations adopting open, resilient, and interoperable architectures. By combining cloud power with local control, they are shaping the future of a more distributed, transparent, and sustainable artificial intelligence.

Resources and frameworks mentioned

- LangGraph (LangChain) – AI orchestration and persistent memory

- CrewAI – open-source multi-agent framework with multi-LLM support

- Microsoft Agent Framework – enterprise-grade AI governance and compliance

- Haystack – open-source RAG engine for local semantic search

- LangSmith – observability and tracing for multi-LLM workflows

- Guardrails AI and Rebuff – prompt security and filtering for AI agents
