Public AI arrives on Hugging Face: a new era for open source inference

The Hugging Face ecosystem is expanding. Since September 2025, Public AI has been officially integrated as an Inference Provider on the Hugging Face Hub (source: Hugging Face). This integration is significant as it opens the door to simplified, free, and sovereign access to open source AI models directly from the leading platform for researchers, developers, and AI enthusiasts.

The announcement sparked strong reactions across the community because it is more than the addition of a new inference provider. It illustrates a broader trend: building a public AI ecosystem capable of competing with major private cloud infrastructures while promoting transparency and digital sovereignty.

Public AI on Hugging Face: a strategic integration

With this move, users can now select Public AI as an inference provider directly from Hugging Face model pages. Running a request on a model hosted by Public AI is now as simple as it is with established providers such as Together AI or Fireworks.

The benefits are clear:

- Immediate accessibility: no need to set up your own infrastructure to test a model.

- Interoperability: the API is fully compatible with OpenAI’s API (source: Hugging Face), making project migration seamless.

- AI sovereignty: available models come from public and national initiatives such as the Swiss AI Initiative or AI Singapore (source: Public AI).

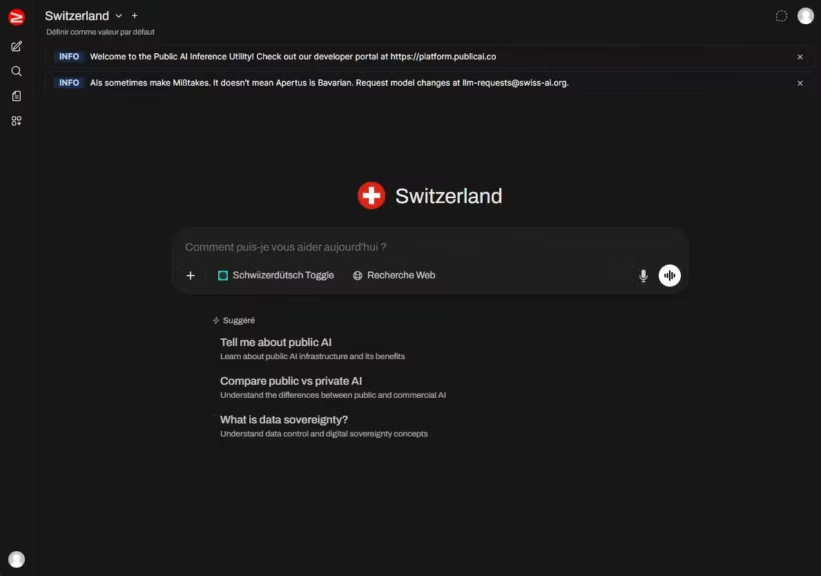

In practice, developers can access these models either with their Hugging Face API key or a key generated on the Public AI platform. This flexibility is designed for both casual users and professional teams that want to integrate Public AI into their long-term workflows.
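Because the API follows the OpenAI chat-completions format, a request can be sketched with nothing but the Python standard library. The example below is a minimal illustration, not an official snippet: it assumes the Hugging Face router endpoint at `https://router.huggingface.co/v1/chat/completions`, a Hugging Face token supplied by the caller, and the repository id `swiss-ai/Apertus-70B-Instruct-2509` with a `:publicai` suffix to pin the provider. Check the model page for the exact identifier before relying on it.

```python
import json
import urllib.request

# Assumptions (not stated in the article): router URL and model repo id.
ROUTER_URL = "https://router.huggingface.co/v1/chat/completions"
MODEL_ID = "swiss-ai/Apertus-70B-Instruct-2509:publicai"


def build_chat_request(prompt, token, model=MODEL_ID, url=ROUTER_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

To try it, call `send(build_chat_request("Hello", token))` with a valid token. Keeping the request builder separate from the network call makes the payload easy to inspect before anything is sent.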

A seamless integration in the Hugging Face interface

Hugging Face designed this integration to be almost invisible for users. Three main points stand out:

- User preferences: in the Hugging Face account settings, it is now possible to rank inference providers by preference. Developers can set Public AI as their default choice (source: Hugging Face documentation).

- Model page widget: every model page displays available providers. If Public AI is supported, it appears in the inference options, allowing quick testing.

- Python and JS SDKs: Hugging Face updated its libraries (huggingface_hub and @huggingface/inference) to support Public AI. The official example with the Swiss model Apertus-70B shows that simply adding provider="publicai" switches to this infrastructure.
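The SDK route can be sketched as follows. This is an illustrative example rather than the official one: it assumes a recent version of huggingface_hub that recognizes the "publicai" provider, a token in the `HF_TOKEN` environment variable, and the repository id `swiss-ai/Apertus-70B-Instruct-2509`. The helper names `build_messages` and `ask_public_ai` are hypothetical.

```python
import os

# Assumed repository id; confirm it on the model page.
MODEL_ID = "swiss-ai/Apertus-70B-Instruct-2509"


def build_messages(prompt):
    """Wrap a prompt in the chat message format expected by the API."""
    return [{"role": "user", "content": prompt}]


def ask_public_ai(prompt, token=None):
    """Send one chat request routed to Public AI and return the reply text."""
    # Imported lazily so the module loads even without huggingface_hub installed.
    from huggingface_hub import InferenceClient

    client = InferenceClient(
        provider="publicai",
        api_key=token or os.environ["HF_TOKEN"],
    )
    completion = client.chat.completions.create(
        model=MODEL_ID,
        messages=build_messages(prompt),
    )
    return completion.choices[0].message.content
```

Switching to another provider should only require changing the `provider` argument, which is the point of the integration.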

This focus on developer experience shows Hugging Face’s intent to diversify inference providers, reduce reliance on its own servers, and highlight public AI projects that promote transparency and governance.

Which AI models are available via Hugging Face and Public AI?

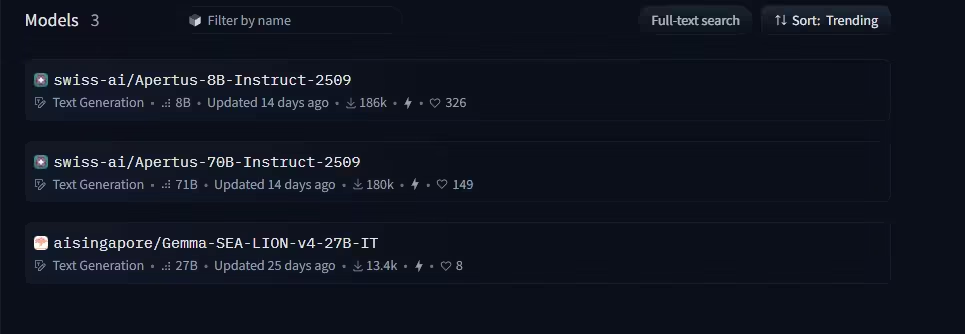

For now, Public AI highlights two flagship sovereign AI models:

- Apertus-70B (Switzerland), developed by the Swiss AI Initiative. The 8B version is also accessible.

- SEA-LION v4 (Singapore), built by AI Singapore.

These open source AI models can be accessed both via the Hugging Face API and the web interface chat.publicai.co. This chat system is powered by Open WebUI, an open source project known for its modular and intuitive interfaces (source: Public AI Inference Utility).

The official roadmap mentions upcoming partnerships with other countries, including Spain and Canada (source: Public AI, About the Inference Utility). The catalog can be tracked on the Hugging Face list of compatible models.

An infrastructure designed for resilience

Behind this integration lies an ambitious technical architecture:

- vLLM backend: optimized inference engine for speed and memory efficiency.

- Distributed deployment: servers hosted across public and private clusters worldwide.

- OpenAI-compatible APIs: standardized for developer adoption.

- Global load balancing: transparent routing of inference requests based on available resources (source: Hugging Face).
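To make the idea of routing by available resources concrete, here is a toy sketch of a least-loaded selection policy. It is not Public AI's implementation, whose details are not described in the sources. The `Cluster` class, its fields, and the selection rule are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    """Hypothetical view of one inference cluster."""
    name: str
    capacity: int        # maximum concurrent requests
    in_flight: int = 0   # requests currently being served
    healthy: bool = True

    @property
    def free_slots(self):
        return self.capacity - self.in_flight


def pick_cluster(clusters):
    """Return the healthy cluster with the largest share of free capacity."""
    candidates = [c for c in clusters if c.healthy and c.free_slots > 0]
    if not candidates:
        raise RuntimeError("no cluster has spare capacity")
    return max(candidates, key=lambda c: c.free_slots / c.capacity)


def route(clusters):
    """Assign one request to a cluster and record it as in flight."""
    chosen = pick_cluster(clusters)
    chosen.in_flight += 1
    return chosen.name
```

A production balancer would also weigh latency, model placement, and donated GPU quotas. The sketch shows only the core decision of sending each request where the most headroom remains.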

The goal is to ensure stable availability, even though part of the infrastructure depends on GPU time and resources donated by national and industrial partners.

Uncertainties remain, such as the real scalability of this infrastructure under massive workloads.

Free today, viable tomorrow?

One of the most striking aspects is that using Public AI through Hugging Face is currently free. According to the official documentation, this free access relies on two levers:

- GPU time donated by institutions and private partners.

- Advertising subsidies covering part of the cost.

In the long run, sustainability will depend on:

- public contributions (states, institutions),

- subscription models (Plus or Pro tiers under consideration),

- and a public utility approach, similar to essential services such as water or electricity (source: Public AI, About the Inference Utility).

Pricing models and subscription timelines have not yet been disclosed.

A step toward sovereign public AI

Beyond the technical side, this integration is a milestone in Hugging Face’s strategy:

- Offering more choices to developers.

- Supporting an open source, non-profit AI project focused on transparency and public governance.

- Providing visibility for national AI projects aiming to be credible alternatives to dominant private APIs.

As the Public AI manifesto highlights, the vision is to build AI as public infrastructure, much like libraries or power grids.

The arrival of Public AI on Hugging Face does not solve all challenges. Funding, scalability, and competitiveness against private inference providers remain open issues. But it sends a strong signal: the open source AI ecosystem is determined to build reliable, accessible, and sustainable alternatives.

Conclusion

With the integration of Public AI on Hugging Face, open source inference takes a decisive step forward. Testing and deploying sovereign models like Apertus-70B or SEA-LION v4 is now as simple as running a query on GPT or LLaMA.

Apertus-70B ranks among the best open source AI models, but it still falls short of closed-source LLMs such as GPT-5 or Claude 4 in versatility and performance.

This collaboration between a global platform and a public initiative reflects a deeper movement: making AI a common good rather than just a commercial product.

Points to watch:

- Which new national AI models will be added?

- How will free quotas and pricing policies evolve?

- How will Public AI perform compared to established private inference providers?
