The rise of public AI providers towards digital sovereignty

Artificial intelligence is often associated with private giants like OpenAI, Google or Anthropic. Yet another path is emerging, that of public AI providers, designed as public utility services. Just like electricity or water, access to AI could one day be considered a common good, managed by public institutions or nonprofit consortia.

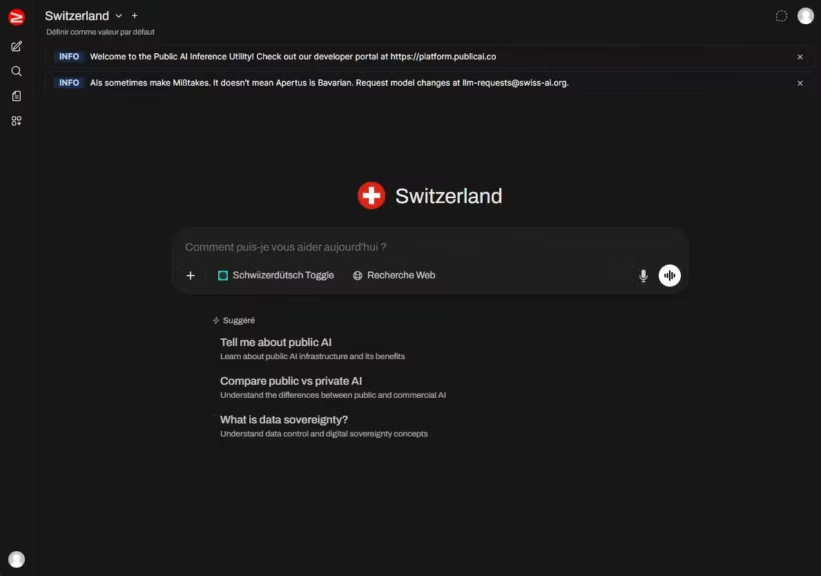

This is the vision behind Public AI, an open source project that aims to make inference accessible to everyone, either free or at low cost, while guaranteeing transparency and digital sovereignty (Public AI, About us).

Public AI, artificial intelligence as a public service

The Public AI Inference Utility presents itself as an equivalent to essential services, an AI on tap where anyone can access sovereign AI models as easily as turning on a faucet (About the Inference Utility). The analogy highlights the idea that AI should not remain restricted to those with large financial resources but be available in a stable and equitable way.

The current infrastructure relies on two main pillars:

- A vLLM backend optimized to run large-scale AI models efficiently.

- An open source interface based on Open WebUI, offering a simple chat experience, accessible for free at chat.publicai.co.

Users can interact with Apertus-70B (Swiss model) or SEA-LION v4 (Singaporean model) through a clear interface, without touching the command line as many open source AI projects require. This approach embodies a real digital public service, designed to prioritize accessibility over technical complexity. However, in its current form, this service is still far from matching the performance, multimodality and precision of ChatGPT or Claude.

An open API under constraints

For developers and researchers, Public AI also offers an inference API with full documentation (Public AI docs). It follows a familiar standard, an OpenAI-compatible API that is easy to integrate into existing projects. Hugging Face even decided to integrate this API into its system of Inference Providers, allowing Public AI models to be called directly through official Hugging Face SDKs in Python and JavaScript.
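To make "OpenAI-compatible" concrete, here is a minimal sketch of a chat completion request built with the Python standard library only. The base URL, the model identifier and the `PUBLICAI_API_KEY` variable name are assumptions made for illustration and should be checked against the Public AI docs. The request path and JSON shape follow the usual OpenAI chat completions convention.

```python
import json
import urllib.request

# Assumptions for illustration only: verify both values in the Public AI docs.
BASE_URL = "https://api.publicai.co/v1"
MODEL = "swiss-ai/apertus-70b-instruct"


def build_chat_request(prompt, api_key, base_url=BASE_URL, model=MODEL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(response_body):
    """Return the assistant text from an OpenAI-style response dictionary."""
    return response_body["choices"][0]["message"]["content"]


def send(request, timeout=60):
    """Send the request over the network and return the assistant text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

A call would then look like `send(build_chat_request("What is a public AI utility?", os.environ["PUBLICAI_API_KEY"]))`, with `os` imported. Because the format is the familiar one, existing OpenAI client code should in principle only need a different base URL and key.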

Yet this openness comes with strict limitations to avoid overload:

- 20 requests per minute during specific events such as Swiss AI Weeks (Public AI documentation).

While sufficient for occasional usage, this quickly becomes a bottleneck for larger-scale AI projects.
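A client can stay under such a cap by pacing its own calls. The sketch below is a simple sliding-window limiter, assuming a budget of 20 calls per rolling 60 seconds. The class name and parameters are hypothetical, and how the server actually counts requests is not described in the source.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` calls in any rolling `period` seconds."""

    def __init__(self, max_calls=20, period=60.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.period = period
        self._clock = clock      # injectable so the limiter can be tested
        self._sleep = sleep
        self._stamps = deque()   # timestamps of recent calls, oldest first

    def wait_time(self):
        """Return how many seconds to wait before the next call is allowed."""
        now = self._clock()
        # Forget calls that have left the rolling window.
        while self._stamps and now - self._stamps[0] >= self.period:
            self._stamps.popleft()
        if len(self._stamps) < self.max_calls:
            return 0.0
        return self.period - (now - self._stamps[0])

    def acquire(self):
        """Block until a call is allowed, then record it."""
        while True:
            delay = self.wait_time()
            if delay <= 0:
                break
            self._sleep(delay)
        self._stamps.append(self._clock())
```

Calling `limiter.acquire()` before each API request keeps a batch job within the budget. It also shows the bottleneck in numbers: at 20 requests per minute, a job of 10,000 prompts needs more than eight hours.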

Hugging Face as a distribution hub

The integration with Hugging Face marks an important turning point. Until now, accessing sovereign AI models often required complex technical steps. Today, it is enough to select Public AI as an inference provider directly in Hugging Face Hub or through the official SDKs.

This provides three immediate benefits:

- Increased accessibility: the technical barrier is drastically reduced.

- Global visibility: public AI models are placed on equal footing with private company offerings.

- Interoperability: the OpenAI-compatible API allows developers to reuse existing code seamlessly.

However, this broader distribution also raises challenges. While inference via the web interface remains controllable (one user sending one request at a time), inference via the API can create massive loads if automated calls multiply.

Several mechanisms work together to protect the Public AI infrastructure and keep the service reliable. The first two sit on the Hugging Face side, the third on the Public AI side:

- Account-based quotas: free-tier users get best-effort access with implicit limits on requests and storage. Pro, Team and Enterprise accounts benefit from higher quotas, faster APIs and priority compute resources (Hugging Face storage limits).

- Prioritization: Pro subscribers and above enjoy priority access with lower throttling risks and shorter delays, using mechanisms like ZeroGPU for Spaces (Artificial Intelligence News).

- Infrastructure protection: Public AI runs on a distributed vLLM backend with global load balancing, ensuring fair distribution of requests and reducing risks of saturation (Hugging Face blog).

In short, quota management and subscription-based prioritization at Hugging Face, combined with a resilient backend at Public AI, keep the nonprofit Public AI ecosystem stable.
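On the client side, a well-behaved script should react to throttling by backing off rather than retrying immediately. The sketch below shows capped exponential backoff with full jitter. `Throttled` is a hypothetical exception standing in for whatever an over-quota response looks like in practice (commonly an HTTP 429 status, although the source does not confirm this). The retry counts and delays are illustrative defaults.

```python
import random
import time


class Throttled(Exception):
    """Hypothetical signal that the server rejected a call as over quota."""


def backoff_delays(retries, base=1.0, cap=30.0, rng=random.random):
    """Yield one randomized delay per retry, growing exponentially up to `cap`."""
    for attempt in range(retries):
        yield rng() * min(cap, base * 2 ** attempt)


def call_with_backoff(call, retries=5, sleep=time.sleep, **backoff_options):
    """Run `call`, sleeping and retrying each time it raises Throttled."""
    for delay in backoff_delays(retries, **backoff_options):
        try:
            return call()
        except Throttled:
            sleep(delay)
    # Final attempt: if it is still throttled, let the exception propagate.
    return call()
```

Randomizing the delay spreads retries from many clients over time, so that a burst of automated calls does not come back as a second burst a moment later.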

The stakes of digital sovereignty

The rise of public AI providers goes beyond technology. It addresses a geopolitical and cultural challenge: avoiding complete dependency on American or Chinese AI giants for access to artificial intelligence models.

Public AI is already supported by initiatives such as the Swiss AI Initiative, AI Singapore, AI Sweden and the Barcelona Supercomputing Center (Public AI, About us). This international approach shows that digital sovereignty in AI should not be limited to national strategies but can be organized through networks of countries sharing the same vision.

Free access with conditions

Today, Public AI offers free inference access. This model is sustained by:

- GPU time donations from public and private partners.

- Grants and funding from organizations such as Mozilla and the Future of Life Institute.

- In the future, the adoption of hybrid economic models including ads, citizen contributions similar to Wikipedia, and Pro subscriptions (About the Inference Utility).

While this free access is a powerful argument, it also reveals a fragility, namely the long-term financial and technical sustainability of such a service. Without strong institutional support, a public AI utility may struggle to withstand scaling demands.

The ambition of public AI providers

Public AI providers embody a promise: to make artificial intelligence a common good, accessible to all while ensuring transparency and sovereignty. Public AI demonstrates this ambition by combining a user-friendly chat interface at chat.publicai.co, an open API and strategic integration with Hugging Face.

Yet the experiment also highlights critical challenges. Infrastructure scalability, economic sustainability and the capacity to compete with private infrastructure all remain open questions. At the same time, progress is accelerating. With advances in hardware and software optimization, it is already possible to run small AI models directly on a personal computer. The gap between open source LLMs and proprietary AI solutions is steadily shrinking.

If AI is to become a universal tool, the path of public inference services is likely to gain importance in the coming years. The success or failure of Public AI will serve as a reference point to evaluate whether we can truly achieve digital sovereignty in artificial intelligence.
