Which Qwen 3 model should you choose? Complete comparison of the 235B, 32B, 30B, 14B, 8B and 4B versions

Launched in 2025 by Alibaba Cloud, the Qwen 3 series has become one of the most complete families of open-source AI models on the market (or more precisely, open-weight models). From the ultra-light Qwen3-1.7B designed for modest PCs to the computing powerhouse Qwen3-235B-A22B, each version fits a specific use case: research, development, local inference, or large-scale cloud deployment.

But which Qwen 3 model should you pick based on your hardware and workflow? Should you go for the Dense Qwen3-32B, known for its accuracy, or the Mixture-of-Experts Qwen3-30B-A3B, praised for its speed and lower VRAM usage? And how do the mid-range options like Qwen3-14B and Qwen3-8B perform, often underestimated yet ideal for local inference on 16 GB GPUs?

The Qwen 3 project represents more than a technical evolution. It reshapes the hierarchy of open-weight large language models. Each version offers an extended context window (up to 128K tokens or even 1 million, according to the QwenLM report) and supports 119 languages, from French and English to Chinese and Arabic.

This guide reviews the 235B, 32B, 30B, 14B, 8B and 4B models, comparing their reasoning performance, VRAM footprint and ideal use cases. The goal is to help you choose the best Qwen 3 model for your setup, whether you’re running an RTX 5090, a laptop, or an AI server.

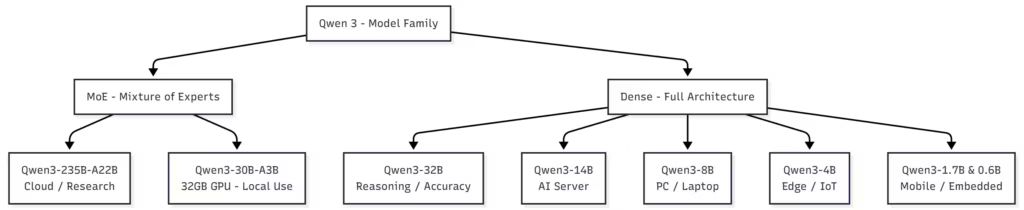

Overview of the Qwen 3 family: Dense, MoE and Thinking Mode

The Qwen 3 lineup uses a flexible architecture designed to scale from laptops to GPU clusters. The idea is simple: deliver a model optimized for every environment without sacrificing reasoning quality or inference speed.

According to the official QwenLM blog, the family is split into two main categories, the Dense models and the Mixture-of-Experts (MoE) models, plus one major innovation introduced with this generation, the Thinking Mode.

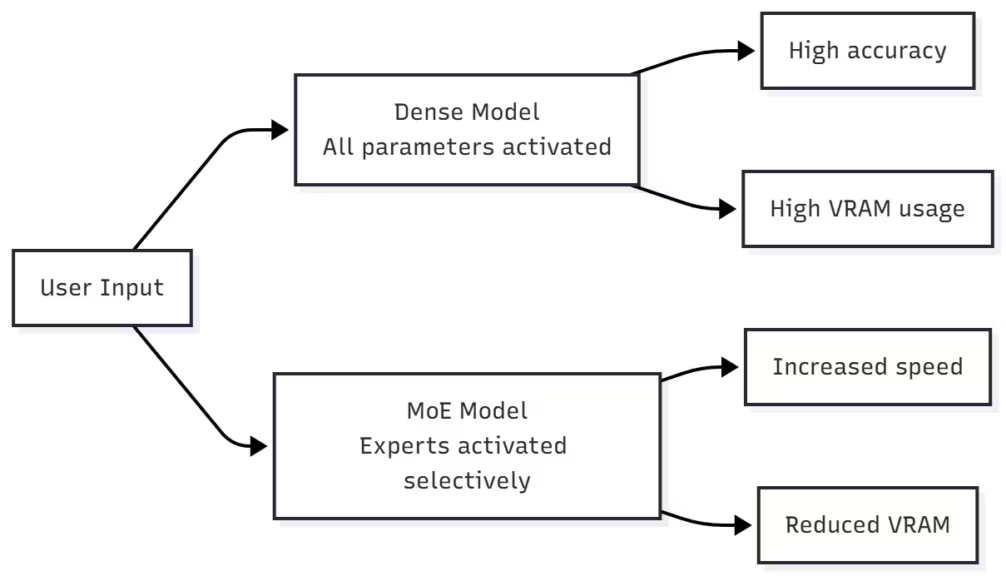

The two main architectures

Dense models, such as Qwen3-32B or Qwen3-14B, follow a traditional architecture where all parameters are activated at each computation step. This ensures maximum stability and high precision for complex reasoning tasks like mathematics, coding or logical analysis. The trade-off is higher VRAM usage and slightly slower token generation.

MoE (Mixture-of-Experts) models, such as Qwen3-30B-A3B or Qwen3-235B-A22B, take a smarter approach: only a few “experts” are activated per token, reducing memory load and boosting throughput. This structure enables models to generate tokens up to 4-6 times faster than dense models of similar size, with only a minor quality drop in typical tasks.

The Thinking Mode

Another major improvement is the Thinking Mode. Built directly into Qwen 3 (not limited to QwQ), it activates a step-by-step reasoning process similar to chain-of-thought. The model can “think” before answering, improving accuracy on complex or multi-step questions. You can toggle it with the /think and /no_think commands, as mentioned on Hugging Face’s Qwen3-32B page.

The Thinking Mode is particularly useful for:

- advanced coding and code planning,

- mathematical or logical problems,

- autonomous AI agents, where reliable reasoning is essential.

Context windows and compatibility

One of the strongest advantages of the Qwen 3 series is its large context window. All models handle at least 128 K tokens, with some extending to 256 K or even 1 million tokens using RoPE scaling and YaRN extension techniques. This makes Qwen 3 a credible alternative to proprietary models such as GPT-4 Turbo for RAG (Retrieval-Augmented Generation) and large-scale document analysis.

In practice, effective context length depends on both model and hardware. A Qwen3-30B-A3B quantized at 4-bit can handle extended contexts on an RTX 5090 (32 GB), while a Qwen3-8B runs more comfortably with a 64 K token window.

Quick comparison of Qwen 3 models

The Qwen 3 family covers a wide range of models, from the 0.6B built for mobile and IoT devices to the massive 235B designed for cloud-scale AI research. Each version aims for a specific balance between speed, accuracy and memory consumption.

The table below summarizes the core specifications to help you quickly visualize the main differences between Dense and Mixture-of-Experts (MoE) architectures.

| Model | Type | Total parameters | Active parameters | VRAM (Q4) | Context window | Typical use case |

|---|---|---|---|---|---|---|

| Qwen3-235B-A22B | MoE | 235 B | 22 B | 80 GB + | 256 K – 1 M | Research, cloud AI, large-scale reasoning |

| Qwen3-32B | Dense | 32 B | 32 B | 27 GB | 128 K | Complex reasoning, analytical tasks |

| Qwen3-30B-A3B | MoE | 30 B | 3 B | 19 GB | 128 K | Local inference, fast and efficient |

| Qwen3-14B | Dense | 14 B | 14 B | 12 GB | 128 K | Enterprise AI, internal servers |

| Qwen3-8B | Dense | 8 B | 8 B | 8 GB | 128 K | PC, laptop, local AI apps |

| Qwen3-4B | Dense | 4 B | 4 B | 5 GB | 128 K | Edge devices, lightweight servers |

| Qwen3-1.7B | Dense | 1.7 B | 1.7 B | 3 GB | 128 K | Mobile or CPU-only offline AI |

| Qwen3-0.6B | Dense | 0.6 B | 0.6 B | 2 GB | 128 K | IoT, embedded automation tools |

Key takeaways from the comparison

- MoE models (30B-A3B and 235B-A22B) deliver unmatched efficiency, since only a few experts are activated per token.

- Dense models, such as Qwen3-32B or 14B, remain the most accurate for logic-heavy or analytical workloads.

- Mid-range models (14B and 8B) provide the best performance-to-accessibility ratio, ideal for 16 GB GPUs.

- Light models (4B, 1.7B) ensure low latency and easy deployment for portable or embedded setups.

According to RunPod and LLM-Stats, the Qwen3-30B-A3B remains the best overall compromise for local inference on 32 GB GPUs. It often outperforms the 32B Dense model in speed while staying lighter to load.

Qwen3-235B-A22B: the flagship model

The Qwen3-235B-A22B represents the top tier of the Qwen lineup. Developed by Alibaba Cloud, it embodies the project’s goal to provide an open-weight LLM rivaling proprietary systems like GPT-4 or Gemini 1.5 Ultra.

This model uses a Mixture-of-Experts (MoE) structure: out of 235 billion total parameters, only 22 billion are active per token. This approach drastically reduces GPU requirements while maintaining deep reasoning and exceptional linguistic consistency. According to the QwenLM blog, each forward pass activates 8 experts out of 128, balancing diversity and performance.

Capabilities and performance

- Context window: 256 K tokens by default, extendable to 1 million using YaRN and RoPE scaling (Qwen3 arXiv report).

- Languages supported: 119 languages and dialects, including English, French, Chinese, Arabic and Spanish.

- Performance: over 90% scores on AIME 2025, LiveBench Math and Arena Hard benchmarks, according to LLM-Stats.

- Use cases: fine-tuning for research, distributed inference, large-scale RAG, multimodal analysis with Qwen3-VL.

Hardware requirements

This model targets high-end infrastructures only. A full run requires more than 80 GB of VRAM, or multiple GPUs connected through NVLink. Even with 4-bit quantization, a single 32 GB GPU (RTX 5090, A100 or H100) cannot provide a smooth inference experience. Local users must rely on:

- multi-GPU clusters,

- or cloud services such as RunPod, Lambda Labs, or vLLM Cloud.

According to Tech Reviewer, a dual-H100 setup reaches around 120 tokens/s in INT4 mode while consuming over 600 W of GPU power.

Summary

| Feature | Detail |

|---|---|

| Architecture | Mixture-of-Experts (22 B active / 235 B total) |

| Context window | 256 K to 1 M tokens |

| Languages | 119 languages and dialects |

| Performance | > 90% on logic and math benchmarks |

| Ideal use | Cloud AI, research, autonomous reasoning, RAG |

| Limitation | Not executable locally without multi-GPU setup |

Qwen3-32B vs Qwen3-30B-A3B: the key comparison

This is where the real dilemma lies for most users. Both the Qwen3-32B (Dense) and the Qwen3-30B-A3B (MoE) are the most popular choices for local inference on high-end GPUs. They deliver an excellent balance of reasoning power, efficiency and VRAM usage, but follow very different architectural philosophies.

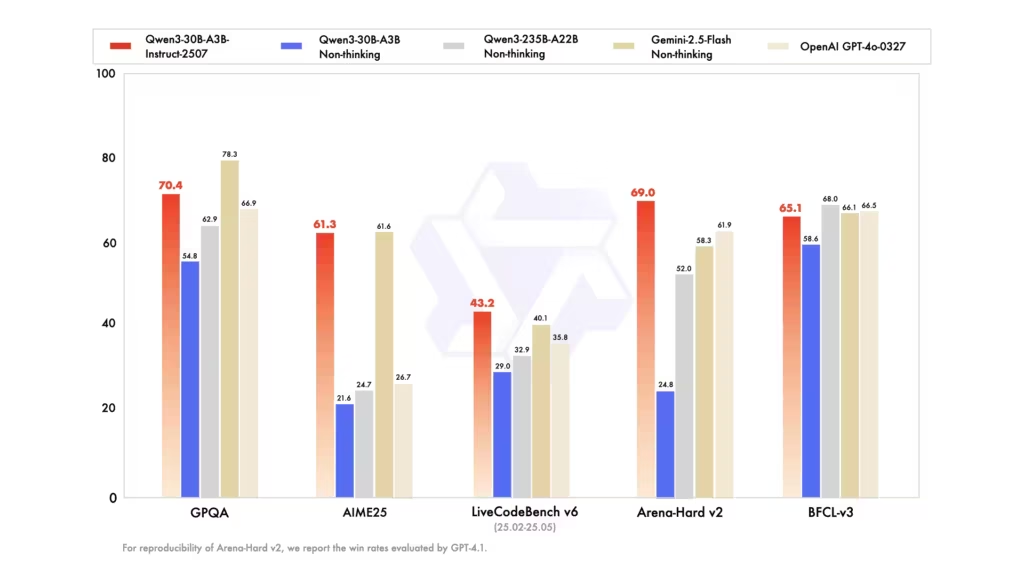

Performance and speed

Community benchmarks clearly show a speed advantage for the 30B-A3B. Based on tests shared on Reddit /r/LocalLLaMA, the model reaches up to 190 tokens/s on an RTX 5090 in Q4_K_M quantization with an 8K context. By comparison, the 32B Dense usually stays around 50–60 tokens/s under similar conditions. There is currently no data available for the new NVFP4 format, yet these models are already listed on Hugging Face in the optimized NVFP4 version.

This difference comes from the Mixture-of-Experts mechanism: only a few experts are activated per token, which drastically lowers GPU load. During long conversations or multi-turn chat sessions, the Qwen3-30B-A3B maintains low latency and strong contextual coherence.

According to Kaitchup Substack, this model can be up to 6× faster in certain generation scenarios with equivalent batch sizes, while keeping dense-like accuracy across general benchmarks.

VRAM usage and efficiency

The Qwen3-30B-A3B is notably lighter:

- around 19 GB VRAM in INT4,

- versus 27 GB for the Qwen3-32B Dense, according to Unsloth.

This makes it possible for most users with RTX 4090 or 5090 (32 GB) cards to run the model entirely in GPU memory without CPU offload, leaving headroom for large context windows up to 128 K tokens.

Precision and behavior

On logical and mathematical benchmarks such as AIME, Arena Hard and LiveBench, the Qwen3-32B Dense still holds a slight accuracy edge. It produces fewer arithmetic mistakes and fewer hallucinations in long-form reasoning. It remains the top choice for:

- scientific or analytical tasks,

- advanced code validation,

- or agent-based computation chains.

The 30B-A3B, however, excels in conversational, creative and generative use cases. Its fluidity and speed make it ideal for text generation, RAG applications, or local AI assistants.

Summary

| Criterion | Qwen3-30B-A3B (MoE) | Qwen3-32B (Dense) |

|---|---|---|

| Architecture | Mixture-of-Experts (3B active / 30B total) | Fully dense |

| VRAM (Q4) | ~19 GB | ~27 GB |

| Speed (RTX 5090) | 140–190 tok/s | 50–60 tok/s |

| Logical precision | Good | Excellent |

| Ideal use case | Fast local AI, prototyping, agents | Logic, math, complex reasoning |

| Languages supported | 119 | 119 |

| Context window | 128 K | 128 K |

In practice, the Qwen3-30B-A3B stands out as the best choice for smooth local inference on 32 GB GPUs, while the Qwen3-32B remains the reference for users prioritizing maximum precision and deterministic reasoning.

Mid-range models: Qwen3-14B and Qwen3-8B

Between the high-end cloud-ready models and the ultra-light local versions, Qwen3-14B and Qwen3-8B occupy a strategic position. They target users who want solid reasoning performance with moderate VRAM needs, without investing in expensive hardware. Both follow the Dense architecture, maintaining the Qwen philosophy: open, multilingual, and built for robust reasoning, yet still manageable on local machines.

Qwen3-14B: the balanced choice

The Qwen3-14B is often described as the sweet spot in the lineup. It delivers stability close to the 32B while reducing hardware demand by roughly 40%. According to Hugging Face benchmarks, it easily handles 128 K context windows, showing excellent linguistic understanding and sustained coherence during long dialogues.

Key specs:

- Architecture: Dense, 14 billion parameters

- VRAM (Q4): ~12 GB

- Speed: ~70 tok/s on RTX 5090 (from RunPod benchmarks)

- Recommended uses: enterprise servers, internal assistants, complex conversational agents

The 14B fits perfectly on GPUs with 16–24 GB VRAM (RTX 4080 / 4090), making it an excellent option for independent developers or small AI teams that want a reliable, open-weight model without relying on the cloud.

Qwen3-8B: the local all-rounder

The Qwen3-8B sits at the intersection of performance and accessibility. With 8 billion parameters, it runs comfortably on 8 GB GPUs while delivering results that surpass other models in the same range, such as Mistral 7B. The QwenLM GitHub documentation notes it was trained on 36 trillion multilingual tokens, ensuring strong grammar and fluency across 119 languages.

Highlights:

- VRAM (Q4): ~8 GB

- Context window: 128 K tokens

- Speed: ~120 tok/s on RTX 4070

- Best use cases: text generation, local chatbots, creative writing, AI tools for prototyping

For PC users or modern laptops, Qwen3-8B is the best entry point into the Qwen 3 ecosystem. It allows users to experiment with the Thinking Mode, run complex prompts, and build custom AI agents without any cloud dependency.

Summary

| Criterion | Qwen3-14B | Qwen3-8B |

|---|---|---|

| Architecture | Dense | Dense |

| VRAM (Q4) | ~12 GB | ~8 GB |

| Speed (RTX 5090) | ~70 tok/s | ~120 tok/s |

| Context window | 128 K | 128 K |

| Ideal use case | Enterprise AI, internal assistants | Local PC, laptop, prototyping |

| Languages supported | 119 | 119 |

These two models are ideal gateways into the Qwen 3 family. They are powerful enough for demanding reasoning tasks yet light enough for comfortable local inference on mainstream GPUs.

Lightweight models: Qwen3-4B, Qwen3-1.7B and Qwen3-0.6B

The Qwen3-4B, 1.7B, and 0.6B models represent the most accessible side of the family. They are designed for low-power machines, or even CPU-only environments, allowing developers to use generative AI on devices with limited resources such as mini servers, laptops, embedded systems, or IoT hardware.

Despite their smaller size, these models retain full compatibility with the Qwen 3 ecosystem, including Hugging Face Transformers, vLLM, Ollama, and LM Studio.

Qwen3-4B: portable yet capable

The Qwen3-4B is the smallest model that still delivers serious usability. With 4 billion parameters, it fits in around 5 GB of VRAM when quantized to Q4, while maintaining coherent text generation over long contexts. It’s well-suited for personal servers, compact workstations, or Windows 11 setups with 8 GB GPUs.

According to RunPod, it maintains response times under 300 ms/token, with support for 128 K token contexts. Its main limits appear in deep logical reasoning or long multi-turn conversations.

Recommended uses: offline chatbots, local summarization tools, lightweight automation, or embedded AI assistants on microservers.

Qwen3-1.7B: made for laptops and low-end PCs

The Qwen3-1.7B is even more compact, optimized to run on 4 GB GPUs or directly on CPU. It supports Thinking Mode and keeps the full multilingual structure with 119 languages. Its main strength lies in its instant reactivity: startup takes less than two seconds, and it produces smooth text without long loading times.

Ideal applications include:

- offline personal assistants,

- automation scripts on Windows or Linux,

- embedded conversational interfaces in desktop or web apps.

Even with its small size, it generates natural and balanced text, especially in short commands and concise instructions.

Qwen3-0.6B: ultra-light for IoT and edge AI

At only 600 million parameters, the Qwen3-0.6B is built for micro devices, Raspberry Pi 5, and IoT edge systems. Its memory footprint is below 2 GB, and it can perform inference in real time on ARM CPUs. It’s obviously limited in reasoning and long-context coherence, but it excels in classification, keyword detection, or text-command generation.

This model perfectly illustrates the modular philosophy of the Qwen 3 family, covering every possible use case, from large-scale servers to embedded offline systems.

Summary

| Model | Parameters | VRAM (Q4) | Context | Ideal use case |

|---|---|---|---|---|

| Qwen3-4B | 4 B | 5 GB | 128 K | Local AI, compact servers |

| Qwen3-1.7B | 1.7 B | 3 GB | 128 K | Laptops, low-end PCs |

| Qwen3-0.6B | 0.6 B | 2 GB | 128 K | IoT, edge AI, ARM CPU setups |

These smaller models demonstrate the scalability of the Qwen 3 ecosystem. They cannot match the reasoning depth of the 30B or 32B versions, but they guarantee minimal latency and easy integration into lightweight AI pipelines.

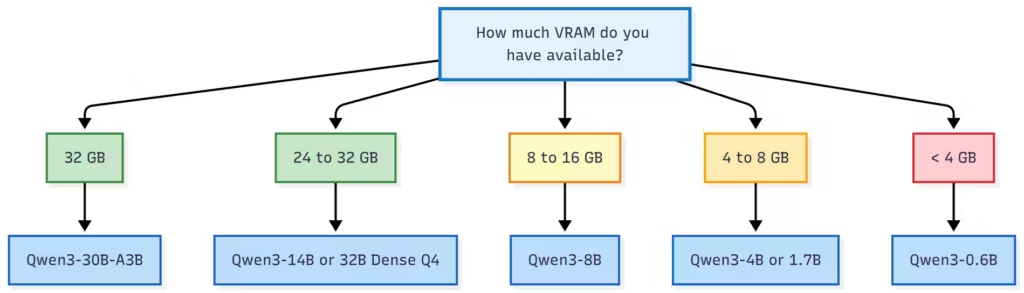

How to choose the right Qwen 3 model

Choosing the right Qwen 3 model depends on your hardware, your use case, and the level of precision you need. All models share the same core architecture, but their behavior varies greatly depending on VRAM capacity, context length, and task complexity. Here are the key selection factors to consider.

1. Available VRAM capacity

GPU memory is the first constraint to check. Dense models require more VRAM since all parameters are active, while MoE models activate only a fraction of experts. According to RunPod and Unsloth:

- Qwen3-30B-A3B (MoE) → ~19 GB (Q4)

- Qwen3-32B (Dense) → ~27 GB (Q4)

- Qwen3-14B → ~12 GB

- Qwen3-8B → ~8 GB

- Qwen3-4B → ~5 GB

👉 Tip: Always keep at least 2–4 GB of free VRAM on your GPU to avoid swap delays and KV cache slowdowns.

2. Desired generation speed

Token generation speed depends directly on model type, quantization, and batch size. MoE models like 30B-A3B are typically 4–6× faster than their dense equivalents, according to Kaitchup Substack. If you want a responsive model for coding, chat, or interactive tasks, go for:

- Qwen3-30B-A3B for GPUs ≥ 24 GB,

- or Qwen3-8B / 14B for GPUs ≤ 16 GB.

For long-running tasks like document analysis, RAG, or structured text generation, dense models provide more consistent output even if they’re slower.

3. Required reasoning precision

Benchmarks consistently show:

- Qwen3-32B tops AIME, Arena Hard, and LiveBench Math,

- Qwen3-30B-A3B performs close in conversational and general-purpose contexts,

- Qwen3-14B and 8B remain reliable for writing, code generation, and support chat.

👉 For analytical or technical AI assistants, choose 32B Dense. For versatile local AI agents, 30B-A3B is more efficient.

4. Context window size

All Qwen 3 models support at least 128 K tokens, and some like 235B-A22B and 30B-A3B extend up to 256 K or even 1M tokens using YaRN or RoPE scaling (QwenLM arXiv report). If your workflow involves long documents, PDFs, or source code, pick a model with an extended context window. For most everyday tasks, 128 K is more than enough.

5. Execution environment

| Environment | Recommended model | Why it fits well |

|---|---|---|

| High-end PC (RTX 5090, 32 GB) | Qwen3-30B-A3B | Fast, smooth, excellent VRAM/perf ratio |

| Pro workstation (RTX 4090, 24 GB) | Qwen3-14B or 30B-A3B (Q4) | Balanced between precision and efficiency |

| Mid-range PC (RTX 4070 / 4070 Ti) | Qwen3-8B | Stable, lightweight, full multilingual support |

| Laptop AI / GPU 8 GB | Qwen3-4B | Instant startup, low latency |

| Cloud / multi-GPU cluster | Qwen3-235B-A22B | Maximum power and reasoning depth |

| Edge / IoT / ARM CPU | Qwen3-1.7B or 0.6B | Minimal power usage, offline AI capability |

In short, the best Qwen 3 model is the one that balances power, speed, and VRAM usage for your hardware. 32 GB GPUs fully unlock the 30B-A3B, while mid-range setups are best served by 14B or 8B. Ultra-light models (4B, 1.7B, 0.6B) are perfect for embedded or offline AI applications.

Recommendations by user profile

The diversity of the Qwen 3 lineup makes it easy to find a model that fits your exact setup and workflow. Whether you’re a developer, a researcher, or an AI enthusiast, your best choice depends on your GPU power and how you intend to use the model.

For demanding users: GPUs with 32 GB or more

If you have a RTX 5090, A100, or H100, you can leverage the high-end models. The top option for local inference is Qwen3-30B-A3B (MoE). It combines high throughput, efficient memory usage (~19 GB in Q4), and excellent accuracy for general reasoning.

Best suited for:

- advanced AI assistants,

- multi-agent systems,

- RAG workflows that require quick contextual responses.

The Qwen3-32B Dense, while heavier, is ideal if your priority is logical precision or scientific code validation.

Best suited for:

- complex reasoning,

- math-heavy computations,

- data validation and research.

According to LLM-Stats, the Dense version remains ahead on reasoning benchmarks but trades off raw generation speed.

For professional workstations and AI developers

With a RTX 4090 (24 GB) or equivalent setup, two models stand out:

- Qwen3-14B, perfect for enterprise servers, internal chatbots, and code assistants.

- Qwen3-30B-A3B (quantized), if you can allocate enough VRAM or use offloading through vLLM.

The 14B provides full multilingual reasoning and robust text consistency while staying power-efficient. It’s a strong choice for corporate AI tools, support bots, or developer agents.

Also read : Install vLLM with Docker Compose on Linux (compatible with Windows WSL2)

For creators, tinkerers and independent researchers

If you’re running a GPU with 8–16 GB, your best picks are:

- Qwen3-8B for local experimentation, creative text generation, and assistant development.

- Qwen3-4B for lightweight testing and offline integration.

The Qwen3-8B offers a perfect balance between responsiveness and linguistic fluency. It’s fast enough for real-time applications in LM Studio or Ollama, and versatile enough for scripting, coding, or writing assistants. According to Hugging Face, it supports all 119 languages and performs smoothly on 8 GB GPUs.

For mobile and embedded environments

The Qwen3-1.7B and 0.6B models stand out for ultra-low-power AI use. They can run fully offline and integrate easily into:

- mobile or desktop apps,

- edge AI hardware (like Raspberry Pi or Jetson Nano),

- voice or command interfaces in IoT systems.

While limited in reasoning depth, they’re ideal for classification, text parsing, and command generation, enabling small devices to perform real-time AI tasks locally.

For cloud and large-scale research

The Qwen3-235B-A22B is reserved for enterprise-scale or academic infrastructures. It shines in massive RAG pipelines, distributed fine-tuning, and multimodal reasoning with Qwen3-VL. As reported by TechCrunch, its efficiency is comparable to GPT-4, while remaining open-weight and customizable.

Summary table

| User profile | Recommended model | Key advantages | Ideal environment |

|---|---|---|---|

| GPU 32 GB and above | Qwen3-30B-A3B | High speed, efficient MoE, RAG-ready | RTX 5090, H100 systems |

| AI workstation (24 GB) | Qwen3-14B | Stable, accurate, professional-grade | RTX 4090 |

| GPU 8–16 GB | Qwen3-8B | Fast, multilingual, lightweight | PC, laptop |

| Mini PC / Edge devices | Qwen3-4B / 1.7B | Very low latency, CPU-friendly | Laptop, IoT hardware |

| Cloud / Cluster setups | Qwen3-235B-A22B | Maximum performance, deep reasoning | Multi-GPU servers |

These recommendations cover every hardware tier, from home PCs to data centers. The modular design of Qwen 3 allows developers to use the same framework across different scales, switching between models without changing the overall pipeline.

FAQ – Common questions about Qwen 3 models

This section compiles the most frequent questions from the community about Qwen 3 models, from setup to performance. The answers are based on user feedback from Hugging Face, Reddit /r/LocalLLaMA, and the official QwenLM GitHub documentation.

What is the difference between Dense and MoE models?

A Dense model activates all its parameters for every generated token. This ensures maximum precision and coherence, but it’s VRAM-heavy and slower. A MoE (Mixture-of-Experts) model activates only a subset of experts (usually 8 out of 128) at each step, reducing memory usage and improving speed.

👉 Example: Qwen3-30B-A3B activates only 3 billion parameters per token, compared to 32 billion for the Qwen3-32B Dense model. As Kaitchup Substack shows, this makes MoE models 4–6× faster on average with minimal quality loss.

Can I run Qwen3-32B on a 24 GB GPU?

Yes, but only with strong quantization (Q4) and partial CPU offload via vLLM or ExLlama V2. The model requires around 27 GB VRAM, so stable execution needs good cache and swap handling. For smoother real-time use, the Qwen3-30B-A3B is more efficient and responsive.

Which version gives the best logical or mathematical reasoning?

The Qwen3-32B Dense leads on reasoning benchmarks:

- AIME 2025

- Arena Hard

- LiveBench Math

The Qwen3-30B-A3B performs nearly as well in general tasks but is slightly less consistent for step-by-step math or logic chains. See LLM-Stats for detailed comparisons.

Do Qwen 3 models support French and other languages?

Yes. All models were trained on 36 trillion multilingual tokens covering 119 languages and dialects. According to the Qwen 3 arXiv report, this linguistic coverage is among the broadest in the open-source ecosystem, surpassing Qwen 2.5 and rivaling Gemini 1.5. French, English, Chinese, Arabic, and Spanish are all well supported with natural tone and grammar accuracy.

Where can I download Qwen 3 models?

Official repositories include:

Available formats:

- Safetensors (FP16, FP8)

- GGUF (Q4, Q5, Q6, Q8)

- Optimized versions for vLLM and Unsloth

Are Qwen 3 models compatible with Ollama or LM Studio?

Yes. Quantized GGUF versions are fully compatible with LM Studio, Ollama, and KoboldCPP. They work on Windows, macOS, and Linux. Performance depends on quantization type:

- Q4_K_M → best balance between speed and quality

- Q6_K → improved accuracy

- Q8_0 → best for long contexts or translation

Which version should I use for offline or mobile AI?

- Qwen3-4B → ideal for low-end PCs or GPUs (8 GB).

- Qwen3-1.7B → works on laptop or CPU with fast startup.

- Qwen3-0.6B → best for Raspberry Pi, IoT, or command-line tasks.

These lightweight models launch almost instantly, though they’re limited in reasoning depth.

Is Qwen 3 open source?

Yes. All Qwen 3 models are released as open weights under a permissive license similar to Qwen 2.5. You can use them commercially, modify, or fine-tune locally. Weights are available in FP16, INT8, FP8, and Q4 formats, making integration easy into any AI workflow.

Conclusion

The Qwen 3 family represents a new generation of open-source AI models: powerful, scalable, and accessible for every hardware profile. From the high-end Qwen3-235B-A22B built for cloud-scale research to the Qwen3-4B designed for local computing, every model has a clear purpose.

The Qwen3-30B-A3B stands out as the best overall choice for smooth local inference on a 32 GB GPU. It offers the efficiency of a Mixture-of-Experts architecture while keeping solid reasoning ability, making it perfect for AI assistants, agents, and RAG pipelines. For those seeking maximum reasoning precision, the Qwen3-32B Dense remains the top performer in math, logic, and code reliability. Meanwhile, the 14B and 8B models serve as excellent entry points for smaller teams or developers running AI locally on mainstream GPUs.

Finally, the lightweight versions (4B, 1.7B, 0.6B) confirm the modular strength of Qwen 3: enabling low-latency, on-device AI even without a discrete GPU. This full spectrum—from data centers to laptops—positions Qwen 3 as a credible open alternative to proprietary models.

To go further, explore:

- the official QwenLM documentation,

- detailed benchmarks on Hugging Face,

- and community comparisons on LLM-Stats.

Summary table

| Use case | Recommended model | Strengths |

|---|---|---|

| Research / Cloud AI | Qwen3-235B-A22B | Extreme power, long context window |

| High-end local AI | Qwen3-30B-A3B | Fast, efficient MoE architecture |

| Maximum reasoning accuracy | Qwen3-32B Dense | Stable, precise logical reasoning |

| Balanced performance | Qwen3-14B | Great VRAM/performance ratio |

| Mainstream local setup | Qwen3-8B | Lightweight, multilingual, smooth |

| Edge / embedded devices | Qwen3-4B / 1.7B / 0.6B | Low latency, CPU-compatible |

In 2025, Qwen 3 confirms the maturity of open-source AI. It delivers the performance, transparency, and adaptability modern users need, from developers to research labs. Whether you’re building a cloud-scale AI agent or a local assistant on a laptop, there’s now a Qwen 3 model tailored to your setup, combining open innovation with real-world efficiency.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!