Top 10 trending models on Hugging Face in September 2025

September 2025 is yet another milestone for open-source artificial intelligence. On Hugging Face, the go-to platform for researchers, developers, and AI enthusiasts, some models clearly stand out for their popularity, measured through downloads, frequent updates, and the number of likes.

This article tours the top 10 trending models on Hugging Face in September 2025, a ranking that reflects current needs in Large Language Models (LLM), multimodal models (text, image, audio, video), and specialized tools such as translation models, image generation, or semantic search.

Why track trending models on Hugging Face?

Hugging Face has become much more than a simple AI model repository. It’s a true barometer of innovation and usage in artificial intelligence. Popular AI models help you understand which architectures, techniques, and use cases dominate the market. Tracking the most downloaded and liked models also helps you anticipate future trends, whether it’s an embedding model for semantic search, a text-to-image diffusion model with high resolution, or an LLM optimized for code and long-context reasoning.

For companies and indie developers alike, choosing a trending model on Hugging Face means accessing technology already validated by a large community, ensuring better documentation, more feedback, and performance tested at scale.

methodology and selection criteria for trending ai models

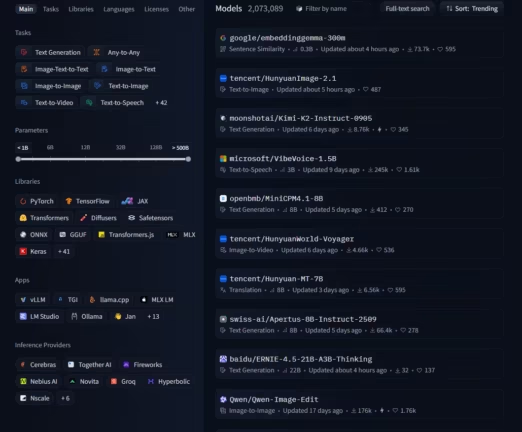

This top-10 ranking of trending models on Hugging Face in September 2025 relies on several objective criteria:

- Number of downloads: a direct indicator of community adoption.

- Number of likes: a measure of user satisfaction and trust.

- Last update date: recent models are often more performant and better aligned with current needs.

The selection spans multiple categories: open-source LLMs, multilingual translation models, image generation and editing models, audio models for text-to-speech, as well as models specialized in search and semantic similarity. Combining these criteria, the article offers a global view of AI trends on Hugging Face in 2025, useful for researchers and professionals integrating AI into their products.

| Model | Parameters | Downloads | Likes |

|---|---|---|---|

| google/embeddinggemma-300m | 0.3B | 73.7k | 595 |

| tencent/HunyuanImage-2.1 | – | 487 | – |

| moonshotai/Kimi-K2-Instruct-0905 | – | 8.76k | 345 |

| microsoft/VibeVoice-1.5B | 3B | 245k | 1.61k |

| openbmb/MiniCPM4.1-8B | 8B | 412 | 270 |

| tencent/HunyuanWorld-Voyager | – | 4.66k | 536 |

| tencent/Hunyuan-MT-7B | 8B | 6.56k | 595 |

| swiss-ai/Apertus-8B-Instruct-2509 | 8B | 66.4k | 278 |

| baidu/ERNIE-4.5-21B-A3B-Thinking | 22B | 32 | 137 |

| Qwen/Qwen-Image-Edit | – | 176k | 1.76k |

1. google/embeddinggemma-300m: a next-generation multilingual embedding model

Among the most popular AI models on Hugging Face in September 2025, google/embeddinggemma-300m holds a prime spot. Developed by Google DeepMind, this lightweight 300-million-parameter model is an ideal solution for semantic similarity, text classification, and document clustering.

The main strength of EmbeddingGemma lies in its multilingual training covering over 100 languages, making it a powerful tool for search and information retrieval in multilingual environments. As Google’s official documentation highlights, it’s designed to work on cloud infrastructure as well as on resource-constrained local machines (PCs, laptops, even smartphones).

Technically, it produces embedding vectors of dimension 768 (with possible reduction to 512, 256, or 128 thanks to Matryoshka Representation Learning). This makes it particularly suited to modern use cases:

- Enterprise internal search engines.

- Content recommendation on digital platforms.

- Text similarity analysis for duplicate or anomaly detection.

- Retrieval-Augmented Generation (RAG) combined with LLMs.

In September 2025, the model’s success on Hugging Face is explained by its small footprint, multilingual performance, and seamless integration with Sentence Transformers, which eases community adoption.

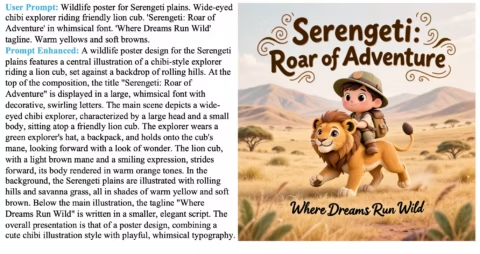

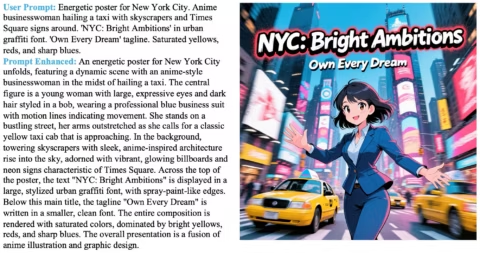

2. tencent/HunyuanImage-2.1: high-fidelity text-to-image at 2k resolution

The second standout model is tencent/HunyuanImage-2.1, a text-to-image diffusion model notable for generating ultra-high-resolution images (2048×2048) while maintaining strong text-image alignment.

Released in early September 2025, this model combines several technical innovations:

- Prompt Enhancer that automatically enriches textual instructions.

- Refiner Model to sharpen details and improve clarity.

- Reinforcement Learning from Human Feedback (RLHF) applied to optimize aesthetics and accuracy.

Benchmarks like the Structured Semantic Alignment Evaluation (SSAE) show that HunyuanImage-2.1 competes with top closed models such as GPT-Image and outperforms several open-source models like Qwen-Image.

Use cases include:

- Art creation and graphic design.

- Marketing and advertising illustration.

- Creative content production for social media.

- Rapid prototyping for film, animation, and video games.

With this model, Tencent confirms its position as a key player in multimodal models, and its massive adoption on Hugging Face in September 2025 reflects rising demand for image-focused generative AI.

3. moonshotai/Kimi-K2-Instruct-0905: a giant for reasoning and code

The model moonshotai/Kimi-K2-Instruct-0905 is among the trending LLMs on Hugging Face in September 2025. Developed by Moonshot AI, it embodies a new generation of Mixture-of-Experts (MoE) with a staggering 1 trillion total parameters, of which 32B are activated per token.

Its strength rests on three pillars:

- Extended 256k-token context, making it one of the best models for handling complex projects and long documents.

- Outstanding performance on code benchmarks like SWE-Bench, where it rivals giants such as Claude Opus 4 or DeepSeek V3.1.

- Advanced tool-calling capabilities, making it particularly useful in agentic AI environments.

In practice, Kimi-K2-Instruct-0905 suits developers working on:

- Code generation and debugging.

- Smart conversational agents.

- Projects requiring structured long-form reasoning such as scientific research.

As of September 2025, this model is favored by developer communities for its raw power as well as its compatibility with vLLM, SGLang, and TensorRT-LLM, streamlining production deployment.

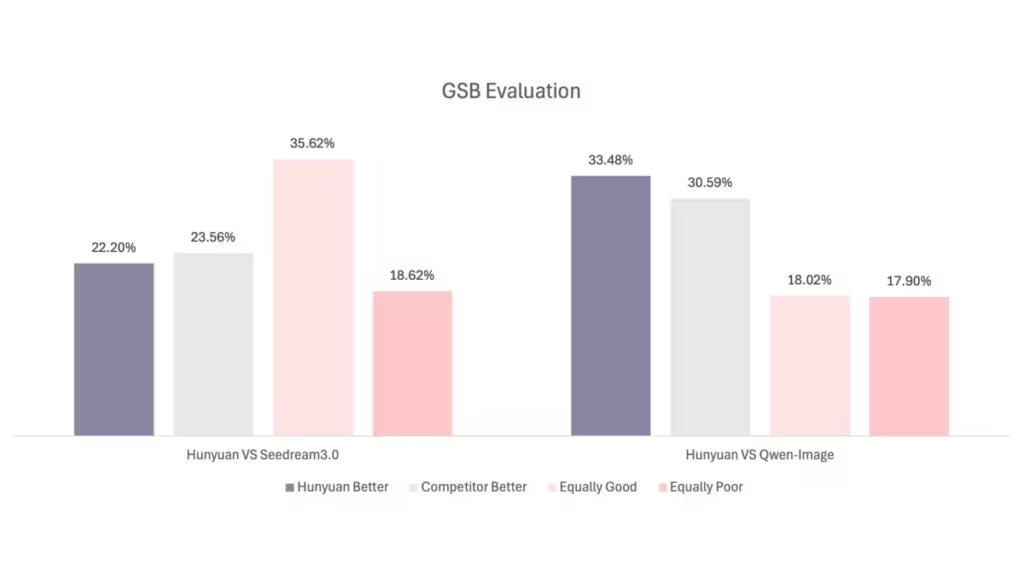

4. microsoft/VibeVoice-1.5B: the future of expressive text-to-speech

Microsoft—already present with Copilot and Azure AI—takes a step forward with VibeVoice-1.5B, a Text-to-Speech (TTS) model that stands out from classic systems by generating natural, expressive audio conversations close to human experience. Note that a VibeVoice-7B version is also available.

This model is based on an innovative architecture combining:

- Acoustic and semantic tokenizers preserving audio quality while reducing compute cost.

- A next-token diffusion framework improving fluency and voice naturalness.

- Multi-speaker management, able to produce up to 90 minutes of audio with 4 distinct voices.

However, Microsoft warns about misuse risks, especially voice deepfakes. To address this, VibeVoice-1.5B embeds two safeguards:

- An invisible watermark in generated audio.

- An audible message automatically inserted in every file: “This segment was generated by AI”.

Hugging Face shows rapid adoption among podcasters, audiobook creators, and human-computer interaction researchers. It’s a decisive step toward immersive artificial conversations. As of now the model is limited to English and Chinese; for a broader choice see this guide to the best open-source TTS models.

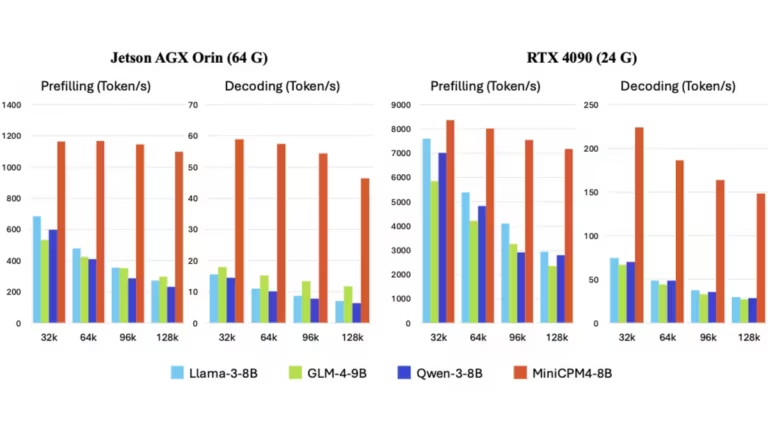

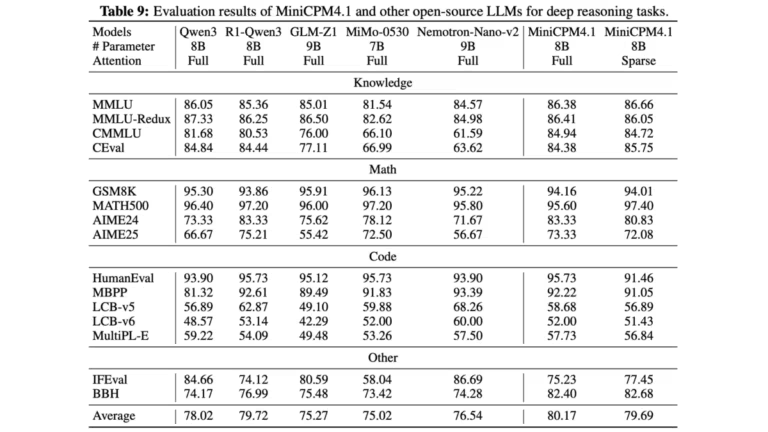

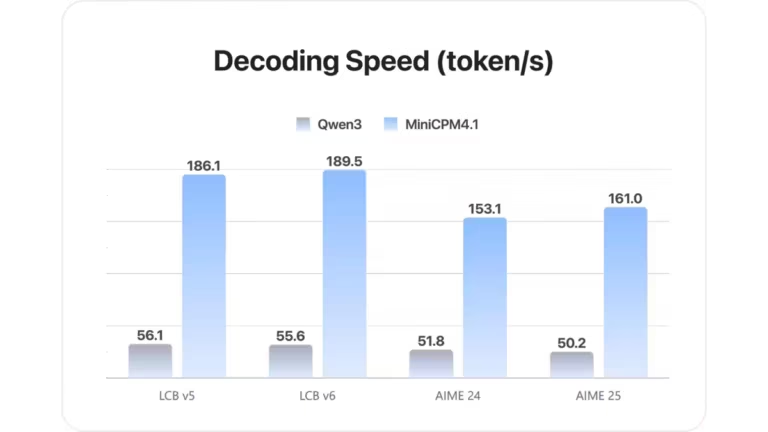

5. openbmb/MiniCPM4.1-8B: an llm optimized for edge devices

The model openbmb/MiniCPM4.1-8B illustrates another strong trend on Hugging Face: the quest for efficiency on edge devices and resource-constrained environments. Unlike heavyweights like Kimi-K2 or ERNIE, this model focuses on cost-efficiency.

Key innovations include:

- InfLLM v2, a trainable sparse attention that drastically reduces long-sequence cost.

- BitCPM, a ternary quantization cutting parameter size by 90%.

- Hybrid reasoning mode, switching between deep reasoning and fast generation as needed.

- Long context up to 65k tokens, extendable to 128k with RoPE scaling.

In concrete terms, MiniCPM4.1-8B is used for:

- Mobile AI applications.

- Embedded projects (robots, voice assistants).

- Hybrid cloud-edge environments.

With native support for CUDA, SGLang, and vLLM, it appeals to developers seeking a balance between power and lightness. Its rise in popularity in September 2025 shows that the AI race isn’t limited to supercomputers—it’s also about accessible, embedded AI.

6. tencent/HunyuanWorld-Voyager: 3d-consistent video from a single image

With tencent/HunyuanWorld-Voyager, we enter another space: 3D-consistent video generation. This model relies on an innovative video diffusion framework capable of transforming a single image into a 3D video sequence while following a user-defined camera path.

Key features include:

- Joint generation of RGB and depth videos, simplifying 3D reconstruction.

- Long-term scene consistency, ensuring realism despite changing angles.

- Real-world applications for virtual reality (VR), video games, and immersive cinema.

As shown in the associated scientific publication (Huang et al., 2025), Voyager paves the way for a new generation of interactive, explorable content—a strong trend on Hugging Face as multimodal models gain visibility.

7. tencent/Hunyuan-MT-7B: state-of-the-art multilingual translation

The model tencent/Hunyuan-MT-7B is a standout in machine translation. Supporting 33 languages (including minority Chinese languages), it targets both the general public and academic/industrial projects requiring reliable, fluent translation.

What sets it apart:

- An architecture optimized for cross-lingual pretraining (CPT) and supervised fine-tuning (SFT).

- A companion model, Hunyuan-MT-Chimera-7B, combining multiple translation outputs for enriched results.

- A first-place finish at WMT25 in 30 out of 31 categories.

This success stems from its blend of linguistic quality, multilingual robustness, and performance on languages rarely covered by major LLMs. On Hugging Face, Hunyuan-MT-7B appeals to developers, researchers, and translators seeking an open-source alternative to closed services like DeepL or Google Translate.

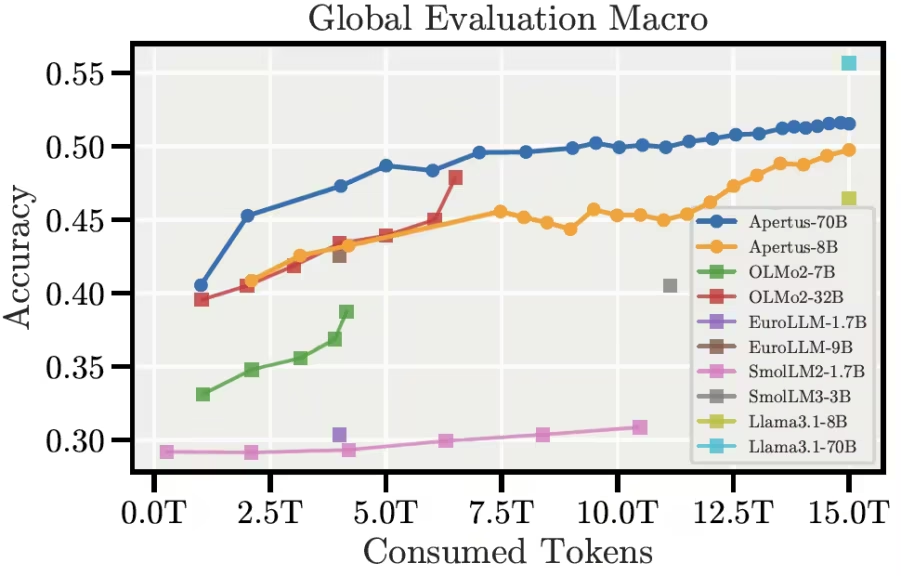

8. swiss-ai/Apertus-8B-Instruct-2509: swiss transparency for trustworthy ai

Launched by the Swiss National AI Institute (SNAI), Apertus-8B-Instruct-2509 embodies a different approach to AI: radical openness and transparency. Unlike many closed models, Apertus provides:

- Open-source weights.

- Full training recipes.

- A dataset built with opt-out compliance and European regulations in mind.

With 8 billion parameters, this multilingual model supports over 1,800 languages—a first in the field. Benchmarks show it competes with LLaMA 3.1 and Qwen 2.5 while ensuring an ethical, responsible approach.

Use cases include:

- Teaching and academic research.

- Open-source projects requiring full transparency.

- Institutions deploying AI in compliance with the EU AI Act.

Its popularity surge on Hugging Face in September 2025 illustrates a growing demand for trust and control in generative AI.

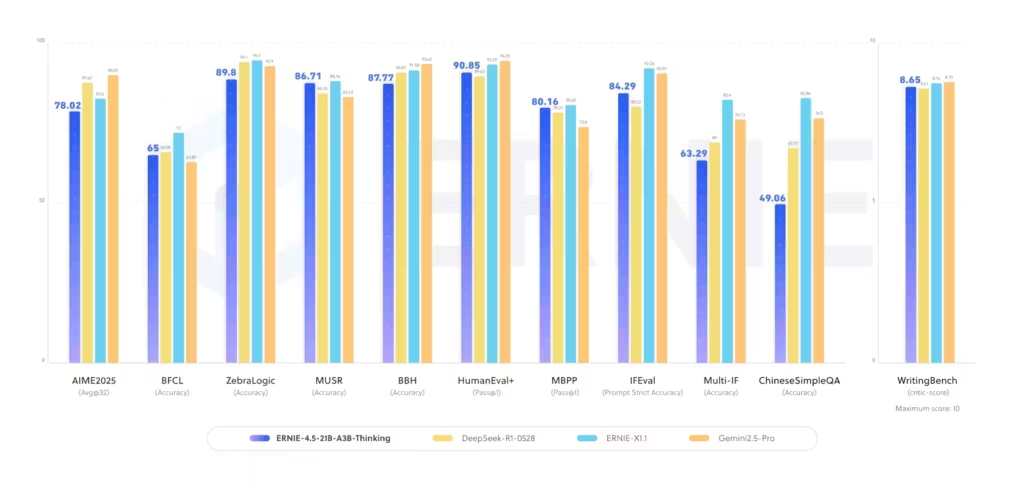

9. baidu/ERNIE-4.5-21B-A3B-Thinking: power for complex reasoning

Baidu advances with ERNIE-4.5-21B-A3B-Thinking, a Mixture-of-Experts model designed for intensive reasoning. With 21B parameters (3B activated) and an extended context of 128k tokens, it positions itself as a direct competitor to DeepSeek and OpenAI.

Highlights:

- Excellent results on logical, mathematical, and scientific reasoning.

- Built-in tool calling for agentic AI usage.

- Native support for FastDeploy and vLLM, easing production deployment.

As Baidu’s documentation notes, the model is intended for scenarios where reasoning quality trumps raw speed. This explains adoption across education, advanced research, strategic consulting, and scientific assistance.

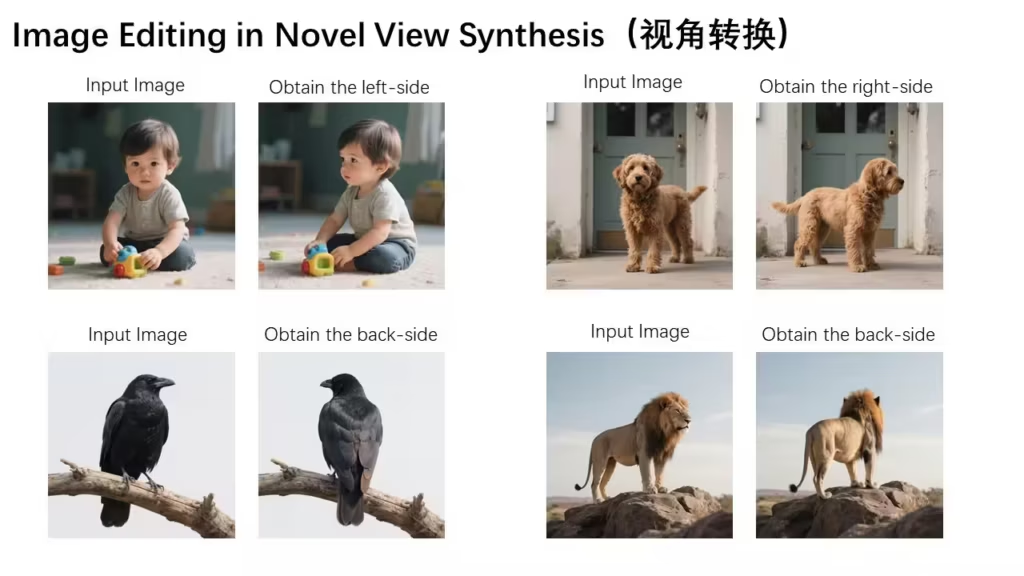

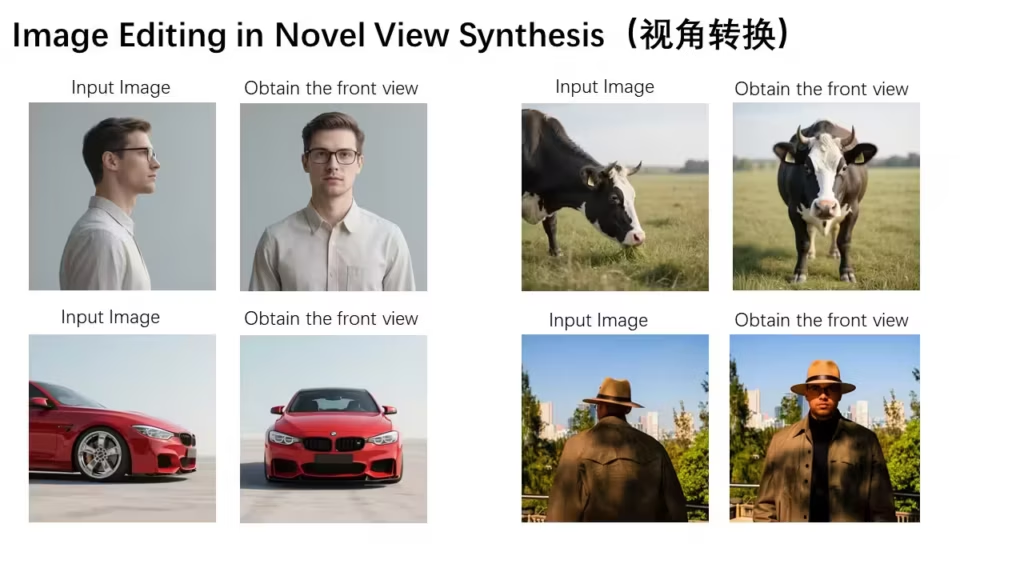

10. Qwen/Qwen-Image-Edit: next-generation image editing

To close the ranking, we can’t ignore Qwen/Qwen-Image-Edit, a model specialized in image editing. Derived from Qwen-Image (20B parameters), it brings unique innovations to image editing.

Key strengths:

- Accurate text editing inside images, including Chinese and English.

- Appearance edits (add/remove objects, refine visual details).

- Semantic transformations (object rotation, style transfer, IP creation).

As Qwen’s official demos show, you can turn a simple poster into a corrected, translated, or stylized version in seconds. Applications are vast: advertising, graphic design, multimedia content creation.

Its success on Hugging Face in September 2025 reflects a broader trend: AI is no longer limited to generation, it edits and enhances existing content, opening the door to fully AI-assisted creative workflows. For easier access to this type of model, consider using ComfyUI.

what these models reveal about the future of ai

Looking at this ranking of the top 10 most popular AI models on Hugging Face in September 2025, a clear pattern emerges: AI is becoming increasingly multimodal and specialized. Where early LLMs focused solely on text, today’s models span a wider spectrum:

- Text: next-gen LLMs (Kimi-K2, MiniCPM4.1, ERNIE).

- Image: 2K image generation and editing (HunyuanImage-2.1, Qwen-Image-Edit).

- Video: coherent 3D sequences (HunyuanWorld-Voyager).

- Audio: expressive, natural voices (VibeVoice-1.5B).

- Translation: high-performing multilingual models (Hunyuan-MT-7B).

- Embeddings: semantic search and classification (EmbeddingGemma).

In other words, AI no longer just writes—it can speak, see, hear, and build interactive worlds. This foreshadows a future where multimodal AI agents fluidly orchestrate specialized models across different media.

This diversity also mirrors competition between tech giants (Google, Microsoft, Tencent, Baidu, Alibaba) and open-source initiatives (OpenBMB, Swiss AI). The former bet on brute force, versatility, and performance; the latter on openness, specialization, efficiency, and transparency.

opportunities and limits for researchers and enterprises

These trending models offer many opportunities:

- Greater accessibility: models like MiniCPM4.1 or EmbeddingGemma are designed to run on regular PCs, opening advanced AI to more users.

- Unprecedented performance: ERNIE-4.5 or Kimi-K2 show that complex reasoning is now accessible via open-source models.

- Creative innovation: Qwen-Image-Edit and HunyuanImage-2.1 expand the possibilities of graphic and artistic creation.

But there are also limitations:

- Resource consumption: some models (like HunyuanImage-2.1 or ERNIE-4.5) require high-end GPUs (36–80 GB VRAM).

- Abuse risks: VibeVoice raises concerns about voice deepfakes; Qwen-Image-Edit about visual manipulation.

- Regulatory oversight: with the gradual rollout of the EU AI Act, using these models will require new transparency and safety obligations.

So while these models represent the future of open-source AI, they also demand a responsible, thoughtful approach—both for researchers and for enterprises aiming to integrate them into real-world solutions.

faq – trending ai models on hugging face in september 2025

What are the most downloaded models on Hugging Face in 2025?

In September 2025, the most downloaded models include Google’s EmbeddingGemma, Tencent’s HunyuanImage-2.1, and Microsoft’s VibeVoice, each in a different category (embeddings, image, audio).

What’s the difference between an LLM, a TTS model, and a diffusion model?

An LLM (Large Language Model) generates or understands text. A TTS (Text-to-Speech) model converts text to voice. A diffusion model generates images or videos from noise guided by a textual prompt.

How can I use Hugging Face models for free?

Most are accessible via the Hugging Face API or downloadable for local use. Some require powerful GPUs, but quantized versions enable use on PCs.

Which AI models are best for code and development?

Moonshot AI’s Kimi-K2-Instruct-0905 and OpenBMB’s MiniCPM4.1-8B are currently among the top performers for software development and long-context reasoning.

Which image models are the strongest right now?

HunyuanImage-2.1 (2K generation) and Qwen-Image-Edit (editing and transformation) dominate image generation and image editing.

Can these models be deployed locally on a regular PC?

Yes, but only the lighter ones like EmbeddingGemma (300M parameters) or MiniCPM4.1 (optimized 8B). Massive models like ERNIE-4.5 (21B) require professional hardware.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!