Unsloth Dynamic 2.0 GGUFs: the new benchmark for LLM quantization in 2025

Since April 2025, the announcement of Unsloth Dynamic 2.0 GGUFs marked a new step in the field of large language model quantization. This approach, presented as a major evolution from traditional quants, promises to reconcile two goals that were hard to achieve together until now: reducing model size while preserving maximum accuracy.

Now, in September 2025, the conclusion is clear: Dynamic 2.0 has become a de facto standard in the open source artificial intelligence ecosystem. It is widely adopted by independent developers as well as large communities such as Llama.cpp, Ollama, and Open WebUI. Unsloth’s collaboration with companies like Meta, Microsoft, Mistral, Google, and Qwen confirms the relevance of this technology.

A major evolution over traditional quants

According to the official Unsloth documentation, the transition to Dynamic 2.0 relies on a complete overhaul of layer selection for quantization. Unlike traditional methods such as imatrix, which apply a uniform strategy, Dynamic 2.0 adjusts quantization layer by layer, following a scheme specific to each model.

This flexibility results in:

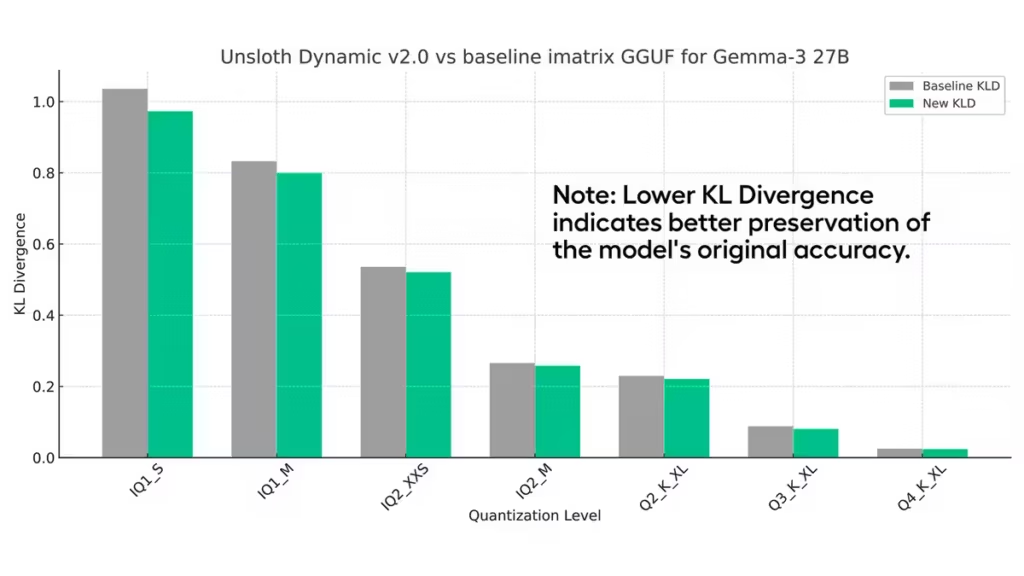

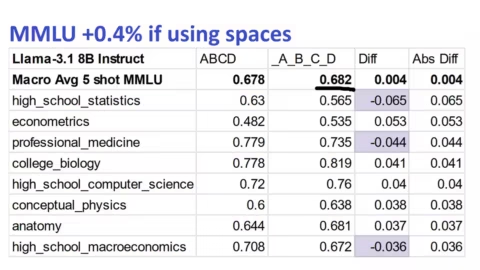

- a significant reduction in KL Divergence (KLD), a reliable metric to measure how close a model remains to its full-precision version, as explained in the scientific publication Accuracy is Not All You Need;

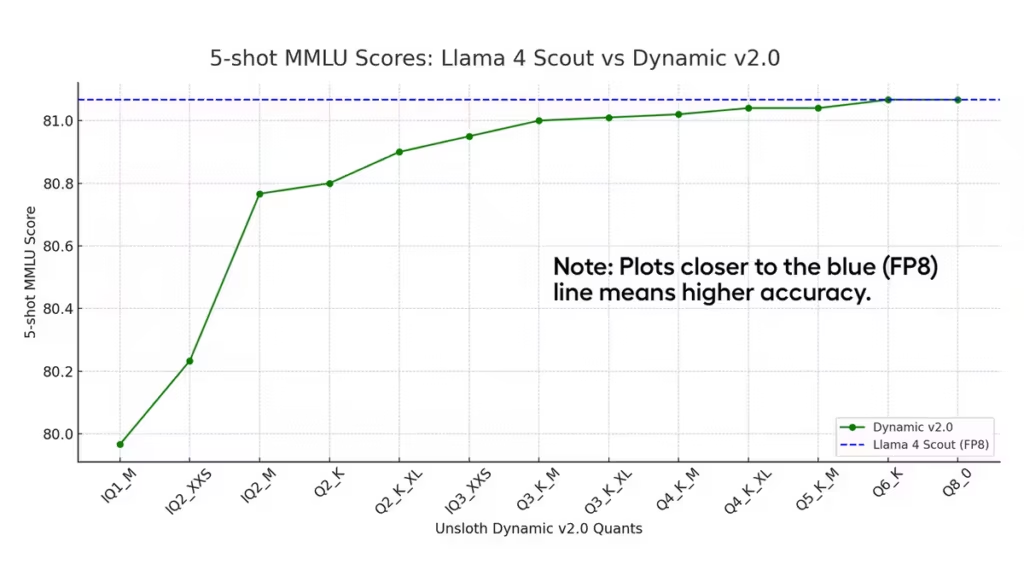

- MMLU (5-shot) performance close to or even better than some QAT (Quantization Aware Training) versions, while saving several gigabytes of disk space;

- better adaptability to heterogeneous models, whether MoEs (Mixture of Experts) like DeepSeek, or dense models like Gemma 3 and Llama 4.

Benchmarks confirm the gains

Numbers published in April 2025 show that on Gemma 3 (27B), Dynamic 2.0 quantization in Q4_K_XL reached 71.47% on MMLU, compared to 70.64% for Google’s official QAT version, while being 2 GB lighter (Unsloth, April 2025).

| Metric | 1B | 4B | 12B | 27B |

|---|---|---|---|---|

| MMLU 5 shot | 26.12% | 55.13% | 67.07% (67.15% BF16) | 70.64% (71.5% BF16) |

| Disk space | 0.93 GB | 2.94 GB | 7.52 GB | 16.05 GB |

| Efficiency | 1.20 | 10.26 | 5.59 | 2.84 |

Another striking example: on September 10, 2025, the Unsloth team announced that its Dynamic 3-bit DeepSeek V3.1 GGUF achieved 75.6% on Aider Polyglot, surpassing several full-precision models considered State of the Art. These results confirm that the approach is not just a tradeoff between size and accuracy but can actually outperform more computationally expensive solutions.

Rapid adoption in the open source ecosystem

Another factor that accelerated the adoption of Dynamic 2.0 is Unsloth’s commitment to fixing critical bugs in major models. As highlighted in the September 10 update, the team worked directly with Meta (Llama 4), Google (Gemma 1–3), Microsoft (Phi-3/4), Mistral (DevStral), and Qwen3 to improve stability and accuracy.

This proactive approach won over the community. On Reddit (r/LocalLLaMA), user feedback emphasizes both the performance gains and the responsiveness of the team. Some practical issues remain (CPU overload with llama.cpp, compatibility with certain ARM formats), but overall the consensus is positive.

Models already available in Dynamic 2.0

As of September 16, 2025, many models are already available in Dynamic 2.0 Quants:

- Qwen 3 Coder, Qwen 3 30B, Qwen 3 235B

- Kimi K2

- Llama 4

- Gemma 3 (12B and 27B)

- DevStral

- Phi 4

- DeepSeek

- More models are available at this link

This wide availability shows the intent to cover a variety of use cases: coding (Qwen 3 Coder), consumer-facing conversational assistants (Llama 4, Gemma 3), and experimental architectures (DeepSeek).

Download statistics on Hugging Face clearly show that Unsloth Dynamic 2.0 Quants are experiencing massive adoption, often surpassing QAT (Quantization Aware Training) or full precision versions. Models like Qwen3-30B-A3B-GGUF exceed 399,000 downloads, while specialized versions such as Qwen3-Coder-30B reach 167,000 downloads. By comparison, QAT versions of Gemma 3 (12B and 27B) remain under 32,000 downloads. This gap suggests that the community clearly prefers Dynamic 2.0 quants, considered lighter and more accessible without sacrificing accuracy.

Usage confirms this trend: Dynamic 2.0 quants dominate not only in download volume but also across diverse audiences. “Coder” models are particularly popular with developers, while generalist variants like GPT-OSS or Gemma 3 attract users looking for open source alternatives to proprietary models. Full precision versions, heavier and more resource-hungry, are less downloaded and mostly confined to research environments or official benchmarks. This dynamic highlights the real-world adoption of Unsloth Dynamic 2.0 quants as the de facto standard for local inference and rapid prototyping.

Remaining challenges

Despite these advances, several challenges remain:

- ARM and Apple Silicon compatibility: although formats like Q4_NL and Q5.1 are gradually being integrated, CPU optimization on ARM remains limited.

- Multi-benchmark evaluation: MMLU is still the main metric, but more comparisons on other tasks (translation, reasoning, code) are needed to confirm robustness.

Conclusion: a standard that could redefine quantization

In less than six months, Unsloth Dynamic 2.0 GGUFs have established themselves as the reference for LLM quantization in 2025. Their performance, combined with rapid adoption in the open source ecosystem, makes them an essential solution for anyone looking to run powerful models on consumer-grade machines or resource-constrained environments.

To measure the importance of Dynamic 2.0, it is necessary to place this approach within the broader continuity of Unsloth’s work.

- First generation: Unsloth Dynamic (v1)

In November 2024, Unsloth introduced its first Dynamic Quants, including 4-bit versions (Q4_K, Q4_XL, etc.). These quants already delivered notable performance gains over traditional methods (imatrix, GPTQ), but their usage was limited. They were mainly effective for MoE (Mixture of Experts) architectures, such as the first DeepSeek models. This generation spread under the label “4-bit Dynamic Quants“, which created some confusion since it was in fact the first generation. - April 2025: Unsloth Dynamic 2.0

With the official release of Dynamic 2.0, the method scaled up. Layer selection for quantization became intelligent and model-specific, enabling coverage of both MoE and dense models (Llama 4, Gemma 3, Qwen 3). This generation set new MMLU benchmarks and drastically reduced KL Divergence, becoming the reference for the open source community.

In summary, the 4-bit Dynamic Quants belong to the first generation and were mainly proof of concept. Dynamic 2.0 lays the solid foundation of a universal and reliable method. A new Dynamic 3.0 version may follow, but no official announcement has been made so far.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!