Unsloth Dynamic 4-bit vs FP16/BF16: is dynamic LLM quantization a viable solution?

Since the rise of open source models like LLaMA, Mistral, Qwen and DeepSeek, the size and complexity of large language models, LLMs, have kept growing. Their growth creates a major challenge, memory efficiency and compute cost. Running a compressed multi-billion parameter model in full precision, FP16 or BF16, requires high-end GPUs with 48 to 80 GB of VRAM, which limits access for most developers.

Dynamic quantization answers this problem. While early LLM quantization methods caused significant accuracy loss, newer approaches like GPTQ, AWQ and Unsloth Dynamic 4-bit enable a drastic LLM size reduction while preserving accuracy.

Unsloth provides an innovative 4-bit dynamic quantization (Unsloth Dynamic v1) that combines inference optimization with minimal accuracy loss. According to the official Unsloth documentation, this selective approach quarters model size while keeping 98 to 99 percent of original performance.

The question is no longer if AI model compression helps, but which method to choose between FP16/BF16 for fidelity or quantization for accessibility. This article offers a deep comparison of Unsloth quantization vs FP16 BF16 to identify the best choice for your needs.

Also read : Unsloth Dynamic 2.0 GGUFs: the new benchmark for LLM quantization in 2025

What is quantization in LLMs?

To understand the value of Unsloth dynamic quantization versus FP16/BF16, start with the concept of quantization. In Large Language Models, LLMs, quantization means reducing the numeric precision of model weights. Instead of storing each parameter in 16 or 32 bits, you compress them to 8 bits, 4 bits, even 2 bits. This is why model names carry flags like K2, K4, K6, K8. A K2 model has stronger accuracy loss, while K8 offers a better balance.

The main goal is twofold, reduce required GPU memory and speed up inference without significant accuracy loss. That makes quantized open source models usable on consumer hardware, notably Nvidia RTX cards or even laptops.

Quantization acts on the numeric representation of weights and activations, optimizing performance for inference and also for LLM fine tuning.

Concrete example, a non quantized 13B model can need over 24 GB of GPU VRAM in FP16, pushing you to a RTX 4090 or 5090, or a Nvidia A100. With Unsloth 4-bit dynamic quantization, it runs on an 8 GB VRAM card while keeping performance close to the original model.

FP16 and BF16 formats, reference precision

FP16, reduced precision standard

FP16, float16, encodes each weight in 16 bits with a reduced mantissa that is still enough for most inference tasks. Its main advantage, it halves GPU memory use versus classic FP32, while keeping stable precision for training.

According to Nvidia, FP16 enabled the first wave of massive large language models, LLM. However, FP16 still costs a lot of GPU VRAM for models over 10 billion parameters.

BF16, optimized numeric stability

BF16, bfloat16, is a FP16 variant that changes bit allocation between mantissa and exponent. The idea is to keep an exponent similar to FP32, which gives better numeric stability, especially in heavy compute phases, deep training, scientific workloads, very sensitive contexts.

As Google Research notes, BF16 is widely used in the TPU ecosystem and is now available on many modern GPUs. In practice, that means fewer rounding errors and more robustness on complex calculations.

FP16/BF16 limits

While these formats guarantee maximum quality, their resource cost remains high:

- A DeepSeek R1 32B in BF16 exceeds 64 GB of GPU VRAM

- Local inference optimization on RTX 5060 or 5070 becomes impractical

- Edge deployment AI requires servers with professional GPUs

| Method | Selectivity | Accuracy | VRAM usage |

|---|---|---|---|

| BitsAndBytes | No | Average | Low |

| AWQ | Block, layer | Good | Medium |

| GPTQ | Block, layer | Very good | Medium |

| Unsloth Dynamic | Per layer | Excellent | Low |

Comparison table, main methods

Unsloth Dynamic 4-bit, quantization optimization

This is where Unsloth 4-bit dynamic quantization comes in, a much lighter alternative that stays close to FP16/BF16 precision. As shown by LearnOpenCV, this method cuts model size and memory by more than half, without significant performance loss on benchmarks like MMLU.

The key innovation in Unsloth is its 4-bit dynamic quantization, an approach that differs from classic methods. Where classic methods apply uniform quantization, every layer gets the same precision reduction, Unsloth uses a selective and adaptive strategy.

| Method | Selectivity | MMLU benchmark | GPU VRAM |

|---|---|---|---|

| BitsandBytes 4-bit | No | Average | Low |

| GPTQ vs AWQ vs Unsloth – AWQ | Block, layer | Good | Medium |

| GPTQ vs AWQ vs Unsloth – GPTQ | Block, layer | Very good | Medium |

| Unsloth Dynamic 4-bit | Per layer | Excellent | Very low |

Selective, dynamic calibration

Unsloth does not reduce all weights uniformly, it analyzes layer sensitivity using calibration datasets then decides which layers to keep in FP16 to avoid critical loss. This preserves layers that are essential for contextual reasoning.

Massive AI memory efficiency gains

This quantization method drastically reduces quantized model size. A DeepSeek R1 14B goes from about 28 GB in BF16 to 14 GB in Unsloth, a 50 percent saving, Unsloth source.

Direct impact on required GPU VRAM:

- 8B → 8 to 10 GB VRAM

- 14B → 14 to 16 GB VRAM

- 32B → 32 to 36 GB VRAM

This LLM size reduction enables inference on a simple RTX 5060 or 5070. As open source LLMs grow, it also lets you run 32B or larger models on workstations or servers with limited resources.

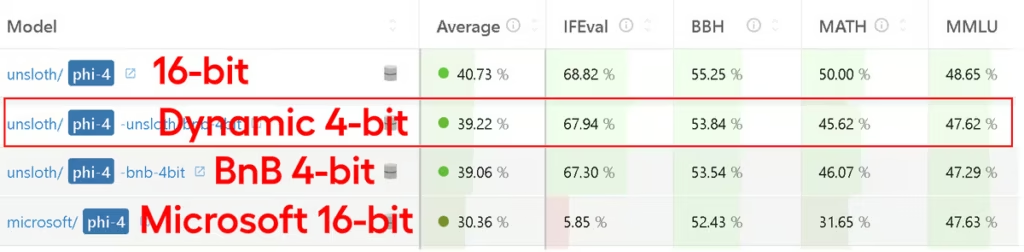

MMLU benchmarks and comparative performance

Evaluation on the MMLU benchmark, Massive Multitask Language Understanding, shows the relevance of dynamic quantization versus classic formats. This test assesses large language models, LLMs across academic and professional tasks.

Per task analysis

While global MMLU means already show Unsloth 4-bit dynamic quantization rivaling FP16/BF16, a finer per task view shows how well this approach preserves original quality. As in results from the Open LLM Leaderboard, accuracy usually lands between 98 percent and 99 percent of the BF16 baseline, which is exceptional for a model compressed by four.

Concrete per task score examples

- MMLU 5-shot, Unsloth 4-bit reaches about 99 percent of FP16/BF16 performance.

- ARC-C, 0-shot, scores stay around 98 to 99 percent of baseline.

- GSM8k, 8-shot math reasoning, Unsloth sometimes slightly exceeds BF16, likely due to better numeric robustness in some operations, Reddit LocalLLaMA source.

- HellaSwag and Winogrande, Unsloth 4-bit stays above 98 percent of the FP16 reference.

- TruthfulQA, one of the rare cases where the gap can reach 2 to 3 points, yet the model remains usable and far better than naive 4-bit quantization.

Why are losses so small?

The reason is simple, Unsloth does not apply uniform quantization. As explained in its technical post, some critical layers, notably attention projections and normalization layers, are kept in FP16, which avoids the fidelity loss seen with methods like GPTQ or BitsandBytes when applied without distinction.

DeepSeek R1 results, accuracy vs performance

| Model | BF16 accuracy | Unsloth Dynamic 4-bit |

|---|---|---|

| DeepSeek R1 8B | 65.20% | 64.85% |

| DeepSeek R1 14B | 68.40% | 67.95% |

| DeepSeek R1 32B | 72.80% | 72.15% |

Loss usually stays under 1 percent, which is remarkable for a quantized AI model that halves or quarters size, as the Unsloth documentation indicates.

Accuracy and GPU memory gains

Alongside this near unchanged accuracy, the AI memory efficiency gains are large:

| Model | BF16 memory | Unsloth quantization |

|---|---|---|

| DeepSeek R1 8B | ~16 GB | ~8 GB |

| DeepSeek R1 14B | ~28 GB | ~14 GB |

| DeepSeek R1 32B | ~64 GB | ~32 GB |

A model that needed a Nvidia A100 becomes runnable on a RTX 5090, which LearnOpenCV confirms by highlighting accessibility for users with consumer GPUs.

Practical performance and compatibility

Inference optimization and speed

Numeric precision reduction lowers data volume and simplifies operations, which makes inference 2 to 5 times faster than FP16/BF16. As Hyperbolic.ai notes, Unsloth couples its dynamic quantization with other optimizations:

- FlashAttention 2 to speed long sequence processing

- Paged optimizers for efficient GPU memory management

- CUDA kernel fusion that reduces redundant calls

Accessible LLM fine tuning

While full FP16/BF16 fine tuning demands a Nvidia A100 with 80 GB VRAM, the Unsloth approach lets you train a 13B model on a single 8 GB GPU using dynamic quantization plus LoRA.

Ollama quantization and tool compatibility

Quantized open source models from Unsloth exported as llama.cpp GGUF work with popular tools:

- llama.cpp for optimized local C++ execution

- Hugging Face Transformers for developers

- Ollama that simplifies installation on Mac and Linux

- vLLM

- LM Studio and ComfyUI for user interfaces

This compatibility enables wide adoption, as the Unsloth documentation notes.

Accuracy trade offs, when Unsloth is not enough

Despite strong results, Unsloth 4-bit dynamic quantization is not magic. Even if it keeps 98 to 99 percent of FP16/BF16 accuracy on most benchmarks, there are cases where FP16 or BF16 is still preferable. As Arxiv explains, limits appear in complex reasoning scenarios, in multimodality, or when handling very long contexts.

Deep reasoning and sensitive tasks

For applications where every decimal matters, such as scientific modeling, complex financial computation, or some medical and pharma tasks, a small accuracy drop can have significant effects. In such cases, BF16 or FP16 remain the standard because they guarantee calculation fidelity and numeric stability, as Google Research reminds for BF16.

Multimodality and specialized models

4-bit quantization also shows limits with multimodal models, text plus image, audio, or video. Signal processing layers, visual or audio, are especially sensitive to precision loss. If some layers are quantized too aggressively, you can see visible errors, poor image description, audio transcription mistakes, or loss of nuance in generated videos.

Long contexts and extended memory

Another case where Unsloth can struggle is inference with very long contexts, hundreds of thousands of tokens. Even if selective quantization reduces the risk, 16-bit precision offers better robustness when handling extended windows without coherence loss.

A balance between accessibility and reliability

In practice, the trade off is simple:

- Unsloth Dynamic 4-bit is ideal for most uses, chatbots, agents, RAG, content generation, non critical academic research.

- FP16/BF16 remain best for ultra sensitive cases where error tolerance is near zero.

The right question is not if quantization is better or worse, but choosing the method that fits the real need, speed and accessibility with Unsloth, absolute precision with FP16/BF16.

Use cases and recommendations

When to choose Unsloth Dynamic 4-bit

Current adoption and use cases

Adoption of Unsloth Dynamic 4-bit mainly sits in open source communities, independent developers and research projects rather than commercial deployments from established companies:

Development and collaborations:

- Unsloth actively maintains the method and publishes reference models, Llama, Gemma, DeepSeek, Qwen, on Hugging Face

- Microsoft collaborated on dynamic quantization of the Phi 4 model, reaching accuracy comparable to full precision models on the Open LLM benchmarks

- The AI community widely uses this dynamic quantization for DeepSeek R1, Llama, Gemma, Qwen and Mistral as shown in various Reddit posts, LinkedIn notes and tutorials.

Typical deployment scenarios:

- Edge Computing AI and constrained environments, IoT, embedded systems

- Consumer GPUs on RTX 4060, 5060, 5070, 5080

- Development, rapid prototyping with optimized inference speed. Thanks to 2 to 5 times faster inference than FP16, you can iterate quickly without heavy infrastructure.

- LLM fine tuning via LoRA on 8 to 12 GB VRAM cards

- Consumer applications, chatbots, content generation, personal assistants

- Benchmarking, low cost inference and experimentation under resource constraints

| Organization | Use of Unsloth Dynamic 4-bit | Source |

|---|---|---|

| Unsloth | Main development, official releases | Official documentation |

| Microsoft | Phip 4 models quantized in collaboration | Open LLM benchmarks |

| AI community | Wide adoption, model benchmarks | LearnOpenCV, Reddit |

Note, no established commercial client has publicly disclosed using Unsloth Dynamic 4-bit in production as of September 2025, adoption is mainly open source and experimental.

When to prefer FP16/BF16

- Scientific and medical research that needs absolute precision

- Large language models, LLMs with sensitive multimodality, text plus image plus audio

- Full training of large models on A100 or H100 GPUs

- Ultra long contexts, 100k+ tokens that need numeric stability

- Sensitive multimodal applications

Synthetic comparison

| Criterion | FP16 | BF16 | Unsloth Dynamic 4-bit |

|---|---|---|---|

| Accuracy | Excellent | Excellent, stability | ~98 to 99 percent on MMLU benchmark |

| GPU VRAM | High, 16 to 32 GB | High | Very low, about 7.5 GB for 12B |

| AI inference speed | Standard | Standard | 2 to 5 times faster |

| Model size | 16 to 32 GB | 16 to 32 GB | 4 to 5 times lighter |

| LLM fine tuning | High end GPU | High end GPU | Accessible on 8 to 12 GB VRAM |

| Compatibility | Wide | Wide, recent TPU, GPU | Ollama quantization, llama.cpp GGUF |

Conclusion and practical tips

The Unsloth vs FP16 BF16 comparison shows that dynamic quantization is no longer a mere compromise, it is a viable alternative to run large language models, LLMs in constrained environments.

Key takeaways

- Unsloth 4-bit dynamic quantization cuts size by 4 to 5 times while keeping 98 to 99 percent accuracy on the MMLU benchmark

- Gains in GPU VRAM and AI inference speed, 2 to 5 times, democratize 27B models on consumer GPUs

- llama.cpp GGUF export ensures compatibility with Ollama quantization and popular tools

- LLM fine tuning becomes more accessible, even on 8 to 12 GB VRAM GPUs

Decision logic

Unsloth quantization fits developers seeking AI memory efficiency on affordable hardware, while FP16/BF16 fit environments where absolute reliability comes first.

The future of quantization methods points to hybrid approaches, most use cases can benefit from 4-bit dynamic quantization, yet some critical applications will still need the precision and performance of 16-bit or even 32-bit.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!