Regulate or Stall? Europe Under Pressure in the Global AI Race

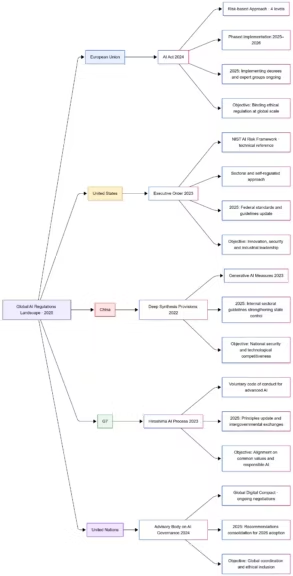

While the United States and China accelerate their artificial intelligence race, the European Union is taking a more cautious approach. Its flagship regulation, the AI Act, faces delayed implementation, caught between innovation, security, and digital sovereignty. Behind this hesitation lies a central question: can Europe regulate AI without sidelining itself on the global stage?


The European AI Act: Ambitions and Reality

A groundbreaking legal framework for artificial intelligence

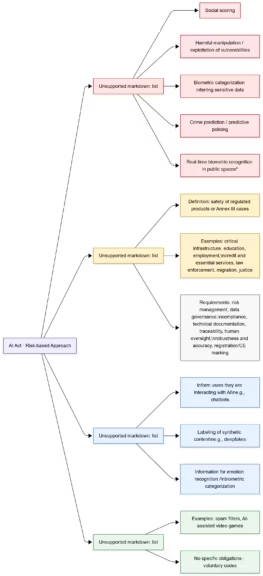

Adopted in 2024, the AI Act is the first comprehensive regulatory framework dedicated to artificial intelligence. Inspired by the GDPR, it classifies AI systems according to their level of risk, covering everything from consumer chatbots to foundation models such as those behind ChatGPT or Claude.

Its goal is clear: to ensure trustworthy and transparent AI that respects fundamental rights. The law requires companies to meet documentation, auditing, and supervision standards that vary depending on the level of identified risk.

Beyond ethics, the EU aims to establish itself as a global leader in AI regulation, just as it did with data protection.


Political ambitions behind a pioneering regulation

The AI Act reflects Brussels’ ambition to assert its technological sovereignty. By setting its own standards, the Union seeks to promote a model based on responsibility, safety, and human rights.

This approach resonates with many researchers and advocates of ethical technology. It positions Europe as a counterweight to the commercially driven approach of the United States and the centralized control of China.

However, its implementation has raised a growing concern: overregulation could slow innovation and widen Europe’s lag in artificial intelligence.

Early technical and legal hurdles

Critics converge on three key points: the text’s complexity, high compliance costs, and legal ambiguity surrounding the definition of “AI systems”.

Compliance requirements could penalize European SMEs and start-ups, which already operate with limited resources. In response, countries like France and Germany advocate a gradual rollout to avoid stifling competitiveness.

A Europe Hesitating Under Global Pressure

The partial delay in enforcement

According to the Financial Times, the European Commission is considering targeted pauses in the AI Act’s enforcement, particularly for so-called “foundation models.” Officially, the goal is to give member states time to adapt.

In practice, however, the delay reflects an economic concern: that European companies could fall behind their American and Chinese counterparts, which operate without comparable regulatory constraints.

This caution reveals Brussels’ central dilemma: how can it protect citizens while preserving Europe’s tech competitiveness?

Heavy lobbying from American tech giants

Major technology companies have launched an intensive lobbying campaign to influence the AI Act. OpenAI, Microsoft, Google, and Anthropic have repeatedly approached EU institutions to soften obligations around transparency and the disclosure of training data.

Their argument is familiar: excessive regulation could slow innovation and deprive Europe of access to the latest AI advancements. Combined with Europe’s dependence on American technologies, these pressures have pushed Brussels to reconsider its timeline.

Balancing innovation and control

Between protection and innovation, Europe is searching for balance. Some Members of the European Parliament call for agile regulation capable of adapting to rapid technological change. Others argue that strict oversight is essential to prevent social and ethical risks.

This internal divide illustrates the dual nature of European regulation: a model of caution for some, a brake on competitiveness for others.

The United States and China: Two Opposite Models, One Goal

The US approach: market-driven regulation

Across the Atlantic, AI oversight relies largely on self-regulation by the private sector. The US government favors a flexible approach centered on public-private cooperation.

Guidelines from the National Institute of Standards and Technology (NIST) and voluntary commitments by Big Tech establish safety principles without binding legal constraints. The result is an environment that fosters rapid AI model growth, driven by a strong culture of experimentation.

This flexibility gives the United States a structural advantage, as its regulatory approach evolves more quickly than Brussels’ legislative process.

China’s centralized control serving competitiveness

In contrast, China relies on a state-controlled regulatory model tightly linked to its industrial strategy. Beijing strictly oversees algorithms while investing heavily in research and infrastructure.

Its compliance rules, particularly regarding generated content, are designed to maintain political control and ensure a stable domestic market. This authoritarian yet efficient system allows China to move quickly in strategic sectors such as robotics, defense, and semiconductors.

Europe caught between caution and marginalization

Faced with these two models, Europe risks falling out of sync. It stands out with an advanced ethical framework, yet lacks the industrial and financial capacity to compete.

While Washington and Beijing invest massively in GPUs, data centers, and AI start-ups, Brussels is mired in consultations and amendments. The risk is clear: regulating without producing.

AI Governance Under Strain

Regulation as a geopolitical instrument

In the global AI race, regulation has become a tool of power. Europe hopes the AI Act will allow it to export its standards worldwide, just as it did with the GDPR.

But this strategy requires industrial weight to influence global players. Without strong infrastructure and European AI champions, regulation may become symbolic rather than strategic.

Diverging interests among EU member states

Internal disagreements slow implementation. While France and Germany advocate a pro-innovation approach, countries like Sweden and the Netherlands emphasize citizen protection and transparency.

These divergences highlight a major political challenge: building a unified European voice in a field where adaptability is key.

Calls for global AI governance

Given the scope of AI challenges, international institutions such as the G7, the United Nations, and the Global Partnership on AI (GPAI) are urging global coordination. The objective is to harmonize standards on safety, transparency, and traceability.

However, without consensus on data ownership, digital sovereignty, or surveillance, global AI governance remains fragmented.

Innovation and Competitiveness: Europe’s Balancing Act

Talent flight and fragile ecosystems

The tightening of regulation worries entrepreneurs. Several European start-ups are already relocating operations to the United States or the United Kingdom, where legal frameworks are more favorable to experimentation.

This trend fuels a technological brain drain, deepening Europe’s dependence on foreign solutions. Without change, the continent risks becoming a consumer of AI rather than a producer.

Europe’s growing dependence on US AI models

Models from OpenAI, Anthropic, and Google (Gemini) are already pervasive across European enterprises. The absence of competitive local alternatives reinforces a structural dependence on the American AI ecosystem, for both models and cloud infrastructure.

This dependency undermines Europe’s technological sovereignty and poses long-term economic risks.


Toward a European model of responsible innovation

In response, the European Commission seeks to rebalance its strategy. Initiatives such as AI Made in Europe and dedicated open-source AI funding aim to support responsible innovation rooted in European values.

The challenge is to combine ethical oversight with technological progress, ensuring security without halting innovation.

What’s Next for Global AI Regulation?

The AI Act as a potential international model

Despite delays, the AI Act still holds global influence. Countries such as Canada, Japan, and Brazil are using it as a blueprint for their own AI laws.

If the EU can prove that balanced regulation can coexist with an innovative economy, it may yet become a reference point in AI governance.

From regulation to global cooperation

The future of AI regulation depends on international cooperation. AI models and their impacts transcend borders. With its normative expertise, Europe has a role to play in shaping a global framework for innovation, safety, and fairness.

AI Between Regulation and Technological Power

Europe stands at a crossroads. If it manages to reconcile AI regulation and innovation, it could define a third way between American deregulation and Chinese state control.

But if caution turns into inertia, the continent may become a mere spectator in the AI revolution. The fate of the AI Act will determine whether Europe regulates to assert its place or merely regulates in slow motion.

Sources and References

Tech Media

- Financial Times, Nov 7, 2025

- Reuters, Nov 7, 2025

- TechCrunch, Nov 6, 2025

- Wired, Nov 4, 2025

- Forbes, Nov 3, 2025

Companies

- Business Wire (press release), Nov 6, 2025

- Anthropic, Nov 6, 2025

- IBM Newsroom, Nov 4, 2025

Institutions

- Euronews, Nov 7, 2025

- Wall Street Journal, Nov 6, 2025

Official sources

- Google Blog, Nov 7, 2025

- Lockheed Martin Newsroom, Nov 6, 2025

- CNBC, Nov 6, 2025
