AI coding agents as IDE extensions or terminal-based agents: what should you choose in 2025?

In 2025, artificial intelligence has become a must-have tool for developers. AI coding agents are no longer gadgets; they are actively reshaping how we write, test, and ship code. But a crucial question remains for anyone who wants to ride this wave: should you prioritize AI agent extensions built into code editors like VS Code, or choose terminal-based AI agents that live in the command line, closer to the system and often more flexible? And can you use both at the same time?

With the power of new GPUs like the RTX 5090 and the rise of open-source models that are strong at code, such as DeepSeek-Coder, Qwen3-30B, Llama 3 70B, and GPT-OSS:20B, it's now feasible to run these agents locally. The benefits are major: privacy, speed, and independence from the cloud.

In this article, we dive deep into the differences between IDE AI extensions and terminal AI agents, compare use cases, advantages, and limits, then help you decide which approach fits your needs in 2025.

What is an AI coding agent extension?

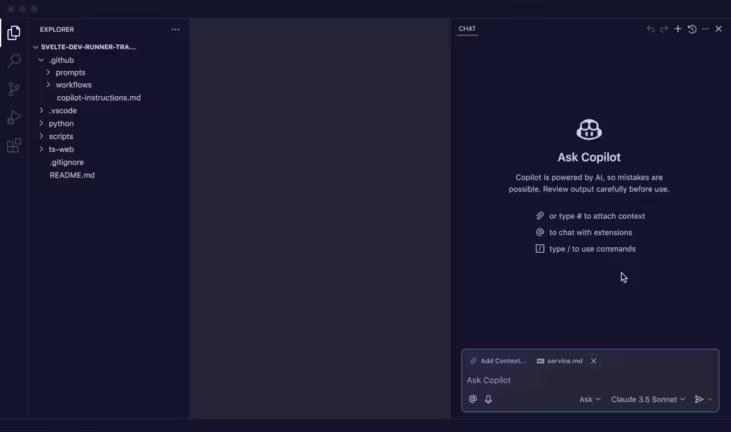

AI coding agent extensions are tools integrated directly into editors like Visual Studio Code or JetBrains. The goal is simple: assist developers in real time without leaving the workspace. They act like a virtual assistant, proposing solutions, explaining code, or generating unit tests.

Among the best known are GitHub Copilot (limited free tier, then $10/month for Pro or $39/month for Pro+) and Tabnine. GitHub Copilot leverages a native integration with VS Code to provide smart autocompletion and even handle more complex workflows like opening pull requests. As the Visual Studio Code blog often highlights, the experience is smooth and designed to boost day-to-day developer productivity.

Other extensions like ChatGPT Code add a chat interface so you can ask questions directly inside the editor.

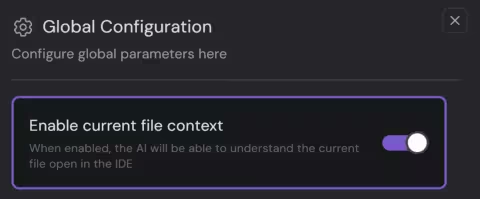

The core advantage of IDE AI extensions is ease of use: install a plugin and instantly tap into an LLM to assist you. Most AI extensions offer a cloud subscription with usage quotas. However, some extensions can connect to an open-source model hosted locally or on your own servers. Open-source models like DeepSeek-Coder, Qwen3, or Code Llama can run locally via tools such as Ollama or LM Studio.
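To make the local option concrete, here is a minimal Python sketch of how an extension or agent might talk to a locally hosted model. It assumes Ollama or LM Studio is running on its default port with its OpenAI-compatible server enabled (`http://localhost:11434/v1` for Ollama, `http://localhost:1234/v1` for LM Studio). The function names and the `deepseek-coder:33b` model tag used below are illustrative choices, not part of either tool.

```python
import json
import urllib.request

# Default local endpoints of each tool's OpenAI-compatible server (assumes default ports).
BASE_URLS = {
    "ollama": "http://localhost:11434/v1",
    "lmstudio": "http://localhost:1234/v1",
}


def build_chat_request(backend: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming chat-completions request for a local model server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        BASE_URLS[backend] + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(backend: str, model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt to the local server and return the assistant's reply text."""
    request = build_chat_request(backend, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # OpenAI-style responses put the generated text in the first choice's message.
    return body["choices"][0]["message"]["content"]
```

For example, `ask_local_model("ollama", "deepseek-coder:33b", "Write a unit test for a slugify() function")` should work once the model has been pulled with `ollama pull deepseek-coder:33b`. Because both tools speak the OpenAI chat-completions format, the same request shape works against a cloud provider by swapping the base URL and adding an API key, which is part of why many extensions can switch between cloud and local models.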

What is a terminal-based AI agent?

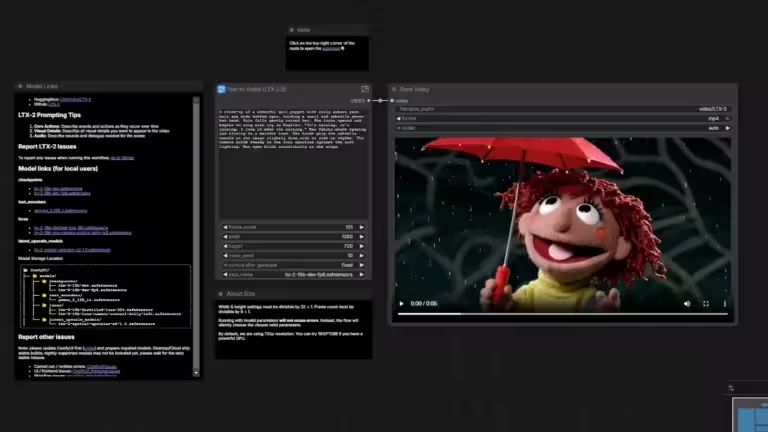

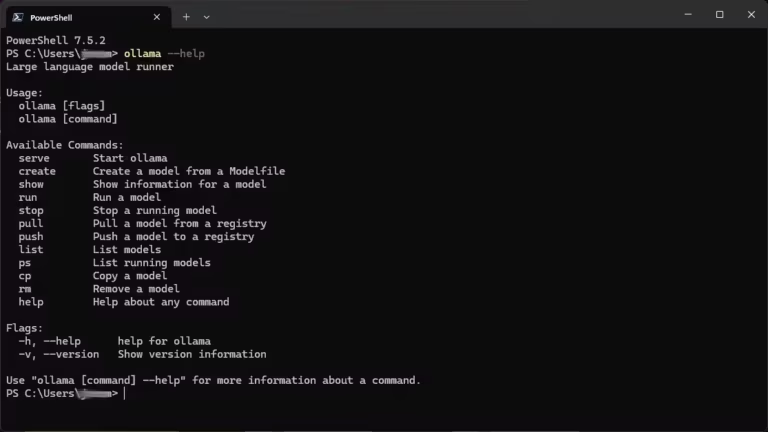

Terminal-based AI coding agents run directly in your shell (Bash, Zsh, PowerShell). Unlike extensions embedded in an IDE, these tools execute in a minimal environment and offer deeper control over the system, files, and workflows.

Their role goes far beyond autocompletion. A terminal AI agent can generate code across multiple files, launch unit tests, run scripts, manage Git commits, or even auto-fix errors detected in logs. In practice, they behave like a virtual command-line developer capable of automating complex sequences.
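The test-and-fix cycle described above reduces to a bounded loop. The Python sketch below is a simplified illustration, not how aider, Claude Code, or any specific tool is implemented: it assumes the project's tests run under pytest, and `apply_model_fix` stands in for a hypothetical callback that sends the failure log to an LLM and writes the suggested edits to disk.

```python
import subprocess
import sys
from typing import Callable

# Assumption: the project's tests run with pytest under the current interpreter.
DEFAULT_TEST_CMD = [sys.executable, "-m", "pytest", "-q"]


def run_command(cmd: list[str]) -> tuple[bool, str]:
    """Run a command and return (succeeded, combined stdout and stderr)."""
    proc = subprocess.run(cmd, capture_output=True, text=True)
    return proc.returncode == 0, proc.stdout + proc.stderr


def fix_until_green(
    run_tests: Callable[[], tuple[bool, str]],
    apply_model_fix: Callable[[str], None],
    max_rounds: int = 3,
) -> bool:
    """Run tests, hand failure logs to the model, and retry up to max_rounds fixes."""
    for _ in range(max_rounds):
        passed, log = run_tests()
        if passed:
            return True
        # Hypothetical callback: sends the log to an LLM and writes its edits to disk.
        apply_model_fix(log)
    passed, _ = run_tests()  # check the result of the final fix attempt
    return passed
```

You would call it as `fix_until_green(lambda: run_command(DEFAULT_TEST_CMD), my_fix_fn)`, where `my_fix_fn` is your own (hypothetical) model-calling function. Capping the number of rounds keeps a model that cannot find the fix from looping forever and burning tokens or GPU time.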

Popular tools in 2025 include aider (works with cloud or local models), known for its smooth Git integration; Claude Code (paid); Cline (primarily a VS Code extension, but one that can run shell commands on your behalf); and Gemini CLI (open source, with many forks). All of them let you interact with powerful AI models. There are also open-source projects like OpenDevin (since renamed OpenHands) and Smol Developer experimenting with greater autonomy for multi-step tasks.

As Shakudo.io points out, terminal AI coding agents appeal especially to DevOps-oriented developers or teams working on highly automated projects. Their strength is flexibility and closeness to the infrastructure, even if the user experience is less visual than with an IDE extension. That said, nothing stops you from driving an AI agent from the terminal and then opening the same project in VS Code (or another IDE) to fine-tune it with an AI code extension.

Extensions vs Terminal Agents — Side-by-Side Comparison

In 2025, the question comes up a lot: should you favor an AI extension inside an IDE such as VS Code, or a terminal-based agent? Both aim to boost productivity and automate tasks, but they target different profiles.

IDE extensions shine for comfort. With a simple plugin, you get an integrated coding assistant that can complete, refactor, or document code in real time. Tools like GitHub Copilot slot perfectly into a developer’s daily workflow with a clear, intuitive UI.

By contrast, terminal-based agents favor power and flexibility. They’re especially appreciated for complex tasks: test automation, Git workflows, multi-file generation, and pipeline execution. Solutions like aider or Claude Code can even orchestrate entire projects from the terminal, which suits DevOps and full-stack developers.

| Criterion | IDE AI Extensions | Terminal-Based AI Agents |
|---|---|---|
| Primary use | Autocompletion, refactoring, debugging in VS Code/JetBrains | Automation, project orchestration, system commands |
| Examples | GitHub Copilot, ChatGPT CodeGPT, Tabnine, Continue, Local LLM Copilot | aider, Claude Code, Gemini CLI, OpenDevin, Smol Developer |
| Strengths | Simplicity, visual comfort, immediate productivity gains | Flexibility, control, Git integration, advanced automation |
| Limits | Tied to the IDE, often cloud-dependent | Less intuitive, requires terminal proficiency |

As Microsoft TechCommunity suggests, the future likely leans toward a hybrid approach that blends extensions and terminal agents based on the task at hand.

Productivity impact in 2025

Adoption of AI coding agents, whether IDE extensions or terminal agents, is transforming developer productivity in 2025, across several dimensions.

For individual developers, an AI extension in VS Code saves time on repetitive tasks: smart autocompletion, documentation generation, and straightforward bug fixes. As the official Visual Studio Code blog notes, these tools integrate so well that the productivity boost can feel invisible, until you realize certain tasks now take half the time.

For terminal agents, the win is advanced automation. A tool like aider or Claude Code can manage an entire cycle: run tests, fix errors, create a Git commit, and even document changes. That’s a big gain for DevOps teams where tight coordination between development and infrastructure is key.
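The commit-and-document step can be sketched the same way: collect the staged diff, ask a model for a message, then commit. This Python sketch is an assumption about how such a step could look, not the actual behavior of aider or Claude Code; the 8,000-character truncation limit and the prompt wording are arbitrary illustrative choices.

```python
import subprocess


def staged_diff() -> str:
    """Return the diff of staged changes (what the next commit will contain)."""
    return subprocess.run(
        ["git", "diff", "--cached"], capture_output=True, text=True, check=True
    ).stdout


def build_commit_prompt(diff: str, max_chars: int = 8000) -> str:
    """Build a prompt asking a model for a commit message; truncate huge diffs."""
    if len(diff) > max_chars:
        diff = diff[:max_chars] + "\n[diff truncated]"
    return (
        "Write a concise Git commit message (imperative summary line under 72 "
        "characters, blank line, then a short bullet list of changes) for this diff:\n\n"
        + diff
    )


def commit(message: str) -> None:
    """Create the commit with the model-written message."""
    subprocess.run(["git", "commit", "-m", message], check=True)
```

A typical call would be `commit(ask_model(build_commit_prompt(staged_diff())))`, where `ask_model` is a hypothetical function that sends the prompt to whichever cloud or local model you use. In practice you would want to review the generated message before committing.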

Finally, running these agents locally on a high-end GPU like the RTX 5090 further accelerates adoption: there is no network round-trip to a remote API, and your code never leaves your machine. That matters, because granting a cloud AI full access to your codebase is often problematic. As MarkTechPost has highlighted, models like DeepSeek-Coder or Qwen3-30B deliver strong performance without cloud dependency.

When to choose an IDE AI extension

IDE-based AI coding agents are ideal when you want seamless, immediate integration in your development environment. They’re best for developers who spend most of their time in an IDE like Visual Studio Code or JetBrains and want an embedded AI assistant to save time every day.

In practice, an AI extension is perfect to:

- write faster with contextual autocompletion

- refactor a complex file effortlessly

- generate unit tests in seconds

- get clear explanations of a code block

- accelerate debugging directly inside the editor

Solutions like GitHub Copilot offer a full experience, suggesting multi-language code and assisting across entire projects. Others, like Cline, prioritize transparency and compatibility with locally running open-source LLMs such as DeepSeek-Coder or Llama 3 70B quantized via Ollama or LM Studio.

The main advantage is simplicity: a few clicks to install, and you have a genuine coding companion. For immediate productivity without changing habits, IDE extensions are hard to beat.

When to choose a terminal-based AI agent

Terminal AI agents target developers who value flexibility and advanced automation. Unlike IDE extensions, these tools live in the shell and can orchestrate entire workflows that go far beyond plain autocompletion.

A terminal agent is particularly useful when you need to:

- automate creation and modification of files across a complex project

- run a unit-test suite and then fix detected errors

- drive continuous integration with Git commits and CI/CD pipelines

- execute system scripts or infrastructure-specific commands

- work in a minimal environment with no IDE dependency

Tools like aider, Cline, Claude Code, or Gemini CLI are already favored for DevOps and full-stack projects. Others, like OpenDevin and Smol Developer, explore even more autonomous scenarios, handling multi-step development with little human intervention.

One major advantage of terminal agents is their partial or full compatibility with open-source models running locally or on your own servers. With LLMs like Qwen3-30B, DeepSeek-Coder 33B, or gpt-oss, you can build an agent that's powerful, fast, and completely cloud-independent, which suits experienced developers who want full control.

Best open-source models and local tools in 2025

A big recent shift is the ability to run AI coding agents locally thanks to high-end GPUs like the RTX 5090 (32 GB VRAM). This enables a new wave of open-source, code-optimized models accessible without the cloud and customizable to your stack.

Standout options include:

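- Qwen3-30B (quantized): known for speed (up to ~60 tokens/s on RTX 5090) and strong reasoning. Much of that speed comes from its mixture-of-experts design (Qwen3-30B-A3B), which activates only about 3B parameters per token. Great for multilingual, complex projects.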

- DeepSeek-Coder 33B: a code-specialized model with robust code generation and bug-fixing ability.

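- Llama 3 70B Instruct (4–5-bit quantized): versatile across many languages with solid performance. At these bit widths the weights alone take roughly 35–44 GB, so on a single 32 GB card part of the model must be offloaded to system RAM, which slows generation.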

- StarCoder2 (3B, 7B, or 15B): designed for software development, shines at scripting and multi-language projects.

- GPT-OSS:20B: a strong “lightweight” choice; with MXFP4 quantization it can run in ~16 GB VRAM.
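A quick back-of-the-envelope calculation shows why quantization matters on a 32 GB card: weight memory is roughly the parameter count times bits per weight, divided by 8. The Python sketch below uses approximate public parameter counts (about 30.5B total for Qwen3-30B, about 21B for gpt-oss-20b), treats 4-bit as exactly 4 bits per weight and MXFP4 as about 4.25 bits (4-bit values plus a shared scale per 32-value block, ignoring layers kept at higher precision), and assumes a flat 2 GB for KV cache and runtime overhead. Real 4-bit file formats such as GGUF Q4_K_M average somewhat more than 4 bits per weight, and long contexts need far more KV cache, so treat the results as lower bounds.

```python
# Rough VRAM estimate for model weights only. Real usage also needs room for the
# KV cache, activations, and runtime overhead (the 2 GB default below is an assumption).


def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Weight memory in GB (1 GB = 1e9 bytes): parameters x bits / 8."""
    return params_billion * bits_per_weight / 8


def fits_in_vram(weights_gb: float, vram_gb: float, overhead_gb: float = 2.0) -> bool:
    """True if the weights plus an assumed fixed overhead fit on the card."""
    return weights_gb + overhead_gb <= vram_gb


RTX_5090_VRAM_GB = 32

# (label, parameters in billions, assumed average bits per weight)
candidates = [
    ("Qwen3-30B, 4-bit", 30.5, 4.0),
    ("DeepSeek-Coder 33B, 4-bit", 33.0, 4.0),
    ("Llama 3 70B Instruct, 4-bit", 70.0, 4.0),
    ("gpt-oss-20b, MXFP4 (~4.25 bits)", 21.0, 4.25),
]

for label, params, bits in candidates:
    gb = weight_memory_gb(params, bits)
    verdict = "fits" if fits_in_vram(gb, RTX_5090_VRAM_GB) else "needs CPU offload"
    print(f"{label}: ~{gb:.1f} GB of weights -> {verdict}")
```

On these assumptions, the 70B model's 4-bit weights alone (about 35 GB) exceed the card's 32 GB, while the 20B to 33B models fit with headroom to spare.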

Deployment tools that make this easier:

- Ollama for terminal-first workflows

- LM Studio for a friendly desktop UI

- Nut Studio to manage multiple models

- llama.cpp for highly optimized, open-source inference

This combo, quantized models plus local deployment tools, yields powerful, fast, privacy-respecting coding agents.

Practical recommendations by profile

- Solo developer or freelancer: if your goal is to speed up writing and fixing code, an IDE AI extension like GitHub Copilot is the simplest path. The experience is fluid and stays inside your usual environment (VS Code, JetBrains).

- DevOps-oriented or full-stack team: for projects heavy on automation (tests, deployments, pipelines), terminal agents like aider, Claude Code, or OpenDevin fit better. They centralize and automate entire workflows directly from the shell.

- Hardware/performance enthusiast: if you have an RTX 5090 (or similar), running a quantized local LLM (Qwen3-30B, DeepSeek-Coder 33B, GPT-OSS:20B) via Ollama or LM Studio delivers power, privacy, and cloud independence.

In the end, the two approaches are complementary: use an IDE extension for everyday editor tasks, and a terminal agent to automate pipelines and tests. As Microsoft TechCommunity suggests, the future belongs to hybrid workflows.

FAQ – Common questions

What’s the difference between an IDE AI extension and a terminal AI agent?

An IDE AI extension integrates into editors like VS Code to assist writing and debugging. A terminal agent works in the shell to automate broader tasks like testing, Git management, or deployments.

Do AI coding agents work offline?

Yes, if you use local open-source LLMs like Qwen3-30B or GPT-OSS:20B via tools such as Ollama or LM Studio, together with an extension or agent that can point to a local endpoint. This improves privacy and can reduce latency.

Best open-source coding models in 2025?

DeepSeek-Coder, Llama 3 70B (quantized), StarCoder2, and Qwen3-30B are among the most popular.

Do I need a powerful PC?

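An RTX 5090 (32 GB VRAM) is ideal for quantized 30B-class models. 70B models can run too, but at 4-bit their weights alone (~35 GB) exceed 32 GB, so part of the model must be offloaded to system RAM. Lighter options like GPT-OSS:20B, with 4-bit MXFP4 quantization, run in ~16 GB VRAM.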

Is GitHub Copilot private?

Copilot is cloud-based, so data may transit to external servers. For maximum privacy, choose local agents.

Conclusion

In 2025, AI coding agents are no longer curiosities; they're real productivity levers. Choosing between an IDE extension (in VS Code, for example) and a terminal-based agent depends on your needs and work style.

If you want simplicity and instant gains, IDE extensions like GitHub Copilot or Cline turn your editor into a true programming assistant. If you prioritize flexibility, automation, and control, terminal agents like aider or Claude Code are the natural fit.

With modern GPUs (RTX 5090) and open-source models like DeepSeek-Coder, Qwen3-30B, or GPT-OSS:20B, you can now run agents locally without the cloud, combining privacy and speed. While these LLMs may be less precise than top proprietary models like Claude, their low cost enables heavier usage, and with the right implementation the results can rival cloud offerings.

There isn't a single right choice; the two approaches complement each other. The future likely belongs to developers who combine IDE extensions and terminal agents to get the best of both worlds.
