AI coding agents: the reality on the ground beyond benchmarks

As we enter 2026, the software industry is undergoing a structural transformation driven by the rise of autonomous agents. Independent analyses estimate that Claude Code may already be involved in roughly 4% of public commits on GitHub. This is not an official statistic, but it illustrates the scale of the phenomenon. Yet behind the promises of total automation carried by new models such as Claude Opus 4.6 and GPT-5.3 Codex, a gap persists between laboratory scores and real-world production.

The value of an AI agent no longer lies in solving isolated algorithmic puzzles, but in navigating the complexity and disorder of a real project: technical debt, unforeseen dependencies, shared state, and business constraints.

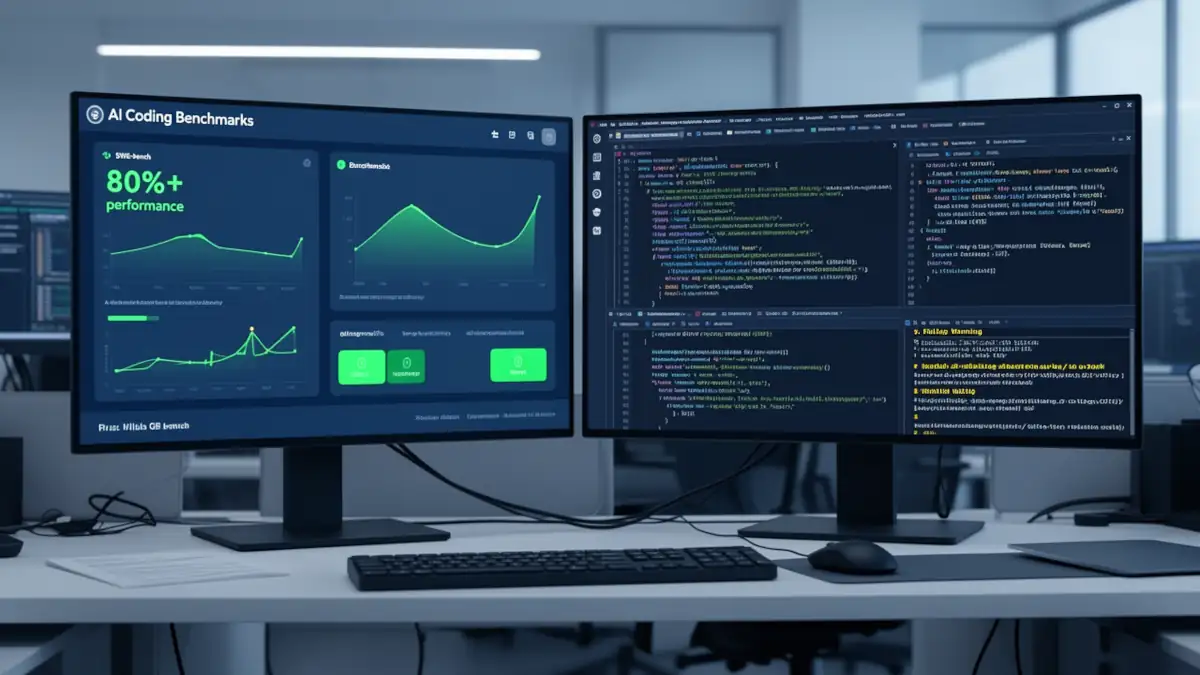

The illusion of scores: why benchmarks do not deliver your code

Standard benchmarks such as SWE-bench show impressive success rates, with the most powerful models now scoring above 80% on SWE-bench Verified. However, these figures mask critical operational limits.

The risk of technical self-referentiality

Some researchers and practitioners highlight a risk of circularity: when models contribute to generating or adapting the tests themselves, scores may reflect internal optimization rather than generalizable robustness. This tendency toward self-referentiality can create a performance mirage that collapses as soon as the agent is confronted with a non-standardized environment.

Terminal-Bench vs. production

While next-generation tools claim massive adoption, their behavior in command-line (CLI) environments reveals systemic fragilities. With GPT-5.3 Codex, several community reports have documented behaviors such as the “CAT pattern”: rewriting an entire file, often destructively, instead of applying a surgical patch.

This lack of granularity, analyzed in our agentic failure audit, reflects a persistent difficulty in handling legacy code without introducing regressions. OpenAI began addressing this critical point as early as GPT-5.1 with dedicated patch tools, an approach later consolidated in GPT-5.2 and GPT-5.3 Codex.
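The difference between a surgical patch and a CAT-style rewrite can be measured mechanically. The sketch below is a hypothetical guard, not any vendor's patch tool: it uses Python's standard `difflib` to estimate what fraction of a file an agent's edit replaces, returns a reviewable unified diff for targeted edits, and rejects wholesale rewrites. The `review_edit` name and the 30% threshold are illustrative assumptions.

```python
import difflib


def changed_line_ratio(before: str, after: str) -> float:
    """Fraction of the original lines that an edit deletes or replaces."""
    old_lines = before.splitlines()
    new_lines = after.splitlines()
    if not old_lines:
        return 0.0 if not new_lines else 1.0
    matcher = difflib.SequenceMatcher(a=old_lines, b=new_lines, autojunk=False)
    kept = sum(block.size for block in matcher.get_matching_blocks())
    return 1.0 - kept / len(old_lines)


def review_edit(before: str, after: str, path: str = "module.py",
                max_ratio: float = 0.3) -> str:
    """Return a unified diff for a targeted edit, or reject a wholesale rewrite.

    max_ratio is an arbitrary illustrative threshold, not a published value.
    """
    ratio = changed_line_ratio(before, after)
    if ratio > max_ratio:
        raise ValueError(
            f"edit rewrites {ratio:.0%} of {path}; ask the agent for a targeted patch"
        )
    diff = difflib.unified_diff(
        before.splitlines(keepends=True),
        after.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff)
```

Changing one line in a ten-line file yields a ratio of about 0.1 and a small diff a human can review; regenerating every line yields 1.0 and is refused, which is exactly the regression risk the CAT pattern creates in legacy code.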

Agentic architecture: sub-agents and verification loops

To overcome these limits, system architectures are evolving toward multi-layered structures: instead of a single model writing code, a coordinated team of agents shares the work.

Modern orchestration and protocols

Modern agentic architectures, including those presented by Anthropic, rely on specialized sub-agents and on protocols such as the Model Context Protocol (MCP). This approach frames code execution more tightly around three pillars:

- Role Definition: Each unit possesses specific tools and permissions.

- Fan-out Management: Limiting the number and depth of sub-tasks to keep the system from scattering its effort.

- Context Isolation: Each agent has its own context window, which keeps its reasoning focused.
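The three pillars can be sketched in a few lines. This is a minimal, hypothetical model, not Anthropic's implementation or any real framework's API: `SubAgent` and `spawn` are illustrative names, each agent carries a tool allow-list (role definition), `spawn` caps both nesting depth and children per agent (fan-out management), and every child starts with an empty private context (context isolation). The limits of 2 levels and 3 children are arbitrary assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class SubAgent:
    """A hypothetical sub-agent: a role, a tool allow-list, and a private context."""
    role: str
    allowed_tools: frozenset
    depth: int = 0
    children: int = 0
    context: list = field(default_factory=list)  # never shared with other agents

    def call_tool(self, tool: str, argument: str) -> str:
        # Role definition: refuse any tool outside this agent's allow-list.
        if tool not in self.allowed_tools:
            raise PermissionError(f"{self.role!r} is not allowed to use {tool!r}")
        self.context.append(f"{tool}({argument})")
        return f"{tool}: ok"

    def summary(self) -> str:
        # Only a short summary travels back to the parent, not the full context.
        return f"{self.role}: {len(self.context)} tool call(s)"


def spawn(parent: SubAgent, role: str, tools: set,
          max_depth: int = 2, max_children: int = 3) -> SubAgent:
    """Fan-out management: cap both nesting depth and children per agent."""
    if parent.depth + 1 > max_depth:
        raise RuntimeError(f"depth limit {max_depth} reached under {parent.role!r}")
    if parent.children >= max_children:
        raise RuntimeError(f"{parent.role!r} already has {max_children} sub-agents")
    parent.children += 1
    # Context isolation: the child starts with an empty context of its own.
    return SubAgent(role=role, allowed_tools=frozenset(tools), depth=parent.depth + 1)
```

For example, a `planner` that may only read files can spawn a `tester` that may only run tests; the tester's tool calls fill its own context, and the planner receives nothing but the one-line `summary()`.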

Verification loops: automating self-review

An agent’s effectiveness now depends on its feedback loop. Instead of delivering raw code, systems build in validation steps: running unit tests, static analysis, and state comparison. This architectural rigor, detailed in our analysis of securing agents with MCP and smolagents, is essential to limit unnecessary token burn on complex tasks.
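A verification loop reduces to a small control structure: generate a candidate, run cheap checks before expensive ones, feed the first failure back, and stop after a bounded number of rounds so a stuck agent cannot burn tokens indefinitely. The sketch below is hypothetical: `generate` stands in for a model call, the checks are a syntax check via Python's built-in `compile` and a unit test via `exec`, and all function names are illustrative. A real system would execute candidates in a sandbox, never with a bare `exec`.

```python
from typing import Callable, List, Optional, Tuple

# A check returns an error message, or None when the candidate passes.
Check = Callable[[str], Optional[str]]


def syntax_check(code: str) -> Optional[str]:
    """Cheap static analysis: does the candidate even parse?"""
    try:
        compile(code, "<candidate>", "exec")
    except SyntaxError as exc:
        return f"SyntaxError: {exc.msg} (line {exc.lineno})"
    return None


def unit_test(func_name: str, cases: list) -> Check:
    """Build a check that calls func_name on (args, expected) pairs."""
    def check(code: str) -> Optional[str]:
        namespace: dict = {}
        try:
            exec(code, namespace)  # a real system would run this in a sandbox
            func = namespace[func_name]
            for args, expected in cases:
                got = func(*args)
                if got != expected:
                    return f"{func_name}{args} returned {got!r}, expected {expected!r}"
        except Exception as exc:  # report any crash as feedback, not as a hard failure
            return f"{type(exc).__name__}: {exc}"
        return None
    return check


def verification_loop(generate: Callable[[List[str]], str], checks: List[Check],
                      max_rounds: int = 3) -> Tuple[str, int]:
    """Regenerate until every check passes, feeding failures back to the generator."""
    feedback: List[str] = []
    for round_no in range(1, max_rounds + 1):
        candidate = generate(feedback)
        feedback = []
        for check in checks:  # run checks in order; stop at the first failure
            error = check(candidate)
            if error is not None:
                feedback.append(error)
                break
        if not feedback:
            return candidate, round_no
    raise RuntimeError(f"no passing candidate after {max_rounds} rounds: {feedback}")
```

With a stand-in model whose first draft of `add` subtracts instead of adding, the unit test fails with `add(2, 3) returned -1, expected 5`, that message is passed back, and the corrected second draft is accepted in round 2.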

Privacy and sovereignty: from Cloud to Edge AI

One of the major roadblocks to adopting agents in regulated sectors (finance, defense, health) remains data leakage. Entrusting a proprietary codebase to a third-party SaaS agent is a strategic risk that many companies are no longer willing to take in 2026.

The failure of “Pure SaaS” for sensitive code

The current paradigm, in which every terminal command is sent to a central server for an LLM to interpret, creates an unacceptable attack surface. This explains the emergence of frameworks of the ECCC (Edge Code Cloak Coder) type. These solutions run reasoning locally on optimized open-weight models and synchronize only high-level architectural metadata with the cloud, so the code itself never leaves the infrastructure.
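The split between local code and cloud-synchronized metadata can be illustrated with Python's standard `ast` module. The sketch below is an assumption about what "high-level architectural metadata" might contain (module name, imported packages, class and method names, function signatures); it is not the API or data format of any ECCC framework. Function bodies, constants, and string literals never enter the payload.

```python
import ast
import json


def architecture_metadata(source: str, module: str) -> dict:
    """Summarize a module's structure without including any implementation code."""
    tree = ast.parse(source)
    imports, classes, functions = set(), [], []
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            imports.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            imports.add(node.module.split(".")[0])
    for node in tree.body:  # top-level definitions only
        if isinstance(node, ast.ClassDef):
            methods = [item.name for item in node.body
                       if isinstance(item, (ast.FunctionDef, ast.AsyncFunctionDef))]
            classes.append({"name": node.name, "methods": methods})
        elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            params = [arg.arg for arg in node.args.args]
            functions.append({"name": node.name, "params": params})
    return {"module": module, "imports": sorted(imports),
            "classes": classes, "functions": functions}


def cloud_payload(source: str, module: str) -> str:
    # Only this JSON string would be sent to a remote planner; the source stays local.
    return json.dumps(architecture_metadata(source, module), sort_keys=True)
```

A remote planner could still reason about structure ("`reconcile` takes a ledger and a period") while secrets, business rules, and implementation details stay on the machine. Deciding exactly which fields are safe to share is a policy choice each organization would have to make.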

The rise of local and hybrid models

The democratization of high-performance local execution (through specialized NPUs or optimized quantization) lets agents run directly on the developer’s machine or on a sovereign private cloud. This shift toward Edge AI is not only a matter of security: it also significantly reduces the latency and cost of API calls, making agents more responsive and able to work autonomously in offline or restricted environments.

The agentic economy: shifting from seats to outcomes

The massive integration of agents is also disrupting the economic model of software development tools.

From subscription to results

The traditional per-seat SaaS model is being challenged. Companies are starting to favor outcome-based billing: rather than paying for access to a tool, they pay for the successful completion of a task (e.g., a PR merged after passing all tests). This transition pushes providers to maximize the reliability of their agents rather than the time users spend on the platform.
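The billing rule described above fits in a few lines. This sketch assumes an outcome is billable only when the PR was merged and all tests passed; the `TaskResult` fields and the flat per-outcome price are hypothetical, and amounts are kept in integer cents to avoid floating-point rounding.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass(frozen=True)
class TaskResult:
    task_id: str
    pr_merged: bool
    tests_passed: bool


def outcome_invoice(results: Iterable[TaskResult],
                    price_cents: int) -> Tuple[List[str], int]:
    """Bill only completed outcomes: a merged PR whose tests all passed."""
    billable = [r.task_id for r in results if r.pr_merged and r.tests_passed]
    return billable, len(billable) * price_cents
```

Under this rule an abandoned PR, or one merged with failing tests, costs the customer nothing, which is what shifts the provider's incentive toward reliability.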

The new face of ROI

With an estimated 10x to 30x ROI for companies that have successfully automated their maintenance and CI/CD pipelines with agents, investment is shifting from headcount to orchestration infrastructure. As the SemiAnalysis study notes, the goal is no longer to replace the developer, but to eliminate toil (low-value tasks) so that human intelligence can focus on product design and complex auditing.

FAQ: Understanding the 2026 agentic landscape

What is the difference between an AI assistant and an AI agent?

An assistant (like early versions of GitHub Copilot) suggests code as you type. An agent (like Claude Code or Codex) takes a goal as input (e.g., “fix bug #42”), explores the file system, runs tests, and iterates autonomously until the task is complete.

Is GPT-5.3 Codex better than Claude Opus 4.6 for coding?

It depends on the use case. Codex excels at raw volume and rapid script generation, while Claude Opus 4.6, combined with MCP, currently offers better consistency on long tasks and stronger security in “Expert” environments.

What is the difference between MCP and simple function calling?

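Function calling is a model capability: the model emits a structured request for a function that the application has declared in that provider's own format. The Model Context Protocol (MCP) is an interoperability standard layered on top of this idea: a tool or data source is exposed once through an MCP server, and any MCP-compatible client can connect to it in a uniform and secure way, without rewriting the integration for every single model.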
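What makes MCP model-independent is that its messages are plain JSON-RPC 2.0, with methods such as `tools/list` (discovery) and `tools/call` (invocation) defined by the specification. The sketch below only builds the request payloads with Python's standard `json` module; the `run_tests` tool is hypothetical, and the transport (stdio or HTTP) and the `initialize` handshake that must open a real session are deliberately left out.

```python
import itertools
import json
from typing import Optional

_request_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# How a hypothetical server might describe a tool in its tools/list result.
RUN_TESTS_TOOL = {
    "name": "run_tests",
    "description": "Run the project's unit tests under a given path.",
    "inputSchema": {
        "type": "object",
        "properties": {"path": {"type": "string"}},
        "required": ["path"],
    },
}

# The client discovers tools, then invokes one by name with JSON arguments.
list_request = jsonrpc_request("tools/list")
call_request = jsonrpc_request(
    "tools/call", {"name": "run_tests", "arguments": {"path": "tests/"}}
)
```

The same `tools/call` message works whichever model sits behind the client; only the client's translation between the model's native function-calling format and MCP changes, which is the "write the integration once" benefit described above.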

Beyond code: supervision

The integration of these technologies is redefining the development lifecycle. The engineer is not disappearing; they are shifting their stance to become a flow architect and a guardian of business intent.

The agentic revolution is not a matter of replacement, but of control. When code is generated at industrial speed, the human capacity to arbitrate between multiple solutions becomes the most valuable skill. We will explore this shift in our next guide dedicated to the “Vibe Coder”, understood here not as an improvised coder, but as an AI-augmented developer capable of orchestrating, supervising, and governing agents with professional rigor.
