LangGraph: the open-source backbone of modern AI agents

In the fast-evolving world of artificial intelligence, where every month brings new large language models, one name increasingly stands out as the open-source backbone of agentic AI: LangGraph. Built by the LangChain team, this framework has, by 2025, become a reference for building autonomous AI agents that orchestrate multiple language models, commercial or open source, without relying on a single provider. For a broader overview of building autonomous agents, see our guide "How to build AI agents independent of any LLM provider".

The era when an AI agent depended exclusively on OpenAI or Anthropic is ending. Companies now seek technological sovereignty and multi-LLM flexibility. LangGraph addresses this need through a modular and transparent approach centered on graph-based orchestration of intelligent workflows.

Understanding the graph-based orchestration approach

LangGraph does more than control language models. It structures an agent’s logic as an orchestration graph, where each node represents an action, decision, or sub-agent, and the edges define the flow of data and control.

This approach, described in the official LangGraph documentation, provides three key advantages:

- Readable flow: the agent’s reasoning becomes visual and traceable.

- Parallelization: multiple tasks can run simultaneously.

- Dynamic replanning: the agent adjusts execution based on context or detected errors.
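The parallelization point can be sketched in plain Python, outside LangGraph. In LangGraph itself, a node with several outgoing edges lets its targets run in the same step, with their state updates merged afterwards; the example below only mimics that fan-out/fan-in pattern with `asyncio.gather`. The node names and the simulated delay are hypothetical.

```python
import asyncio
import time

# Hypothetical independent nodes; each returns a partial state update.
async def fetch_docs(query: str) -> dict:
    await asyncio.sleep(0.2)  # simulate I/O such as an API or database call
    return {"docs": f"docs about {query}"}

async def fetch_news(query: str) -> dict:
    await asyncio.sleep(0.2)
    return {"news": f"news about {query}"}

async def fan_out_fan_in(query: str) -> dict:
    # Fan-out: start both nodes concurrently.
    updates = await asyncio.gather(fetch_docs(query), fetch_news(query))
    # Fan-in: merge the partial updates into one shared state.
    state = {"query": query}
    for update in updates:
        state.update(update)
    return state

if __name__ == "__main__":
    start = time.perf_counter()
    result = asyncio.run(fan_out_fan_in("vector databases"))
    print(result, f"{time.perf_counter() - start:.2f}s")  # roughly 0.2 s, not 0.4 s
```

Because both stand-in nodes wait concurrently, the whole fan-out takes roughly one delay rather than two.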

Unlike traditional sequential architectures, where tasks run one after another, LangGraph lets agents behave as adaptive systems that can loop back and reevaluate their own reasoning. This explicit control over flow is what sets it apart from frameworks built around simpler control-flow models, such as AutoGen or Semantic Kernel (now integrated into the Microsoft Agent Framework).

A LangGraph orchestration can include:

- Action nodes (model call, API query, computation)

- Decision nodes (conditions, validations, LLM routing)

- Memory nodes (context storage and updates)
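To make the three node types concrete, here is a deliberately tiny graph runner in plain Python. It is not LangGraph's API (LangGraph's own entry point is `StateGraph`, with `add_node`, `add_edge` and `add_conditional_edges`); `MiniGraph` and the node functions are hypothetical names chosen for illustration. The decision is expressed as a routing function attached to an edge, which is also how LangGraph models conditional branching.

```python
from typing import Callable, Dict, Optional

State = dict
Node = Callable[[State], State]
Router = Callable[[State], Optional[str]]

class MiniGraph:
    """Toy state graph (hypothetical, not LangGraph's API)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.routers: Dict[str, Router] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: Optional[str]) -> None:
        # Fixed edge: always continue to dst (None ends the run).
        self.routers[src] = lambda state: dst

    def add_conditional_edge(self, src: str, router: Router) -> None:
        # Decision: the router reads the state and names the next node.
        self.routers[src] = router

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        current: Optional[str] = start
        for _ in range(max_steps):
            if current is None:
                break
            # Each node returns a partial update merged into the shared state.
            state = {**state, **self.nodes[current](state)}
            current = self.routers.get(current, lambda s: None)(state)
        if current is not None:
            raise RuntimeError("step limit reached without finishing")
        return state

def draft_node(state: State) -> State:
    # Action node: stand-in for a model call that extends the draft.
    return {"draft": state["draft"] + "+", "attempts": state["attempts"] + 1}

def memory_node(state: State) -> State:
    # Memory node: append the latest draft to the running history.
    return {"history": state["history"] + [state["draft"]]}

def decide(state: State) -> Optional[str]:
    # Decision: loop back until the draft is long enough, with a cap on attempts.
    if len(state["draft"]) >= 3 or state["attempts"] >= 5:
        return None
    return "draft"

graph = MiniGraph()
graph.add_node("draft", draft_node)
graph.add_node("memory", memory_node)
graph.add_edge("draft", "memory")
graph.add_conditional_edge("memory", decide)

final = graph.run("draft", {"draft": "", "attempts": 0, "history": []})
print(final)  # {'draft': '+++', 'attempts': 3, 'history': ['+', '++', '+++']}
```

The loop between the action and memory nodes is the "dynamic replanning" idea in miniature: the router re-reads the state after every pass and decides whether another pass is needed.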

This graph-based architecture is increasingly adopted in advanced AI infrastructure because it provides greater observability, fine-grained workflow control, and natural resilience when a model returns errors or becomes unavailable.

State persistence: the memory core of AI agents

One of LangGraph’s most powerful features is state persistence: the ability to resume a task after an interruption without losing context. Where simpler pipelines restart from zero, LangGraph checkpoints the graph's shared state after each execution step, so an interrupted run can pick up from the last completed step.
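A minimal sketch of checkpoint-and-resume, written in plain Python so that it runs without any dependency. `InMemoryCheckpointer` and `run` are hypothetical names, not LangGraph classes; in LangGraph the same role is played by a checkpointer (for example `MemorySaver`) passed to `compile()`, with a `thread_id` supplied in the run configuration.

```python
import json
from typing import Callable, Dict, List, Optional, Tuple

Step = Tuple[str, Callable[[dict], dict]]

class InMemoryCheckpointer:
    """Hypothetical checkpoint store: keeps the latest checkpoint per thread id."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def save(self, thread_id: str, next_step: int, state: dict) -> None:
        # Serialize so later mutations cannot alter the saved copy.
        self._store[thread_id] = json.dumps({"next": next_step, "state": state})

    def load(self, thread_id: str) -> Tuple[int, Optional[dict]]:
        raw = self._store.get(thread_id)
        if raw is None:
            return 0, None
        data = json.loads(raw)
        return data["next"], data["state"]

def run(steps: List[Step], state: dict, saver: InMemoryCheckpointer, thread_id: str) -> dict:
    start, saved = saver.load(thread_id)
    if saved is not None:
        state = saved  # resume from the last completed step
    for i in range(start, len(steps)):
        _name, fn = steps[i]
        state = {**state, **fn(state)}
        saver.save(thread_id, i + 1, state)  # checkpoint after every step
    return state

# Demo: the second step fails once, as a network call might.
calls = {"fetch": 0, "summarize": 0}

def fetch(state: dict) -> dict:
    calls["fetch"] += 1
    return {"text": "raw report"}

def summarize(state: dict) -> dict:
    calls["summarize"] += 1
    if calls["summarize"] == 1:
        raise ConnectionError("simulated network failure")
    return {"summary": state["text"].upper()}

steps = [("fetch", fetch), ("summarize", summarize)]
saver = InMemoryCheckpointer()
try:
    run(steps, {}, saver, "thread-1")
except ConnectionError:
    pass  # the first attempt dies mid-run; its checkpoint survives
result = run(steps, {}, saver, "thread-1")
print(result, calls)  # fetch ran once, summarize twice
```

On the second call, the `fetch` step is not executed again: the run resumes from the checkpoint saved after it.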

This persistent memory relies on external storage systems that developers can choose depending on their needs:

- Redis for ultra-fast access. Ideal for in-memory persistence and volatile state graphs. Simple integration via LangGraph Memory or Celery-like workers.

- PostgreSQL for transactional durability. A stable relational database with SQL queries, logging, and easy auditing. Perfect for tracking runs outside LangSmith.

- Qdrant, Milvus, Chroma, or FAISS for vector-based semantic memory and retrieval.

An AI agent built with LangGraph can therefore remember conversations, keep track of partial task queues, or resume a computation interrupted by network failure.

As highlighted in the LangSmith documentation, this persistence enables detailed traceability, replay of execution flows, and precise debugging of decisions (LangChain source).

By combining relational storage (state and logs) with a vector database for semantic memory, a LangGraph deployment can strike a rare balance between reliability and contextual intelligence.
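To illustrate the split between relational and semantic memory, here is a minimal sketch that uses only the Python standard library. SQLite stands in for PostgreSQL, and a toy bag-of-words similarity stands in for real embeddings stored in Qdrant or Chroma; the table layout and the `log_step`, `remember` and `recall` helpers are hypothetical.

```python
import math
import sqlite3
from collections import Counter

# Relational side: an append-only run log (SQLite standing in for PostgreSQL).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE run_log (run_id TEXT, step INTEGER, node TEXT, output TEXT)")

def log_step(run_id: str, step: int, node: str, output: str) -> None:
    db.execute("INSERT INTO run_log VALUES (?, ?, ?, ?)", (run_id, step, node, output))
    db.commit()

# Semantic side: a toy vector memory (a real setup would use a vector DB).
def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call an embedding model.
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

memory: list = []  # list of (text, vector) pairs

def remember(text: str) -> None:
    memory.append((text, embed(text)))

def recall(query: str, k: int = 1) -> list:
    q = embed(query)
    ranked = sorted(memory, key=lambda item: cosine(q, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

remember("the user prefers postgres for audit logs")
remember("weekly report is due on friday")
log_step("run-1", 1, "recall", recall("which database for audit logs")[0])
rows = db.execute("SELECT node, output FROM run_log WHERE run_id = ?", ("run-1",)).fetchall()
print(rows)  # [('recall', 'the user prefers postgres for audit logs')]
```

The semantic store answers "what is relevant?", while the relational log answers "what happened, and when?", which is why the two are usually combined rather than substituted for one another.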

Comparison summary:

| Main objective | Recommended solution | Justification |
|---|---|---|
| Fast orchestration, queues, volatile states | Redis | Ideal for in-memory persistence and transient graphs. Easy integration via LangGraph Memory or Celery-like workers. |
| Persistent run logs and state tracking | PostgreSQL | Stable SQL database, queryable logs and easy audits. Ideal if you need to trace runs outside LangSmith. |
| Semantic memory (context, embeddings, RAG) | Vector DB (FAISS, Chroma, Qdrant, Milvus, Weaviate) | Similarity search, great for conversational or contextual AI agents. Can be combined with PostgreSQL. |

Recommended setup

- Redis to start, light and simple.

- PostgreSQL for structured tracing outside LangSmith.

- Vector DB for advanced usage if your agents use embeddings or RAG pipelines (Retrieval-Augmented Generation).

Practical examples

| Use case | Recommended stack |
|---|---|
| Agent workflow testing | LangGraph + Redis |
| Stable production deployment | LangGraph + PostgreSQL (+ Redis cache) |
| Context-aware AI agents with retrieval | LangGraph + Vector DB (Qdrant or Chroma) |

For a complete agentic AI development environment (persistence plus contextual memory), PostgreSQL + Qdrant offers the most versatility, and adding Redis improves caching and inter-process coordination. Start with PostgreSQL + Qdrant for advanced capabilities, or with LangGraph + Redis for a lightweight introduction.

A truly LLM-agnostic framework

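LangGraph fully embraces the LLM-agnostic principle. Because graph nodes call models through a common interface, the model choice can live in configuration, for example in simple environment variables read by the application at startup:

AGENT_LLM_PROVIDER=openai
AGENT_LLM_MODEL=gpt-5

Switching models then becomes a configuration change rather than a code change.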

This abstraction allows agents to move seamlessly between ChatGPT, Claude, Gemini, Mistral, or local models via Ollama or vLLM, without touching core logic.
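A minimal sketch of how an application might read these variables, assuming a small registry of adapter functions. The adapters are hypothetical stubs that only echo their input; in a real project they would wrap each vendor's SDK or a LangChain chat model integration. The point is that graph nodes receive a ready-made `llm` callable and never name a provider themselves.

```python
import os
from typing import Callable, Dict, Mapping

# Hypothetical provider adapters; real ones would wrap each vendor's SDK.
def openai_chat(model: str, prompt: str) -> str:
    return f"[openai:{model}] {prompt}"

def anthropic_chat(model: str, prompt: str) -> str:
    return f"[anthropic:{model}] {prompt}"

def ollama_chat(model: str, prompt: str) -> str:
    return f"[ollama:{model}] {prompt}"

PROVIDERS: Dict[str, Callable[[str, str], str]] = {
    "openai": openai_chat,
    "anthropic": anthropic_chat,
    "ollama": ollama_chat,
}

def make_llm(env: Mapping[str, str] = os.environ) -> Callable[[str], str]:
    """Build a prompt -> text function from AGENT_LLM_PROVIDER / AGENT_LLM_MODEL."""
    provider = env.get("AGENT_LLM_PROVIDER", "openai")
    model = env.get("AGENT_LLM_MODEL", "gpt-5")
    if provider not in PROVIDERS:
        raise ValueError(f"unknown provider: {provider}")
    chat = PROVIDERS[provider]
    return lambda prompt: chat(model, prompt)

# A graph node only sees `llm`, so swapping providers never touches node code.
def summarize_node(state: dict, llm: Callable[[str], str]) -> dict:
    return {"summary": llm("Summarize: " + state["text"])}

# In production, make_llm() would read os.environ; an explicit mapping keeps the demo deterministic.
llm = make_llm({"AGENT_LLM_PROVIDER": "ollama", "AGENT_LLM_MODEL": "mistral"})
print(summarize_node({"text": "LangGraph orchestrates agents."}, llm))
```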

This dynamic multi-LLM routing is essential for hybrid environments where cost, latency, and accuracy vary by task. For instance:

- Claude handles analytical reasoning

- ChatGPT generates natural language

- Mistral provides fast summarization

- A local fine-tuned model analyzes private data
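One way to express this routing is a plain lookup function. The table below is hypothetical and simply mirrors the list above; in a LangGraph graph, such a function could serve as the routing function of a conditional edge, with one node per model.

```python
# Hypothetical routing table mirroring the list above: task type -> model.
ROUTES = {
    "analysis": "claude",
    "writing": "chatgpt",
    "summary": "mistral",
}
LOCAL_MODEL = "local-finetuned"
DEFAULT_MODEL = "chatgpt"

def route(task: dict) -> str:
    """Pick a model for a task; private data always stays on the local model."""
    if task.get("private", False):
        return LOCAL_MODEL
    return ROUTES.get(task["type"], DEFAULT_MODEL)

for task in [
    {"type": "analysis"},
    {"type": "summary"},
    {"type": "summary", "private": True},
    {"type": "translation"},
]:
    print(task, "->", route(task))
```

Checking the privacy flag before the task type means a confidentiality rule can never be overridden by a cost or speed preference.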

This flexibility gives LangGraph a strong form of resilience: if one provider fails, the agent can keep running on a fallback model, provided the graph defines one. Microsoft applies similar logic in its Agent Framework, where each service includes fallback providers (official Microsoft blog).
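A fallback chain reduces to trying providers in order until one answers. The sketch below uses hypothetical adapter functions and a hypothetical `ProviderError`; LangChain runnables also offer a `with_fallbacks` method for the same purpose.

```python
from typing import Callable, List, Tuple

class ProviderError(Exception):
    """Raised by a (hypothetical) adapter when its provider is unavailable."""

Provider = Tuple[str, Callable[[str], str]]

def call_with_fallback(prompt: str, providers: List[Provider]) -> Tuple[str, str]:
    """Try providers in order; return (provider name, answer) from the first that succeeds."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            errors.append(f"{name}: {exc}")  # record the failure, then try the next provider
    raise RuntimeError("all providers failed: " + "; ".join(errors))

def cloud_model(prompt: str) -> str:
    raise ProviderError("503 Service Unavailable")  # simulated outage

def local_model(prompt: str) -> str:
    return f"local answer to {prompt!r}"

print(call_with_fallback("Summarize the report", [("cloud", cloud_model), ("local", local_model)]))
```

Only provider-availability errors trigger the fallback here; other exceptions still propagate, so a bug in the prompt or the node is not silently masked by switching models.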

Memory, tools and extensions: the LangGraph ecosystem

LangGraph leverages the strength of the LangChain ecosystem. Developers can integrate tools, RAG pipelines, and enterprise connectors.

LangGraph is not a semantic search engine itself, but it interoperates with open-source retrieval frameworks. Among them, Haystack by deepset.ai stands out as a leading retrieval and question-answering framework (Haystack documentation).

An agent can, for example:

- Query APIs like Notion, Slack or HubSpot

- Use an open-source RAG engine such as Haystack to enrich answers

- Store its context in Qdrant or Weaviate

- Deploy as a service via LangServe for web or production use

This modularity relies on declarative tools, often defined with OpenAPI schemas. For stability, each tool should be idempotent: executing the same call twice must leave global state as if it had run once (for example, it must not create a duplicate object). This prevents cascading errors in multi-LLM environments and during automatic retries.
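A common way to make a side-effecting tool idempotent is an idempotency key: derive a key from the run and the exact arguments, and have the target system return the existing result when it sees the same key again. The sketch below assumes a hypothetical `TicketStore` standing in for an external system such as a CRM; real APIs that support idempotency keys do so through their own mechanisms.

```python
import hashlib
import json

class TicketStore:
    """Toy stand-in for an external system (hypothetical)."""

    def __init__(self) -> None:
        self.tickets: dict = {}
        self.seen_keys: dict = {}

    def create_ticket(self, title: str, body: str, idempotency_key: str) -> str:
        # A repeated call with the same key returns the original ticket instead of a duplicate.
        if idempotency_key in self.seen_keys:
            return self.seen_keys[idempotency_key]
        ticket_id = f"T{len(self.tickets) + 1}"
        self.tickets[ticket_id] = {"title": title, "body": body}
        self.seen_keys[idempotency_key] = ticket_id
        return ticket_id

def idempotency_key(run_id: str, tool: str, args: dict) -> str:
    # Same run + same tool + same arguments -> same key, so a retry maps to the same call.
    payload = json.dumps({"run": run_id, "tool": tool, "args": args}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()

def create_ticket_tool(store: TicketStore, run_id: str, title: str, body: str) -> str:
    key = idempotency_key(run_id, "create_ticket", {"title": title, "body": body})
    return store.create_ticket(title, body, key)

store = TicketStore()
first = create_ticket_tool(store, "run-1", "Bug", "details")
retry = create_ticket_tool(store, "run-1", "Bug", "details")  # e.g. an automatic retry
print(first, retry, len(store.tickets))  # T1 T1 1
```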

LangSmith provides the observability layer, tracing every model call, routing decision, and performance metric.

LangGraph and LangSmith work as two sides of the same system: one orchestrates, the other observes.

Observability and governance: from transparency to accountability

In a world where enterprises must balance innovation and compliance, LangGraph also stands out for its readiness for AI governance. Through LangSmith, every decision, LLM interaction, and node execution can be logged.

This complete observability enables:

- End-to-end auditability of agent workflows

- Anomaly and drift detection

- Compliance reporting aligned with GDPR or SOC 2 requirements

As noted by LangChain in its documentation, this traceability integrates easily into CI/CD pipelines. Developers can replay sessions, compare model versions, and automate agent testing.
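LangSmith covers this with its own tracing and evaluation features. The plain-Python sketch below only illustrates the underlying idea: record each node's input and output once, then replay the recorded inputs against a new version of a node and report where the outputs diverge, which is the kind of check a CI job can run. All names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

NodeFn = Callable[[dict], dict]

def record_trace(nodes: List[Tuple[str, NodeFn]], state: dict) -> List[dict]:
    """Run nodes in order, recording each node's input state and output update."""
    trace = []
    for name, fn in nodes:
        update = fn(state)
        trace.append({"node": name, "input": dict(state), "output": update})
        state = {**state, **update}
    return trace

def replay_diff(trace: List[dict], nodes: Dict[str, NodeFn]) -> List[str]:
    """Re-run every recorded input against the given nodes; list nodes whose output changed."""
    return [
        event["node"]
        for event in trace
        if nodes[event["node"]](event["input"]) != event["output"]
    ]

def extract(state: dict) -> dict:
    return {"fact": state["text"].split(".")[0]}

def write_v1(state: dict) -> dict:
    return {"report": "Fact: " + state["fact"]}

def write_v2(state: dict) -> dict:  # a candidate change to the writer node
    return {"report": "Key fact: " + state["fact"]}

baseline = record_trace([("extract", extract), ("write", write_v1)], {"text": "Qdrant stores vectors. More text."})
print(replay_diff(baseline, {"extract": extract, "write": write_v1}))  # []
print(replay_diff(baseline, {"extract": extract, "write": write_v2}))  # ['write']
```

Replaying each node on its recorded input isolates the change: only the node whose behavior actually differs is flagged, even though its output would also affect later nodes in a live run.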

This culture of transparency mirrors the Microsoft Agent Framework, but with the open-source philosophy: freedom of hosting, adaptability, and no Azure dependency.

A natural fit within the open-source AI stack

LangGraph integrates seamlessly into a complete open-source AI stack, typical of modern agentic infrastructure:

| Component | Role | Compatible tools |
|---|---|---|
| Orchestration | Agent workflow | LangGraph |
| Memory | Context and persistence | Qdrant, PostgreSQL, Redis |
| RAG | Semantic retrieval | Haystack, Weaviate |
| Monitoring | Observability and QA | LangSmith |
| Deployment | API and UI | LangServe, FastAPI, Streamlit |

This technological decoupling enables a BYOC (Bring Your Own Cloud) approach, letting developers choose their environment. The same system can run locally, in a private cloud, or in a hybrid setup with the same behavior. This reinforces data sovereignty, now a key criterion for enterprise AI adoption.

Limitations and best practices

LangGraph is powerful but requires disciplined design. The following recommendations, inspired by open-source projects and LangChain documentation, help maintain stability and scalability:

- Clarity before complexity: avoid deeply nested graphs that are hard to debug.

- Idempotence and timeouts: ensure reliable tools and predictable error handling.

- Observability from the start: enable LangSmith early in the project.

- Environment isolation: deploy in separate contexts (dev, staging, production).
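The idempotence-and-timeouts advice can be sketched as a small wrapper: each attempt gets a hard timeout, and failures are retried with exponential backoff. This is a generic `asyncio` pattern, not a LangGraph API; the wrapper name, the defaults and the simulated tool are hypothetical. Retries are only safe for idempotent tools, which is why the two practices go together.

```python
import asyncio
from typing import Awaitable, Callable

async def call_with_timeout_and_retry(
    tool: Callable[[], Awaitable[str]],
    timeout_s: float,
    attempts: int = 3,
    backoff_s: float = 0.05,
) -> str:
    """Run an idempotent async tool with a per-attempt timeout and exponential backoff."""
    for attempt in range(attempts):
        try:
            return await asyncio.wait_for(tool(), timeout=timeout_s)
        except (asyncio.TimeoutError, ConnectionError):
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            await asyncio.sleep(backoff_s * 2 ** attempt)  # back off longer after each failure
    raise ValueError("attempts must be at least 1")

calls = 0

async def flaky_tool() -> str:
    global calls
    calls += 1
    if calls == 1:
        await asyncio.sleep(1.0)  # the first call hangs past the timeout
    return "ok"

result = asyncio.run(call_with_timeout_and_retry(flaky_tool, timeout_s=0.1))
print(result, calls)  # ok 2
```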

LangGraph, the engine of AI autonomy

LangGraph has become a technical foundation for AI autonomy: an open-source, flexible, interoperable, and resilient framework. It gives developers rare freedom to choose their models, tools, and infrastructure while maintaining coherent reasoning and state persistence.

Compared with proprietary solutions, LangGraph offers a strong alternative built around multi-LLM orchestration, transparency, and technological sovereignty.

For organizations, it’s also a future-proof investment: ensuring that their AI agents remain viable even as the LLM market evolves.

More than a framework, LangGraph is the structural backbone of a new generation of AI agents designed to operate independently of any single provider.

Case study: building an autonomous AI agent with LangGraph

To better grasp the framework’s potential, consider an enterprise developing an internal AI agent to assist engineers with tech monitoring and report drafting.

Project context

The goal was to build an agent that:

- runs locally to preserve confidentiality

- can understand and summarize technical documentation

- communicates with multiple models depending on the task

Chosen architecture

The team created a fully open-source AI stack based on LangGraph:

- LangGraph for agent orchestration

- Qdrant for vector memory

- Haystack for semantic document retrieval

- vLLM for local execution of open-source LLMs

- LangSmith for observability and error tracking

The LangGraph structure contained three agents:

- Analyst Agent: extracts key insights using Claude

- Writer Agent: synthesizes results into fluent text with ChatGPT

- Reviewer Agent: validates content through a local fine-tuned model

Each agent is a node linked to the others in the graph, and each stores its state in persistent memory. If a model becomes unavailable, the graph's fallback routing automatically sends the task to an alternative provider (failover).
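The Analyst, Writer and Reviewer flow can be sketched in plain Python as a loop with a conditional exit. The three agent functions are hypothetical stubs standing in for the Claude, ChatGPT and local-model calls described above, and the length-based review rule is invented for the example; in LangGraph, the exit check would be a conditional edge leaving the reviewer node.

```python
# Hypothetical agent stubs standing in for Claude (analyst), ChatGPT (writer)
# and a local fine-tuned model (reviewer).
def analyst(state: dict) -> dict:
    insights = [s.strip() for s in state["source"].split(".") if s.strip()]
    return {"insights": insights}

def writer(state: dict) -> dict:
    if state.get("feedback"):
        draft = state["insights"][0]  # revise: keep only the key insight
    else:
        draft = "; ".join(state["insights"])
    return {"draft": draft, "revisions": state.get("revisions", 0) + 1}

def reviewer(state: dict) -> dict:
    approved = len(state["draft"]) <= state["max_len"]
    return {"approved": approved, "feedback": None if approved else "too long"}

def run_pipeline(source: str, max_len: int, max_revisions: int = 3) -> dict:
    state = {"source": source, "max_len": max_len}
    state.update(analyst(state))
    while True:
        state.update(writer(state))
        state.update(reviewer(state))
        # Conditional exit: stop once approved or when the revision budget is spent.
        if state["approved"] or state["revisions"] >= max_revisions:
            return state

source = "Qdrant stores vectors. vLLM serves local models. LangSmith traces runs."
result = run_pipeline(source, max_len=30)
print(result["draft"], result["revisions"])  # Qdrant stores vectors 2
```

The revision budget matters as much as the review itself: without it, a reviewer that never approves would keep the graph looping indefinitely.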

Results

- 25% cost reduction through hybrid cloud + local inference.

- Zero context loss across long-running sessions.

- Full observability via LangSmith logs and quality dashboards.

This example shows that an AI agent can be autonomous, auditable, and independent, without being locked into any single external API. LangGraph acts as a conductor, maintaining reasoning consistency, state recovery, and stable execution flows. It also scales naturally: organizations can integrate new models or expand their local LLM infrastructure without redesigning the system.

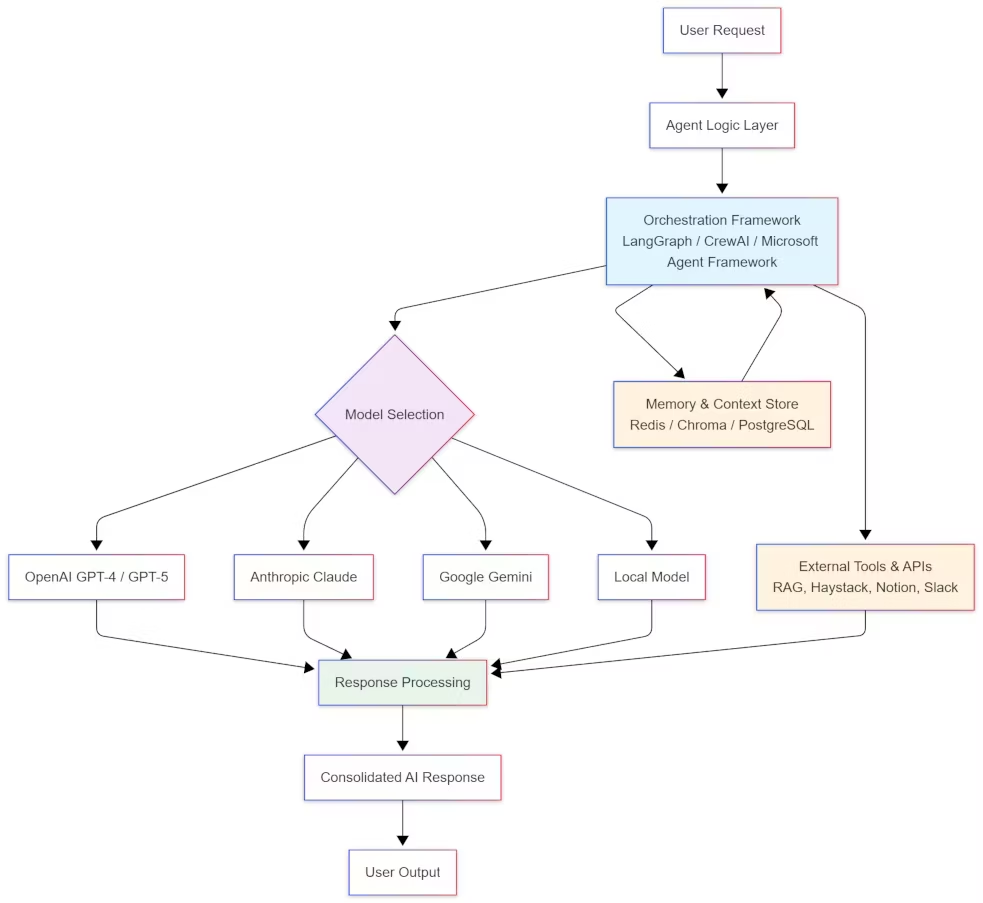

Diagram: simplified architecture of an AI agent with LangGraph

This illustration shows the graph orchestration concept: each agent acts as a node within a coordinated flow managed by LangGraph, with persistent memory and built-in observability.

Conclusion

LangGraph is more than just another tool in the open-source AI ecosystem. It has become a reference for orchestrating AI agents that use different language models, within architectures capable of learning, reasoning, and persisting over time.

Through its graph-based, modular, and traceable structure, LangGraph turns experimental prototypes into reliable, auditable, and extensible systems. Combined with components such as LangSmith, Chroma, or Weaviate, it forms a complete foundation for LLM-agnostic, interoperable AI agents.

Its real strength lies in its adaptability to the fast evolution of LLMs. As enterprises begin to train or customize their own open-weight models, LangGraph naturally integrates them alongside commercial models. This makes it a durable bridge between cloud-hosted and locally deployed LLMs, a key advantage for cost control and data sovereignty.

In a world where AI autonomy, technological resilience, and data privacy are strategic priorities, LangGraph shows how open source paves the way for both innovation and independence. It is more than a framework: it is an evolving foundation for distributed AI, ready for the next generation of large language models.

Recommended resources

- Official LangGraph documentation

- LangSmith – observability, measurement, and QA for AI agents

- LangServe – deploy LangGraph agents as APIs

- Chroma DB – open-source vector database for memory

- Haystack RAG – open-source semantic search engine

- CrewAI – collaborative multi-agent framework

- Microsoft Agent Framework – AI agent orchestration with governance focus
