OpenAI scales up: toward a global multi-cloud AI infrastructure

OpenAI is entering a new phase of expansion. The company led by Sam Altman has signed a $38 billion partnership with Amazon Web Services (AWS). This strategic move ends its exclusive reliance on Microsoft Azure and signals a clear ambition: to build a global multi-cloud AI infrastructure.

A historic alliance with Amazon Web Services

A $38 billion deal to secure computing power

On November 3, 2025, OpenAI signed a multi-year, $38 billion contract with AWS. According to Reuters, this is the largest infrastructure collaboration ever concluded by the company outside Microsoft Azure.

The goal: to secure access to hundreds of thousands of Nvidia GB200 and GB300 GPUs hosted in AWS data centers across the United States, with full deployment expected by the end of 2026. This initiative strengthens OpenAI’s ability to meet the growing compute demand of its future AI language and image models.

The deal inaugurates an era of AI cloud diversification, where major players no longer rely on a single provider but build distributed, resilient, and interoperable infrastructures.

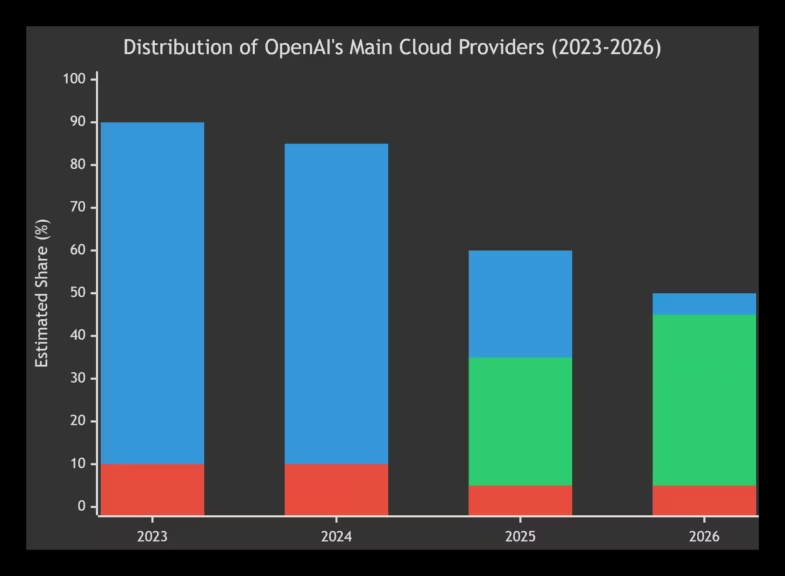

Color Legend:

- 🟦 Blue: Microsoft Azure – Leading cloud provider with a dominant share gradually decreasing from 90% to 50% between 2023 and 2026

- 🟧 Orange: Amazon Web Services (AWS) – New entrant growing significantly, increasing from 0% to 45% over the period

- 🟩 Green: Oracle Cloud – Stable minority provider with a consistent share around 5-10%

Reducing dependence on Microsoft Azure

Since 2019, Microsoft Azure has served as the foundation for OpenAI’s infrastructure under a partnership valued at over $13 billion. This relationship remains key, but relying on a single cloud provider has become a strategic risk: resource saturation, rigid contracts, and an imbalance of control.

By expanding to AWS, OpenAI embraces a logic of cloud resilience, similar to that of critical industries. This approach reduces operational risk and allows the company to rebalance its power dynamic with Microsoft, its long-time but now non-exclusive partner.

A global expansion of AI infrastructure

As Wired reports, OpenAI plans to deploy its computing resources across multiple continents, balancing GPU availability, energy costs, and regulatory constraints. The goal is to ensure continuous, distributed compute capacity worldwide, a key step to support next-generation AI models.
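The trade-off described above (GPU availability, energy costs, regulatory constraints) can be pictured as a simple placement rule. The sketch below is only an illustration: the `Region` fields, the region names, and every number in it are made-up placeholders, and nothing here reflects how OpenAI actually schedules its workloads.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Region:
    """Hypothetical description of one deployment region (all values are placeholders)."""
    name: str
    free_gpus: int        # GPUs currently available
    energy_cost: float    # relative energy cost, lower is better
    allowed: bool         # whether regulation permits the workload in this region


def pick_region(regions: List[Region], gpus_needed: int) -> Optional[Region]:
    """Keep regions that are permitted and have enough GPUs, then take the cheapest."""
    candidates = [r for r in regions if r.allowed and r.free_gpus >= gpus_needed]
    return min(candidates, key=lambda r: r.energy_cost, default=None)


# Illustrative data only, not real capacity or pricing.
regions = [
    Region("region-a", free_gpus=5000, energy_cost=1.0, allowed=True),
    Region("region-b", free_gpus=20000, energy_cost=0.7, allowed=False),
    Region("region-c", free_gpus=12000, energy_cost=0.8, allowed=True),
]

choice = pick_region(regions, gpus_needed=8000)
print(choice.name if choice else "no eligible region")  # prints "region-c"
```

Here the cheapest region is ruled out by regulation and the first one lacks capacity, so the rule settles on the third. A real scheduler would weigh many more factors, such as latency, data residency, and contract terms.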

The rise of OpenAI’s global compute infrastructure

$1.4 trillion in commitments over eight years

A few days after the AWS deal, Sam Altman revealed a colossal plan: $1.4 trillion in infrastructure commitments over the next eight years. According to TechCrunch, the investment covers data center construction, GPU purchases, and compute capacity leasing.

OpenAI expects to surpass $20 billion in annualized revenue by the end of 2025, a figure that supports its ambition to become a full-fledged AI infrastructure provider. The company aims for vertical integration: designing, training, and hosting its models on its own resources.
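To put the two figures side by side, here is a back-of-the-envelope calculation. It assumes, purely for illustration, that the $1.4 trillion is spread evenly over the eight years. The sources do not say how the commitments are actually scheduled, so the result is an order of magnitude, not a forecast.

```python
# Back-of-the-envelope comparison of the figures quoted above.
# Assumption (not from the sources): commitments are spread evenly over eight years.
TOTAL_COMMITMENTS_USD = 1.4e12  # $1.4 trillion in infrastructure commitments
YEARS = 8
REVENUE_USD = 20e9              # the $20 billion revenue figure expected by end of 2025


def average_annual_commitment(total: float, years: int) -> float:
    """Average yearly commitment under the even-spread assumption."""
    return total / years


annual = average_annual_commitment(TOTAL_COMMITMENTS_USD, YEARS)
ratio = annual / REVENUE_USD

print(f"Average commitment per year: ${annual / 1e9:.0f} billion")  # $175 billion
print(f"Multiple of the revenue figure: {ratio:.2f}x")              # 8.75x
```

Under that assumption, the average yearly commitment is close to nine times the revenue figure, which shows the scale of the financing challenge.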

An expansion model without public funding

OpenAI says it is not seeking government aid. As Reuters notes, Sam Altman prefers fully private financing, believing that autonomy ensures greater strategic agility.

This choice avoids political constraints but increases financial pressure. The company must prove the profitability of AI compute power in a market where it faces Google DeepMind, Anthropic, and Mistral AI.

The energy and environmental challenge of mega data centers

OpenAI’s rapid growth also raises energy concerns. Each data center consumes vast amounts of electricity and cooling. The company is exploring partnerships with renewable energy producers, yet large-scale deployment remains a challenge.

Computational efficiency is now a major issue. As AI models grow in complexity and performance, their carbon footprint rises. Balancing power and sustainability will be one of the defining challenges of the coming decade.

A new phase in AI cloud geopolitics

Balancing power among global cloud giants

OpenAI’s multi-cloud strategy reshapes the power balance between AWS, Microsoft Azure, and Google Cloud. Each is vying to become the essential platform for AI compute workloads.

This competition fuels innovation but also reinforces the concentration of compute power among a handful of corporations. For OpenAI, the hybrid multi-cloud approach offers both efficiency and leverage in negotiating with cloud partners.

GPU tensions and export restrictions

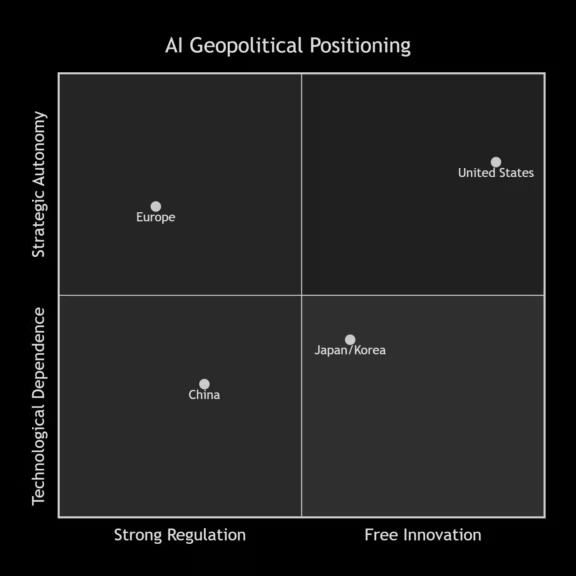

Hardware dependency is also tied to geopolitics. US President Donald Trump has confirmed an export ban on Nvidia’s Blackwell B100, B200, and B300 chips to China and its allies.

According to Forbes, the measure aims to limit the expansion of Chinese AI infrastructure. Nvidia CEO Jensen Huang confirmed that there are no active discussions about selling these chips in China (Reuters).

OpenAI now secures its GPU supply through Western suppliers, reinforcing the geopolitical lock-in of AI computing.

Europe lags behind the American acceleration

While the United States multiplies private investments, Europe struggles to keep up. According to the Financial Times, Brussels is considering delaying certain provisions of the AI Act under pressure from the tech industry.

This gap highlights the transatlantic technology divide: the US dominates AI compute infrastructure, while Europe remains dependent on American cloud providers.

Emerging players in next-generation AI

Inception: diffusion models as an alternative

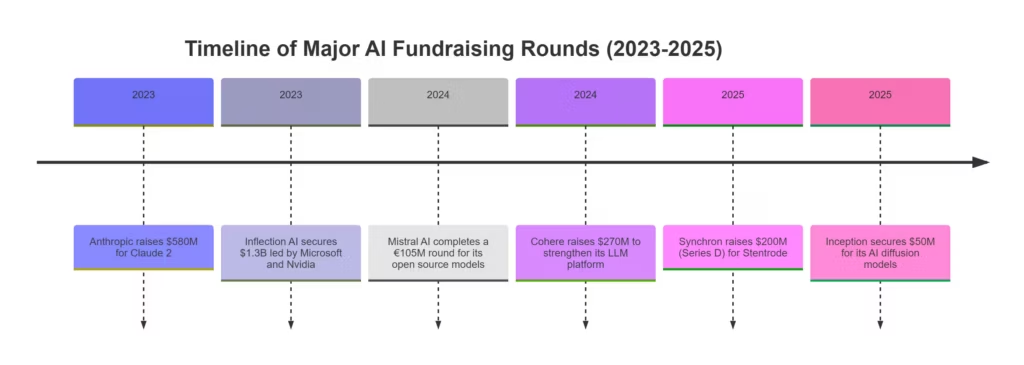

The startup Inception, founded by Stanford’s Stefano Ermon, has raised $50 million to develop diffusion-based language models. As TechCrunch reports, the company claims this approach can deliver up to ten times better performance than conventional autoregressive language models while using less energy.

These diffusion-based LLMs could revolutionize the field, enabling more efficient and sustainable model training with fewer GPUs.

Synchron and neurotechnological convergence

The company Synchron has raised $200 million for its brain-computer interface, known as Stentrode, according to Business Wire.

This innovation symbolizes the fusion between biological and artificial intelligence, opening new possibilities for human-AI interaction and showing how the AI research frontier extends beyond traditional cloud infrastructure.

A startup ecosystem backed by Nvidia and Microsoft

Around OpenAI, a dense ecosystem is emerging, fueled by NVentures (Nvidia), M12 (Microsoft), and Snowflake Ventures. These strategic investments aim to diversify AI approaches and prepare for the post-Transformer generation of AI models.

OpenAI, governance, and technological sovereignty

Who controls global computing power?

With its new investments, OpenAI is becoming a quasi-sovereign actor. Its planned compute capacity would rival that of nation-states, directly influencing the balance of global digital power.

This centralization raises a critical question: who controls knowledge and computation? Governments see OpenAI both as a strategic asset and as a potential source of technological dependency.

The dilemma of transparency and independence

OpenAI operates between two opposing forces: scientific transparency and industrial secrecy. Some of its models are released openly, while others are kept proprietary to maintain a competitive advantage.

This tension reflects a broader truth: the AI power race comes with an urgent need for global governance, beyond technical innovation alone.

What future for a decentralized global AI ecosystem?

Toward cross-cloud interoperability

OpenAI’s multi-cloud strategy could mark the beginning of cloud interoperability: multiple infrastructures capable of working together through shared standards. Such an approach would strengthen resilience and reduce dependence on any single provider.
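What a shared standard could look like in practice is sketched below: one common interface that any cloud can implement, plus a failover rule that moves to the next provider when one is unavailable. All names (`ComputeProvider`, `FakeProvider`, `submit_with_failover`, `cloud-a`, `cloud-b`) are hypothetical and stand in for no real cloud API. This is a sketch of the idea, not a description of OpenAI's systems.

```python
from typing import Protocol, Sequence


class ProviderUnavailable(Exception):
    """Raised when a provider cannot accept a job (hypothetical error type)."""


class ComputeProvider(Protocol):
    """Hypothetical shared interface that each cloud would implement."""
    name: str

    def submit(self, job: str) -> str: ...


class FakeProvider:
    """In-memory stand-in for a cloud provider, for illustration only."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy

    def submit(self, job: str) -> str:
        if not self.healthy:
            raise ProviderUnavailable(self.name)
        return f"{job} accepted by {self.name}"


def submit_with_failover(providers: Sequence[ComputeProvider], job: str) -> str:
    """Try each provider in order and return the first successful submission."""
    for provider in providers:
        try:
            return provider.submit(job)
        except ProviderUnavailable:
            continue  # this provider is down, try the next one
    raise ProviderUnavailable("all providers failed")


providers = [FakeProvider("cloud-a", healthy=False), FakeProvider("cloud-b")]
result = submit_with_failover(providers, "training-run-1")
print(result)  # prints "training-run-1 accepted by cloud-b"
```

Because the calling code depends only on the shared interface, adding or dropping a provider does not change it. That is the resilience benefit the paragraph above describes.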

The race for compute power and digital sovereignty

The alliance with AWS goes beyond technology. It is part of a reconfiguration of global digital sovereignty, where computing power becomes as strategic as energy or data.

The real challenge is no longer just creating the best AI models, but owning the infrastructure capable of sustaining them.

A new era for global AI infrastructure

OpenAI is no longer just building AI models; it is shaping the backbone of global artificial intelligence. Through its multi-cloud strategy and massive investments, the company positions itself as a central actor in AI compute.

This marks the dawn of a new era: AI as infrastructure and geopolitics, embedded in the power dynamics of the decade.

Sources and references

Tech media

- November 7, 2025, Financial Times

- November 7, 2025, Reuters

- November 6, 2025, TechCrunch

- November 4, 2025, Wired

- November 3, 2025, Forbes

Companies

- November 6, 2025, Business Wire

- November 6, 2025, Anthropic

- November 4, 2025, IBM newsroom

Institutions

- November 7, 2025, Euronews

- November 6, 2025, Wall Street Journal

Official sources

- November 7, 2025, Google Blog

- November 6, 2025, Lockheed Martin newsroom

- November 6, 2025, CNBC
