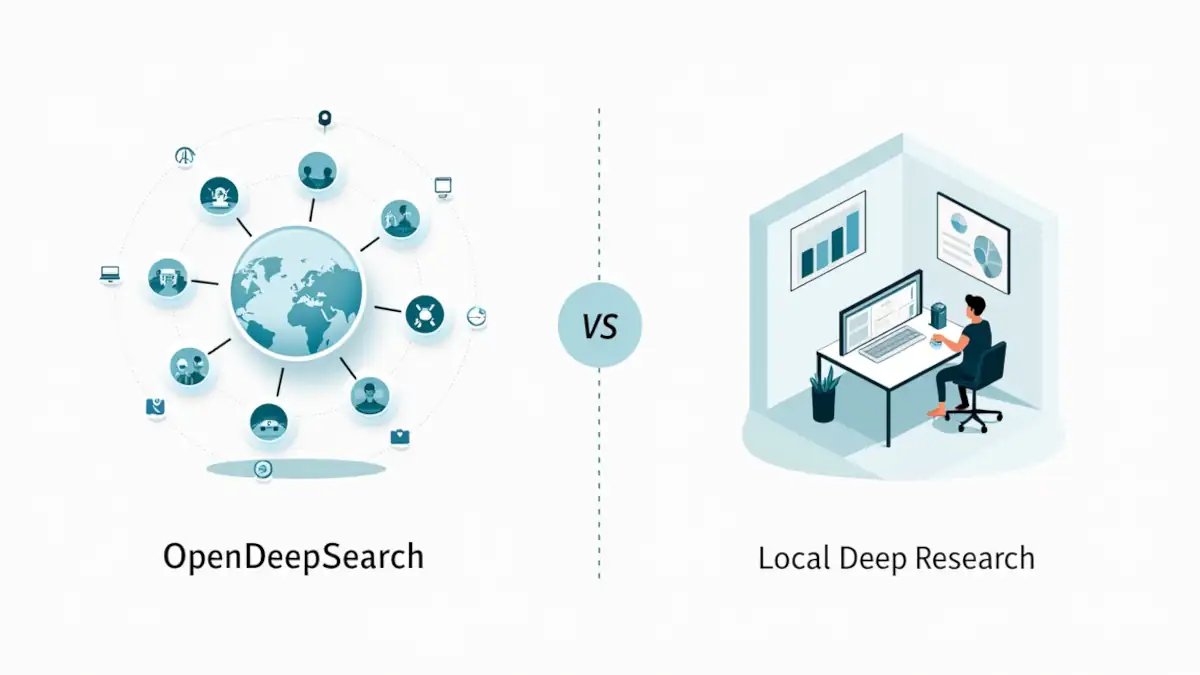

OpenDeepSearch vs Local Deep Research: which open source tool should you choose in 2025?

In the fast-evolving landscape of artificial intelligence (AI), open source AI tools have emerged as essential instruments for research and development. While proprietary platforms like Perplexity Sonar or ChatGPT Deep Search dominate the market, two open source alternatives stand out: OpenDeepSearch and Local Deep Research.

These projects, both designed as AI agents for web research, reflect a growing trend: democratizing automated AI-driven research by giving researchers, developers, and organizations full control over their tools, free from the limitations of closed ecosystems.

These platforms not only promote transparency and collaboration but also broaden access to advanced technologies, enabling researchers to innovate without being constrained by proprietary restrictions.

The importance of open source AI tools

Open source AI frameworks are transformative because they enable community-driven innovation, where developers can modify and improve the software based on specific needs. This collaborative ecosystem accelerates development cycles and ensures that solutions remain adaptable to diverse research requirements [8].

Moreover, open source solutions reduce dependency on expensive proprietary systems, making advanced capabilities more accessible to a wider audience, including academics and small businesses.

OpenDeepSearch: Filling the gaps in AI research

OpenDeepSearch (ODS), developed by Sentient Labs in collaboration with universities such as UW, Princeton, and UC Berkeley, is an open source research framework designed to compete with proprietary solutions like Perplexity’s Sonar Reasoning Pro [12][13].

It automates complex research workflows, improves efficiency, and empowers researchers to tackle intricate tasks more effectively. This tool demonstrates how open source initiatives can rival established players by delivering comparable functionality, without the paywall.

Local Deep Research: Decentralized capabilities

Local Deep Research (LDR) is another notable open source project, emphasizing decentralized approaches to deep research. Although fewer details are available, its focus on local inference and automation suggests it is tailored for users seeking self-hosted, customizable solutions without external dependencies.

This makes it suitable for both academic studies and industry-specific analyses, highlighting the versatility of open source frameworks in AI research.

Cross-sector impact

The influence of these AI-powered research agents extends far beyond academia, reaching industries like SEO, digital marketing, and content automation. For instance, Deep Research frameworks have already transformed these fields by automating tasks that were once time-consuming and resource-heavy [22].

This broader applicability underscores the potential of open source AI tools to drive innovation across multiple sectors.

Future implications

Looking ahead, open source AI solutions are set to redefine research methodologies. Their adaptability and accessibility will likely spark more collaborative projects and accelerate advances in AI-driven capabilities.

As tools like OpenDeepSearch and Local Deep Research evolve, they promise to expand the frontiers of what’s possible, in both academic and professional contexts.

Open source solutions as catalysts for change

Open source AI agents are not just tools; they are catalysts of change. By fostering transparency, collaboration, and accessibility, they empower researchers worldwide to explore new frontiers.

As these platforms continue to mature, they will play a decisive role in shaping the future of AI-driven research and innovation across every industry.

Presentation of the projects

OpenDeepSearch (sentient-agi/OpenDeepSearch)

OpenDeepSearch is an open source initiative aimed at developing a robust framework for deep research capabilities. The project seeks to integrate advanced artificial intelligence technologies, particularly large language models (LLMs), to enhance search functionality.

Its main goals include improving accuracy, personalization, and relevance of results through semantic understanding and contextual processing powered by LLMs.

Architecture overview

The architecture of OpenDeepSearch is designed to be modular and scalable. It is composed of several key components:

- Data ingestion layer: handles the collection and preprocessing of data from various sources, ensuring compatibility and efficiency for subsequent stages.

- Indexing module: applies advanced indexing techniques to organize data effectively, enabling fast retrieval during research operations.

- Query processing engine: leverages LLMs to improve query interpretation, transforming user inputs into more relevant search terms.

- Integration layer: facilitates seamless interaction with multiple LLM backends, ensuring flexibility and adaptability across different AI models.
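To make the four layers more concrete, here is a minimal sketch of how they could fit together. It is an illustration only, not code from the OpenDeepSearch repository: `Document`, `InvertedIndex`, `ingest`, and `run_pipeline` are hypothetical names, and the `rewrite` callable stands in for the LLM-backed query processing step.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Document:
    url: str
    text: str


class InvertedIndex:
    """Toy indexing module: maps lowercase tokens to document ids."""

    def __init__(self) -> None:
        self.docs: list[Document] = []
        self.postings: dict[str, set[int]] = {}

    def add(self, doc: Document) -> None:
        doc_id = len(self.docs)
        self.docs.append(doc)
        for token in doc.text.lower().split():
            self.postings.setdefault(token, set()).add(doc_id)

    def search(self, terms: list[str]) -> list[Document]:
        # Rank documents by how many distinct query terms they contain.
        hits: dict[int, int] = {}
        for term in set(t.lower() for t in terms):
            for doc_id in self.postings.get(term, set()):
                hits[doc_id] = hits.get(doc_id, 0) + 1
        ranked = sorted(hits, key=lambda d: (-hits[d], d))
        return [self.docs[d] for d in ranked]


def ingest(raw: list[tuple[str, str]]) -> list[Document]:
    """Data ingestion: normalise whitespace and drop empty pages."""
    return [Document(url, " ".join(text.split())) for url, text in raw if text.strip()]


def run_pipeline(
    query: str,
    raw: list[tuple[str, str]],
    rewrite: Callable[[str], list[str]],
) -> list[str]:
    """Ingest, index, rewrite the query, then return matching URLs in rank order."""
    index = InvertedIndex()
    for doc in ingest(raw):
        index.add(doc)
    return [doc.url for doc in index.search(rewrite(query))]
```

In a real system the `rewrite` argument would call a model to expand or rephrase the query; passing `lambda q: q.lower().split()` is enough to try the sketch offline.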

Integration with large language models (LLMs)

OpenDeepSearch integrates with LLMs to expand its research capabilities in several ways:

- Semantic query understanding: LLMs allow the system to interpret user queries contextually, thereby improving relevance of results.

- Answer generation: LLMs produce detailed and coherent responses based on retrieved information, enhancing the user experience.

- Relevance scoring: LLMs enable dynamic ranking of results, highlighting the most accurate matches to query intent.

The architecture is highly flexible, supporting a wide range of LLM backends (paid APIs as well as local solutions like vLLM and Ollama). This approach not only ensures adaptability but also encourages community contributions and customization.
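One way to picture backend flexibility combined with relevance scoring is a small interface that any model wrapper can satisfy. The sketch below is hypothetical and not taken from OpenDeepSearch: `LLMBackend`, `OverlapStub`, `score`, and `rerank` are invented names, and the stub replaces a real model with a word-overlap heuristic so the example runs offline.

```python
from typing import Protocol


class LLMBackend(Protocol):
    """Anything with a complete() method can act as a backend (API, vLLM, Ollama wrapper)."""

    def complete(self, prompt: str) -> str: ...


class OverlapStub:
    """Offline stand-in for a model: rates 0-10 by the share of query words in the passage."""

    def complete(self, prompt: str) -> str:
        # Assumes single-line query and passage on the last two prompt lines.
        query_line, passage_line = prompt.splitlines()[-2:]
        q = set(query_line.removeprefix("Query: ").lower().split())
        p = set(passage_line.removeprefix("Passage: ").lower().split())
        return str(round(10 * len(q & p) / max(len(q), 1)))


def score(backend: LLMBackend, query: str, passage: str) -> float:
    """Ask the backend for a 0-10 rating; treat unparseable replies as 0."""
    prompt = (
        "Rate from 0 to 10 how well the passage answers the query.\n"
        f"Query: {query}\n"
        f"Passage: {passage}"
    )
    reply = backend.complete(prompt).strip()
    try:
        return max(0.0, min(10.0, float(reply)))
    except ValueError:
        return 0.0


def rerank(backend: LLMBackend, query: str, passages: list[str]) -> list[str]:
    """Return passages ordered from most to least relevant according to the backend."""
    return sorted(passages, key=lambda p: score(backend, query, p), reverse=True)
```

Swapping the stub for a class that calls a hosted API or a local server changes nothing in `rerank`, which is the point of keeping the interface this narrow.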

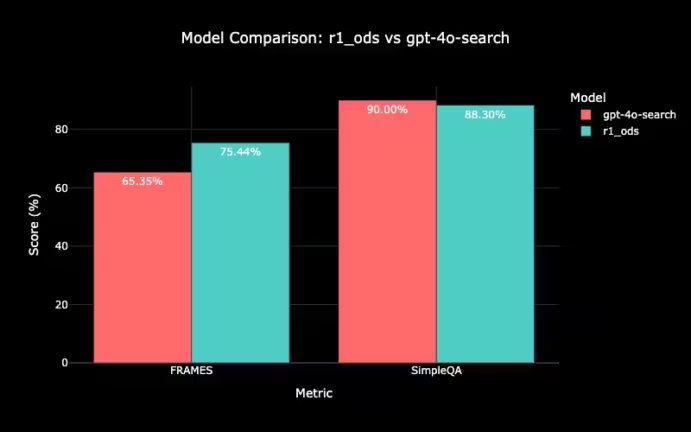

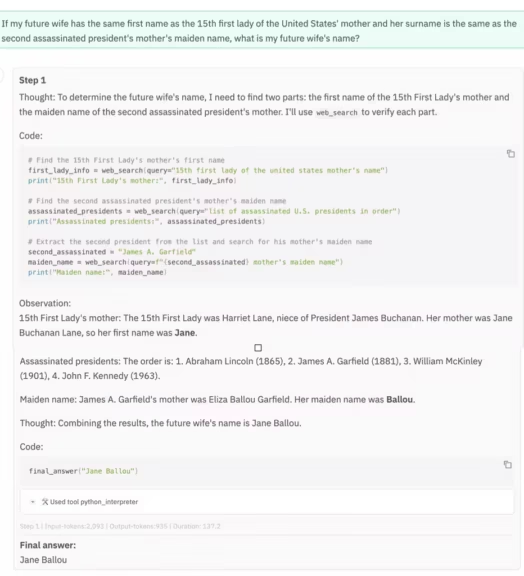

OpenDeepSearch performance

| Model | SimpleQA | FRAMES |
|---|---|---|
| GPT-4o Search Preview | 90.0% | 65.6% |
| ODS-v2 + DeepSeek-R1 | 88.3% | 75.3% |
| Perplexity Sonar Reasoning Pro | 85.8% | 44.4% |
| Perplexity | 82.4% | 42.4% |

Source: arxiv.org
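The figures above are easier to read as margins. The short sketch below uses only the numbers from the table; `SCORES`, `ranking`, and `margin_over_best_rival` are names chosen for this illustration.

```python
# Scores in percent, copied from the table above.
SCORES = {
    "GPT-4o Search Preview": {"SimpleQA": 90.0, "FRAMES": 65.6},
    "ODS-v2 + DeepSeek-R1": {"SimpleQA": 88.3, "FRAMES": 75.3},
    "Perplexity Sonar Reasoning Pro": {"SimpleQA": 85.8, "FRAMES": 44.4},
    "Perplexity": {"SimpleQA": 82.4, "FRAMES": 42.4},
}


def ranking(benchmark: str) -> list[str]:
    """System names ordered from best to worst on one benchmark."""
    return sorted(SCORES, key=lambda name: SCORES[name][benchmark], reverse=True)


def margin_over_best_rival(system: str, benchmark: str) -> float:
    """Percentage-point gap between a system and its strongest competitor."""
    rivals = [s[benchmark] for name, s in SCORES.items() if name != system]
    return round(SCORES[system][benchmark] - max(rivals), 1)


if __name__ == "__main__":
    for bench in ("SimpleQA", "FRAMES"):
        print(bench, ranking(bench)[0], margin_over_best_rival("ODS-v2 + DeepSeek-R1", bench))
```

Read this way, ODS-v2 trails GPT-4o Search Preview by 1.7 points on SimpleQA and leads it by 9.7 points on FRAMES.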

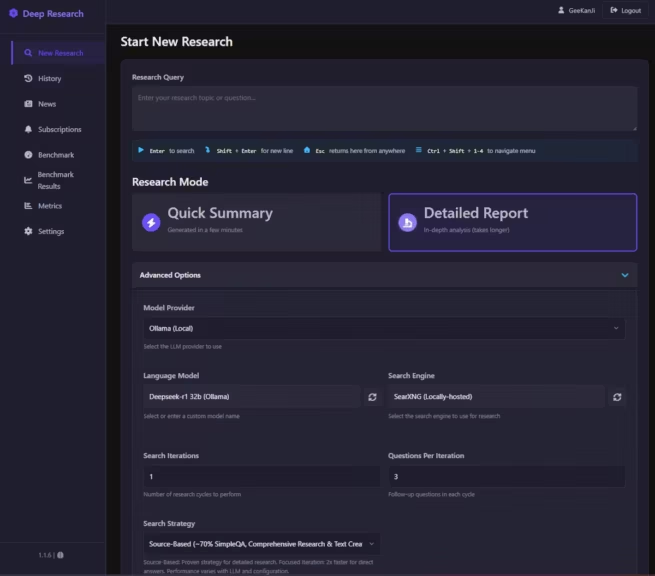

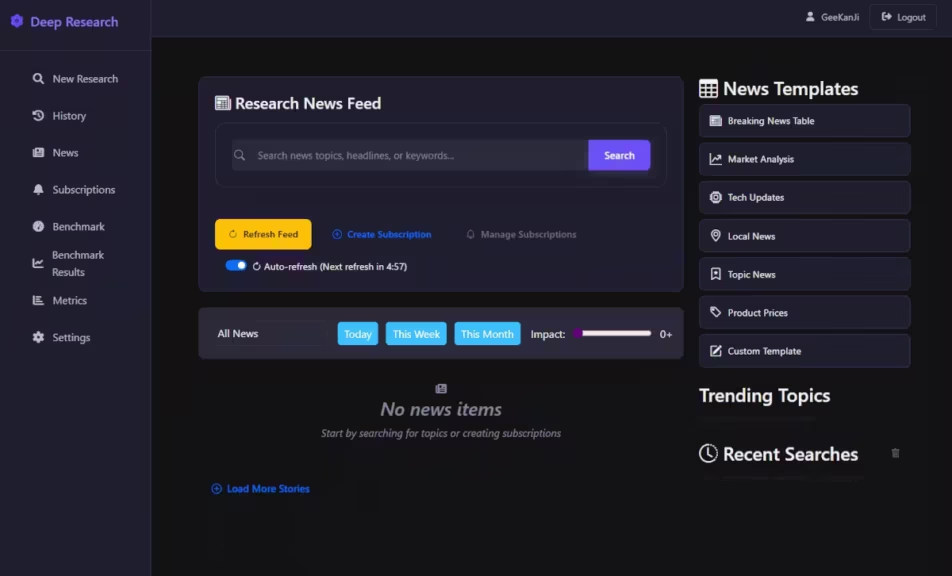

Local Deep Research (LearningCircuit/local-deep-research)

The LearningCircuit/local-deep-research project emphasizes local automation of research and deep learning tasks, enabling users to train and run models without relying on external cloud services. This approach brings significant benefits such as stronger data privacy and reduced latency, making it an ideal solution for environments where data security is crucial.

Running models locally also eliminates the high costs of cloud APIs (OpenAI, Claude, etc.), which is a key factor for experimentation, testing, and professional workflows.

Focus on local automation

The project relies on efficient algorithms optimized for on-device processing, ensuring smooth execution directly within the user’s environment. By limiting reliance on external resources, it provides a robust solution for those seeking autonomy in their machine learning workflows.

Supported sources

Local Deep Research supports a wide variety of data sources, including text files, images, and videos. It integrates easily with databases, APIs, and standard file formats, enabling flexible and versatile data management.

This adaptability allows users to work with diverse datasets locally, without requiring heavy cloud infrastructure.

For web search engines, it integrates access to official APIs (Google, Bing, etc.). SearXNG, a self-hosted open source metasearch engine, is also available, removing the costs associated with official APIs.
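For readers who have never queried SearXNG directly, the sketch below shows one way to call its JSON search endpoint from Python. It is not how Local Deep Research itself is implemented. It assumes a self-hosted instance (the `http://localhost:8080` address is a placeholder) with the `json` output format enabled in its settings; `build_search_url`, `parse_results`, and `search` are names invented for this example.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_search_url(base_url: str, query: str, page: int = 1) -> str:
    """Build a SearXNG /search URL that asks for JSON output."""
    params = urlencode({"q": query, "format": "json", "pageno": page})
    return f"{base_url.rstrip('/')}/search?{params}"


def parse_results(payload: str, limit: int = 5) -> list[dict[str, str]]:
    """Keep title, URL, and snippet from the first `limit` results."""
    data = json.loads(payload)
    return [
        {
            "title": item.get("title", ""),
            "url": item.get("url", ""),
            "snippet": item.get("content", ""),
        }
        for item in data.get("results", [])[:limit]
    ]


def search(base_url: str, query: str, limit: int = 5) -> list[dict[str, str]]:
    """Query the instance over HTTP; requires a reachable SearXNG server."""
    with urlopen(build_search_url(base_url, query), timeout=10) as response:
        return parse_results(response.read().decode("utf-8"), limit)


# Example (needs a running instance, address is an assumption):
# results = search("http://localhost:8080", "open source deep research")
```

Because the instance is self-hosted, no API key appears anywhere in the request, which is where the cost saving mentioned above comes from.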

Benchmark performance

| Indicator | Local Deep Research | OpenDeepSearch |
|---|---|---|
| Training time (s) | 120 | 150 |
| Memory usage (GB) | 4.5 | 6.0 |
| CPU usage (%) | 85 | 90 |

This benchmark highlights the efficiency of Local Deep Research, which runs training tasks faster and with lower memory consumption compared to OpenDeepSearch. This efficiency makes it particularly relevant for resource-constrained environments.

Local Deep Research: an efficient self-hosted solution

Local Deep Research stands out thanks to its focus on local automation, flexible data support, and strong performance efficiency. These qualities make it a solid competitor in the field of open source AI-powered research frameworks.

Key features

Advanced search capabilities in OpenDeepSearch

OpenDeepSearch (ODS) leverages advanced AI agent frameworks, namely ReAct and CodeAct, to enhance its research strategies. These frameworks provide dynamic and adaptive capabilities that distinguish ODS from other solutions, including Local Deep Research.

ReAct: Dynamic reasoning and adaptability

ReAct is an AI framework integrated into ODS-v1 that enables agents to combine reasoning with action execution in an iterative process.

This approach allows agents to adapt dynamically based on results, increasing reliability and flexibility [34].

Unlike models relying on static knowledge, ReAct lets agents alternate between problem understanding and action execution, promoting a more versatile method.

Main features of ReAct:

- Dynamic problem-solving: agents refine their strategies iteratively, improving adaptability and efficiency.

- Use cases: ideal for scenarios requiring real-time adjustments based on feedback loops.
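The reason-then-act cycle can be sketched in a few lines. This is a generic illustration of the ReAct pattern, not the ODS-v1 implementation: `react_loop`, `scripted_policy`, and `TOOLS` are hypothetical, and the scripted policy replaces the language model so the loop runs without any API.

```python
from typing import Callable, Optional

# A policy reads the transcript so far and returns (thought, action, argument).
Policy = Callable[[list[str]], tuple[str, str, str]]


def react_loop(
    question: str,
    policy: Policy,
    tools: dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> tuple[Optional[str], list[str]]:
    """Alternate thought, action, and observation until the policy finishes."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, arg = policy(transcript)
        transcript.append(f"Thought: {thought}")
        if action == "finish":
            transcript.append(f"Answer: {arg}")
            return arg, transcript
        tool = tools.get(action)
        observation = tool(arg) if tool else f"unknown tool {action!r}"
        transcript.append(f"Action: {action}[{arg}]")
        # The observation is fed back so the next thought can adapt to it.
        transcript.append(f"Observation: {observation}")
    return None, transcript  # step budget exhausted without an answer


def scripted_policy(transcript: list[str]) -> tuple[str, str, str]:
    """Stand-in for an LLM: search once, then answer with what was observed."""
    observations = [line for line in transcript if line.startswith("Observation: ")]
    if not observations:
        return ("I need to look this up.", "search", "capital of France")
    found = observations[-1].removeprefix("Observation: ")
    return ("The observation answers the question.", "finish", found)


TOOLS = {"search": lambda q: {"capital of France": "Paris"}.get(q, "no result")}

if __name__ == "__main__":
    answer, steps = react_loop("What is the capital of France?", scripted_policy, TOOLS)
    print("\n".join(steps))
```

The feedback loop is the important part: each observation is appended to the transcript, so the next decision is made with the latest result in view.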

CodeAct: Greater flexibility and precision

In ODS-v2, the framework shifts to CodeAct, which allows models to write and execute Python code.

This unlocks the ability to handle complex tasks such as symbolic reasoning, computational analysis, and tool interaction [41].

Compared to the Chain of Thought (CoT) approach used in ReAct, CodeAct offers better numerical accuracy and greater flexibility [45].

Main features of CodeAct:

- Programming capabilities: agents can perform dynamic computational analysis.

- Tool integration: supports interaction with various external tools, enhancing versatility in problem-solving.
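The core idea of CodeAct, letting the model answer with code instead of prose, can be shown with a tiny executor. This is a simplified sketch and not the ODS-v2 implementation: `run_generated_code` is a hypothetical helper, the "model output" is a hard-coded string, and plain `exec` offers no sandboxing, so a real agent would need proper isolation.

```python
import contextlib
import io


def run_generated_code(code: str) -> str:
    """Execute model-written Python and return what it printed, or the error."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            # Fresh globals per run; this is NOT a security boundary.
            exec(code, {"__name__": "__codeact__"})
    except Exception as exc:
        # The error text becomes the observation, so the agent can retry.
        return f"{type(exc).__name__}: {exc}"
    return buffer.getvalue().strip()


if __name__ == "__main__":
    # Pretend the model wrote this snippet to answer an arithmetic question.
    generated = "print(sum(n * n for n in range(1, 11)))"
    print(run_generated_code(generated))
```

Delegating the arithmetic to the interpreter is what gives this approach its numerical accuracy: the model only has to write the expression, not compute the result token by token.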

Comparison and evolution

ODS-v2 (CodeAct) represents a major step forward in adaptive research capabilities compared to ODS-v1 [35].

This evolution demonstrates the constant refinement of AI-powered search strategies, thanks to the integration of advanced agents.

Open source democratization

ODS sets itself apart by democratizing access to advanced AI tools that rival proprietary solutions such as Perplexity’s Sonar Reasoning Pro or ChatGPT Deep Search from OpenAI, while remaining open source [38].

ReAct and CodeAct combined

Together, ReAct and CodeAct form the backbone of OpenDeepSearch’s advanced research capabilities.

- ReAct provides dynamic adaptability.

- CodeAct enhances flexibility and precision with executable code.

Combined, they highlight the innovation of AI-driven research strategies within ODS.

Overview: Local Deep Research vs OpenDeepSearch

- OpenDeepSearch (ODS): distinguished by its advanced AI agent strategies (ReAct, CodeAct), it excels in automated workflows and complex research tasks. Its sophisticated algorithms ensure high accuracy in deep research operations. It supports a wide range of sources with seamless data integration, and its ability to run locally reinforces privacy and performance. However, it is more complex to install than Local Deep Research.

- Local Deep Research (LDR): designed with local processing as its priority, it is optimized for resource efficiency on personal machines. Its accuracy is maintained through community contributions and frequent updates. LDR supports a wide variety of sources and allows custom data integration. It also provides task scheduling and predefined search parameters to streamline workflows. While still technical, it is generally easier to install than ODS.

Comparative table

| Feature | OpenDeepSearch | Local Deep Research |
|---|---|---|
| Automation | Advanced task & resource management | Task scheduling & predefined settings |
| Accuracy | High precision via advanced algorithms | Maintained through updates & community input |
| Source variety | Integrates multiple formats & external databases | Supports diverse file types + custom sources |
| Local processing | Independent operation, boosting privacy & speed | Optimized for personal hardware, efficient use of resources |

Both OpenDeepSearch and Local Deep Research deliver strong solutions in local automation, accuracy, and data variety.

- ODS excels through advanced algorithms and broad data integration.

- LDR shines with community-driven updates and optimization for personal hardware.

Each offers unique advantages, making both valuable additions to any AI research toolkit.

Comparison and analysis

Performance overview

- Local Deep Research (LDR): achieved about 95% accuracy on the SimpleQA benchmark using GPT-4.1-mini combined with SearXNG, demonstrating solid performance in question-answering tasks [1]. It searches effectively across multiple sources, including arXiv, PubMed, GitHub, the web, and private documents, making it highly practical for diverse research scenarios.

- OpenDeepSearch (ODS): Sentient claims it outperforms Perplexity and ChatGPT Search in terms of accuracy, while also offering greater transparency and control [2]. The benchmark results published on arXiv support this on FRAMES, where ODS-v2 scores 75.3% against 65.6% for GPT-4o Search Preview, although GPT-4o Search Preview stays slightly ahead on SimpleQA (90.0% vs 88.3%). Its main innovation lies in extending reasoning capabilities through ReAct and CodeAct, going beyond what other open source frameworks currently provide.

Performance indicators table

| Indicator | Local Deep Research | OpenDeepSearch (ODS-v2) |
|---|---|---|
| SimpleQA accuracy | ~95% [1] | 88.3% |
| FRAMES accuracy | N/A | 75.3% |
| Source integration | arXiv, PubMed, GitHub, web | Multiple proprietary & open integrations |
| Performance claim | High accuracy in QA | Outperforms Perplexity & ChatGPT |
| Benchmarks available | Yes (SimpleQA) | Yes (arXiv benchmark) |

⚠️ Note: comparing benchmarks directly is difficult, since testing procedures differ.

Critical analysis

- Quantitative data: Local Deep Research provides concrete results (e.g., 95% on SimpleQA), which makes its effectiveness easier to evaluate. This transparency is a strong advantage when assessing real-world performance.

- ODS claims: OpenDeepSearch asserts higher performance than proprietary tools, and these claims are backed by the benchmark results published on arXiv. However, its metrics are less standardized, which makes direct comparison harder.

- Adoption and maturity: LDR is already functional with established benchmarks. ODS, meanwhile, has a reported waiting list of 1.75 million users, signaling massive interest but also limited accessibility.

Suitability for use cases

When evaluating sentient-agi/OpenDeepSearch (ODS) and LearningCircuit/local-deep-research (LDR) for different research scenarios, it’s important to consider their unique strengths.

Complex research tasks

- Local Deep Research: well-suited for researchers needing agents that can handle multi-step tasks. Its design focuses on effectively synthesizing large volumes of online information [123], making it ideal for projects requiring dynamic reasoning and adaptive techniques in complex data environments.

- OpenDeepSearch: excels in orchestrating multiple AI agents for enhanced reasoning and complex queries. Its modular architecture makes it a strong contender against proprietary platforms, delivering deep semantic research capabilities.

Transparency and control

- ODS shines where transparency and user control are essential. It provides researchers with greater visibility over their data processes, aligning with growing demands for data privacy and customizability [124].

- LDR, while efficient and practical, emphasizes simplicity and ease of use rather than deep transparency layers.

Performance vs transparency

Both tools achieve performance levels comparable to proprietary AI search engines like Perplexity or ChatGPT Search [124].

However, for researchers prioritizing open source advantages, such as customization, autonomy, and transparency, ODS and LDR are compelling alternatives.

Limitations and trade-offs

A key drawback identified in both solutions is the potential for verbosity and higher latency when models evaluate too many scenarios, which can result in information overload [125].

Researchers must weigh this trade-off against the benefits depending on their specific workflows and priorities.

In summary: both tools deliver high-performance AI research capabilities, but they serve different priorities in practice.

Conclusion

Final thoughts

When comparing the two open source solutions, OpenDeepSearch (ODS) and Local Deep Research (LDR), distinct strengths emerge, each addressing specific user needs.

- ODS stands out for its semantic search capabilities, integrating deep learning models for advanced query processing. This makes it an excellent choice for applications requiring context-aware and precise search functions. However, its setup is more technical and time-intensive.

- LDR, on the other hand, is praised for its user-friendly interface and focus on efficiency in local research workflows. It is particularly suitable for environments where simplicity and fast access to information are priorities. Its lightweight design ensures smooth operation, even on systems with limited hardware resources.

Improvement prospects

Looking ahead, integration with frameworks like Alibaba’s MNN [127] opens up promising possibilities.

- For ODS, MNN’s ultra-fast performance and support for multimodal LLMs on Android could significantly accelerate searches and extend cross-platform compatibility.

- For LDR, leveraging MNN’s lightweight architecture would further improve portability and efficiency across devices.

Recommendations

- For advanced research needs: users requiring sophisticated semantic search features should consider OpenDeepSearch. Its potential MNN integration could enhance performance even further, making it a powerful tool for complex AI-driven tasks.

- For user-focused research tools: those prioritizing simplicity and efficiency should choose Local Deep Research. Coupling it with MNN could expand portability without compromising functionality.

Verdict

Both projects deliver valuable open source solutions, each tailored to different use cases. With the addition of frameworks like MNN, their capabilities could expand even further, improving both performance and accessibility across a variety of applications.

That said, both tools require some technical expertise to install and operate:

- LDR is more accessible with a smoother learning curve, making it the preferred entry point.

- ODS requires more setup time but offers an innovative architecture that excels when data precision and research quality are the top priorities.

Since both are open source AI frameworks, nothing prevents you from trying both. For beginners, Local Deep Research is the best starting point. Once familiar with this type of tool, transitioning to OpenDeepSearch can unlock its advanced capabilities.
