What is a Vector Database? Understanding Its Role in AI and RAG Models

In 2025, vector databases have become one of the core pillars of modern artificial intelligence. Once confined to semantic search and natural language processing, they are now the semantic backbone of contextual AI, enabling models to access memory, reasoning, and meaning comprehension, capabilities that traditional databases could never provide efficiently.

Their function is based on the storage of embeddings, or vector representations, which encode the meaning of unstructured data such as text, images, code, or audio. These vectors allow AI systems to compare and retrieve information by meaning, rather than by simple keyword matching. This principle powers today’s semantic search engines, AI assistants, and autonomous agents capable of understanding the context of a question or conversation.

In modern architectures, vector databases play a central role in RAG (Retrieval-Augmented Generation) systems, a method that combines the linguistic power of Large Language Models (LLMs) with an external vector memory. The model no longer relies solely on its training data, but queries its database to find the most relevant information before generating a contextualized response.

Today, this technology forms the foundation of the most advanced AI ecosystems. Tools like Milvus, Qdrant, Pinecone, and Chroma power applications ranging from RAG pipelines to long-term AI memory, multimodal search, and anomaly detection in complex datasets.

In short, the vector database is no longer just an infrastructure layer, but the semantic core of modern AI systems. Without it, natural interactions, contextual understanding, and persistent memory would be impossible, all of which are essential to what we now call agentic AI.

What is a Vector Database?

A vector database is a data management system designed to store and search numerical vectors, known as embeddings. These embeddings are mathematical representations that encode the meaning of unstructured content—text, image, audio, or code—within a multidimensional space.

Each piece of data is transformed into a vector through an embedding model such as nomic-embed, e5-small, or text-embedding-ada-002. These models capture the semantics of words and sentences rather than their literal forms. As a result, two documents expressing the same idea with different wording will produce vectors located close to each other in this semantic space.

Unlike traditional databases that rely on exact matches or string comparisons, a vector database operates through semantic similarity. It can associate queries like “how does a GPU work” and “graphics processor explanation”, even if no words are identical.

This property makes it a cornerstone for semantic search, context-aware AI agents, and intelligent recommendation systems. Vector databases allow large language models (LLMs) to reason using data not included in their internal weights, by consulting relevant external sources through Retrieval-Augmented Generation (RAG).

In practice, the vector database acts as a semantic memory that connects a model’s linguistic capacity to external knowledge stored in documents, archives, or domain-specific databases. This approach enables a new generation of AI-powered tools, from research assistants and writing copilots to document analysis engines, all capable of leveraging meaning instead of syntax.

Open-source initiatives like Chroma, Qdrant, and FAISS make this technology accessible, allowing developers and researchers to integrate vector search directly into their AI pipelines. These tools are the foundation of modern RAG systems used by vLLM, Ollama, and Open WebUI, which combine high performance, local privacy, and contextual precision.

How a Vector Database Works

The functioning of a vector database follows a precise sequence of steps that enable an artificial intelligence system to index, compare, and retrieve information according to semantic proximity.

1. Embedding generation

It all starts with converting raw data (text, image, code, or audio) into numerical vectors, known as embeddings. An embedding model, such as text-embedding-ada-002, nomic-embed-text, or e5-base, translates the meaning of the content into a series of floating-point values, usually spanning hundreds or thousands of dimensions. Each dimension encodes a semantic feature—tone, topic, structure, or context—allowing the machine to understand the similarity in meaning between two items.

2. Vector indexing and organization

Once the embeddings are generated, they are stored in a vector database such as FAISS, Chroma, Qdrant, or Milvus. These systems use optimized index structures like HNSW graphs or product quantization to accelerate similarity searches across millions of vectors. The goal is simple: retrieve the most semantically similar vectors in milliseconds for a given query.

This approach powers large-scale systems like Pinecone, Zilliz, and enterprise deployments of Milvus, which can handle billions of embeddings with minimal latency.

3. Semantic search

When a user submits a question, it is transformed into an embedding. The vector database then calculates the distance between this query vector and those in the index, identifying the most semantically relevant passages. This semantic retrieval process replaces traditional keyword search, which is often limited and sensitive to phrasing.

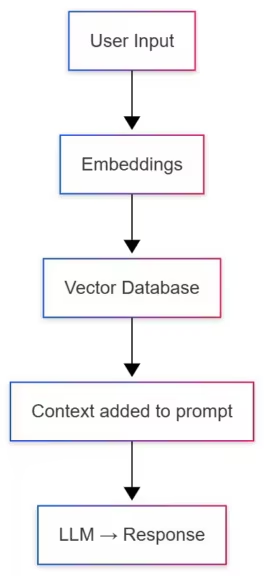

4. Supplying context to the AI model

The selected passages are then sent to the language model (LLM), executed for example via vLLM or Ollama. The model incorporates these excerpts into its prompt before generating a contextualized response. This is the essence of Retrieval-Augmented Generation (RAG): linking a model’s linguistic reasoning to the relevance of a vector knowledge base.

5. Result: a knowledge-connected AI

Through this synergy, an AI system can accurately answer questions about recent, specialized, or private topics without requiring retraining. The vector database acts as an external memory system, extending the model’s comprehension with temporal continuity and contextual depth.

Main Open-Source Solutions

The open-source ecosystem has played a crucial role in democratizing vector databases. By 2025, several free, efficient, and self-hostable solutions allow researchers, developers, and enterprises to benefit from semantic search and vector storage without relying on the cloud.

| Tool | License | Key features | Strengths |

|---|---|---|---|

| FAISS | MIT (Meta) | C++/Python library | Extremely fast, ideal for large-scale data |

| Chroma | Apache 2.0 | Lightweight Python database | Ideal for local, simple, or experimental use. Compatible with LangChain, LlamaIndex, and Open WebUI. |

| Qdrant | Open source (Rust) | REST and gRPC API | Highly scalable, supports filters and metadata. Ideal for advanced integration with LangChain, LlamaIndex, and Open WebUI. Production Ready |

| Milvus | Apache 2.0 | Distributed architecture | High performance, production-ready |

FAISS

Developed by Meta AI, FAISS (Facebook AI Similarity Search) is a C++ library with Python bindings. It remains a reference in large-scale vector similarity search. FAISS is known for its speed, memory efficiency, and GPU compatibility, making it ideal for research environments and embedded systems requiring ultra-low latency.

Chroma

Chroma is a Python-based vector database designed for simplicity and direct integration with modern AI frameworks such as LangChain, LlamaIndex, and Open WebUI. It is perfect for local deployments and RAG prototypes thanks to its clean interface and automatic metadata handling. Chroma is the most accessible choice for vLLM or Ollama users experimenting with contextual chat using their own documents.

Qdrant

Built with Rust, Qdrant provides complete REST and gRPC APIs, ultra-fast vector search, and excellent scalability. It combines performance and flexibility, supporting advanced filtering and hybrid search (text + vector). Qdrant is increasingly used in industrial applications where speed and precision are critical, such as cybersecurity or financial data analysis.

Milvus

Designed for large-scale environments, Milvus is a distributed vector database under the Apache 2.0 license. It offers high fault tolerance, native clustering, and optimizations for multi-GPU architectures. Milvus is often paired with its commercial variant Zilliz Cloud, which provides a managed infrastructure for billions of embeddings.

Which solution should you choose?

The choice depends on your use case:

- FAISS: high-performance local search and prototyping

- Chroma: fast integration with RAG pipelines or Open WebUI

- Qdrant: scalable backends written in Rust

- Milvus: distributed setups and massive data volumes

These open-source tools form the backbone of retrieval-augmented generation infrastructures, allowing both cloud and local models (via vLLM, Ollama, or LM Studio) to access up-to-date knowledge without retraining.

When AI Meets Vector Databases: The Role of RAG

A vector database alone does not “understand” language, and an LLM alone does not know your documents. Together, they form the foundation of Retrieval-Augmented Generation (RAG).

RAG combines the linguistic power of the model with the memory of a vector database. When a query is made, the system vectorizes the question, searches for the most relevant passages, then injects them into the model’s prompt. The result is a contextual AI capable of providing precise, up-to-date answers based on your own data.

This architecture powers tools such as “Chat with your documents” and enterprise document copilots. Frameworks like LangChain and LlamaIndex make such integrations straightforward.

From RAG to Agentic RAG: The Rise of Intelligent Agents

The natural evolution of RAG has led to a new generation of intelligent AI agents, capable of reasoning, planning, and interacting autonomously with their environment. In this transformation, the vector database is no longer just a technical component but becomes the cognitive memory at the core of an agent’s reasoning.

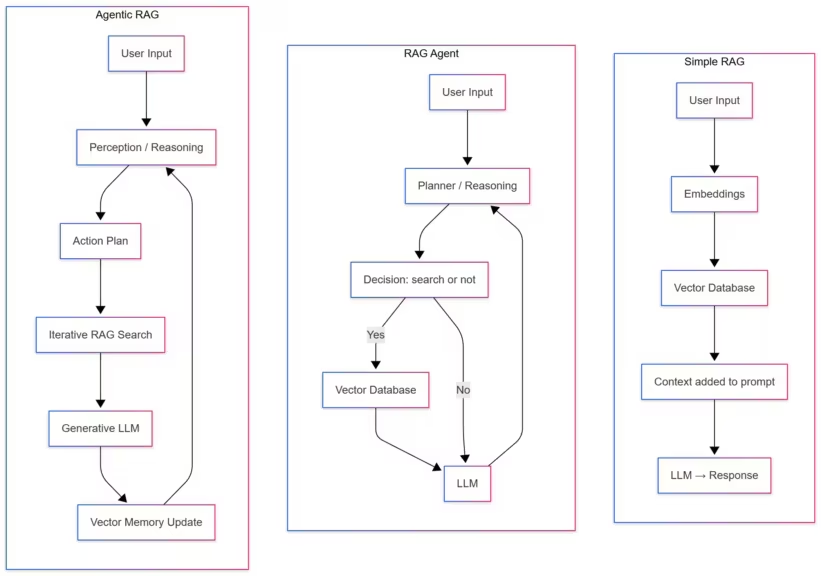

1. Classic RAG: a linear system

Traditional RAG follows a sequential architecture. A query is vectorized, the database retrieves the most relevant passages, and the model generates a response. This approach is effective but static: it does not take initiative or plan actions. It works well for focused use cases like automated FAQs, documentation assistants, or topic-based search engines.

2. Agentic RAG: reasoning and decision-making

The RAG Agent goes one step further by integrating a planning engine. The agent decides when and how to query the vector database, whether to summarize, compare, or trigger a new search. It can chain multiple RAG queries in a single reasoning process. This approach, implemented in frameworks such as LangGraph and OpenDevin, produces assistants capable of analyzing large document corpora, evaluating sources, and creating structured summaries.

Also read : LangGraph: the open-source backbone of modern AI agents

3. The Agentic RAG: living memory

Agentic RAG represents a new generation of autonomous and self-learning systems. Here, the vector database becomes a dynamic memory space. The agent writes back what it learns, stores the results of its own reasoning, and reuses this information to improve future decisions.

This cycle—observation, reasoning, action, memory update—forms a closed cognitive loop, similar to adaptive human behavior. It is the foundation of what is now called Agentic AI, where knowledge is no longer static but constantly evolving.

4. A key architecture for next-generation AI

Agentic RAG enables advanced use cases:

- Autonomous monitoring systems that continuously index and analyze information streams

- Self-updating copilots that maintain internal knowledge without human input

- Industrial AI systems that adapt behavior to operational context

According to Hackernoon, vector-database-based architectures have become the standard for building agents with persistent and contextual memory. They allow models to retain reasoning traces, learn over time, and link decisions to coherent knowledge.

In short, Agentic RAG transforms the relationship between models and data. The vector database evolves from a static repository into a living memory that captures the AI’s experience itself.

Open WebUI: Bringing Vector Databases to Everyone

The rise of vector databases is no longer limited to engineers and researchers. Thanks to tools like Open WebUI, the power of local RAG is now accessible to everyone. This open-source interface transforms complex AI integration into a simple, visual experience—no code required.

A dashboard for contextual AI

Open WebUI acts as a bridge between local AI models such as Ollama, vLLM, or LM Studio, and vector databases like Chroma, Qdrant, or LanceDB. Users can import their files (PDFs, Markdown notes, technical documents), launch automatic indexing, and query their database through a chat-like interface.

This integration simplifies semantic search and RAG pipelines. Each document is transformed into embeddings, stored in a vector database, and injected into the model’s context during generation. In practice, this enables users to “chat” with their own archives while keeping data completely local.

Privacy and local control

The open-source community quickly adopted Open WebUI for one main reason: everything runs locally. Documents, embeddings, and queries stay on the user’s machine, ensuring complete data privacy. This aligns with the growing trend of local AI, where the performance of recent models allows for advanced inference without any cloud dependency.

Frameworks like LangChain and LlamaIndex already offer native integrations with Open WebUI, making it easy to create custom RAG assistants that query internal data. Combined with Chroma (often used as the default vector engine), this setup can deploy a fully private document assistant in minutes.

A key tool for RAG democratization

By making semantic search accessible, Open WebUI plays a role similar to Ollama for local LLMs: it democratizes AI deployment on personal machines. For writers, researchers, or developers, it provides a hands-on way to understand how a vector database works and how RAG improves response quality.

Open WebUI is now a standard interface in open-source projects combining LLMs and local vector search, as confirmed by community feedback on GitHub.

Should You Build Your Own Local Vector Database?

The idea of building a personal vector database is gaining traction among authors, developers, and researchers. But is it really worth indexing all your data for semantic search? The answer depends on your needs, data volume, and desired level of control.

Why build a local vector database

Creating a local vector database allows you to leverage your own knowledge base. Every article, technical document, or research note can be converted into embeddings and stored as vectors. This forms a personal semantic memory that can be queried by a local model such as vLLM or Ollama.

The benefits are immediate:

- Full data privacy: nothing leaves your machine

- Editorial consistency: the AI retrieves content aligned with your own tone and terminology

- Productivity boost: instantly reuse existing knowledge or past writings

For instance, a technical writer can query their local vector database to retrieve an explanation, hardware comparison, or conclusion previously written in another article.

Limitations to consider

Indexing all your files is not practical. Each document converted into embeddings consumes disk space, compute, and memory. An oversized database can quickly produce noisy or less relevant results.

A better approach is to organize your data into thematic knowledge bases:

- Articles and publications for editorial content

- Technical documentation for structured data

- Notes and projects for research and analysis

This segmentation simplifies maintenance and improves search relevance.

Recommended tools and integrations

Solutions like Chroma and Qdrant make it easy to create local vector databases. They can connect to Open WebUI, LangChain, or LlamaIndex, forming a complete RAG pipeline:

Documents → Embeddings → Vector Database → RAG → AI Response

This architecture is now considered the backbone of local AI, combining independence, performance, and data privacy.

Conclusion: Vector Databases as the Semantic Memory of AI

Vector databases have become the invisible backbone of modern AI. They are more than a search tool—they represent the semantic memory that allows AI models and intelligent agents to reason, understand context, and maintain continuity.

Their role is central in RAG (Retrieval-Augmented Generation) and Agentic RAG architectures, connecting human knowledge with computational power. They give AI persistent memory, contextual understanding, and the ability to evolve with new data—without retraining.

Thanks to the open-source ecosystem—Chroma, FAISS, Qdrant, Milvus, and Open WebUI—this technology, once reserved for cloud giants, is now accessible to everyone. It powers both local research assistants and autonomous enterprise agents, forming the foundation of personal and sovereign AI capable of running offline.

Ultimately, the vector database is to artificial intelligence what memory is to the human mind: a space for continuity, meaning, and learning. Without it, models remain static and disconnected from reality. With it, they become contextual, adaptive, and truly intelligent.

The global vector database market is expanding rapidly, driven by the explosion of generative AI, cloud computing, and data-intensive industries. According to reports from The Business Research Company, Polaris Market Research, and SNS Insider, the market is projected to reach between $3.04 and $3.2 billion in 2025, up from $2.46 billion in 2024.

Ideas to Explore with Vector Databases

Beyond RAG and AI agents, vector databases open many practical possibilities. Here are some areas worth exploring:

- Document search: create an assistant that retrieves relevant passages from thousands of internal files

- Customer support: power chatbots with company documentation for faster and more consistent answers

- Editorial consistency: help writers maintain tone and vocabulary using previous content

- Trend monitoring: automatically track sector trends by indexing articles, reports, or publications

- Software engineering: query a vector database containing source code and API documentation

These are only a few examples. Vector databases offer a vast experimentation field where every domain—from journalism to scientific research—can build its own semantic memory to better leverage its knowledge.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!