AI Weekly News: Infrastructure, Chips, Agents and Regulation

This report covers AI developments between December 30, 2025, and January 27, 2026. The period was marked by a projected record of $475 billion in Big Tech capital expenditures for 2026 and by the emergence of autonomous agents. Its developments range from massive infrastructure expansion to the integration of Google Gemini into Apple's Siri, and together they point to a new era of agentic intelligence. Industry leaders are positioning 2026 as the year of practical adoption, when raw compute power is expected to turn into measurable enterprise productivity.

This page displays AI news covering the specified time period. Full archives from previous weeks are available at the bottom of this page or on the page that lists all AI news, both past and current.

The $475B Infrastructure Surge: Capex as a Competitive Moat

Major technology companies reported record AI capital expenditures during the Q4 2025 and Q1 2026 earnings cycle, signaling a shift toward long-term infrastructure dominance. Combined capex from Microsoft, Meta, Amazon, and Google is projected at approximately $475 billion for 2026, more than double the 2024 level. This commitment acts as a competitive moat: it gives these companies control over the compute needed to train next-generation models.

Big Tech Earnings & The $475B Milestone

Microsoft, Meta, and Alphabet have provided guidance that underscores the intensifying race for AI infrastructure. Meta announced capital expenditure guidance of $153 to $160 billion for 2026, representing a year-over-year increase of up to 36%. Microsoft’s Q1 FY26 capex reached $34.9 billion, exceeding its prior guidance of $30 billion, driven primarily by sustained demand for Azure AI workloads.

| Company | Capex 2025 (Est.) | Capex 2026 (Proj.) | Growth (YoY) | Strategic Impact |
|---|---|---|---|---|
| Amazon (AWS) | ~$125B | $125B+ | +61% (vs 2024) | AI sovereignty (GovCloud) and Trainium chips |
| Alphabet (Google) | ~$91-93B | $115B+ | ~25% | TPU expansion and defense of the Search business model |
| Microsoft | ~$95B | $100B+ | +5% (vs 2025) | Azure AI infrastructure and OpenAI integration |
| Meta | ~$72B | ~$100B | +36% | Llama training and advertising transformation |
| Total Hyperscalers | ~$443B | $602B* | +36% | 75% of budget dedicated to AI infrastructure |
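The growth column can be sanity-checked by recomputing year-over-year change from the two capex columns. The sketch below is a simple illustration, not the source's methodology: it treats the "+" figures as point values and takes $92B as the midpoint of Alphabet's ~$91-93B range (both assumptions).

```python
def yoy_growth_pct(previous: float, current: float) -> float:
    """Year-over-year growth in percent: (current - previous) / previous * 100."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return (current - previous) / previous * 100


# Capex in billions of USD from the table; Alphabet uses the assumed $92B midpoint,
# and "+" figures such as "$115B+" are treated as exact values (an assumption).
rows = {
    "Alphabet (Google)": (92, 115),
    "Microsoft": (95, 100),
    "Total Hyperscalers": (443, 602),
}

for name, (capex_2025, capex_2026) in rows.items():
    print(f"{name}: {yoy_growth_pct(capex_2025, capex_2026):+.1f}%")
```

Under these assumptions the script prints +25.0% for Alphabet, +5.3% for Microsoft and +35.9% for the total, in line with the rounded figures in the table.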

Amazon’s $50B US GovCloud AI Infrastructure

Amazon has committed $50 billion to develop AI and high-performance computing infrastructure specifically for U.S. federal government customers. This initiative targets Top Secret, Secret, and GovCloud environments, with plans to deploy approximately 1.3 gigawatts of AI compute capacity. Construction is slated to begin in 2026, with the infrastructure becoming fully operational by 2028 to support models like Anthropic Claude and Amazon Nova.
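Dividing the commitment by the planned capacity gives a rough capital-intensity figure. This is a back-of-the-envelope sketch: it assumes the entire $50 billion is attributable to the ~1.3 GW of compute capacity, which the announcement does not break down.

```python
def capex_per_gigawatt(capex_usd_billion: float, capacity_gw: float) -> float:
    """Capital cost in billions of USD per gigawatt of compute capacity."""
    if capacity_gw <= 0:
        raise ValueError("capacity_gw must be positive")
    return capex_usd_billion / capacity_gw


# Amazon's announced figures: $50B for roughly 1.3 GW of AI compute capacity.
intensity = capex_per_gigawatt(50, 1.3)
print(f"~${intensity:.1f}B per GW of AI compute capacity")
```

Under that assumption the result is roughly $38.5 billion per gigawatt, a useful yardstick when comparing announcements that quote both dollars and power capacity.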

xAI’s $20B Series E: Building the 1M GPU Supercomputer

xAI secured a record $20 billion Series E funding round to facilitate the expansion of its compute infrastructure. This capital supports the deployment of over 1 million H100 GPU equivalents across its supercomputing facilities, aimed at accelerating the development of Grok 5. The investment highlights the massive scale required for frontier model development in the current market.

Supply Chain Dominance: The New Chip Hierarchy

The semiconductor industry is undergoing a structural reorientation as AI infrastructure requirements begin to eclipse traditional consumer electronics demand. This shift is most evident in the changing customer profiles of major foundries, where high-performance computing now represents the primary growth engine. For CTOs, this transition necessitates a more granular approach to hardware procurement and GPU throughput planning.

Nvidia Overtakes Apple: TSMC’s New Center of Gravity

Nvidia has surpassed Apple to become the largest customer of Taiwan Semiconductor Manufacturing Company (TSMC). Analyst estimates project that Nvidia will generate $33 billion, or 22% of TSMC’s total revenue, in 2026. In comparison, Apple is expected to contribute approximately 18%, reflecting a significant pivot in the global semiconductor supply chain toward AI-centric hardware.
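The two percentages imply a rough size for TSMC's 2026 revenue. The sketch assumes both the Nvidia and Apple shares are measured against the same 2026 total; since they are analyst estimates, the derived dollar figures are only indicative.

```python
def implied_total(part: float, share: float) -> float:
    """Given one component and its share of the whole (0 < share <= 1), return the whole."""
    if not 0 < share <= 1:
        raise ValueError("share must be in (0, 1]")
    return part / share


# Nvidia: $33B estimated at 22% of TSMC's 2026 revenue.
tsmc_2026 = implied_total(33, 0.22)
# Apple: ~18% of the same (assumed) total.
apple_2026 = tsmc_2026 * 0.18
print(f"Implied TSMC 2026 revenue: ~${tsmc_2026:.0f}B; implied Apple spend: ~${apple_2026:.0f}B")
```

This yields roughly $150 billion of total revenue and about $27 billion attributable to Apple.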

GPU Throughput & Scarcity: Strategic Implications for CTOs

TSMC reported that high-performance computing (HPC) sales constituted 55% of net revenue in Q4 2025, up from 40% in the previous year. CEO C.C. Wei forecasts continued HPC growth in the mid-to-high 50% range through 2029. For enterprise leaders, this concentration of compute power suggests that GPU availability will remain a critical bottleneck, requiring sophisticated strategies for inference stack optimization and cost-per-token management.
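Cost-per-token management starts from a simple relationship: the hourly cost of a GPU divided by the number of tokens it actually produces in that hour. The sketch below uses entirely hypothetical inputs (GPU price, throughput, utilization); none of these numbers come from the article or from any vendor price list.

```python
def cost_per_million_tokens(gpu_hourly_usd: float, tokens_per_second: float,
                            utilization: float = 1.0) -> float:
    """USD cost to generate one million tokens on a single GPU.

    gpu_hourly_usd: hourly price of one GPU (hypothetical input).
    tokens_per_second: sustained throughput of that GPU while busy.
    utilization: fraction of each hour the GPU actually serves requests.
    """
    if tokens_per_second <= 0 or not 0 < utilization <= 1:
        raise ValueError("throughput must be positive and utilization in (0, 1]")
    tokens_per_hour = tokens_per_second * 3600 * utilization
    return gpu_hourly_usd / tokens_per_hour * 1_000_000


# Hypothetical inputs: $2.00/hour GPU, 1,000 tokens/s, busy 50% of the time.
print(f"${cost_per_million_tokens(2.00, 1_000, 0.5):.2f} per 1M tokens")
```

With these inputs the cost is about $1.11 per million tokens; at full utilization it falls to about $0.56. Halving utilization doubles the cost per token, which is why keeping scarce GPUs busy matters as much as their hourly price.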

The Agentic Transition: From Chatbots to Digital Employees

The AI industry is moving beyond simple conversational interfaces toward autonomous agentic systems capable of executing complex, multi-step tasks. These systems, described as digital employees, integrate deeply with existing software ecosystems to perform actions on behalf of the user. This transition is supported by new open standards and personalized intelligence frameworks that allow models to access local and cloud-based data securely.

Apple + Google: Rebuilding Siri with Gemini

Apple and Google have formed a strategic partnership to deploy Gemini models as the foundational layer for a redesigned Siri. This integration, expected to debut in beta via iOS 26.4 in February 2026, will feature improved on-screen content understanding and personalized context awareness. Privacy protections include the execution of Gemini models on Apple’s Private Cloud Compute infrastructure, rather than standard public cloud servers.

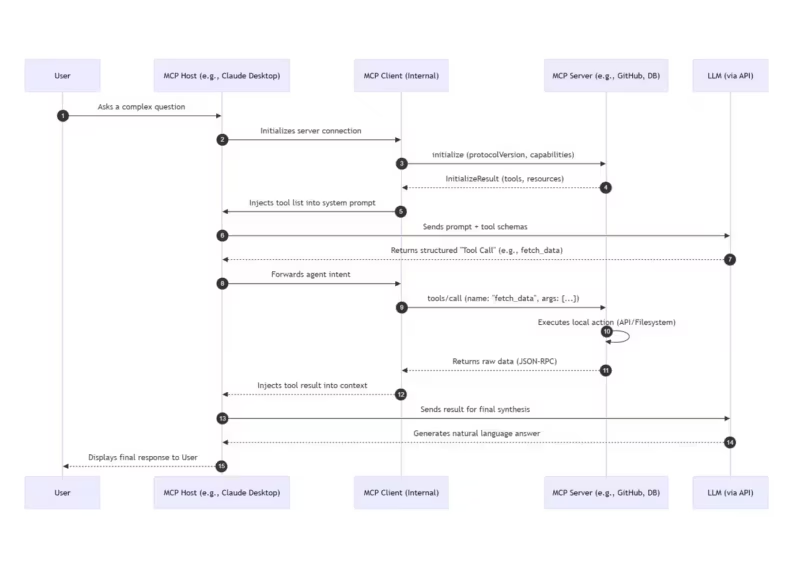

Anthropic Claude Cowork & The MCP Open Standard

Anthropic announced Claude Cowork, a general-purpose AI agent designed for knowledge workers to automate file organization and data transformation. The tool utilizes the Model Context Protocol (MCP), an open standard that enables authenticated, interactive access to third-party services like Slack, Canva, and Figma. Developers can leverage MCP to build agents that navigate web interfaces and execute workflows with high autonomy.
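MCP messages are JSON-RPC 2.0 requests: a client discovers a server's tools with `tools/list` and invokes one with `tools/call`, passing the tool `name` and its `arguments`. The sketch below only builds those messages with the Python standard library. It is not Claude Cowork's code, it omits the `initialize` handshake and the transport (stdio or HTTP) that a real MCP session requires, and the `search_messages` tool and its arguments are hypothetical.

```python
import json
from itertools import count
from typing import Any, Optional

# Monotonically increasing request ids; JSON-RPC matches responses to requests by id.
_request_ids = count(1)


def jsonrpc_request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Discover which tools an MCP server exposes.
print(jsonrpc_request("tools/list"))

# Invoke one tool. "search_messages" and its arguments are hypothetical;
# real tool names and input schemas come from the server's tools/list response.
print(jsonrpc_request("tools/call", {
    "name": "search_messages",
    "arguments": {"query": "Q1 budget", "limit": 5},
}))
```

In a real integration, an MCP client library would handle the session lifecycle and transport; the point here is that each tool action an agent takes reduces to a small, inspectable message.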

Google Personal Intelligence: Deep Ecosystem Integration

Google’s new Personal Intelligence feature allows Gemini to connect directly with Gmail, Calendar, and Photos through single-tap authentication. This ecosystem integration enables the model to perform tasks like drafting reports from disparate emails or organizing photo libraries based on natural language queries. Concurrent updates to the Veo video model and Gemini API timelines further support this push toward a more capable, action-oriented assistant.

Governance & The Workforce: The Reality of “Practical Adoption”

As AI capabilities expand, the focus is shifting toward practical adoption of these tools in regulated industries and across the broader workforce. This phase is characterized by reasoned ethical frameworks and by efforts to quantify AI's impact on high-skill roles. As leading AI labs move into enterprise deployment, they increasingly treat safety, compliance, and helpfulness as priorities ranked in an explicit order.

Anthropic’s AI Constitution: CC0 Ethics as a Standard

Anthropic has released a comprehensive constitutional document that outlines the behavioral guidelines and training principles for its Claude models. The constitution prioritizes safety and ethics in a hierarchical order, moving away from rigid constraints toward a more reasoned framework. Released under a Creative Commons CC0 public domain license, the document serves as both an internal training guide and an external transparency artifact for the industry.

The Davos Forecast: AI vs. Software Engineering

At the World Economic Forum in Davos, Anthropic CEO Dario Amodei forecasted that AI models could assume the majority of software engineering responsibilities within 6 to 12 months. Amodei noted that engineers at Anthropic have already transitioned from manual coding to editing AI-generated output. He further predicted that 50% of white-collar jobs could be impacted within five years, although chip manufacturing constraints may temper this timeline.

OpenAI 2026: Bridging the Gap to Enterprise Implementation

OpenAI CFO Sarah Friar announced that the company’s strategic focus for 2026 is practical adoption across healthcare, science, and the enterprise. The company’s computational capacity has grown significantly, from 0.2 GW in 2023 to 1.9 GW in 2025, supporting an annualized revenue exceeding $20 billion. OpenAI is exploring diversified monetization models, including IP-based licensing, to maintain financial discipline while scaling its real-world implementation.
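Going from 0.2 GW to 1.9 GW is a 9.5x increase. Expressed as a compound annual growth rate over two years, assuming both figures refer to comparable points in 2023 and 2025, that is roughly 208% per year. A minimal sketch of the calculation:

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate as a fraction: (end / start) ** (1 / years) - 1."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end and years must be positive")
    return (end / start) ** (1 / years) - 1


# OpenAI compute capacity: 0.2 GW (2023) -> 1.9 GW (2025), i.e. two years.
growth = cagr(0.2, 1.9, 2)
print(f"{1.9 / 0.2:.1f}x overall, ~{growth:.0%} per year")
```

In other words, capacity roughly tripled each year over the period.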

Toward a Unified AI Stack

The convergence of record infrastructure investment, semiconductor dominance, and agentic software indicates that the AI industry has reached a stage of structural maturity. As Big Tech firms deploy their $475 billion capex, the focus for organizations will shift from experimenting with chatbots to integrating autonomous agents into core business logic. For developers and CTOs, mastering the Model Context Protocol and managing GPU throughput will be the primary technical hurdles in achieving practical adoption throughout 2026.

Archives of past weekly AI news

- AI News for December 22–30: Infrastructure, Chips, Agents and Regulation

- AI News for December 15–20: Latest Developments, Models, Policy Shifts and Industry Impact

- AI News for December 8–13: GPT-5.2 Benchmarks & Federal AI Regulation

- AI News December 1–6: Chips, Agents, Key Oversight Moves

- AI News November 24–29: Breakthrough Models, GPU Pressure, and Key Industry Moves

- AI News – Highlights from November 14–21: Models, GPU Shifts, and Emerging Risks

- AI Weekly News – Highlights from November 7–14: GPT-5.1, AI-Driven Cyber Espionage and Record Infrastructure Investment

- AI Weekly News from November 7, 2025: OpenAI, Apple and the Race for Infrastructure, Archive

- AI News from Oct 27 to Nov 2: OpenAI, NVIDIA and the Global Race for Computing Power

- AI News: The Major Trends of the Week, October 20–24, 2025

- AI News – October 15, 2025: Apple M5, Claude Haiku 4.5, Veo 3.1, and Major Shifts in the AI Industry

Sources and references

1. Tech media

- As reported by CNBC, Big Tech companies are projected to spend $475 billion on AI infrastructure in 2026. (2026-01-27)

- According to CNET, Apple will integrate Google Gemini with Siri starting in February. (2026-01-26)

- As reported by TechCrunch, Anthropic has launched interactive Claude apps for workplace tools. (2026-01-25)

- According to The Verge, OpenAI is focusing on “practical adoption” for 2026. (2026-01-19)

- As reported by Reuters, DeepSeek plans to launch a new coding model in February. (2026-01-09)

2. Companies

- According to the Google Blog, Google has introduced Personal Intelligence for Gemini. (2026-01-13)

- The documentation from Anthropic details the new constitution for Claude AI. (2026-01-20)

- According to About Amazon, Amazon is investing $50 billion in AI infrastructure for federal agencies. (2025-11-23)

3. Institutions

- As reported by Yahoo Finance, Anthropic’s CEO discussed AI replacing software engineers at the World Economic Forum. (2026-01-22)

4. Official sources

- The Oracle OCI Release Notes confirm the release of Grok 4.1 Fast. (2026-01-20)
