AI News for December 22–30: Infrastructure, Chips, Agents and Regulation

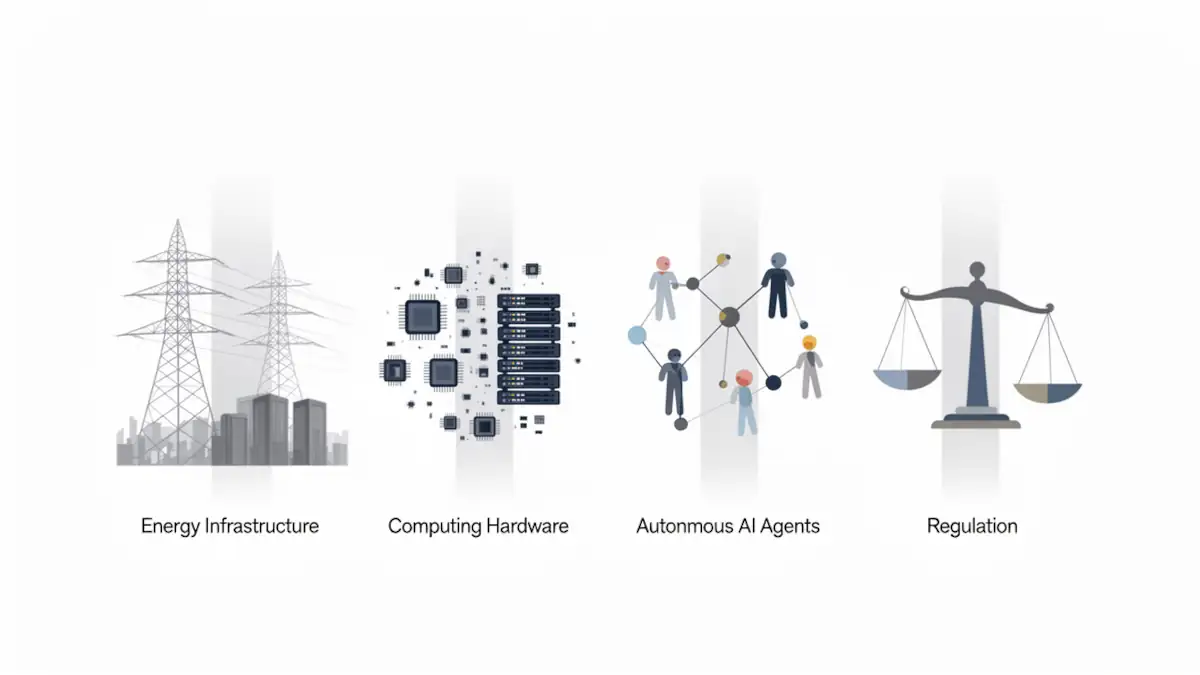

From December 22 to December 30, 2025, AI weekly news was shaped less by new models than by structural constraints across power, hardware, enterprise automation, and regulation. This article provides a verified, systems-level reference based exclusively on primary sources, with confirmed facts clearly separated from interpretation or regulatory uncertainty. The signal this week is consistent: AI progress is now gated as much by energy, inference economics, and law as by model capability.

Week at a glance: the facts that shaped AI this week

The numbers and dates that matter

On December 22, Alphabet agreed to acquire Intersect Power for $4.75 billion to secure renewable energy capacity for AI data centers expected to come online through 2028, as reported by Bloomberg and confirmed via Alphabet Investor Relations. During the same week, ByteDance confirmed plans to spend roughly $23 billion on AI infrastructure in 2026, including large-scale semiconductor procurement, according to Reuters and the Financial Times. On December 24, Nvidia entered a non-exclusive licensing and acqui-hire agreement with Groq focused on inference technology, per Groq’s announcement. On December 29, Meta agreed to acquire Manus, reported by Reuters and also covered by CGTN.

Regulatory developments were equally material. On December 24, Italy’s competition authority ordered Meta to suspend WhatsApp Business API terms that restricted rival AI chatbots, as reported by Reuters and covered by TechCrunch. On December 22, the U.S. Federal Trade Commission voted to vacate a prior consent order against Rytr, per the FTC release. That same day, the National Institute of Standards and Technology announced $20 million in funding for AI economic security centers operated by MITRE, according to NIST and MITRE. On December 23, multiple authors filed a copyright lawsuit against xAI, OpenAI, Google, Anthropic, Meta, and Perplexity over training data use, as reported by Reuters and covered by Engadget.

Synthesis table: AI week summary

| Company / Institution | Amount | Purpose | Date | Technical or Regulatory Impact |
|---|---|---|---|---|
| Alphabet (Google) | $4.75B | Acquire Intersect Power to secure renewable energy for AI data centers | Dec 22, 2025 | Long-term power availability for hyperscale AI infrastructure |
| ByteDance | ~$23B (planned) | AI infrastructure and semiconductor procurement (2026 capex) | Dec 23, 2025 | Sustained pressure on GPU supply and AI capacity planning |
| Nvidia / Groq | Not disclosed (license + acqui-hire) | License inference technology and hire Groq engineering team | Dec 24, 2025 | Focus on inference latency, throughput, and cost per token |
| Meta / Manus | Not disclosed | Acquire AI agent startup (“digital employees”) | Dec 29, 2025 | Shift toward enterprise AI agents and task automation |
| Italy Competition Authority (AGCM) | N/A | Interim order against WhatsApp AI restrictions | Dec 24, 2025 | Increased antitrust scrutiny on AI platform access |
| U.S. FTC / Rytr | N/A | Vacate prior consent order on AI-generated reviews | Dec 22, 2025 | Regulatory rollback signal for AI product enforcement |
| NIST / MITRE | $20M | Launch AI economic security centers | Dec 22, 2025 | Public investment in AI security and critical infrastructure |
| Authors vs. AI labs | N/A | Copyright lawsuit over AI training data | Dec 23, 2025 | Elevated legal risk around data provenance |

Infrastructure and energy constraints: AI meets the grid

Alphabet acquires Intersect Power to secure AI energy

On December 22, Alphabet agreed to acquire Intersect Power for $4.75 billion to secure a 10.8 GW pipeline of renewable energy assets intended to support future AI data centers, as detailed in the Alphabet Investor Relations announcement and reported by Bloomberg. The transaction excludes existing Texas and California assets and is designed to underpin expansion plans through 2028. These details are confirmed by company statements and contemporaneous reporting.

The strategic relevance is straightforward. Power availability has become a first-order constraint for AI infrastructure. Hyperscale data centers increasingly face grid congestion, permitting delays, and volatile electricity pricing. Alphabet’s move reduces exposure to these risks by integrating energy supply directly into long-term infrastructure planning.

For the broader ecosystem, this acquisition reinforces a shift toward energy-aware AI deployment. Securing predictable power capacity is now as critical as securing accelerators, particularly for multi-year infrastructure investments.

ByteDance’s ~$23B AI capex signals supply-side pressure

On December 23, reports confirmed that ByteDance plans to raise capital expenditure to RMB 160 billion, approximately $23 billion, in 2026, largely allocated to AI infrastructure and semiconductor purchases, according to Reuters and the Financial Times. Both reports connect the capex plan to AI infrastructure and chip procurement. The exact breakdown by hardware type has not been specified in the available reporting.

These figures represent confirmed spending intentions rather than speculative commitments. What remains uncertain is the precise hardware mix and deployment geography, as export controls and supplier availability continue to evolve. Nevertheless, the scale of the investment signals sustained pressure on global GPU supply.

Taken together, Alphabet’s energy acquisition and ByteDance’s capex plans illustrate a structural shift. AI capacity growth is increasingly constrained by access to power and hardware, not by software differentiation alone.

What changes for infrastructure teams

For infrastructure and data center teams, the implications are practical. Power procurement and site selection must be planned alongside compute expansion. Long-term energy contracts and renewable pipelines reduce exposure to grid volatility. Persistent GPU scarcity also argues for phased deployments and diversified supplier strategies.

Chips and inference strategy: specialization over brute force

Nvidia–Groq: licensing, acqui-hire, and inference focus

On December 24, Nvidia announced a non-exclusive licensing agreement with Groq, alongside the hiring of Groq’s core engineering team, including founder Jonathan Ross, as stated in Groq’s announcement. Nvidia described the transaction as a technology license and talent acquisition. Some financial outlets characterized the deal as being valued at approximately $20 billion, as in this CNBC coverage, a figure reflecting market characterization rather than a confirmed acquisition price.

What is confirmed is the structure. Groq remains an independent company under new leadership, while Nvidia gains access to inference-focused technology and engineering expertise, per the same Groq statement. This distinction matters for understanding Nvidia’s strategy without overstating consolidation.

The agreement underscores Nvidia’s growing emphasis on inference efficiency alongside its established position in training workloads. Rather than pursuing outright acquisition, Nvidia opted for flexibility through licensing and targeted integration.

Latency, throughput, cost per token: why this deal matters

Inference workloads increasingly dominate AI operating costs, particularly at scale. Latency, throughput, and cost per token have become primary optimization targets. Groq’s announcement frames the partnership around accelerating inference at scale, which aligns with these system-level concerns, per Groq’s release.

The Nvidia–Groq agreement points to a broader industry shift toward inference specialization. This does not imply immediate displacement of GPUs, but it highlights growing interest in heterogeneous inference stacks tailored to specific workloads and service-level requirements.

For developers, the takeaway is architectural rather than transactional. Choosing inference runtimes aligned with latency-sensitive or high-throughput use cases is becoming a competitive advantage.

What changes for developers and ML engineers

Developers and ML engineers should expect increased emphasis on benchmarking inference stacks under real workloads. Evaluating latency consistency, throughput scaling, and total cost of ownership will matter more than peak theoretical performance. This week reinforces the need to treat inference as a first-class system design concern.
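As a rough illustration of what such a benchmark summary could look like, the sketch below computes latency percentiles, throughput, and cost per million tokens from a handful of request measurements. Everything in it is an assumption made for illustration: the `RequestSample` and `summarize` names, the nearest-rank percentile method, the hourly cost figure, and the sample data are hypothetical and do not come from Nvidia, Groq, or any vendor.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestSample:
    latency_s: float    # wall-clock time for one request, in seconds
    output_tokens: int  # tokens generated for that request


def percentile(values, pct):
    """Nearest-rank percentile (pct in 0-100) of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples, wall_clock_s, hourly_cost_usd):
    """Summarize one benchmark run.

    wall_clock_s: total duration of the run (requests may overlap).
    hourly_cost_usd: hypothetical all-in hourly cost of the serving stack.
    """
    latencies = [s.latency_s for s in samples]
    total_tokens = sum(s.output_tokens for s in samples)
    run_cost = hourly_cost_usd * wall_clock_s / 3600
    return {
        "p50_latency_s": percentile(latencies, 50),
        "p95_latency_s": percentile(latencies, 95),
        "tokens_per_s": total_tokens / wall_clock_s,
        "usd_per_million_tokens": run_cost / total_tokens * 1_000_000,
    }


if __name__ == "__main__":
    # Made-up measurements, for illustration only.
    samples = [
        RequestSample(0.8, 200),
        RequestSample(1.0, 250),
        RequestSample(1.2, 300),
        RequestSample(4.0, 250),  # one slow outlier dominates the tail
    ]
    print(summarize(samples, wall_clock_s=5.0, hourly_cost_usd=3.6))
```

Reporting a tail percentile next to the median is one way to capture latency consistency: in the made-up data, a single slow request leaves the median untouched but sets the p95, which is the kind of gap that peak-performance figures hide.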

Also read: AI Inference Cost in 2025: Architecture, Latency, and the Real Cost per Token

From chatbots to AI agents: Meta and Manus

Meta acquires Manus: facts and positioning

On December 29, Meta agreed to acquire Manus, an AI agent startup developing general-purpose systems capable of autonomous task execution, as reported by Reuters and covered by CGTN. Manus was founded in China and is now legally headquartered in Singapore, a jurisdiction often chosen by AI startups seeking regulatory stability and access to international capital amid rising US–China technology tensions.

Meta’s stated objective is to strengthen enterprise automation capabilities, often framed internally as “digital employees.” This positioning reflects a shift from conversational interfaces toward task-oriented agents embedded in workflows. The sources emphasize the direction of travel rather than technical implementation details.

Details on product integration and deployment timelines have not yet been announced, leaving implementation specifics uncertain.

“Digital employees”: what agents change in practice

AI agents differ from chatbots by operating across tools, data sources, and multi-step tasks with limited supervision. In enterprise environments, this raises questions around authentication, auditability, and error handling. The Manus coverage centers on autonomous task execution and enterprise-oriented utility, as described by Reuters.

The acquisition highlights growing interest in agents that execute business processes rather than simply generate responses. This transition increases potential productivity gains while also amplifying governance and security requirements. The “digital employees” framing is useful as a product lens, not as a guarantee of capability.

For organizations, agent-based systems move AI adoption from experimentation toward operational dependency, increasing the cost of failure.

What changes for enterprises and CTOs

For CTOs, agent deployment requires a governance-first approach. Clear autonomy boundaries, robust logging, and integration with identity and access management systems are essential. This week’s developments suggest accelerating agent adoption, but benefits will accrue primarily to organizations prepared to manage these controls.
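One way to picture these controls is a thin wrapper that checks every tool call against an explicit policy and records the decision before anything runs. The sketch below is a minimal illustration under stated assumptions: `AgentPolicy`, `GuardedAgent`, the tool names, and the approval flow are all hypothetical, and nothing here reflects how Manus or Meta implement agents. A production system would delegate identity and approval checks to an IAM service rather than a plain `approved_by` string.

```python
import json
import time
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    allowed_tools: frozenset                 # tools the agent may call on its own
    needs_approval: frozenset = frozenset()  # tools that require human sign-off


@dataclass
class GuardedAgent:
    agent_id: str
    policy: AgentPolicy
    tools: dict                              # tool name -> callable
    audit_log: list = field(default_factory=list)

    def _record(self, tool, args, decision, approved_by):
        # One JSON line per decision; default=str keeps odd argument types loggable.
        self.audit_log.append(json.dumps({
            "ts": time.time(), "agent": self.agent_id, "tool": tool,
            "args": args, "decision": decision, "approved_by": approved_by,
        }, default=str))

    def call(self, tool, approved_by=None, **args):
        """Run a tool only if the policy allows it; log every attempt."""
        if tool in self.policy.needs_approval:
            if approved_by is None:
                self._record(tool, args, "denied:approval_required", None)
                raise PermissionError(f"{tool} requires human approval")
        elif tool not in self.policy.allowed_tools:
            self._record(tool, args, "denied:not_allowed", approved_by)
            raise PermissionError(f"{tool} is outside this agent's boundary")
        self._record(tool, args, "executed", approved_by)
        return self.tools[tool](**args)


if __name__ == "__main__":
    agent = GuardedAgent(
        agent_id="support-agent-1",
        policy=AgentPolicy(frozenset({"lookup_order"}), frozenset({"issue_refund"})),
        tools={
            "lookup_order": lambda order_id: f"order {order_id}",
            "issue_refund": lambda order_id: f"refunded {order_id}",
        },
    )
    print(agent.call("lookup_order", order_id=7))
    print(agent.call("issue_refund", approved_by="alice", order_id=7))
    print(len(agent.audit_log), "audit entries")
```

The design choice worth noting is that denied attempts are logged as well as executed ones, so the audit trail shows what the agent tried to do and not only what it was permitted to do.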

Regulation and legal risk: pressure builds on AI platforms

Italy orders Meta to open WhatsApp to rival AI

On December 24, Italy’s competition authority issued an interim order requiring Meta to suspend WhatsApp Business API terms that would have blocked third-party AI chatbots, as reported by Reuters and summarized by TechCrunch. The measure aims to prevent anti-competitive foreclosure while the investigation proceeds.

This action is an interim regulatory step, not a final ruling. Meta has stated its intention to appeal. The immediate scope is limited to WhatsApp Business, but the precedent matters for platform interoperability.

The case illustrates intensifying scrutiny of AI access restrictions on dominant digital platforms.

FTC vacates Rytr order: a regulatory rollback signal

On December 22, the U.S. Federal Trade Commission voted to vacate a 2024 consent order against Rytr that had restricted certain AI-generated review features, per the FTC press release. The decision cited a broader directive to reduce regulatory burdens on AI innovation.

This move reflects a change in enforcement posture rather than comprehensive deregulation. It signals increased tolerance for AI experimentation while leaving future consumer protection standards unresolved.

For AI providers, the near-term U.S. regulatory environment appears more permissive, though uncertainty remains.

NIST launches AI economic security centers

Also on December 22, NIST announced $20 million in funding to establish two AI economic security centers operated by MITRE, according to NIST and MITRE. The centers are focused on securing U.S. manufacturing and critical infrastructure against AI-enabled threats. The announcement frames the effort as resilience-oriented.

This represents a concrete public investment in AI security and resilience rather than commercial acceleration. It reflects growing government recognition that AI risk management requires institutional capacity.

The initiative adds a public-sector counterbalance to private-sector AI expansion.

Copyright lawsuits against AI labs: training data risk

On December 23, investigative journalist John Carreyrou and other authors filed a lawsuit against xAI, OpenAI, Google, Anthropic, Meta, and Perplexity, alleging unauthorized use of copyrighted books for training, as reported by Reuters and covered by Engadget. The lawsuit is confirmed, while legal outcomes remain uncertain.

This case adds to mounting pressure around training data provenance. It does not establish liability but increases litigation risk for model developers and potentially for downstream users.

Enterprises relying on AI models may face indirect exposure depending on how courts interpret training practices.

What this week changes for builders and decision-makers

Infrastructure and capacity planning

This week reinforces that AI capacity planning must integrate power, land, and compute considerations. Energy-secured data centers and long-term hardware contracts are increasingly prerequisites for scalable deployment.

Inference architecture choices

Inference efficiency has become a strategic variable. Developers should benchmark latency, throughput, and cost per token across heterogeneous stacks rather than defaulting to uniform GPU deployments.

Agents, governance, and compliance

AI agents raise governance stakes across organizations. Aligning agent deployment with access controls, audit trails, and data governance frameworks is essential as regulatory scrutiny intensifies.

Overall, this AI weekly news cycle underscores a transition from model-centric narratives toward systems constraints that shape real-world deployment.

Archives of past weekly AI news

- AI News for December 15–20: Latest Developments, Models, Policy Shifts and Industry Impact

- AI News for December 8–13: GPT-5.2 Benchmarks & Federal AI Regulation

- AI News December 1–6: Chips, Agents, Key Oversight Moves

- AI News November 24–29: Breakthrough Models, GPU Pressure, and Key Industry Moves

- AI News – Highlights from November 14–21: Models, GPU Shifts, and Emerging Risks

- AI Weekly News – Highlights from November 7–14: GPT-5.1, AI-Driven Cyber Espionage and Record Infrastructure Investment

- AI Weekly News from November 7, 2025: OpenAI, Apple and the Race for Infrastructure, Archive

- AI News from Oct 27 to Nov 2: OpenAI, NVIDIA and the Global Race for Computing Power

- AI News: The Major Trends of the Week, October 20–24, 2025

- AI News – October 15, 2025: Apple M5, Claude Haiku 4.5, Veo 3.1, and Major Shifts in the AI Industry

Sources and references

1. Tech media

- As reported by CGTN (Dec 30, 2025), Meta agreed to acquire Manus: CGTN coverage of the Manus acquisition

- As reported by Reuters (Dec 29, 2025), Meta agreed to acquire Manus: Reuters report on Meta acquiring Manus

- As reported by Reuters (Dec 24, 2025), Italy’s watchdog ordered Meta to halt WhatsApp terms barring rival AI chatbots: Reuters report on Italy, Meta, and WhatsApp terms

- As reported by TechCrunch (Dec 24, 2025), Italy told Meta to suspend its policy banning rival AI chatbots from WhatsApp: TechCrunch coverage of the Italy order

- As reported by CNBC (Dec 24, 2025), Nvidia’s Groq deal was characterized as about $20B by some outlets: CNBC report on Nvidia and Groq

- As reported by the Financial Times (Dec 23, 2025), ByteDance plans a $23B AI infrastructure spend: Financial Times report on ByteDance capex

- As reported by Reuters (Dec 23, 2025), ByteDance plans to spend ~$23B toward AI infrastructure in 2026: Reuters report on ByteDance AI infrastructure spend

- As reported by Engadget (Dec 23, 2025), authors filed a lawsuit against AI companies over training: Engadget coverage of the copyright lawsuit

- As reported by Reuters (Dec 22, 2025), authors filed a lawsuit against AI companies over chatbot training: Reuters report on the copyright lawsuit

- As reported by Bloomberg (Dec 22, 2025), Alphabet agreed to buy Intersect Power for $4.75B: Bloomberg report on Alphabet and Intersect Power

2. Companies

- According to Groq (Dec 24, 2025), the Nvidia agreement is a non-exclusive inference technology licensing deal: Groq announcement on the Nvidia licensing agreement

- According to Alphabet Investor Relations (Dec 22, 2025), Alphabet announced an agreement to acquire Intersect Power: Alphabet IR announcement on the Intersect acquisition

3. Institutions

- According to NIST (Dec 22, 2025), $20M was allocated to launch AI centers for manufacturing and critical infrastructure: NIST announcement on AI centers

- According to MITRE (Dec 22, 2025), the new NIST AI centers are operated in collaboration with MITRE: MITRE release on NIST AI centers

- According to the FTC (Dec 22, 2025), the Rytr final order was reopened and set aside: FTC press release on the Rytr order

4. Official sources

- The FTC documentation states the decision to reopen and set aside the Rytr final order: FTC official release on Rytr

- NIST documentation outlines the mandate and funding of the new AI security centers: NIST official announcement
