AI News December 1–6: Chips, Agents, Key Oversight Moves

This weekly briefing highlights the AI developments that matter most, from new accelerator hardware and enterprise agent pivots to global oversight milestones shaping deployment in 2026. Covering December 1 to 6, 2025, it distills the week’s most consequential announcements and explains their technical, economic, and regulatory implications for developers, researchers, CTOs, and compliance teams. Together, these signals reveal where AI performance, adoption, and governance are heading.

Find the latest weekly AI news on our main page, updated regularly.

This Week’s AI News at a Glance

The first week of December delivered strong indications of where AI infrastructure, enterprise readiness, and global governance are moving. Across major vendors, chip makers, policymakers, and research groups, the week showcased accelerating compute capability, tightening supply constraints, shifts in enterprise strategies, and clearer signals about how organizations should prepare for 2026.

Summary bullets: the five signals shaping the week

- AWS introduced Trainium3 and its UltraServer platform, delivering up to 4.4x more compute performance and 4x greater energy efficiency compared with Trainium2. (Source: Reuters and Amazon’s official announcement.)

- Alphabet’s TPU roadmap expanded, with analysts estimating that external TPU sales could represent a market opportunity approaching 900 billion dollars, as discussed by Bloomberg.

- A worsening global memory shortage reduced DRAM inventories to historic lows, as documented by Reuters.

- Enterprise AI realigned: OpenAI declared an internal “code red”, Microsoft reduced agent-focused targets, and Anthropic continued its enterprise-first momentum, as covered by the Wall Street Journal, Ars Technica, and Deadline.

- Oversight accelerated worldwide: the Future of Life Institute published a new AI Safety Index, the EU AI Act clarified enforcement dates, and Australia introduced a national AI plan.

| Story | Date (2025) | Impact Level | Affected Stakeholders |
|---|---|---|---|
| AWS launches Trainium3 and UltraServer, with up to 4.4x the compute performance of Trainium2 | 3 Dec | High | Cloud providers, LLM training teams, infra engineers, hyperscalers |
| Alphabet’s TPU expansion and projected external accelerator market (~$900B) | 4 Dec | High | Enterprises, AI labs, hardware buyers, cloud strategists |
| Global DRAM and HBM memory shortage intensifies (inventories drop to 2–4 weeks) | 3 Dec | High | Data centers, GPU/TPU buyers, HPC teams, procurement managers |
| Enterprise AI pivots: OpenAI “code red”, Microsoft reduces agent quotas, Anthropic maintains 10× growth | 1–5 Dec | Medium–High | CTOs, CIOs, engineering leads, compliance teams |
| Governance accelerates: AI Safety Index, EU AI Act enforcement timeline, Australia’s national AI plan | 3–5 Dec | High | Regulated industries, compliance teams, policymakers, public-sector IT |

Why these stories matter now

These developments highlight three structural forces shaping AI’s near-term trajectory. Compute is scaling quickly while memory constraints create bottlenecks, enterprise adoption is advancing unevenly and pushing vendors to adjust their strategies, and governance frameworks are solidifying with unprecedented speed. For teams preparing deployments in 2026, this combination creates both friction and clarity.

AI Infrastructure: Chips, Capacity, and Global Bottlenecks

The infrastructure layer continues to dictate the pace of AI research and deployment. This week’s hardware announcements underscore rapid gains in accelerator performance while supply-chain constraints highlight systemic limitations that will shape compute availability through 2027.

AWS Trainium3 and the UltraServer platform

AWS unveiled Trainium3, the successor to Trainium2, with up to 4.4x more compute performance and 4x greater energy efficiency than its predecessor. According to Reuters and Amazon’s official statement, the new Trn3 Gen2 UltraServer connects 144 Trainium3 accelerators to provide up to 362 PFLOPS (0.362 exaFLOPS) of peak compute in the FP8/MXFP8 format. The system targets large-scale training workloads, positioning it as a direct competitor to Nvidia’s GB300 NVL72 rack. Amazon’s statement also confirms a strategic focus on lowering cost per training run and improving energy efficiency, in line with its broader AI infrastructure investments.
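To put the UltraServer figures in perspective, the short Python sketch below derives the implied peak compute per accelerator from the two numbers quoted above (362 PFLOPS across 144 chips). The helper names and the 1 exaFLOPS target are illustrative assumptions, and the results are peak FP8/MXFP8 figures, not sustained training throughput.

```python
import math

# Figures quoted in the article: peak FP8/MXFP8 compute per UltraServer.
ULTRASERVER_PFLOPS = 362.0
CHIPS_PER_ULTRASERVER = 144


def per_chip_pflops(total_pflops: float, chips: int) -> float:
    """Implied peak compute per accelerator, in PFLOPS."""
    return total_pflops / chips


def servers_for_target(target_pflops: float) -> int:
    """Whole UltraServers needed to reach a peak-compute target (hypothetical helper)."""
    return math.ceil(target_pflops / ULTRASERVER_PFLOPS)


chip = per_chip_pflops(ULTRASERVER_PFLOPS, CHIPS_PER_ULTRASERVER)
print(f"Implied peak per chip: {chip:.2f} PFLOPS")  # about 2.51 PFLOPS
print(f"UltraServers for 1 exaFLOPS peak: {servers_for_target(1000.0)}")  # 3
```

Peak numbers of this kind are best read as an upper bound; real utilization depends on the model, the parallelism strategy, and interconnect behavior.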

The platform is designed for frontier-scale workloads, emphasizing optimized collective operations and reduced interconnect latency. While AWS is advancing its proprietary silicon portfolio, it continues to invest in interoperability with Nvidia technologies, indicating a dual-vendor strategy likely to shape enterprise compute planning throughout 2026.

Alphabet’s TPU expansion and external sales strategy

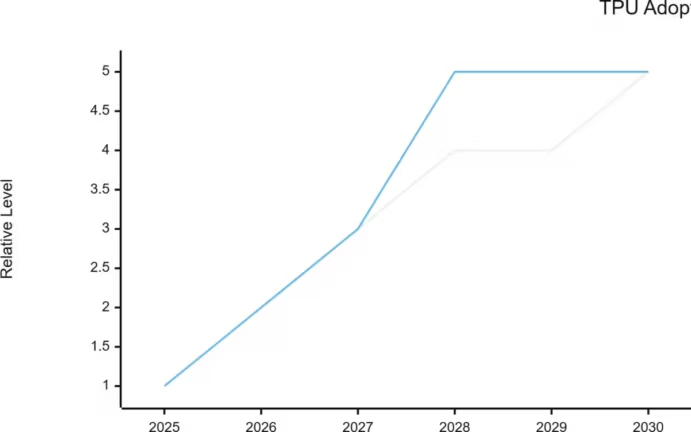

Analysis from Bloomberg suggests Alphabet may ramp TPU production to several million units per year by 2027 to 2028, with a potential external accelerator market approaching 900 billion dollars. Although Alphabet has not issued a detailed public announcement, partnerships with organizations such as Anthropic and ongoing discussions with Meta signal increased willingness to commercialize TPU hardware.

Engineers evaluating the performance gap between TPU generations can refer to our in-depth comparison TPU v6e vs v5e/v5p: How Trillium Delivers Real AI Performance Gains, which details compute scaling, MXU tile improvements, HBM bandwidth changes, and real-world SDXL and LLM throughput differences.

This diagram provides a qualitative view of how TPU adoption and the potential external market for Google’s accelerator hardware may evolve through 2030. Adoption is expected to increase steadily as TPUs become more widely available beyond Google’s internal workloads, while external market potential grows faster, driven by demand for alternative accelerators amid GPU shortages and escalating training costs. The curve does not represent exact numerical forecasts; instead, it captures the relative momentum indicated by industry reporting, production plans, and early signals of TPU commercialization.

If TPU access expands beyond Google Cloud, enterprises could gain a viable alternative to GPU-centric compute stacks, potentially easing cost pressures as supply stabilizes. Any migration plan still needs to factor in the effort of porting and optimizing code.

For readers who want a deeper technical breakdown of Google’s latest TPU architecture, including memory topology, MXU design, and bandwidth improvements, see our detailed analysis in Understanding Google TPU Trillium: How Google’s AI Accelerator Works.

The global memory chip shortage: a structural constraint

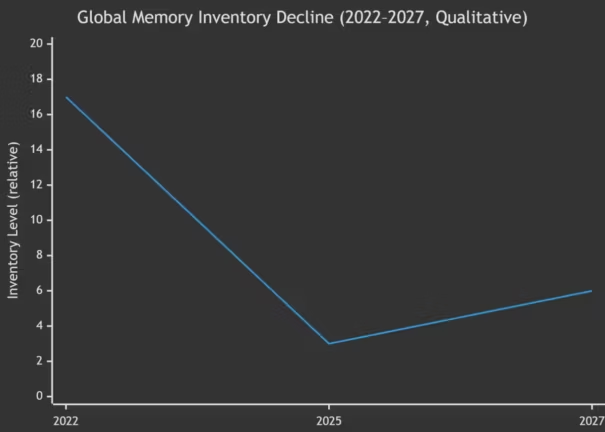

A Reuters report described a worsening memory shortage affecting DRAM and NAND supply, with inventories dropping to two to four weeks from 17 weeks in 2022. The surge in AI demand is the primary driver, as hyperscalers absorb unprecedented quantities of memory to scale training clusters. Suppliers, including Samsung and SK Hynix, anticipate constraints persisting through late 2027. (Source: Reuters)

| Metric | DRAM (2025) | NAND (2025) | 2026 Risk Assessment |
|---|---|---|---|
| Price volatility | High due to AI-driven demand and limited supply | Medium, less affected but trending upward | Elevated risk for DRAM-driven workloads |
| Inventory levels | Critically low (2–4 weeks of inventory) | Low but not quantified | DRAM shortages likely to slow training/inference capacity |
| Supply constraints | Severe, driven by hyperscaler consumption | Moderate, not reported as critical | DRAM remains the main bottleneck for AI expansion |
| Impact on AI infra | High, affects GPU/TPU availability and cluster expansion | Medium, impacts storage-heavy workloads | Persistent risk until late 2027 |

For AI teams, the shortage impacts the availability and pricing of high-memory compute instances, slows expansion of GPU clusters, and forces greater emphasis on model efficiency. For a deeper analysis of how GPU scarcity is slowing down training cycles, LLM scaling, and data center expansion in 2025, see our dedicated report GPU Shortage: Why Data Centers Are Slowing Down in 2025.

What this means for developers and CTOs

Developers should prioritize techniques that reduce memory consumption, from quantization to pruning and more efficient architecture choices. CTOs need to anticipate continued volatility in memory-dependent hardware procurement, implement multi-vendor strategies, and align large training runs with realistic supply timelines. Memory has become a strategic dependency on par with compute.
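As a rough illustration of why quantization matters under memory pressure, the Python sketch below estimates the memory needed just to hold model weights at different precisions. The 7-billion-parameter model is a hypothetical example, and the byte sizes ignore quantization metadata such as scales and zero points, so actual footprints will be somewhat higher.

```python
# Nominal storage per parameter, ignoring scale/zero-point overhead (assumption).
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, fmt: str) -> float:
    """Approximate memory for model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[fmt] / 2**30


# Hypothetical 7B-parameter model, used purely for illustration.
NUM_PARAMS = 7e9
for fmt in BYTES_PER_PARAM:
    print(f"{fmt:>5}: {weight_memory_gib(NUM_PARAMS, fmt):6.2f} GiB")
```

Moving from BF16 to a 4-bit format cuts the weight footprint by roughly a factor of four in this estimate, which is why low-precision formats are attractive when high-memory instances are scarce. Activations, optimizer state, and KV cache are not included here.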

Related technical deep dive

Hardware shortages are pushing the industry toward more efficient software-side optimizations for LLMs.

DFloat11 is one example: our article DFloat11: Lossless BF16 Compression for Faster LLM Inference explains how BF16-level precision can be preserved while reducing bandwidth pressure on memory-bound workloads.

Other approaches include NVIDIA’s new 4-bit format, detailed in NVFP4: Everything You Need to Know About NVIDIA’s New 4-Bit Format for AI, and a broader comparison of low-precision formats in NVFP4 vs FP8 vs BF16 vs MXFP4.

Enterprise AI Reality Check: Agents, Adoption, and Market Repositioning

This week revealed widening gaps between vendor ambitions and what enterprises are ready to deploy. Agent frameworks, reasoning models, and automation tooling continue to evolve, but adoption friction is forcing companies to rethink priorities and reassess how AI integrates into operational workflows.

OpenAI’s internal “code red” and the reasoning model push

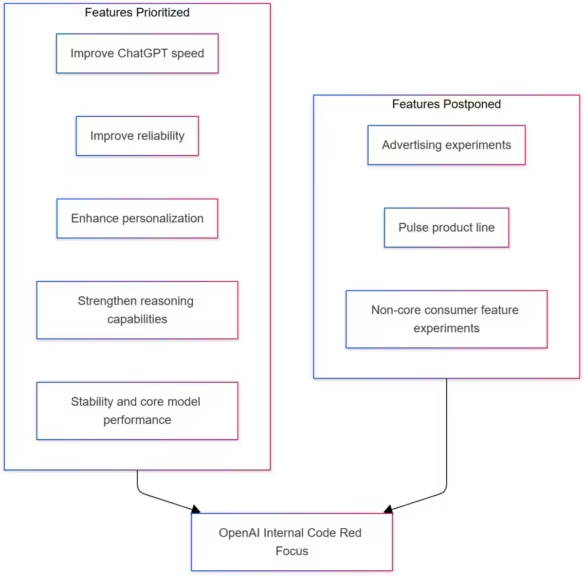

According to reporting from the Wall Street Journal, OpenAI leadership issued an internal “code red” aimed at improving ChatGPT’s speed, reliability, personalization, and especially its reasoning capabilities. Several initiatives, including early-stage advertising experiments and the Pulse product line, were postponed to refocus engineering resources on core model performance and infrastructure stability.

This pivot reflects rising competitive pressure from Google’s Gemini 3, particularly around structured reasoning and evaluation benchmarks. For developers relying on OpenAI APIs, this shift suggests upcoming improvements in inference consistency, context handling, and multi-step reasoning.

Microsoft’s agent quotas and adoption friction

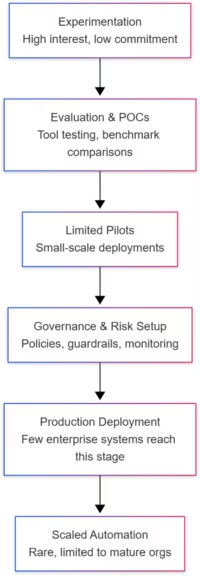

Coverage from Ars Technica and Reuters highlighted Microsoft’s decision to revise AI agent sales targets after fewer than 20 percent of sales teams achieved agent-related quotas. Despite strong Azure growth, enterprises remain cautious about deploying fully autonomous agent systems, often citing concerns around reliability, safety validation, and integration complexity.

This underscores a persistent maturity gap. Organizations are willing to experiment with generative AI, but production-grade agentic automation requires robust guardrails, domain adaptation, and clearer monitoring frameworks.

Anthropic’s enterprise-first strategy and 10× annual growth

As reported by Deadline, Anthropic continues its enterprise-focused trajectory with three consecutive years of 10× growth and a projected year-end revenue range of 8 to 10 billion dollars. CEO Dario Amodei emphasized reliability, safety, and consistency as differentiators, especially for regulated industries like finance, biomedical research, and energy.

Anthropic’s approach resonates with organizations seeking predictable model failure modes, robust safety evaluations, and high governance standards. This differentiates Anthropic in a market where consumer-first strategies often prioritize rapid feature releases over stability.

Implications for enterprise decision-makers

For 2026 planning, organizations should expect vendors to emphasize stability, safety verification, and integration tooling rather than launching broad agentic platforms. Procurement decisions should factor in model refresh cadence, reproducibility, and vendor transparency. Enterprise deployment is shifting toward narrower, high-value use cases with clearer governance pathways.

Open-Source and Model Innovation: Beyond Text Generation

Model innovation this week extended into embodied reasoning, autonomous systems, and socio-technical evaluation. Open-source releases help rebalance a landscape dominated by closed frontier models, offering greater transparency and reproducibility.

Nvidia’s Alpamayo-R1 and the shift toward physical AI

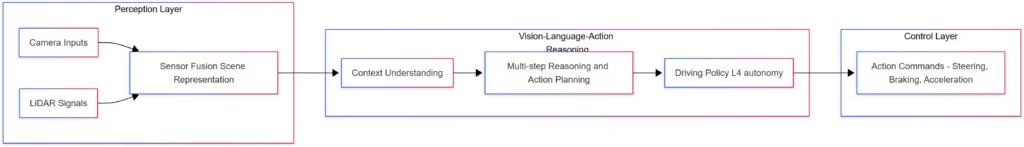

At NeurIPS 2025, Nvidia introduced Alpamayo-R1, an open-source vision-language-action (VLA) model designed for Level 4 autonomous driving. According to Nvidia’s communication channels, Alpamayo-R1 integrates camera, LiDAR, and language inputs, enabling multi-step reasoning to navigate complex driving scenarios. Nvidia also released synthetic data tools and evaluation suites designed to test edge-case robustness.

Because Alpamayo-R1 is distributed under a non-commercial license, it enables academic research and prototyping while restricting commercial deployment. This marks an important milestone in the broader shift toward embodied and physical AI systems.

The video below demonstrates the anticipation capabilities of an AI system designed for autonomous driving. In the scenario, a ball suddenly rolls across the street, with no child yet in sight. The AI immediately recognizes this as a known risk indicator and decides to stop the vehicle before any visible danger appears. Moments later, a child runs into the road to retrieve the ball.

The example highlights how an autonomous system can interpret indirect cues, predict a potentially hazardous situation, and take preventive action, a critical requirement for real-world safety.

The video below shows another scenario: a school safety officer raises a stop sign. The AI recognizes both the environment and the signal, correctly stopping the vehicle. This illustrates its ability to interpret human instructions and context in real time.

Anthropic Interviewer dataset release

Anthropic published a structured dataset of 1,250 interviews capturing diverse perspectives on AI’s workplace impact. According to Anthropic’s documentation, the interviews were conducted using Claude and include standardized prompts designed to reveal trust levels, adoption barriers, and perceived risks.

| Interview Category | Description | Recurring Themes |
|---|---|---|
| Workplace impact | How workers expect AI to reshape tasks, roles, and workflows | Task automation concerns, augmented productivity, job redesign |
| Trust and reliability | Perceived dependability of AI systems in daily work | Fear of errors, desire for transparent reasoning, need for oversight |
| Adoption barriers | Factors preventing effective use of AI tools | Lack of training, unclear benefits, workflow integration issues |
| Risk perception | How individuals evaluate the downside of AI adoption | Misuse, bias, loss of control, regulatory uncertainty |
| Industry-specific views | Sector-level differences in how AI is expected to affect jobs | Highly variable across finance, healthcare, education, engineering |
| Preferences for AI assistance | What users want from AI systems at work | Personalization, better guidance, safety guarantees |

This dataset enhances research on socio-technical alignment, human feedback modeling, and participatory input for constitutional AI frameworks.

Why open-source momentum matters now

Open-source projects offer a counterweight to proprietary models, providing researchers and developers with transparent architectures, reproducible evaluation methods, and greater experimental flexibility. As proprietary APIs increasingly abstract away model internals, open-source systems like Alpamayo-R1 become essential for understanding how multimodal reasoning scales in real-world environments.

Regulation, Safety, and Global Policy Movements

Policymakers accelerated AI governance this week, providing clearer direction on safety expectations, compliance timelines, and oversight structures. These changes will shape deployment strategies for any organization using AI at scale.

Future of Life AI Safety Index findings

The Future of Life Institute released its Winter 2025 AI Safety Index, evaluating eight major AI developers across 35 criteria. None of the evaluated organizations demonstrated credible loss-of-control mitigation strategies for advanced AI scenarios. The index also noted insufficient independent auditing and limited transparency around safety thresholds.

| Safety Gap | Description | Observed Across Labs |
|---|---|---|
| Lack of credible loss-of-control mitigation | No lab demonstrated tested or validated strategies for preventing runaway model behavior | Yes |
| Insufficient independent auditing | External safety assessments are limited or absent, and internal evaluations lack transparency | Yes |
| Undefined or undisclosed safety thresholds | Labs do not specify clear limits, red lines, or shutdown criteria for high-risk models | Yes |
| Limited transparency on training data and methods | Key details on datasets, fine-tuning processes, and evaluation pipelines remain partially opaque | Yes |
| Incomplete risk evaluation protocols | Most labs lack systematic testing for dangerous capabilities or long-horizon reasoning failures | Yes |
| Weak post-deployment monitoring | Few labs have well-defined processes for tracking model behavior after release | Yes |

For compliance teams, this underscores the need to establish internal evaluation systems that go beyond vendor claims, especially for high-stakes or safety-critical deployments.

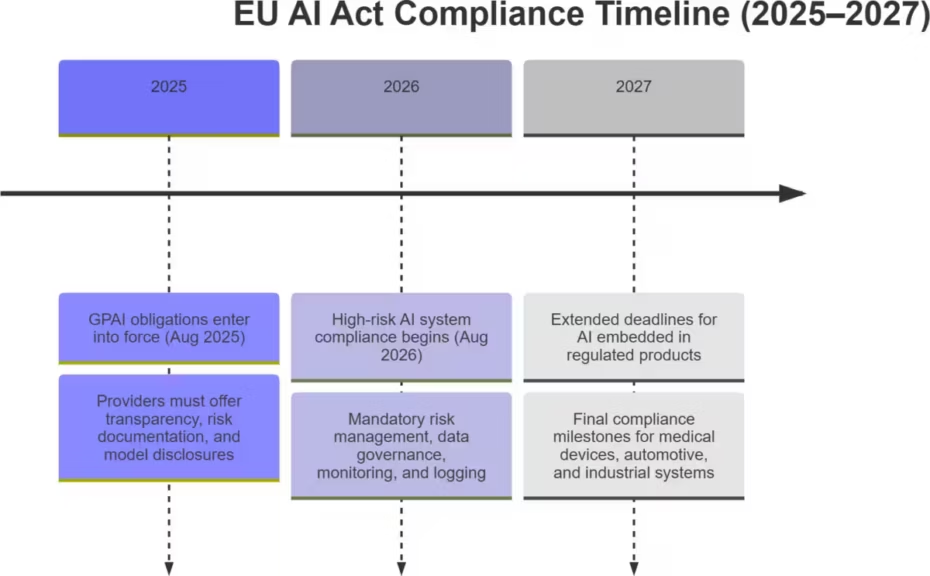

EU AI Act: timeline and obligations for 2026 to 2027

The European Commission confirmed enforcement dates for the EU AI Act, including general-purpose AI (GPAI) obligations active since August 2025 and high-risk system requirements beginning in August 2026. Extensions apply to AI embedded in regulated products through 2027. The updates also reference ongoing amendments intended to strengthen the EU AI Office and streamline SME compliance.

Organizations must prepare traceability workflows, risk classification tools, and model documentation systems well ahead of enforcement deadlines.

Australia’s national AI plan and governance mandates

Australia introduced a national AI framework requiring agencies to appoint accountability officers, maintain internal AI use-case registers, conduct impact assessments, and provide staff training. Early policy outlines also point to forthcoming risk evaluation methods, led by a new national AI Safety Institute set to open in 2026.

These measures indicate increasing global alignment around governance-first AI strategies, with emphasis on transparency and accountability.

UNDP and OECD reports: economic acceleration and inequality risk

A United Nations Development Programme report projects that AI could add up to one trillion dollars to Asian economies over the next decade, boosting GDP by up to two percentage points annually. At the same time, the report warns of widening inequality, especially affecting women and early-career workers. OECD economic revisions similarly highlight AI investment as a key growth driver but caution against overestimating productivity gains while global tariffs rise. (Source: OECD, UNDP)

These findings position AI as both an accelerator of economic performance and a potential amplifier of structural risk.

What This Week Means for Developers, Researchers, CTOs, and Compliance Teams

This week’s AI news cycle translates into concrete priorities for technical teams and decision-makers planning 2026 roadmaps. The combination of chip announcements, memory constraints, enterprise repositioning, and tightening regulation makes it harder to treat AI as an isolated R&D topic: it now directly shapes infrastructure, governance, and hiring strategies.

For developers

For developers, the global memory shortage is an explicit signal to invest in efficiency-oriented techniques. Quantization, pruning, low-rank adaptation, and mixed-precision training can significantly reduce VRAM pressure for both training and inference. Model architecture choices that minimize KV-cache growth and sequence length can also provide durable benefits as high-memory instances remain constrained.
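The link between architecture choices and KV-cache growth can be made concrete with a back-of-the-envelope estimate. The Python sketch below uses the standard sizing formula for a decoder-only transformer (keys plus values, per layer, per KV head, per token); the layer counts, head counts, and sequence length are hypothetical values chosen for illustration, not figures from any model discussed here.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, batch: int, bytes_per_elem: int = 2) -> int:
    """KV-cache size: 2 tensors (K and V) per layer, per KV head, per token."""
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_elem


GIB = 2**30

# Hypothetical 32-layer model, head_dim 128, 4096-token context, BF16 cache.
full_mha = kv_cache_bytes(layers=32, kv_heads=32, head_dim=128,
                          seq_len=4096, batch=1)
# Same model with grouped-query attention sharing 8 KV heads (assumption).
gqa = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128,
                     seq_len=4096, batch=1)

print(f"Full multi-head attention: {full_mha / GIB:.2f} GiB")  # 2.00 GiB
print(f"Grouped-query attention:   {gqa / GIB:.2f} GiB")       # 0.50 GiB
```

Because the cache grows linearly with sequence length and batch size, reducing the number of KV heads or capping context length translates directly into lower memory per request.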

Open-source releases like Nvidia’s Alpamayo-R1, documented on the Nvidia Blog, expand the space of experiments beyond standard LLM-based workflows. They offer a practical route into physical AI and embodied reasoning without locking experimentation to proprietary APIs.

For researchers

Researchers face two major themes. First, the emphasis from OpenAI and others on reasoning quality suggests that benchmarks, evaluation protocols, and robustness metrics will evolve quickly. Work on long-horizon reasoning, tool use, and multi-step verification will likely become central to assessing next-generation models.

Second, datasets such as Anthropic’s Interviewer corpus, released via Anthropic Official, provide structured material for studying how different groups perceive AI in the workplace. This enables more grounded research into socio-technical alignment, trust calibration, and how AI reshapes professional identities.

For CTOs

For CTOs, this week’s news cycle underlines the need for multi-vendor compute strategies. AWS Trainium3, Alphabet TPUs, and Nvidia’s ongoing roadmap are not mutually exclusive but complementary levers for balancing cost, capacity, and performance. Planning for 2026 should include scenario models that combine GPU clusters with alternative accelerators, particularly for training workloads that can tolerate porting effort.

The memory shortage documented by Reuters and CNBC means that capex planning must consider procurement lead times and supply risk, not just theoretical FLOPS. Large-scale retraining runs, foundation model fine-tuning, and high-volume inference backends should be sequenced with realistic expectations of hardware availability.

For compliance teams

Compliance teams can no longer treat AI as a peripheral concern. The AI Safety Index from the Future of Life Institute highlights gaps in current safety practices, while the OECD and UNDP reports show how AI investments intersect with macroeconomic risk and inequality. Regulatory instruments such as the EU AI Act, documented on the EC Digital Strategy portal and the Artificial Intelligence Act Portal, define concrete obligations and enforcement timelines.

Internal governance should now include risk classification for AI systems, documentation of model provenance, monitoring of high-risk deployments, and clear ownership for compliance tasks. Australia’s national AI plan, described by the Australian Digital Government and summarized by the IAPP, illustrates how national administrations are operationalizing these ideas with accountability officers, impact assessments, and use-case registers.
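One way to picture an internal use-case register is as a small structured record per AI system, checked for missing governance items. The Python sketch below is a minimal illustration only: the field names, the three risk tiers, and the gap rules are assumptions made for this example and do not reproduce the wording of the EU AI Act or Australia’s framework.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    # Illustrative tiers, not a legal classification.
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"


@dataclass
class UseCase:
    name: str
    owner: str                 # accountable person or team; empty if unassigned
    risk_tier: RiskTier
    model_provenance: str      # e.g. vendor and model version; empty if undocumented
    impact_assessed: bool = False
    monitored: bool = False


def governance_gaps(uc: UseCase) -> list[str]:
    """Return the governance items still missing for one register entry."""
    gaps = []
    if not uc.owner:
        gaps.append("no accountable owner")
    if not uc.model_provenance:
        gaps.append("model provenance undocumented")
    if uc.risk_tier is RiskTier.HIGH:
        if not uc.impact_assessed:
            gaps.append("impact assessment missing")
        if not uc.monitored:
            gaps.append("no monitoring in place")
    return gaps


# Hypothetical register entry for illustration.
entry = UseCase(name="loan triage assistant", owner="",
                risk_tier=RiskTier.HIGH, model_provenance="vendor-x v1")
print(governance_gaps(entry))
```

A real register would follow the organization’s own legal guidance on classification; the point of the sketch is that ownership, provenance, and monitoring can be tracked as explicit, queryable fields instead of scattered documents.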

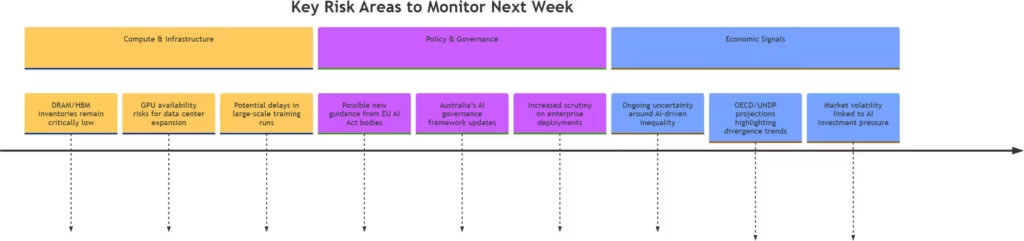

Forward Signals: What to Watch for Next Week

The next cycle of AI news will likely deepen the themes that emerged between December 1 and 6. On the infrastructure side, analysts will watch for additional disclosure from AWS and Alphabet on availability windows for Trainium3 and TPUs, as well as any early indicators that the memory shortage is easing or worsening. Enterprises will look for concrete benchmarks from new reasoning models and clearer case studies demonstrating production-grade agent deployments, beyond pilot programs and prototypes.

Policymakers and institutions are expected to continue refining their frameworks. The OECD, which already highlighted AI-driven growth and tariff risk in its latest outlook via Reuters, may provide additional guidance on how to balance investment enthusiasm with productivity realism. The UNDP, which warned about divergence between AI-adopting and non-adopting countries in its report covered by UN News and UNDP Asia-Pacific, will likely remain a key reference for assessing global equity impacts.

For practitioners, the core message is consistent. AI News December 1–6, 2025 shows that AI is now shaped by three converging forces: increasingly diversified accelerator hardware under memory pressure, enterprise adoption dynamics that reward stability over hype, and governance regimes that are gradually becoming more concrete. Teams that align their infrastructure planning, model evaluation, and compliance workflows with these trends will be better positioned for 2026 than those betting solely on headline models or single-vendor stacks.

Archives of past weekly AI news

- AI News November 24–29: Breakthrough Models, GPU Pressure, and Key Industry Moves

- AI News – Highlights from November 14–21: Models, GPU Shifts, and Emerging Risks

- AI Weekly News – Highlights from November 7–14: GPT-5.1, AI-Driven Cyber Espionage and Record Infrastructure Investment

- AI Weekly News from November 7, 2025: OpenAI, Apple and the Race for Infrastructure, Archive

- AI News from Oct 27 to Nov 2: OpenAI, NVIDIA and the Global Race for Computing Power

- AI News: The Major Trends of the Week, October 20–24, 2025

- AI News – October 15, 2025: Apple M5, Claude Haiku 4.5, Veo 3.1, and Major Shifts in the AI Industry

Sources and references

Tech media

- Reporting on AWS’s chip roadmap and server rollout from Reuters (AWS and Nvidia partnership).

- Analysis of Alphabet’s TPU expansion and market opportunity by Bloomberg and Yahoo Finance.

- Coverage of the global memory chip shortage by Reuters and CNBC.

- Reporting on Microsoft’s AI agent sales adjustments from Ars Technica and Reuters.

- Insights into Anthropic’s growth and positioning from Business Insider and Deadline.

- Context on Meta’s publisher licensing deals provided by Reuters, The Verge, and TechCrunch.

- Growth trajectory and positioning of Micro1 as covered by TechCrunch and Yahoo Finance.

- Reporting on Apple’s AI leadership changes from Apple Newsroom and Bloomberg.

- Coverage of the AI Safety Index’s findings by NBC News and Euronews.

Companies

- AWS’s official announcement of Trainium3 and UltraServer on the AWS Official Blog.

- Nvidia’s presentation of Alpamayo-R1 and related tools on the Nvidia Blog and coverage by The Decoder.

- Anthropic’s description of the Interviewer dataset and methodology on Anthropic Official.

- OpenAI’s internal strategic shift as reported by the Wall Street Journal and summarized in additional coverage from the New York Post.

Institutions

- The Future of Life Institute’s AI Safety Index Winter 2025 report, available from the Future of Life Institute.

- OECD’s revised global growth forecasts and commentary on AI investments via Reuters.

- UNDP’s analysis of AI’s economic impact and inequality risks, as covered by UN News and UNDP Asia-Pacific.

Official sources

- Australia’s national AI plan and government AI governance policy published by the Australian Digital Government and analyzed by the IAPP.

- The European Commission’s AI Act framework and implementation timeline, detailed on the EC Digital Strategy and the Artificial Intelligence Act Portal.
