ComfyUI: choosing the right model size for your VRAM

Do you want to unleash the full power of ComfyUI without crashing your PC? Struggling to find the right balance between model size, rendering quality, and your available VRAM? With increasingly complex AI workflows, choosing the right ComfyUI model size for your VRAM has become essential for generating stable, fast, and high-quality images. The default ComfyUI workflows vary widely: some are designed for small configurations with lower rendering quality, while others use oversized models that can overwhelm even a RTX 5090.

In this guide, we’ll walk you through how ComfyUI VRAM is used, how ComfyUI diffusion models and CLIP models impact your GPU memory, and most importantly, how to adapt your choices depending on your GPU and creative goals. You’ll find practical tips, examples for different VRAM amounts, 12 GB, 24 GB, 32 GB, or 48 GB, and optimization tricks to improve your ComfyUI workflows without sacrificing output quality.

Whether you’re an image creator, developer, or AI enthusiast, this guide will help you choose the best ComfyUI model for your VRAM, avoid crashes, and improve productivity while making the most of your hardware to bring your visual projects to life in ComfyUI. This guide is also useful for those who want to use ComfyUI as simply as possible with default workflows, without diving into deep configuration or custom workflow design. Even if ComfyUI feels a bit complex, it makes local image generation accessible to everyone.

Why VRAM is crucial with ComfyUI

VRAM in ComfyUI is critical for ensuring stable and fast generations. Choosing the right model size for your GPU makes all the difference. This video memory, also called graphics memory, allows your GPU to load ComfyUI diffusion models, ComfyUI CLIP models, text encoders, and all generation steps. Once you exceed your available VRAM, execution times skyrocket, and errors often occur.

In ComfyUI, VRAM usage directly depends on the ComfyUI model size you choose. The larger the model, the more graphics memory it needs, which can quickly saturate VRAM, even on powerful cards like an RTX 4090 or RTX 5090. By optimizing your ComfyUI workflow, you can reduce memory usage while still maintaining high-quality output, adjusting choices based on your GPU, resolution, and the ComfyUI diffusion model used.

To choose the right model size for your VRAM, you must understand that each step consumes video memory: diffusion models, text encoders, and intermediate processes. Optimizing GPU memory usage in ComfyUI allows you to generate images or videos without crashes while using the best possible model size for your VRAM, whether you’re working with heavy workflows or lightweight projects.

Model size in ComfyUI: understanding the differences

When trying to choose the right ComfyUI model size for your VRAM, it’s important to understand why a model file that shows 20 GB on disk can take up 24–28 GB of GPU memory when executed in ComfyUI. ComfyUI VRAM isn’t only used to store model weights: it also handles intermediate activations, temporary buffers, and workflow calculations, which all increase GPU memory consumption. That means you cannot load a 24 GB model into 24 GB of VRAM without massive performance loss. On top of that, GPU drivers and other modules already use 1–2 GB of VRAM. As a rule, with 24 GB of VRAM, aim for models of around 20 GB maximum if no other models are loaded.

ComfyUI models come in several formats, directly impacting VRAM usage. FP32 (32-bit float) models offer maximum precision but require huge amounts of video memory. FP16 (16-bit float) models cut memory usage in half with negligible quality loss, while FP8 is becoming increasingly common. To optimize VRAM, you can also use quantized Q4 or Q8 models, which drastically reduce memory footprint while maintaining good enough quality for most ComfyUI use cases.

It’s also essential to distinguish between ComfyUI diffusion model size and the size of ComfyUI CLIP models or T5 text encoders. These models, especially large T5 XXL or LLaMA ones, can consume several extra GB of GPU memory if loaded on the GPU. To optimize your ComfyUI workflow, you can offload these text models to the CPU to free VRAM, allowing you to choose a heavier ComfyUI model for diffusion without overwhelming GPU memory. However, keep in mind that some models don’t handle CPU offloading well and may cause freezes or crashes.

Example of a model exceeding VRAM capacity

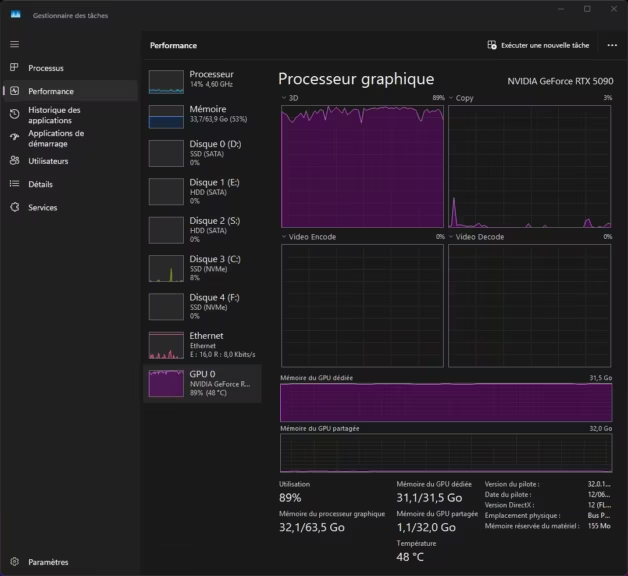

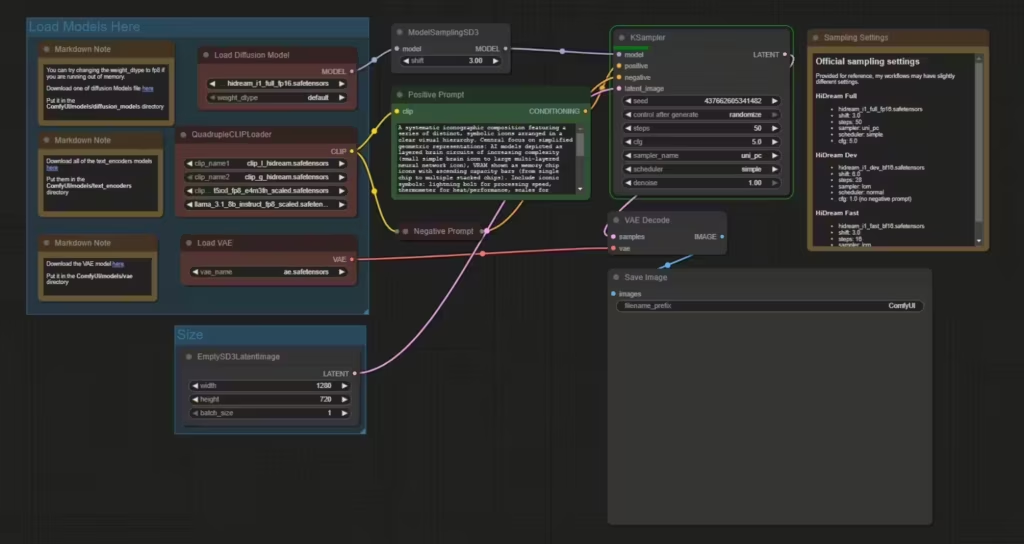

Windows Task Manager is enough to tell when a model, or more broadly, a workflow, exceeds your VRAM capacity. The screenshot below illustrates the issue using the 33 GB (on-disk size) hidream_i1_full_fp16.safetensors model and a workflow that also loads T5XXL FP8 and Llama 3.1 8B. I purposely picked a very heavy model for the example, but the logic is the same whenever the model exceeds your VRAM capacity.

Note the GPU process is not at 100%, signaling a slowdown due to VRAM/RAM swapping

This doesn’t throw an error, but render time becomes far too long. This is a baseline, non-optimized workflow. There are many ways to run this model properly.

To optimize this workflow and get acceptable execution times, here are your options:

- Swap out the hidream_i1_full_fp16.safetensors file for an FP8 or GGUF build (with a GGUF UNet Loader). You’ll lose some precision, but in many cases quality remains acceptable. Run tests to find the right model for your setup.

- Offload CLIP models to the CPU: it’s slower for text encoding, but frees VRAM for the diffusion model. The diffusion step, being the heaviest, may run faster and overall render time can drop.

- Implement workflow-level memory optimizations (more advanced).

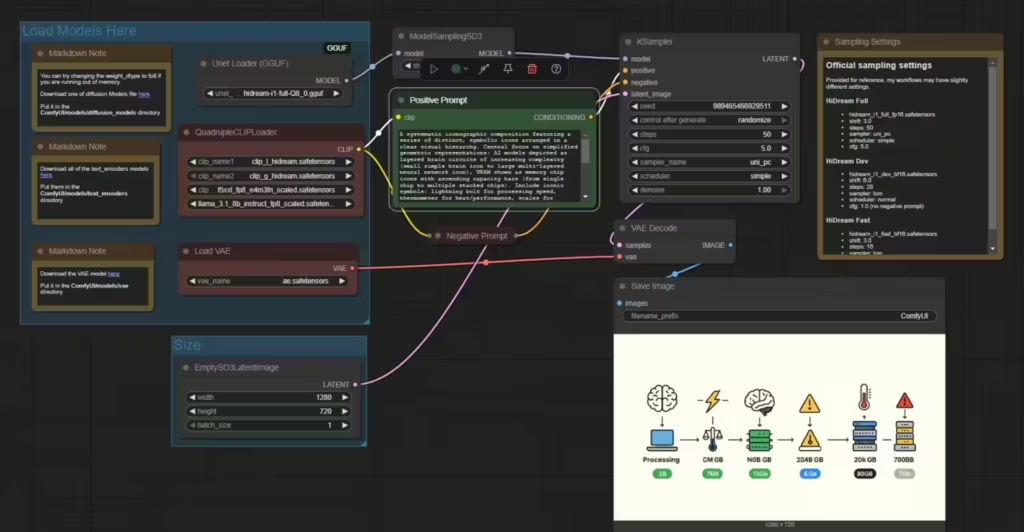

I modified the workflow to use an 8-bit quantized GGUF at 8K (hidream-i1-full-Q8_0.gguf by city96 on Hugging Face), which is an 18.7 GB file. Execution time then dropped to 120 seconds, with VRAM at the limit. By also switching the CLIP models to GGUF, the execution time fell to 60 seconds. You can also use the FP8 release.

How to choose the right ComfyUI model size for your VRAM

To choose the right ComfyUI model size for your VRAM, start by checking how much graphics memory your GPU provides (RTX 4070, 4080, 4090, 5090, etc.), and then tune your workflows accordingly to avoid excessive execution times and “out of memory” errors in ComfyUI. This approach helps you optimize ComfyUI VRAM while keeping generation quality high.

If your GPU has 12 GB of VRAM, prioritize diffusion models quantized in FP8 or Q6, keep batch size at 1 (prefer the Run button with multiple iterations), and consider loading your ComfyUI CLIP models on the CPU to free up video memory.

With 24 GB of VRAM, you can use mid-sized FP16 models and generate high-resolution images while staying stable in ComfyUI. For users with 32 GB or 48 GB of VRAM, it’s possible to choose heavier ComfyUI models in FP16 or even FP32 depending on the project, while working at higher resolutions and with larger batches.

To optimize GPU memory consumption in ComfyUI:

- Use quantized models (Q4, Q8) whenever available, especially for large diffusion models.

- Offload CLIP models to the CPU to preserve VRAM for the diffusion step.

- Keep batch size at 1 during generation to limit memory spikes. Prefer the Run button with a specified number of iterations.

- Monitor VRAM usage with Task Manager or nvidia-smi so you can adapt your workflow and resolution to the project.

This method will help you choose the ComfyUI model best suited to your VRAM, optimize video memory, avoid crashes, and maintain high image quality in ComfyUI.

| Criterion | FP16 | FP8 | GGUF Q8 |

|---|---|---|---|

| Type | 16-bit float (half precision) | 8-bit float | 8-bit int quantized (GGUF) |

| Image quality | ✅ Excellent | ✅ Very good (slight loss) | ⚠️ Good (minor visible losses) |

| VRAM usage | ⚠️ High | ✅ Medium (30–40% gain vs FP16) | ✅ Very low (60–70% gain vs FP16) |

| Performance (speed) | Medium to fast | ✅ Fast on compatible GPUs | Variable (CPU slower, GPU varies) |

| Compatibility | Broad (all GPUs, standard ComfyUI) | Limited (newer GPUs, backend support needed) | Broad (via ComfyUI-GGUF, llama.cpp) |

| Recommended use | When VRAM is available and max quality matters | Limited VRAM but quality still important | Very limited VRAM or CPU-first use |

Practical examples: ComfyUI with 12 GB, 24 GB, 32 GB, 48 GB of VRAM

To choose the right ComfyUI model size for your VRAM, here are concrete examples based on your graphics card and video memory to avoid memory errors and optimize workflows in ComfyUI:

With 12 GB VRAM (e.g., RTX 5070):

- Use Q4 or Q8 quantized models or light FP16 models (3–8 GB on disk).

- Load the ComfyUI CLIP model on the CPU to free VRAM.

- Limit resolution (for example, 768×768) and keep batch size at 1.

- Avoid complex workflows with many simultaneous memory-hungry steps.

With 24 GB VRAM (RTX 3090, RTX 4090):

- You can pick mid-sized FP16 models (10–19 GB on disk).

- Keeping CLIP on the GPU is fine, but for complex workflows, offloading it to CPU is still recommended.

- Working at 1024×1024 is feasible without saturating VRAM.

With 32 GB VRAM (RTX 5090):

- Allows heavy FP16 models or large Q8 quantized models (roughly 14–26 GB on disk).

- High resolutions like 1024×1024 or 1536×864 without crash risk.

- Possibility to keep the ComfyUI CLIP model on the GPU while running a large diffusion model.

With 48 GB VRAM (RTX 6000 ADA 48 GB, A6000):

- You can choose very large FP16 or FP32 ComfyUI models (20–30 GB on disk).

- Very high resolutions (up to 1536×1536, or 2048×1152 depending on the model).

- Higher batch sizes (2, 4 or more) for batch generation.

- Complex workflows with multiple models (high-res VAE, LoRAs, heavy UNets) handled without issues.

Among professional GPUs, the latest RTX Pro 6000 Blackwell offers 96 GB of VRAM, but pricing shoots above €10,000.

Optimize ComfyUI without sacrificing quality: best practices

To choose the right ComfyUI model size for your VRAM without sacrificing rendering quality, adopt methods that reduce graphics memory usage while maintaining strong generation performance.

Reducing batch size is one of the simplest ways to lower your ComfyUI VRAM usage. A batch size of 1 often frees several GB, enabling heavier diffusion models or higher resolutions without crashing. To run multiple passes, prefer the Run button and specify the number of iterations.

Using quantized models (Q4, Q8) can shrink in-memory size by 30–70% with minimal quality loss in Q6 or Q8 for most cases. This lets you choose a bigger ComfyUI model even with modest VRAM, while keeping excellent output quality.

Offloading the ComfyUI CLIP model to the CPU is effective, especially with large text models like T5 XXL or LLaMA. While it slightly slows text encoding, it frees several GB of VRAM for the diffusion model, critical for heavy workflows.

Monitoring GPU memory consumption is essential, use nvidia-smi or Windows Task Manager, to adjust resolution, batch size, or even model size on the fly according to project needs.

Finally, prioritize essential models in your workflows and avoid stacking bulky models or unnecessary steps. This stabilizes generations and uses ComfyUI VRAM more efficiently. These best practices help you harness your GPU, pick the right ComfyUI model size for your VRAM, avoid errors, and still produce high-quality images.

FAQ: model size and VRAM in ComfyUI

Not necessarily. While larger diffusion models can improve detail or complex renders, their usefulness depends on your available ComfyUI VRAM on the GPU. It’s better to choose the ComfyUI model size that fits your VRAM rather than overloading graphics memory.

If you encounter an “out of memory” error, immediately reduce the ComfyUI model size by switching to a quantized version (Q4 or Q8), lower the resolution or batch size, or move the ComfyUI CLIP model to the CPU to free VRAM. Monitor your graphics memory with nvidia-smi to spot spikes and fine-tune settings.

Currently in ComfyUI, CLIP models remain in GPU or CPU memory during a workflow, but you can choose to load the ComfyUI CLIP model on the CPU before generation to free VRAM for diffusion. Segmenting your workflow (e.g., generate text embeddings, then run diffusion) is also an effective way to optimize memory usage.

With 12 GB VRAM: 768×768 at batch size 1.

With 24 GB VRAM: 1024×1024 at batch size 1–2 depending on the model.

With 32 GB VRAM: up to 1536×864 or 1024×1536 at batch size 1–2.

With 48 GB VRAM: 1536×1536 or 2048×1152 at batch size 1–4 depending on the model.

Adjusting resolution to your ComfyUI VRAM helps stabilize workflows while maintaining image quality without compromising projects in ComfyUI.

Conclusion: pick the right ComfyUI model size for your VRAM

To get the most out of ComfyUI, it’s essential to choose the ComfyUI model size that fits your VRAM so you can fully leverage your RTX GPU without running into “out of memory” errors. By understanding the differences between FP32, FP16, and quantized models, and the impact of resolution, batch size, and CLIP, on GPU memory consumption in ComfyUI, you can build stable workflows while keeping generation quality high.

Remember that graphics memory (VRAM) isn’t just a number in ComfyUI: it dictates usability, generation speed, and whether you can handle higher resolutions. By applying best practices like quantized models, offloading CLIP to CPU, and monitoring your VRAM, you can optimize projects without sacrificing image quality.

Ultimately, adjusting ComfyUI model size to your VRAM lets you tailor workflows to your creative and technical needs, ensuring fast, crash-free sessions even with 12 GB VRAM, and tapping the maximum capabilities of your hardware when you have 24 GB, 32 GB, or 48 GB of graphics memory.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!