Practical guide: organize AI prompts with Obsidian and Templater (advanced users)

Do you prefer to keep full control of your data? Does the idea of storing your valuable AI prompts in some random cloud make you uneasy? Welcome to the community of digital independents who value privacy and control over convenience. If this mindset resonates with you, Obsidian and its plugin ecosystem will become your best allies to build a local, flexible, and powerful AI prompt library.

Obsidian is like the Swiss army knife of note-taking for tech enthusiasts: local Markdown files with infinite extensibility through community plugins. But what really makes the difference when you want to manage AI prompts with Obsidian is the Templater plugin, which transforms simple text files into dynamic templates with variables, scripts, and automations.

In this detailed tutorial, we will build together a complete system for organizing AI prompts in Obsidian: from creating a logical folder structure to automating prompt workflows, with a focus on mastering Templater to elegantly handle variables. Whether you are a developer, researcher, content creator, or a curious tinkerer, this guide will give you the tools for local AI prompt management that is professional, flexible, and entirely under your control. For broader insights, check out the main guide here: Organize AI prompts: complete solutions guide.

Why Obsidian is perfectly suited for AI prompts

Local storage and full privacy

With Obsidian, your AI prompt templates stay on your machine. No forced sync to third-party servers, no blind acceptance of vague terms of service, no risk of pricing policies changing overnight. Your Markdown files live in your file system, and that is final.

This local-first approach is especially appealing for professionals handling sensitive data: consultants working on confidential client strategies, researchers protecting proprietary protocols, or creators securing their methodologies. With Obsidian, the question “where are my prompts stored?” has a simple answer: on your hard drive, encrypted if you want, backed up according to YOUR rules.

Native Markdown: simplicity and durability

Obsidian relies exclusively on Markdown, the lightweight markup language that has become a universal standard in the AI era. No proprietary formats, no obscure databases. Just .md files that any text editor can open, today or decades from now.

This Markdown durability guarantees your AI prompt library will remain accessible in any context. If Obsidian disappeared tomorrow, your files would still be instantly usable in VS Code, Typora, or even Notepad. Compare that with proprietary tools where your content is locked in closed ecosystems.

For AI prompt management, Markdown offers the perfect syntax: code blocks to isolate templates, lists to structure instructions, and links between files to create interconnected prompt networks.

Powerful community plugins

Obsidian itself is not open source, but many of its community plugins are, and an active community constantly creates extensions for every imaginable need. For Obsidian prompt organization, some plugins are essential:

- Templater (the hero of this guide): variable substitution, script execution, advanced automations

- Dataview: SQL-like queries to filter and dynamically display prompts

- QuickAdd: fast creation of new prompts with custom macros

- Buttons: add clickable buttons inside notes to trigger actions

- Git: automatic version control for your vault with commits, branches, and full history

This endless extensibility lets you design a workflow that matches your exact needs. Do you want a button that instantly generates a pre-filled prompt based on a template, saves it in the right folder, and opens ChatGPT? It is possible with the right plugin combination.

Free, forever

Unlike Notion, which heavily restricts its free plan for teams, or commercial tools like PromptLayer that cost $50 per user per month, Obsidian is completely free for personal use. No storage limits, no functionality restrictions, no countdown before you must pay.

Optional services exist (official Sync cloud, Publish for sharing online), but for local AI prompt management, you do not need them at all. Install, configure, and use: everything is free for life.

What is Obsidian’s exact license?

Obsidian is not open source; it is proprietary software. Its source code is not public, although the app is free for personal, commercial, and nonprofit use.

The developers offer optional paid licenses:

- Catalyst license for early access to beta versions,

- and a commercial license for companies.

Users keep full ownership of their data, which is stored locally, unlike cloud-only tools. Some community extensions are open source, but the core application remains under a proprietary license (Obsidian License, Official Forum, Wikipedia).

In short, Obsidian is a free but closed tool, combining a proprietary model with an open source ecosystem through its plugins.

Create a folder structure for AI prompts in Markdown

Designing the optimal folder hierarchy

The first step: set up your Obsidian vault to host a structured and scalable AI prompt library. The beauty of file systems is their simplicity: folders, subfolders, and files. It looks basic, but the key is adopting a consistent organization from the start.

Here is a sample folder tree that works well for AI prompt organization in Obsidian:

📁 AI-Prompts/

├── 📁 01-Content-Generation/

│ ├── 📁 Blog-Articles/

│ │ ├── seo-long-tail-article.md

│ │ ├── viral-listicle-article.md

│ │ └── complete-guide-article.md

│ ├── 📁 Social-Media/

│ │ ├── linkedin-thought-leadership.md

│ │ └── twitter-thread-engagement.md

│ ├── 📁 Fact-Checking/

│ │ ├── fact-checking-process.md

│ │ └── cross-fact-checking-process.md

│ └── 📁 Email-Marketing/

│ ├── weekly-newsletter.md

│ └── welcome-sequence.md

├── 📁 02-Data-Extraction/

│ ├── named-entity-extraction.md

│ ├── sentiment-analysis.md

│ └── structured-summary.md

├── 📁 03-Classification/

│ ├── email-priority-sorting.md

│ └── customer-feedback-categorization.md

├── 📁 04-Programming/

│ ├── specification-writing.md

│ ├── architecture-drafting.md

│ └── todo-list-generation.md

├── 📁 05-Conversation/

│ ├── customer-support-assistant.md

│ └── faq-chatbot.md

├── 📁 Templates/

│ ├── _template-prompt-generation.md

│ ├── _template-prompt-extraction.md

│ └── _template-prompt-classification.md

└── 📁 Workflows/

├── full-article-workflow.md

└── feedback-analysis-workflow.md

This hierarchical structure brings several advantages:

- Intuitive navigation: quickly find the type of prompt you need

- Scalability: easily add new categories or subcategories without breaking the existing organization

- Naming conventions: numbered folders (01-, 02-, …) force a logical order

- Separation of templates and prompts: generic templates stay isolated, ready for reuse

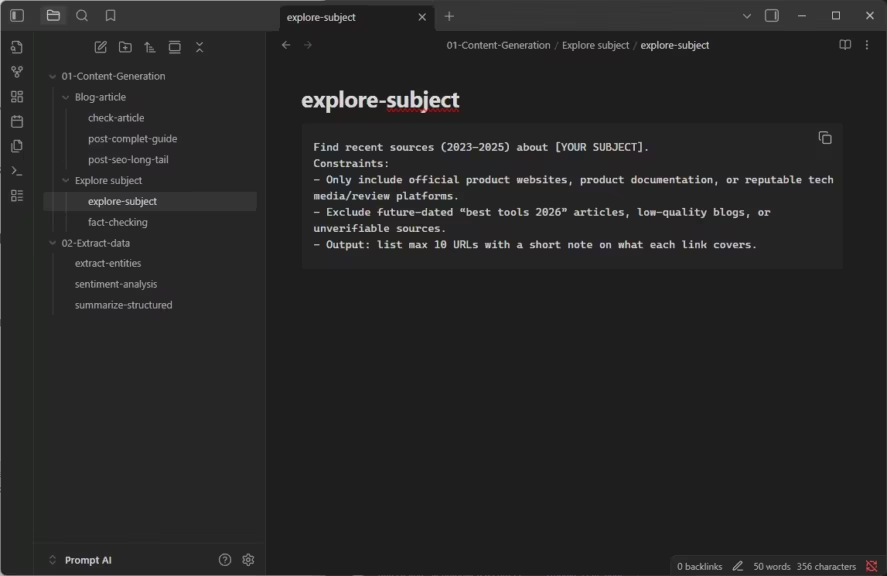

Structuring the content of a prompt file

Each Markdown file in your Obsidian AI prompt library should follow a standardized format. This makes maintenance easier and lets plugins like Dataview automatically extract metadata.

Recommended structure for a prompt:

---

type: generation

category: blog-article

tags: [seo, long-form, tutorial]

models: [gpt-4, claude]

complexity: intermediate

status: validated

created: 2025-01-15

modified: 2025-03-20

---

# SEO Long-Tail Article

## Objective

Generate an SEO-optimized blog article on a long-tail keyword, with a clear H2/H3 structure and natural keyword optimization.

## Required variables

- *{{main_keyword}}*: target keyword (ex: "organize AI prompts Obsidian")

- *{{secondary_keywords}}*: list of LSI keywords (ex: "templater, markdown, local management")

- *{{length}}*: target word count (ex: 2000)

- *{{tone}}*: article tone (ex: "educational and accessible")

## Prompt

You are an expert SEO web writer. Write an optimized blog article.

**Main keyword**: {{main_keyword}}

**Secondary keywords**: {{secondary_keywords}}

**Target length**: {{length}} words

**Tone**: {{tone}}

Required structure:

- Catchy introduction (150-200 words)

- 4-5 H2 sections with H3 subsections

- Conclusion with call-to-action

SEO optimization:

- Naturally integrate the main keyword in H1, intro, and conclusion

- Distribute secondary keywords across H2/H3

- Keyword density: max 1-2%

- Use synonyms and semantic variations

## Sample outputs

[Insert 1-2 sample outputs generated with this prompt]

## Notes and optimizations

- Works better with temperature 0.7

- ChatGPT tends to over-optimize; Claude gives more natural results

- Previous version (v1) in [[seo-article-v1]]

## Related links

- [[complete-guide-article]] – variant for long guides

- [[viral-listicle-article]] – listicle format

- [[full-article-workflow]] – workflow including this prompt

This standardized structure combines:

- YAML frontmatter: metadata usable by Dataview for filtering and advanced queries

- Clearly identified sections: Objective, Variables, Prompt, Examples, Notes

- Internal links: smooth navigation across related prompts via [[wikilinks]]

- Manual versioning: reference to older versions
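To see what "metadata usable by Dataview" means in practice, the sketch below reads the flat frontmatter of a prompt file into a JavaScript object. It is a deliberately minimal parser, assuming only `key: value` lines and inline `[a, b]` lists like the example above; it is not Dataview's or Obsidian's parser, and real YAML (nested keys, quoting, multi-line values) needs a proper YAML library.

```javascript
// Minimal sketch: parse the flat YAML frontmatter used in the prompt files.
// Handles only "key: value" and "key: [a, b]" lines; not a general YAML parser.
function parseFrontmatter(markdown) {
  const match = markdown.match(/^---\r?\n([\s\S]*?)\r?\n---/);
  if (!match) return {};
  const data = {};
  for (const line of match[1].split(/\r?\n/)) {
    const sep = line.indexOf(":");
    if (sep === -1) continue; // skip blank or malformed lines
    const key = line.slice(0, sep).trim();
    const raw = line.slice(sep + 1).trim();
    if (raw.startsWith("[") && raw.endsWith("]")) {
      // Inline list: split on commas and drop empty entries.
      data[key] = raw.slice(1, -1).split(",").map((s) => s.trim()).filter(Boolean);
    } else {
      data[key] = raw;
    }
  }
  return data;
}

const example = `---
type: generation
category: blog-article
tags: [seo, long-form, tutorial]
models: [gpt-4, claude]
status: validated
modified: 2025-03-20
---
# SEO Long-Tail Article`;

console.log(parseFrontmatter(example).tags); // [ 'seo', 'long-form', 'tutorial' ]
```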

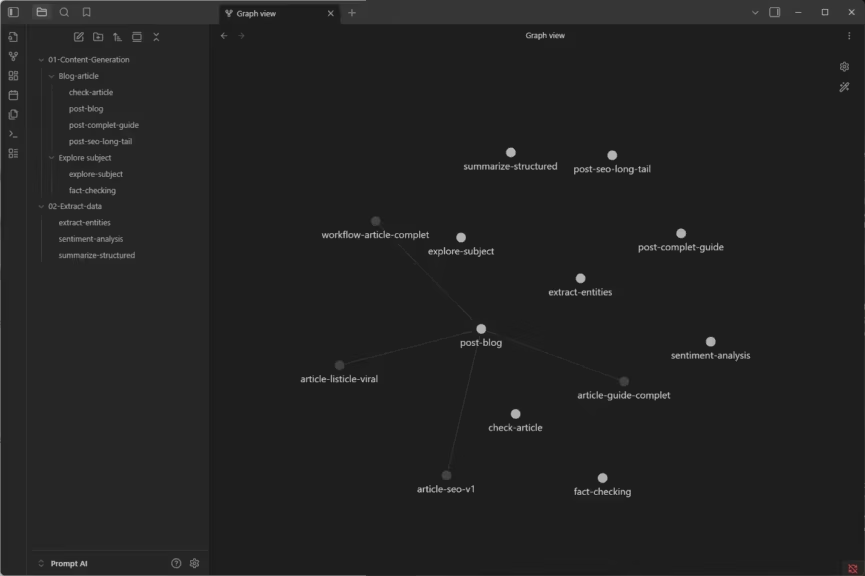

Using tags and links for advanced navigation

The real power of Obsidian in AI prompt management emerges when you fully leverage tags and wikilinks. Unlike rigid folder structures, these meta-structures create a multidimensional knowledge graph.

Tagging strategy:

tags: [generation, marketing, seo, long-form, chatgpt, claude, validated]

In frontmatter, list tags without the # sign (in YAML, a # preceded by a space starts a comment). You can then search for prompts with tag:#marketing tag:#seo, or display only validated ChatGPT prompts with tag:#validated tag:#chatgpt. Dataview even allows dynamic tables, written inside a code block whose language is dataview:

TABLE category, models, status

FROM #generation AND #marketing

WHERE status = "validated"

SORT modified DESC

This query displays all validated marketing generation prompts, sorted by last modified date. The view updates automatically with each edit or addition.
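To make the query's logic explicit, here is the same selection written as plain JavaScript over an in-memory list of notes: both the `generation` and `marketing` tags are required, `status` must be `validated`, and the newest `modified` date comes first. This is only an illustration of what the query selects, not how Dataview works internally; the note objects and their values are hypothetical.

```javascript
// Illustration of the Dataview query's logic on hypothetical note objects.
function validatedMarketingPrompts(notes) {
  return notes
    .filter((n) => n.tags.includes("generation") && n.tags.includes("marketing"))
    .filter((n) => n.status === "validated")
    // ISO dates (YYYY-MM-DD) sort correctly as strings; newest first.
    .sort((a, b) => b.modified.localeCompare(a.modified))
    // Keep only the columns the TABLE clause displays (plus the note name).
    .map(({ name, category, models, status }) => ({ name, category, models, status }));
}

const notes = [
  { name: "seo-long-tail-article", tags: ["generation", "marketing", "seo"], category: "blog-article", models: ["gpt-4", "claude"], status: "validated", modified: "2025-03-20" },
  { name: "weekly-newsletter", tags: ["generation", "marketing"], category: "email", models: ["claude"], status: "validated", modified: "2025-04-02" },
  { name: "viral-listicle-article", tags: ["generation", "marketing"], category: "blog-article", models: ["gpt-4"], status: "draft", modified: "2025-05-01" },
  { name: "sentiment-analysis", tags: ["extraction"], category: "analysis", models: ["claude"], status: "validated", modified: "2025-02-11" },
];

console.log(validatedMarketingPrompts(notes).map((n) => n.name));
// [ 'weekly-newsletter', 'seo-long-tail-article' ]
```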

Link networks:

Connect prompts with wikilinks [[filename]] to create semantic associations:

- Similar prompts: See also: [[complete-guide-article]]

- Component prompts: Uses tone from [[professional-friendly-tone]]

- Workflows: Included in [[full-article-workflow]]

Obsidian’s graph view will then reveal hidden structures in your AI prompt library.

Creating MOCs (Maps of Content) for thematic navigation

For large libraries (100+ prompts), create MOCs (Maps of Content) that serve as thematic indexes. Example: a file _MOC-Marketing.md listing all marketing prompts with descriptions:

# 🎯 MOC: Marketing Prompts

## Content generation

- [[seo-long-tail-article]] – SEO-optimized blog posts

- [[linkedin-thought-leadership]] – LinkedIn thought leadership posts

- [[weekly-newsletter]] – Engaging newsletters

## Analysis

- [[sentiment-analysis-feedback]] – Extract customer sentiments

- [[customer-feedback-categorization]] – Classify feedback by theme

## Advertising

- [[ad-facebook-conversions]] – Facebook ads optimized for conversion

These MOCs act as navigation hubs, far more efficient than simple folders once your library scales.

Is there an Obsidian plugin to auto-generate MOCs from tags?

Yes, there is a community plugin dedicated to automatic Maps of Content (MOC) management: AutoMOC.

This plugin automatically imports tags, backlinks, and aliases into a note, keeping lists of related notes up to date without manual editing. It is free and distributed under GPL-3.0 (Obsidian Forum, Reddit).

Additionally, some users build MOCs with Dataview queries, while the Auto Classifier plugin uses the ChatGPT API to automatically generate tags.

In summary, AutoMOC is the most direct way to auto-generate or maintain MOCs from tags in Obsidian, with Dataview queries as a more customizable alternative.
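Whichever plugin you use, generating a MOC boils down to grouping notes by tag and printing one wikilink per note. A minimal sketch of that grouping in plain JavaScript, assuming you already have each note's name, tags, and a one-line description (all hypothetical here); the output is Markdown in the same shape as the _MOC-Marketing.md example, and `buildMoc` is an illustrative name.

```javascript
// Minimal sketch: build a Map of Content (Markdown) grouped by section tag.
// `sections` maps a tag to a heading; notes without a matching tag are skipped.
function buildMoc(title, sections, notes) {
  const lines = [`# ${title}`, ""];
  for (const [tag, heading] of Object.entries(sections)) {
    const matching = notes
      .filter((n) => n.tags.includes(tag))
      .sort((a, b) => a.name.localeCompare(b.name));
    if (matching.length === 0) continue; // omit empty sections
    lines.push(`## ${heading}`, "");
    for (const n of matching) lines.push(`- [[${n.name}]] – ${n.description}`);
    lines.push("");
  }
  return lines.join("\n");
}

const moc = buildMoc(
  "🎯 MOC: Marketing Prompts",
  { generation: "Content generation", analysis: "Analysis" },
  [
    { name: "weekly-newsletter", tags: ["generation"], description: "Engaging newsletters" },
    { name: "seo-long-tail-article", tags: ["generation"], description: "SEO-optimized blog posts" },
    { name: "customer-feedback-categorization", tags: ["analysis"], description: "Classify feedback by theme" },
  ],
);
console.log(moc);
```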

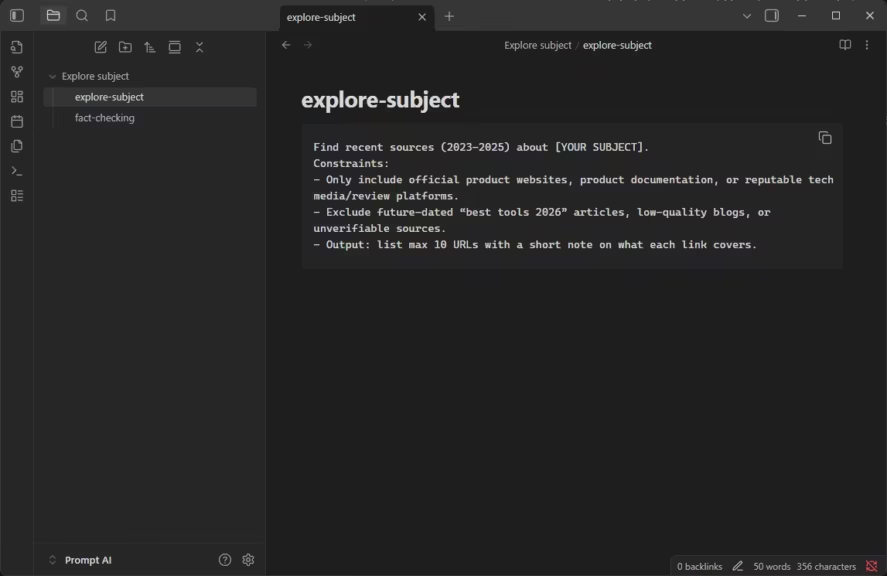

Using the Templater plugin for dynamic variables

Installing and configuring Templater

Now for the serious part: turning static prompts into dynamic templates with Templater. This plugin is the cornerstone for automating variable substitution in your Obsidian AI prompt library.

How to install Templater:

- In Obsidian, open Settings → Community plugins

- Turn on community plugins (turn off Restricted mode) if you have not already done so

- Click “Browse” and search for Templater

- Install and enable the plugin

- In Templater settings, define your templates folder (e.g. Templates/)

Recommended settings:

- Template folder location: Templates/ (where your generic templates are stored)

- Trigger Templater on new file creation: enabled (applies a template automatically)

- Enable folder templates: enabled (default templates by folder)

- Syntax highlighting: enabled (color-coded Templater commands)

Basic syntax for Templater variables

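Templater uses a simple but powerful syntax: <% %> outputs the result of the expression inside it, and <%* %> runs JavaScript without outputting anything by default (inside it, any text you append to tR is written to the note). The - and _ modifiers, as in -%>, trim the surrounding line breaks or whitespace.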

User input variables:

<%*

const topic = await tp.system.prompt("Article topic");

const length = await tp.system.prompt("Word count");

const tone = await tp.system.prompt("Tone (professional/casual/etc.)");

-%>

# Article about <% topic %>

Write an article of <% length %> words on the following subject: **<% topic %>**

Tone: <% tone %>

...

When you create a new file from this template, Templater shows one pop-up per input and fills in the values automatically.

System variables:

---

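created: <% tp.file.creation_date("YYYY-MM-DD") %>

modified: <% tp.file.last_modified_date("YYYY-MM-DD") %>

author: Your Name

---

These commands fill in the file's creation and last modification dates when the template runs; they are not refreshed afterwards. Templater has no built-in username variable (tp.user is reserved for your own user scripts and system commands), so type the author name directly or provide it through a user script.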

Dynamic file variables:

# <% tp.file.title %>

This prompt is stored in: <% tp.file.folder() %>

The title and folder are read from the file's name and location when the template runs; they do not update if you later rename or move the file.

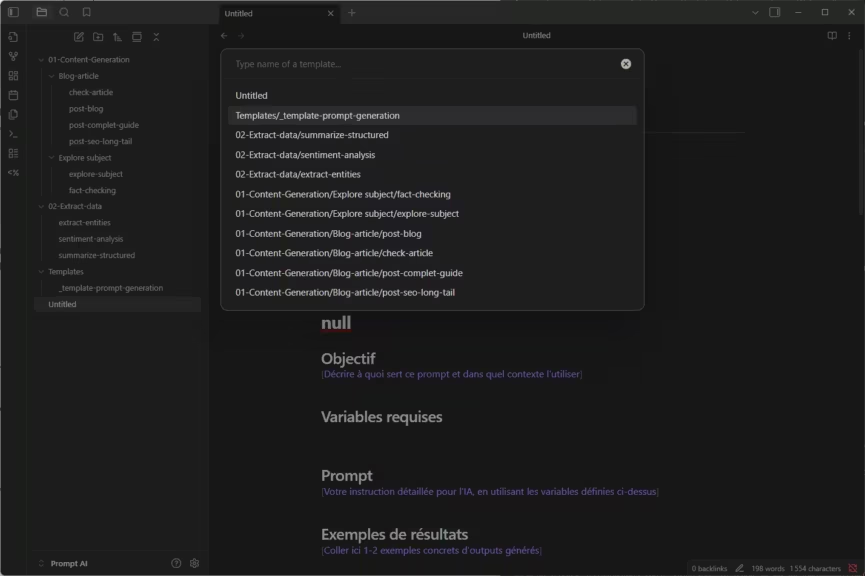

Building a prompt template with Templater

Let’s create a full AI generation prompt template with Templater. This will be the base for quickly generating structured prompts.

File Templates/_template-prompt-generation.md:

<%*

const promptName = await tp.system.prompt("Prompt name");

const category = await tp.system.suggester(

["Blog Article", "Social Media", "Email", "Advertising", "Other"],

["blog-article", "social-media", "email", "advertising", "other"]

);

const models = await tp.system.prompt("AI models tested (comma separated)");

const complexity = await tp.system.suggester(

["Simple", "Intermediate", "Advanced"],

["simple", "intermediate", "advanced"]

);

%>

---

type: generation

category: <% category %>

tags: [generation, <% category %>, draft]

models: [<% models %>]

complexity: <% complexity %>

status: draft

created: <% tp.file.creation_date("YYYY-MM-DD") %>

modified: <% tp.file.last_modified_date("YYYY-MM-DD") %>

---

# <% promptName %>

## Objective

[Describe what this prompt does and in which context to use it]

## Required variables

<%*

const varCount = await tp.system.prompt("How many variables?", "3");

for (let i = 1; i <= parseInt(varCount, 10); i++) {

const varName = await tp.system.prompt(`Variable ${i} - name`);

const varDesc = await tp.system.prompt(`Variable ${i} - description`);

tR += `- {{${varName}}}: ${varDesc}\n`;

}

%>

## Prompt

[Detailed instruction for the AI using the defined variables]

## Sample outputs

[Paste 1-2 concrete output examples here]

## Notes and optimizations

- Recommended parameters: temperature X, max tokens Y

- Works better on [model]

- Limitations: [list issues]

## Related links

- [[]] – similar prompts

- [[]] – workflows including this prompt

This Templater template is interactive: it asks questions when you create a new file, generates YAML frontmatter with metadata, and even loops through multiple variables.

To use it:

- Open a new file (this will be the target)

- Click the Templater icon in the left ribbon (or open Templater's insert-template modal from the command palette)

- Select the template (Templates/_template-prompt-generation)

- Fill in the fields

- The base structure appears, ready for final details
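Templater also supports user scripts: you choose a script folder in Templater's settings, and each .js file in it that exports a function through module.exports can be called as tp.user.<file name>(). As an illustration, assuming a hypothetical Scripts/slugify.js, the function below turns a prompt name into the kebab-case file names used in the folder tree; after asking for the name, the template could then call await tp.file.rename(tp.user.slugify(promptName)). Treat it as a sketch rather than a required part of the setup.

```javascript
// Hypothetical Templater user script, e.g. saved as Scripts/slugify.js.
// Converts a human-readable prompt name into a kebab-case file name.
function slugify(name) {
  return name
    .normalize("NFD")                 // split accented letters into base + mark
    .replace(/[\u0300-\u036f]/g, "")  // drop the combining marks (é -> e)
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")      // any run of other characters becomes "-"
    .replace(/^-+|-+$/g, "");         // trim leading/trailing dashes
}

// Templater loads user scripts as CommonJS modules; the guard keeps the
// file runnable in other environments too.
if (typeof module !== "undefined") module.exports = slugify;

console.log(slugify("SEO Long-Tail Article")); // seo-long-tail-article
```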

Automating variable substitution in prompts

Once your prompts contain variables like {{variable_name}}, you can use Templater to replace them instantly before sending to your AI.

Replacement command template:

File Templates/_fill-variables.md:

<%*

let content = tp.file.content;

// Find all unique variables written as {{name}}

const names = [...content.matchAll(/\{\{([^{}]+)\}\}/g)].map(m => m[1]);

const uniqueVars = [...new Set(names)];

// Ask for each value; cancelling a prompt leaves that placeholder unchanged

for (const varName of uniqueVars) {

const value = await tp.system.prompt(`Value for {{${varName}}}`);

if (value == null) continue;

// split/join replaces every occurrence literally, so no regex escaping is needed

content = content.split(`{{${varName}}}`).join(value);

}

// Create the filled copy in the same folder as the original prompt

const newFile = tp.file.title + "-filled";

await tp.file.create_new(content, newFile, false, tp.file.folder(true));

// Confirmation text inserted at the cursor

tR += `Variables replaced! New file created: ${newFile}`;

%>

How to use it:

- Open a prompt with variables {{xxx}}

- Run Templater command → Insert template → _fill-variables

- Enter values in the pop-ups

- A new file with substituted variables is generated

- Copy-paste the finalized prompt into ChatGPT or Claude

This semi-automated workflow saves significant time compared to manually searching and replacing variables.
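The core of the replacement template is ordinary string processing, so it can be tested outside Obsidian. The sketch below isolates it as two plain functions, assuming the {{name}} placeholder convention used throughout this guide; findVariables and fillVariables are hypothetical names, not part of Templater. Using split/join instead of a dynamically built RegExp means names and values containing characters such as ., ( or $ are treated literally.

```javascript
// Returns the unique {{placeholder}} names in order of first appearance.
function findVariables(text) {
  const names = [...text.matchAll(/\{\{([^{}]+)\}\}/g)].map((m) => m[1]);
  return [...new Set(names)];
}

// Replaces every {{name}} with values[name]; unknown placeholders are left as-is.
function fillVariables(text, values) {
  let result = text;
  for (const [name, value] of Object.entries(values)) {
    // split/join replaces all occurrences without regex escaping issues.
    result = result.split(`{{${name}}}`).join(String(value));
  }
  return result;
}

const template = "Write about {{main_keyword}} in a {{tone}} tone. Repeat {{main_keyword}}.";
console.log(findVariables(template)); // [ 'main_keyword', 'tone' ]
console.log(fillVariables(template, { main_keyword: "Obsidian", tone: "friendly" }));
// Write about Obsidian in a friendly tone. Repeat Obsidian.
```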

Creating reusable snippets with Templater

For repeated elements across multiple prompts (format rules, sample structures, constraints), create reusable Templater snippets.

Example snippet for JSON format:

File Templates/_snippet-json-format.md:

The output must be valid JSON structured like this:

```json
{
  "result": "...",
  "confidence": 0.95,
  "metadata": {
    "model": "...",
    "timestamp": "..."
  }
}
```

Strictly follow this format for easier parsing.

In your prompts, include it with:

```markdown
<% tp.file.include("[[_snippet-json-format]]") %>
```

This modular approach avoids duplication and centralizes reusable instructions. Update the snippet once, and every template that includes it picks up the new version the next time it runs; text already inserted into existing notes stays as it was.
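Since the point of the JSON snippet is easy parsing, it is worth checking model replies against it before using them downstream. A minimal sketch, assuming the shape shown in the snippet (a string result, a metadata object with string model and timestamp) and assuming, as an interpretation of the 0.95 example, that confidence is a number between 0 and 1; the function name and error messages are illustrative.

```javascript
// Parses a model reply and checks it against the snippet's JSON shape.
// Returns the parsed object, or throws an Error describing the first problem.
function parseModelOutput(reply) {
  // Models sometimes wrap JSON in a Markdown code fence; strip it if present.
  const cleaned = reply.trim().replace(/^`{3}(?:json)?\s*/, "").replace(/\s*`{3}$/, "");
  const data = JSON.parse(cleaned); // throws SyntaxError on invalid JSON
  if (typeof data.result !== "string") throw new Error("result must be a string");
  if (typeof data.confidence !== "number" || data.confidence < 0 || data.confidence > 1) {
    throw new Error("confidence must be a number between 0 and 1");
  }
  const meta = data.metadata;
  if (!meta || typeof meta.model !== "string" || typeof meta.timestamp !== "string") {
    throw new Error("metadata.model and metadata.timestamp must be strings");
  }
  return data;
}

const reply = '{"result": "ok", "confidence": 0.95, "metadata": {"model": "gpt-4", "timestamp": "2025-03-20T10:00:00Z"}}';
console.log(parseModelOutput(reply).confidence); // 0.95
```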

Automating prompt workflows with Templater and scripts

Creating a simple multi-prompt workflow

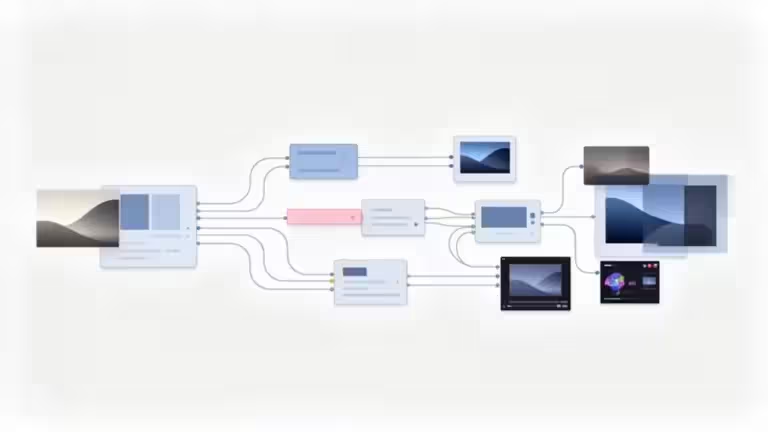

One advanced use case for organizing AI prompts with Obsidian is chaining multiple prompts together to complete a complex task. For example, writing a full article often requires:

- Keyword research

- Structured outline generation

- Section-by-section drafting

- Final review and optimization

Instead of manually running these four prompts, you can build a workflow template that generates the full sequence at once.

File Workflows/full-article-workflow.md:

<%*

// Full article creation workflow

const topic = await tp.system.prompt("Article topic");

const targetLength = await tp.system.prompt("Target word count", "2000");

// Step 1: Keyword research

tR += "# 🔍 STEP 1: SEO Keyword Research\n\n";

tR += await tp.file.include("[[keyword-research]]");

tR += `\n\n**Topic**: ${topic}\n\n`;

tR += "---\n\n";

// Step 2: Outline generation

tR += "# 📋 STEP 2: Outline Generation\n\n";

tR += await tp.file.include("[[article-outline]]");

tR += `\n\n**Topic**: ${topic}\n`;

tR += `**Length**: ${targetLength} words\n`;

tR += `**Keywords**: [results from step 1]\n\n`;

tR += "---\n\n";

// Step 3: Drafting

tR += "# ✍️ STEP 3: Drafting\n\n";

tR += await tp.file.include("[[article-drafting]]");

tR += `\n\n**Outline**: [copy outline from step 2]\n\n`;

tR += "---\n\n";

// Step 4: Review

tR += "# 🔍 STEP 4: Review and Optimization\n\n";

tR += await tp.file.include("[[seo-review]]");

tR += `\n\n**Article**: [copy article from step 3]\n\n`;

%>

This workflow generates a file containing the four prompts in sequence. Because tp.file.include expects a link such as "[[keyword-research]]", the four step notes (keyword-research, article-outline, article-drafting, seo-review) must exist in your vault. You then run each step in your AI tool, feeding the output of one step into the next.

It is a semi-automated workflow: Obsidian prepares everything, but you remain in control of execution. This is ideal for balancing control with productivity.
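Stripped of the Templater calls, the workflow template is just string assembly: a heading, the step's prompt, its inputs, then a separator. The sketch below expresses that structure as a plain function so the step order and separators are easy to check; the step titles mirror the template, while the body strings are placeholders standing in for what tp.file.include would pull from the step notes. buildWorkflow is a hypothetical name.

```javascript
// Assembles a multi-step prompt document: heading, step prompt, inputs, separator.
function buildWorkflow(steps) {
  return steps
    .map((step, i) => {
      const inputs = Object.entries(step.inputs)
        .map(([label, value]) => `**${label}**: ${value}`)
        .join("\n");
      return `# STEP ${i + 1}: ${step.title}\n\n${step.body}\n\n${inputs}\n`;
    })
    .join("\n---\n\n");
}

const topic = "Organize AI prompts with Obsidian";
const doc = buildWorkflow([
  { title: "SEO Keyword Research", body: "[keyword research prompt]", inputs: { Topic: topic } },
  { title: "Outline Generation", body: "[outline prompt]", inputs: { Topic: topic, Length: "2000 words", Keywords: "[results from step 1]" } },
  { title: "Drafting", body: "[drafting prompt]", inputs: { Outline: "[copy outline from step 2]" } },
  { title: "Review and Optimization", body: "[review prompt]", inputs: { Article: "[copy article from step 3]" } },
]);
console.log(doc);
```

Keeping each step as data makes it easy to add, remove, or reorder steps without touching the assembly logic.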

Using the Buttons plugin for quick execution

The Buttons plugin for Obsidian allows you to add clickable buttons inside notes that trigger Templater commands. Very useful for executing AI prompt workflows instantly.

Installation:

- Go to Settings → Community plugins → Browse and search for Buttons

- Install and enable the plugin

Example usage:

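Inside your prompt file, add a code block whose language is button, containing:

name Generate filled prompt

type command

action Templater: Insert Template

templater true

Then add an anchor line directly below the block:

^button-generate

This anchor lets you reference the button in other notes using syntax like: ![[Note-Name#^button-generate]]. The action value must match the command name exactly as it appears in Obsidian's command palette, so check the wording of the Templater command in your installed version.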

Clicking the button executes Templater, fills variables, and generates the final prompt. No menu navigation required.

Workflow example:

name 🚀 Launch full article workflow

type command

action Templater: Create new note from template

templater true

^button-workflow-article

One click, and your multi-prompt workflow is generated in a new file, ready to use.

Writing advanced automations with JavaScript

For technical users, Templater allows custom JavaScript code inside templates. This unlocks near-infinite possibilities.

Example: automatically copy prompt to clipboard:

<%*

// Generate prompt with filled variables

const subject = await tp.system.prompt("Subject");

const prompt = `Write a complete article on ${subject}...`;

// Copy to clipboard (tp.system.clipboard() only reads the clipboard, so use the Clipboard API to write)

await navigator.clipboard.writeText(prompt);

// Confirmation

tR += "✅ Prompt generated and copied! Paste it into ChatGPT or Claude.";

%>

You run the template, answer the question, and the finalized prompt is instantly copied to your clipboard, ready to paste into your AI tool.

Example: log prompt usage:

<%*

// Log usage in a dedicated file

const logFile = "Logs/prompts-usage.md";

const timestamp = tp.date.now("YYYY-MM-DD HH:mm");

const promptName = tp.file.title;

const logEntry = `- ${timestamp}: Used [[${promptName}]]\n`;

// Append to the log (create Logs/prompts-usage.md before the first run)

await app.vault.adapter.append(logFile, logEntry);

tR += `📘 Log updated: ${logFile}`;

%>

Every time this template runs inside a prompt note, a log entry is added. This makes it easy to identify your most used templates and optimize your library.
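To turn that log into a ranking of your most used prompts, you can count its entries. A minimal sketch, assuming each line keeps the "- YYYY-MM-DD HH:mm: Used [[name]]" format written by the logging template; inside Obsidian you could read the file with await app.vault.adapter.read("Logs/prompts-usage.md") and pass the text to this function. countPromptUsage is a hypothetical name.

```javascript
// Counts "Used [[name]]" entries per prompt and returns [name, count] pairs,
// most used first (ties sorted alphabetically).
function countPromptUsage(logText) {
  const counts = new Map();
  for (const match of logText.matchAll(/Used \[\[([^\]]+)\]\]/g)) {
    const name = match[1];
    counts.set(name, (counts.get(name) || 0) + 1);
  }
  return [...counts.entries()].sort((a, b) => b[1] - a[1] || a[0].localeCompare(b[0]));
}

const log = [
  "- 2025-03-20 09:12: Used [[seo-long-tail-article]]",
  "- 2025-03-20 14:40: Used [[weekly-newsletter]]",
  "- 2025-03-21 10:05: Used [[seo-long-tail-article]]",
].join("\n");

console.log(JSON.stringify(countPromptUsage(log)));
// [["seo-long-tail-article",2],["weekly-newsletter",1]]
```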

Connecting Obsidian to external APIs (advanced)

For power users, Templater's JavaScript commands run inside Obsidian, so in principle they can interact with external APIs. In theory, you could connect Obsidian directly to the OpenAI or Anthropic APIs to run prompts without leaving Obsidian.

Conceptual example:

<%*

// ⚠️ Educational example: Templater has no built-in OpenAI or Anthropic integration

// This only prints a typical API request structure; nothing is sent

const prompt = "Summarize this text: ...";

const request = {

method: "POST",

url: "https://api.openai.com/v1/chat/completions",

headers: {

Authorization: "Bearer YOUR_API_KEY",

"Content-Type": "application/json"

},

body: {

model: "gpt-4",

messages: [{ role: "user", content: prompt }]

}

};

tR += "Example OpenAI API request:\n\n";

tR += "```json\n" + JSON.stringify(request, null, 2) + "\n```";

%>

Warning: This raises security and stability issues (API key storage, execution limits). For most users, the manual workflow (prepare the prompt in Obsidian, paste it into the AI interface) remains safer and more flexible.

Advantages and limitations of Obsidian for AI prompts

Key strengths of Obsidian and Templater

Full control and privacy: Your prompts remain entirely on your machine, encrypted if you wish, and backed up according to your own strategy. No cloud platform offers this level of sovereignty.

Completely free: Zero cost, compared with PromptLayer ($50/month per user) or OpenPrompt, which only allows 2,000 free requests per month.

Flexibility and extensibility: The plugin ecosystem is vast and adaptable. Need a feature? A plugin probably exists, or you can code your own.

Markdown durability: Your .md files will still be readable in ten years, regardless of Obsidian’s fate. This independence from proprietary formats ensures long-term sustainability.

Custom workflows: With Templater, Buttons, Dataview, and some scripts, you can build powerful automation that commercial platforms rarely offer.

Professional version control with Git: The Git plugin turns your vault into a versioned repository with history, branches, and merges. Notion and closed WYSIWYG tools offer no equivalent to branches and merges.

Known limitations

Steeper learning curve: Unlike Notion, which is beginner-friendly, Obsidian with Templater requires learning Markdown, plugins, and JavaScript basics. Non-technical users may struggle.

Time-consuming initial setup: Between installing plugins, configuring Templater, and creating your first templates, expect to spend half a day on a proper setup. Ready-to-use platforms like PromptHub are faster to get started with.

No built-in AI execution: Core Obsidian does not connect to ChatGPT or Claude, so you copy-paste prompts manually. There are no built-in usage logs, performance metrics, or A/B testing like you get in platforms such as PromptLayer.

Limited collaboration: Teamwork in Obsidian requires Git (technical) or Obsidian Sync (paid). Real-time collaboration like in Notion is not possible. For non-technical teams, this is a major drawback.

No analytics or tracking: You cannot automatically measure which prompts perform best, how many tokens they consume, or their success rate. Dedicated prompt management tools do provide such analytics.

Semi-manual workflows: Even with Templater, workflows remain semi-automated. If you want fully automated branching workflows, you need frameworks like LangChain or other orchestration tools.

Conclusion: Obsidian, the choice for independent power users

Organizing AI prompts with Obsidian and Templater is the perfect balance for users who value control, flexibility, and sustainability over turnkey simplicity. The initial investment in setup may be higher, but the long-term benefits are substantial.

You build a truly sustainable AI prompt library: Markdown files you fully own, always accessible, version-controlled like code with Git. Automations with Templater, Buttons, and JavaScript scripts enable workflows perfectly tailored to your style of work.

Obsidian is ideal if you:

- Prefer local storage and data privacy

- Are comfortable with Markdown and technical tools

- Want deep customization of your workflow

- Work mostly solo or in a small technical team

- Need a free, long-term solution

Obsidian shows its limits if you:

- Need a ready-to-use tool with no setup

- Require real-time collaboration with non-technical teams

- Want automatic execution and logging of prompts

- Prefer a polished graphical interface over text files

For these use cases, check our other guides on complementary solutions:

- Organize AI prompts with Notion for a collaborative and accessible solution

- Organize AI prompts with VS Code for a developer-focused workflow with snippets

- Compare AI prompt management tools to choose the best tool for your profile

If Obsidian’s approach resonates with you, start now: install Obsidian, add the Templater plugin, set up your first folder structure. You will quickly discover the power of a local AI prompt management system where you set the rules.

Your comments enrich our articles, so don’t hesitate to share your thoughts! Sharing on social media helps us a lot. Thank you for your support!