Prompty: Automate and organize your AI prompts in VS Code

In an ecosystem where artificial intelligence is now part of every stage of software creation and development, prompt management quickly becomes a strategic issue. With the rise of large language models (LLMs) like GPT, Claude, Mistral, or Llama, developers often juggle dozens of scattered prompts stored in files, scripts, or notes. The result is a loss of consistency, difficulty testing, and the inability to efficiently automate AI workflows. For a broader overview of prompt organization methods and tools, see our article Organize AI Prompts: Complete Guide to Solutions in 2025.

This is where Prompty, an open source initiative from Microsoft, provides an elegant solution. Integrated into Visual Studio Code, this tool transforms prompt management into a clear, versioned, and reproducible process. It uses a standardized format that encapsulates the text, inputs, and model configuration in a single .prompty file. As the official documentation on GitHub explains, Prompty was designed to “improve the portability, traceability, and understanding of prompts.”

Beyond a simple text generator, Prompty is positioned as the first step toward AI workflow automation. By structuring prompts, it lays the foundation for integration into orchestration frameworks such as LangChain, Prompt Flow, or Semantic Kernel, where every element of the pipeline becomes interconnected and reproducible.

What is Prompty? A new format for organizing AI prompts

Prompty is both a standardized format and a Visual Studio Code extension designed to structure and execute prompts for language models. A .prompty file contains all the necessary elements of a prompt: the text, inputs, model configuration (OpenAI, Ollama, Azure OpenAI, etc.), and input examples.

This format is based on a clear YAML structure, making it readable, traceable, and easy to version-control. As noted on the official Microsoft GitHub page, the goal is to “standardize prompts and their execution in a single artifact to improve observability and portability.”
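To make the file layout concrete, here is a minimal sketch of how a .prompty file could be separated into its YAML header and its prompt body. It assumes the header is delimited by two `---` lines, as in the example later in this article. The function name `split_prompty` is hypothetical and is not part of the Prompty tooling.

```python
def split_prompty(text: str) -> tuple[str, str]:
    """Split .prompty text into (YAML header, prompt body)."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        raise ValueError("expected the file to start with a '---' line")
    # Look for the second '---' line, which closes the header.
    for index in range(1, len(lines)):
        if lines[index].strip() == "---":
            header = "\n".join(lines[1:index])
            body = "\n".join(lines[index + 1:]).strip()
            return header, body
    raise ValueError("missing the closing '---' line")


EXAMPLE = """---
name: Thematic News Snapshot
model:
  api: chat
---
system:
You are a research assistant specialized in {{theme}}.
"""

header, body = split_prompty(EXAMPLE)
print(header)
print(body)
```

The header can then be handed to any YAML parser, while the body stays a plain template string.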

In practice, Prompty turns a prompt, once just an experimental string of text, into a true software component. It allows AI teams to centralize, reuse, and share their prompts while maintaining consistency. Compatible with various LLM endpoints (OpenAI, Anthropic, Mistral, Ollama, Hugging Face, etc.), it enables testing the same prompt across multiple AI models without changing its structure.

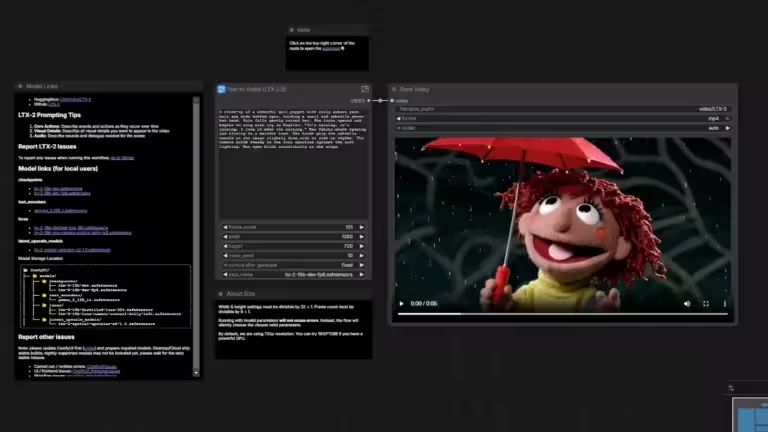

Prompty in VS Code: centralize and test your AI prompts

One of Prompty’s major strengths is its seamless integration with Visual Studio Code, already a familiar environment for most AI developers. Once installed from the official VS Code Marketplace, you can create a new .prompty file in one click using “New Prompty.”

What sets Prompty apart from a simple text editor is its Preview as Markdown feature: it displays a live preview of the rendered prompt with replaced inputs, without running any model. This allows you to refine the phrasing, verify tone consistency, and test the structure before sending it to an LLM.
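As a rough illustration of what such a preview does, the sketch below replaces `{{name}}` placeholders with values and calls no model. It is not Prompty's implementation: it handles only simple placeholders, and `render_preview` is a hypothetical helper.

```python
import re

# Matches simple placeholders such as {{theme}} or {{ theme }}.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_preview(template: str, values: dict[str, str]) -> str:
    """Return the template with known placeholders replaced."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        # Unknown placeholders stay visible so missing inputs are easy to spot.
        return str(values.get(name, match.group(0)))

    return PLACEHOLDER.sub(substitute, template)


template = "Summarize {{theme}} news in a {{tone}} tone for {{audience}}."
preview = render_preview(template, {"theme": "AI", "tone": "journalistic"})
print(preview)
```

Leaving unresolved placeholders in the output is a deliberate choice here: it makes a forgotten input obvious before anything is sent to a model.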

Pressing F5 executes the prompt directly through any configured model: OpenAI, Azure, Mistral, or Ollama for local use. This flexibility lets developers compare the behavior of the same prompt across different AI engines while keeping context and parameters traceable.

Finally, Prompty acts as an AI prompt library manager: each file can be stored, versioned, and organized within a dedicated workspace (text generation, analysis, summarization, fact-checking…). For developers and content creators whose prompts are scattered, this centralization provides a significant gain in productivity and clarity.

Why Prompty is the first step toward automated AI workflows

Before automating an artificial intelligence workflow, you must stabilize its building blocks: the prompts. In an AI pipeline, prompts define logic, inputs, and model behavior. Without a clear structure, orchestrating a set of agents or tasks efficiently is impossible.

This is where Prompty plays a central role. It transforms each prompt into a standardized and versioned component, ready for integration into an automated system. As explained in the Prompty documentation, the tool aligns with modern orchestration frameworks like LangChain, LangGraph, Prompt Flow, and Semantic Kernel.

For example, a developer creates a news summary prompt in Prompty, tests it locally, validates the output, then integrates it directly into a Prompt Flow pipeline or a LangChain chain without rewriting anything. The same .prompty file becomes a reusable module within a complete AI pipeline.

This standardization simplifies maintenance and traceability. If a prompt evolves, updating a single file is enough: every orchestrator that loads that file (LangChain, LangGraph, Prompt Flow, Semantic Kernel) picks up the new version the next time it runs, which reduces the risk of inconsistencies between testing and production environments.

Practical guide: create, test, and automate your prompts with Prompty

1. Prepare your environment

Create a working folder, for example: C:\Project\Prompt-Engineering\VS-Code-Prompty

Open it in VS Code, install the Prompty extension, then create a VS-Code-Prompty.code-workspace file with the following configuration:

{
  "folders": [
    {
      "path": "."
    }
  ],
  "settings": {
    "prompty.modelConfigurations": [
      {
        "name": "mock-local",
        "type": "openai",
        "base_url": "http://localhost:0/v1",
        "api_key": "mock",
        "description": "Mock model for local preview only (no external call)"
      },
      {
        "name": "openai-cloud",
        "type": "openai",
        "base_url": "https://api.openai.com/v1",
        "model": "gpt-4o",
        "api_key": "${env:OPENAI_API_KEY}",
        "description": "Live OpenAI model GPT-4o"
      },
      {
        "name": "azure-openai",
        "type": "azure_openai",
        "api_version": "2024-10-21",
        "azure_endpoint": "${env:AZURE_OPENAI_ENDPOINT}",
        "azure_deployment": "gpt-4o-deployment",
        "api_key": "${env:AZURE_OPENAI_API_KEY}",
        "description": "Azure OpenAI GPT-4o deployment"
      }
    ],
    "prompty.defaultModel": "mock-local"
  }
}

The mock-local configuration points at a placeholder local address, so no external call is made: you can press F5 without connecting to a real model, or use Preview as Markdown to view and copy the final prompt. The openai-cloud and azure-openai sections are examples you can modify or remove as needed.
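A workspace file like this is easy to break with a typo in the default model name. The sketch below checks that the default matches one of the configured entries. It assumes the workspace file is plain JSON without comments and reuses the setting keys shown above. `check_default_model` is a hypothetical helper, not something the extension provides.

```python
import json

# Trimmed-down copy of the workspace settings, for illustration only.
WORKSPACE_JSON = """
{
  "settings": {
    "prompty.modelConfigurations": [
      {"name": "mock-local", "type": "openai"},
      {"name": "openai-cloud", "type": "openai"}
    ],
    "prompty.defaultModel": "mock-local"
  }
}
"""


def check_default_model(workspace_text: str) -> str:
    """Return the default model name, or raise if it is not configured."""
    settings = json.loads(workspace_text).get("settings", {})
    names = [cfg["name"] for cfg in settings.get("prompty.modelConfigurations", [])]
    default = settings.get("prompty.defaultModel")
    if default not in names:
        raise ValueError(f"default model {default!r} is not among {names}")
    return default


print(check_default_model(WORKSPACE_JSON))
```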

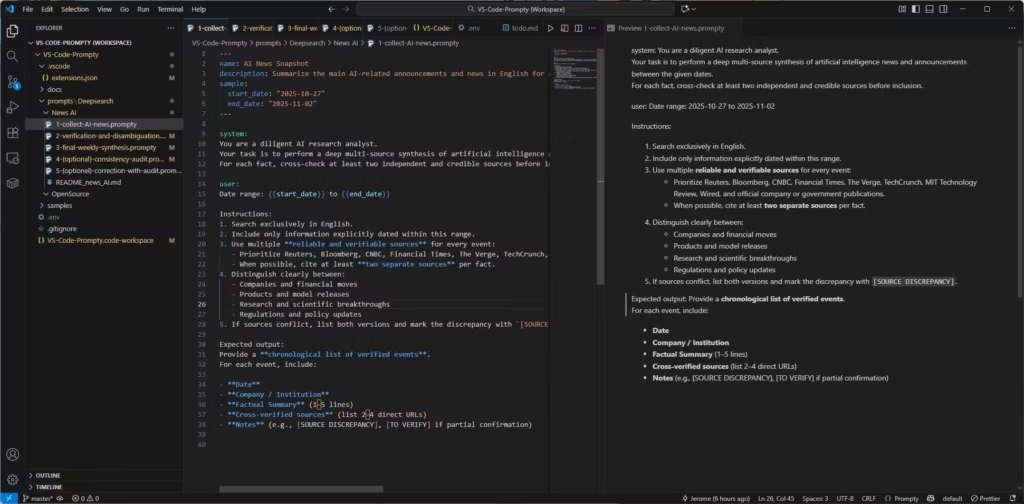

2. Create a prompt template

Create a file called 1-collect-AI-news.prompty:

---
$schema: http://azureml/sdk-2-0/Prompty.yaml
name: Thematic News Snapshot
description: Summarize key announcements and developments on a given theme between two dates.
version: "1.0"
model:
  api: chat
  configuration:
    type: openai
    name: gpt-5.1
inputs:
  start_date:
    type: string
    description: Start date of the analysis period (ISO format, e.g. "2025-10-01")
  end_date:
    type: string
    description: End date of the analysis period (ISO format, e.g. "2025-10-07")
  theme:
    type: string
    description: Main topic or domain to summarize (e.g., AI, climate tech, cybersecurity)
  tone:
    type: string
    description: Desired tone (e.g., journalistic, academic, analytical)
  language:
    type: string
    description: Output language (e.g., English, French)
  summary_length:
    type: string
    description: Level of detail (short, medium, long)
  sources_scope:
    type: string
    description: Scope of coverage (e.g., global, regional)
sample:
  start_date: "2025-11-01"
  end_date: "2025-11-24"
  theme: "Artificial Intelligence"
  tone: "journalistic"
  language: "English"
  summary_length: "medium"
  sources_scope: "global"
template: jinja2
---
system:
You are a research assistant specialized in {{theme}}.
Your task is to summarize key announcements and developments related to {{theme}}
between {{start_date}} and {{end_date}}, in a {{tone}} tone and in {{language}}.
Keep the summary {{summary_length}} in length and focused on the {{sources_scope}} context.

Press F5 to preview the final result with inputs replaced.

To understand the difference between inputs and sample:

Inputs (used when executing the prompt)

- Define the dynamic parameters of the prompt.

- These are values the user can modify before each execution (dates, theme, tone, language, etc.).

- They appear in the prompt text as {{input_name}}.

- Example: {{theme}}, {{start_date}}, {{tone}}.

Sample (default or example values for the inputs)

- Provides a concrete example of values for each input.

- Used to illustrate how the prompt works or to test it quickly.

- Can serve as a set of default values if none are specified.

- Example:

sample:
  start_date: "2025-10-01"
  end_date: "2025-10-07"
  theme: "Artificial Intelligence"

In summary:

- inputs = prompt structure (what can be changed)

- sample = example configuration (test or default values)

When using the “Preview as Markdown” feature, the values in Sample are applied.
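The relationship between inputs and sample can be sketched as a simple lookup: a value supplied at execution time wins, otherwise the sample value is used as a default. The code below illustrates that rule only and does not reflect how Prompty resolves values internally. `resolve_inputs` is a hypothetical name.

```python
def resolve_inputs(declared, sample, overrides=None):
    """Pick a value for every declared input: override first, then sample."""
    overrides = overrides or {}
    unknown = set(overrides) - set(declared)
    if unknown:
        raise KeyError(f"undeclared inputs: {sorted(unknown)}")
    resolved = {}
    for name in declared:
        if name in overrides:
            resolved[name] = overrides[name]
        elif name in sample:
            resolved[name] = sample[name]
        else:
            raise KeyError(f"no value for input {name!r}")
    return resolved


declared = ["start_date", "end_date", "theme"]
sample = {
    "start_date": "2025-11-01",
    "end_date": "2025-11-24",
    "theme": "Artificial Intelligence",
}
resolved = resolve_inputs(declared, sample, {"theme": "Cybersecurity"})
print(resolved)
```

Rejecting undeclared names catches a misspelled input early instead of silently ignoring it.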

3. Organize your prompt library

Structure your prompts into folders:

📁 VS-Code-Prompty
┣ 📂 collect
┃ ┗ 1-collect-AI-news.prompty
┣ 📂 verify
┃ ┗ 2-verify-AI-news.prompty
┣ 📂 write
┃ ┗ 3-write-weekly-report.prompty
┗ 📂 review
  ┗ 4-review-final-report.prompty

Each file becomes a building block for your future AI workflow: collection, verification, writing, review.
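With numbered file names, a small script can recover the intended order of the steps regardless of which folder each file lives in. This is a sketch under the assumption that every step file starts with a number followed by a hyphen, as in the tree above. `ordered_prompts` is a hypothetical helper, and the demo builds a throwaway copy of the layout in a temporary directory.

```python
import re
import tempfile
from pathlib import Path


def ordered_prompts(root: Path) -> list[Path]:
    """Return all .prompty files under root, sorted by numeric prefix."""
    def step_number(path: Path) -> int:
        match = re.match(r"(\d+)-", path.name)
        # Files without a numeric prefix sort last.
        return int(match.group(1)) if match else 10**9

    return sorted(root.rglob("*.prompty"), key=lambda p: (step_number(p), p.name))


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for relative in [
        "write/3-write-weekly-report.prompty",
        "collect/1-collect-AI-news.prompty",
        "review/4-review-final-report.prompty",
        "verify/2-verify-AI-news.prompty",
    ]:
        target = root / relative
        target.parent.mkdir(parents=True, exist_ok=True)
        target.touch()
    step_names = [path.name for path in ordered_prompts(root)]

print(step_names)
```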


4. Local execution with Ollama

Install Ollama, then configure:

{
  "name": "ollama-local",
  "type": "ollama",
  "base_url": "http://localhost:11434",
  "model": "llama3:8b"
}

You can now run prompts locally without cloud access, ideal for offline testing or privacy-sensitive projects.
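To confirm that the local server responds before wiring it into Prompty, you can call Ollama's own HTTP API directly. The sketch below bypasses Prompty entirely: it only builds the request, reusing the base URL and model name from the configuration above, and leaves the sending step commented out because that step needs a running Ollama server. `build_ollama_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_ollama_request(prompt, model="llama3:8b", base_url="http://localhost:11434"):
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url=f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_ollama_request("Summarize this week's AI news in two sentences.")
print(request.full_url)

# To send it (requires a running Ollama server with the model pulled):
# with urllib.request.urlopen(request) as response:
#     print(json.loads(response.read())["response"])
```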

5. Integration with LangChain or Prompt Flow

In LangChain, a Prompty file can be loaded like this:

import getpass
import json
import os
from pathlib import Path

from langchain_core.output_parsers import StrOutputParser
from langchain_openai import ChatOpenAI
# pip install langchain-prompty
from langchain_prompty import create_chat_prompt

# Load the .prompty file as a LangChain ChatPromptTemplate.
# Important note: LangChain only supports mustache templating. Add
#   template: mustache
# to your .prompty file and use mustache syntax.
folder = Path(__file__).parent.absolute().as_posix()
path_to_prompty = folder + "/test2.prompty"
prompt = create_chat_prompt(path_to_prompty)

# Ask for the API key at run time instead of hard-coding it.
os.environ["OPENAI_API_KEY"] = getpass.getpass()
model = ChatOpenAI(model="gpt-4")
output_parser = StrOutputParser()

# Chain: prompt template -> chat model -> plain-string output.
chain = prompt | model | output_parser

# Values for the inputs declared in the .prompty file.
json_input = '''{
  "start_date": "2025-11-01",
  "end_date": "2025-11-24",
  "theme": "Artificial Intelligence",
  "tone": "journalistic",
  "language": "English",
  "summary_length": "medium",
  "sources_scope": "global"
}'''
args = json.loads(json_input)

result = chain.invoke(args)
print(result)
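Because the template created earlier declares jinja2 while the note in the code asks for mustache, it helps to know whether a template uses anything beyond plain `{{name}}` placeholders, which are written the same way in both syntaxes. The sketch below is a rough check and not a full parser: it lists Jinja2 block tags, comments, and non-trivial expressions that would need rewriting. `jinja_only_constructs` is a hypothetical helper.

```python
import re


def jinja_only_constructs(template: str) -> list[str]:
    """List constructs that are more than a plain {{name}} placeholder."""
    # Jinja2 block tags ({% ... %}) and comments ({# ... #}).
    problems = re.findall(r"\{%.*?%\}|\{#.*?#\}", template, flags=re.DOTALL)
    # Expressions with filters, attribute access, or other syntax.
    for expression in re.findall(r"\{\{(.*?)\}\}", template, flags=re.DOTALL):
        if not re.fullmatch(r"\s*\w+\s*", expression):
            problems.append("{{" + expression + "}}")
    return problems


simple = "Summarize {{theme}} between {{start_date}} and {{end_date}}."
complex_template = "{% if tone %}Use a {{ tone | upper }} tone.{% endif %}"

print(jinja_only_constructs(simple))
print(jinja_only_constructs(complex_template))
```

An empty list suggests that switching the template line to mustache should not require touching the prompt text. Anything listed needs a manual rewrite.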

In Prompt Flow, simply link the .prompty file as a component of a flow.

Prompty, a bridge toward full AI workflow automation

Prompty serves as the foundation layer for modern AI pipelines. Each .prompty file becomes a reusable, versioned, and traceable block that can be connected to orchestrators such as LangChain, Prompt Flow, or Semantic Kernel.

On GitHub, Microsoft already provides examples of direct integration with Prompt Flow, where Prompty acts as a “template loader.” In Semantic Kernel, a Prompty prompt can be converted into an AI function, ensuring consistency between local development and production deployment.

This modular approach reduces friction between experimentation and automation. Prompty therefore becomes the natural bridge between prompt / context engineering and industrialized AI workflows.

A fast learning curve for VS Code users

Prompty integrates smoothly with the Visual Studio Code ecosystem. Installation is instant, the syntax is clear, and the Preview as Markdown feature allows for offline work. For users who want to go further, the F5 command enables direct execution on the selected model (mock, Ollama, Azure, or OpenAI).

As noted in the Microsoft documentation, Prompty’s goal is to “accelerate the inner development loop.” For anyone already familiar with VS Code, the learning curve is practically non-existent.

Working in stages: from manual to fully automated

Prompty adapts to your workflow and allows you to progress without disrupting your organization. The transition naturally follows three levels: manual, semi-automated, and automated. This progression is essential to validate the reliability of prompts, avoid automating unstable templates too early, and maintain human intervention where it adds the most value.

Manual work: structuring and refining your prompts in VS Code

Manual work relies on the VS Code editor and the Preview as Markdown feature. You create your .prompty files, fill in the variables directly in the front matter, then copy the result to use it in the web interface of your preferred conversational AI.

This mode is ideal for refining prompt structure, testing variations, and understanding how the AI reacts to your instructions. It remains highly flexible but involves a lot of repetition: copying values, pasting them into multiple .prompty files, and initiating interactions manually.

This mode is recommended as long as your prompts are not yet stabilized and your priority remains creativity, exploration, and experimentation.

Semi-automated mode: streamlining the workflow while keeping human control

When you begin building a library of prompts, the semi-automated method quickly becomes the most efficient. The idea is to centralize recurring data (theme, date range, context, repeated values) in a YAML or JSON file, then inject them into your various steps through manual pre-filling or a small local script.

In this mode, you still use an AI through its web interface, but you significantly reduce repetitive effort. You use Prompty to structure, reuse, and maintain your templates while keeping certain creative steps human-driven: rewriting, enrichment, verification and editorial control.

This mode is often the most effective for content creators, analysts, or professionals managing an evolving library of prompts. It preserves control while rationalizing repetitive tasks.
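The "small local script" mentioned above could look like the following sketch: shared values live in one JSON document and are merged with a few step-specific values to produce the input set for each step. The step names come from the folder layout shown earlier. The step-specific values and the helper `build_step_inputs` are hypothetical.

```python
import json

# Recurring values, kept in one place (this would normally be a JSON file).
SHARED_JSON = """
{
  "theme": "Artificial Intelligence",
  "start_date": "2025-11-01",
  "end_date": "2025-11-24",
  "language": "English"
}
"""

# Values that differ from one step to the next (illustrative only).
STEP_VALUES = {
    "1-collect-AI-news": {"tone": "journalistic", "summary_length": "medium"},
    "3-write-weekly-report": {"tone": "analytical", "summary_length": "long"},
}


def build_step_inputs(shared, per_step):
    """Merge shared values into each step; step-specific values win."""
    return {step: {**shared, **specific} for step, specific in per_step.items()}


step_inputs = build_step_inputs(json.loads(SHARED_JSON), STEP_VALUES)
print(json.dumps(step_inputs["3-write-weekly-report"], indent=2))
```

Each resulting dictionary can be pasted into the sample section of the matching .prompty file, so changing the theme or date range means editing a single place.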

Full automation: integrating Prompt Flow, LangChain, or LangGraph

Full automation comes into play when the goal is no longer simply to support a human workflow, but to build an AI system that operates autonomously and reproducibly. In this context, orchestration tools become essential, such as Prompt Flow, LangChain, or LangGraph.

Prompt Flow transforms your .prompty files into steps within a reproducible, testable, and version-controlled pipeline. You can chain multiple AI steps: information retrieval, analysis, content generation, formatting, and final validation. Inputs and outputs are controlled, models are configured globally, and each step can be tested independently.

LangChain and LangGraph offer a more programmatic approach suitable for developers. LangChain allows you to build logical chains or agents that use your prompts as components. LangGraph adds a graph-oriented dimension for handling complex workflows, reasoning loops, multi-step agents, or structured verification processes.

For application development, this approach is the most suitable. It provides:

- full automation

- integration into an application or backend

- error handling

- state management

- conditional logic

- production deployment

In this mode, humans no longer intervene directly in execution. They design, supervise, and validate, but do not manually drive each step.
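The core idea behind such orchestration can be shown without any framework: run the steps in order, pass each output to the next step, and stop with a clear error if one fails. The sketch below uses plain Python functions as stand-ins for model calls. It does not use LangChain, LangGraph, or Prompt Flow, and every name in it is hypothetical.

```python
from typing import Callable

Step = Callable[[str], str]


def run_pipeline(steps: list[tuple[str, Step]], initial: str) -> tuple[str, list[str]]:
    """Run steps in order; return the final output and the completed step names."""
    completed = []
    current = initial
    for name, step in steps:
        try:
            current = step(current)
        except Exception as error:
            # Stop at the first failure and say which step caused it.
            raise RuntimeError(f"pipeline stopped at step {name!r}") from error
        completed.append(name)
    return current, completed


# Stand-ins for model-backed steps.
def collect(topic: str) -> str:
    return f"news about {topic}"


def verify(text: str) -> str:
    if not text:
        raise ValueError("nothing to verify")
    return f"verified {text}"


def write(text: str) -> str:
    return f"report: {text}"


result, completed = run_pipeline(
    [("collect", collect), ("verify", verify), ("write", write)], "AI"
)
print(result)
print(completed)
```

Real orchestrators add what this sketch leaves out, such as state management, retries, and branching, but the contract between steps stays the same.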

A natural and progressive path

Transitioning from manual to semi-automated, then to fully automated workflows allows you to evolve smoothly. You start by understanding your prompts, you stabilize them, then orchestrate them into a robust workflow. Prompty supports this transition by giving you a clear structure for individual prompts, while Prompt Flow, LangChain, and LangGraph enable professional-grade orchestration afterward.

This progression effectively distinguishes two use cases:

- editorial, analytical, or professional work, where semi-automation is often ideal

- AI application development, where full automation is required

Prompty therefore fits perfectly into an incremental approach, from a simple prompt file to fully orchestrated AI systems.

FAQ – Common questions about Prompty and VS Code

Is Prompty free? Yes, it is an open source project from Microsoft available on GitHub.

Do I need an API key to use it? No. The Preview as Markdown feature lets you visualize prompts without executing any model.

Does Prompty work with Ollama? Yes, through a local Ollama endpoint (http://localhost:11434/v1) serving models such as Llama 3, Mistral, or Gemma.

What’s the difference between Prompty and Prompt Flow? Prompty is built for designing and testing prompts, while Prompt Flow orchestrates complete AI workflows.

Prompty, a key building block for structured AI workflows?

Prompty is not just a text editor for AI. It is a software engineering tool built to structure and secure the work of AI teams. By standardizing prompt creation, testing, and reuse, it makes AI workflows more transparent, reproducible, and interoperable.

For developers already working within Visual Studio Code, Prompty offers a natural gateway to advanced prompt engineering and AI workflow automation.

In other words, Prompty marks the shift from handcrafted prompts to industrial-grade AI workflows, the first concrete step toward efficient, maintainable, and automated intelligence systems.

