Best practices to manage an AI prompt library

Building an AI prompt library is a bit like setting up a wine cellar. Without strict organization, you will quickly lose your best bottles at the bottom of a dusty box. Whether you are a freelancer juggling ChatGPT every day or part of a team deploying large-scale AI workflows, managing your collection of prompt templates soon becomes a strategic challenge.

Week after week, your AI prompt collection grows: content generation instructions, data extraction templates, classification scripts. Without a clear methodology, this growing collection becomes a burden. You waste time searching for the right prompt, you unknowingly duplicate existing instructions and, worse, you forget which version delivered the best results.

In this article, we will break down the recognized best practices for organizing, documenting and maintaining a high-performing AI prompt library. Whether you work solo or in a team, these proven methods will help you capitalize on your efforts and boost productivity. For an overview, check out our main page: Organize AI prompts, a complete guide to solutions in 2025.

Structuring your AI prompt library with method

Build a logical folder and category architecture

The first step to effectively manage your AI prompt library is to define a coherent classification structure. Instead of piling up all your templates in one file, organize them into thematic folders based on their main use.

Example architecture:

```text
/prompts
├── /content-generation
│   ├── documentation
│   ├── blog-articles
│   ├── product-descriptions
│   └── marketing-emails
├── /data-extraction
│   ├── sentiment-analysis
│   └── entity-extraction
├── /classification
│   ├── email-sorting
│   └── feedback-categorization
└── /conversation
    ├── customer-support
    └── personal-assistant
```

This functional categorization lets you go straight to the right type of prompt. Structuring by use is the foundation of efficient AI prompt management.
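To make the structure above usable from scripts, a small indexer can walk the folder tree and group prompt files by top-level category. This is a minimal sketch, assuming each prompt is stored as a `.md` file under a `prompts/` root; the file extension and the `index_prompts` helper are illustrative assumptions, not a standard:

```python
from collections import defaultdict
from pathlib import Path


def index_prompts(root: Path, suffix: str = ".md") -> dict[str, list[str]]:
    """Group prompt files by their top-level category folder.

    Assumes a hypothetical layout like prompts/<category>/<subcategory>/<name>.md.
    Files sitting directly under the root are listed as "(uncategorized)".
    """
    index: dict[str, list[str]] = defaultdict(list)
    for path in sorted(root.rglob(f"*{suffix}")):
        relative = path.relative_to(root)
        # The first path component is the category, e.g. "content-generation"
        category = relative.parts[0] if len(relative.parts) > 1 else "(uncategorized)"
        index[category].append(relative.with_suffix("").as_posix())
    return dict(index)
```

Calling `index_prompts(Path("prompts"))` would return something like `{"classification": ["classification/email-sorting/..."], ...}`, which is easy to render as a table of contents or feed into a search tool.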

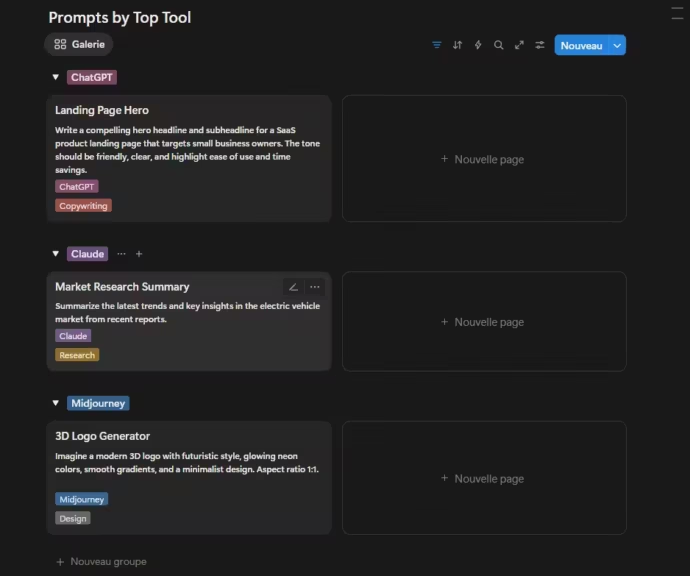

Adopt a smart tagging system

Beyond folders, tags add an essential layer of flexibility. The same AI prompt can be useful in multiple contexts: a blog article generation template can apply to both marketing and technical documentation.

Choose a multi-dimensional tagging system:

- By topic: marketing, tech, finance, healthcare

- By complexity: beginner, intermediate, advanced

- By target model: GPT-4, Claude, Gemini, open source models

- By language: English, French, multilingual

- By expected output: short, long, structured, creative

This enriched taxonomy turns your AI prompt library into a knowledge base searchable across multiple axes.
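Multi-axis search boils down to storing one set of tags per dimension and requiring every requested dimension to match. A minimal sketch, assuming tags are kept in memory; the `Prompt` class, the `search` function and the dimension names (`topic`, `model`, `output`...) are hypothetical:

```python
from dataclasses import dataclass, field


@dataclass
class Prompt:
    name: str
    # One set of tags per dimension, e.g. {"topic": {"marketing"}, "model": {"Claude"}}
    tags: dict[str, set[str]] = field(default_factory=dict)


def search(prompts: list[Prompt], **criteria: str) -> list[str]:
    """Return the names of prompts that match every requested dimension/value pair."""
    return [
        p.name
        for p in prompts
        if all(value in p.tags.get(dimension, set()) for dimension, value in criteria.items())
    ]


library = [
    Prompt("generation-blog-article-seo-v2",
           {"topic": {"marketing", "tech"}, "complexity": {"intermediate"},
            "model": {"GPT-4", "Claude"}, "output": {"long"}}),
    Prompt("classification-emails-priority-v3",
           {"topic": {"tech"}, "complexity": {"beginner"},
            "model": {"Claude"}, "output": {"structured"}}),
]

print(search(library, topic="tech", model="Claude"))
# ['generation-blog-article-seo-v2', 'classification-emails-priority-v3']
print(search(library, topic="marketing", output="long"))
# ['generation-blog-article-seo-v2']
```

Because a prompt can carry several values per dimension, the same blog template shows up under both "marketing" and "tech" without being duplicated.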

Define a consistent naming convention

Nothing is more frustrating than looking for a prompt named “prompt-marketing-final-v3-realfinal”. To avoid chaos in your AI prompt repository, establish a clear naming convention from the start.

Recommended format: [type]-[context]-[function]-v[version]

Examples:

- generation-blog-article-seo-v2

- extraction-customer-feedback-sentiment-v1

- classification-emails-priority-v3

This standardization improves visual recognition and keyword searchability. According to Langfuse, a strict naming convention becomes crucial once your library grows beyond 50 templates.
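A naming convention is easiest to enforce with a validator, for example in a pre-commit hook or a CI check. The sketch below assumes names are lowercase words separated by hyphens; since the context and function parts may themselves contain hyphens, it keeps them together as one "slug". The pattern and the `parse_prompt_name` helper are illustrative, not an established standard:

```python
import re
from typing import Optional

# Hypothetical pattern for [type]-[context]-[function]-v[version]:
# a type word, at least two more words, then -v followed by digits.
NAME_PATTERN = re.compile(
    r"^(?P<type>[a-z]+)-(?P<slug>[a-z0-9]+(?:-[a-z0-9]+)+)-v(?P<version>\d+)$"
)


def parse_prompt_name(name: str) -> Optional[dict]:
    """Split a prompt name into type, context/function slug and version, or return None."""
    match = NAME_PATTERN.match(name)
    if match is None:
        return None
    return {
        "type": match["type"],
        # Context and function stay together: either may contain hyphens,
        # so they cannot be separated unambiguously.
        "slug": match["slug"],
        "version": int(match["version"]),
    }
```

With this check, `generation-blog-article-seo-v2` parses to type `generation`, slug `blog-article-seo` and version 2, while `prompt-marketing-final-v3-realfinal` is rejected.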

Add descriptive metadata

For every prompt, document the essential metadata:

- Author: who created this template

- Creation date and last update

- Dependencies: validated AI models

- Use cases: optimal context of application

- Required parameters: mandatory vs optional variables

- Performance: success rate, average response time

These contextual details turn a plain text file into a documented, reusable asset for the entire team. Recording metadata is good practice from the very first prompt, and it becomes vital as your AI prompt collection scales.
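One way to keep this metadata consistent is to give it a fixed schema that rejects obviously wrong values. A minimal sketch using a Python dataclass; the field names mirror the checklist above but are assumptions, and JSON is just one possible storage format (front matter in Markdown files would work as well):

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date
from typing import Optional


@dataclass
class PromptMetadata:
    """Metadata from the checklist above; field names are illustrative."""
    author: str
    created: date
    updated: date
    validated_models: list[str]
    use_cases: list[str]
    required_params: list[str] = field(default_factory=list)
    optional_params: list[str] = field(default_factory=list)
    success_rate: Optional[float] = None         # e.g. 0.9 means 90% of outputs accepted
    avg_response_time_s: Optional[float] = None  # average latency in seconds

    def __post_init__(self) -> None:
        # Basic sanity checks so broken metadata is caught on creation
        if self.updated < self.created:
            raise ValueError("last update cannot precede the creation date")
        if self.success_rate is not None and not 0.0 <= self.success_rate <= 1.0:
            raise ValueError("success_rate must be between 0 and 1")

    def to_json(self) -> str:
        # default=str serializes date objects as ISO strings (YYYY-MM-DD)
        return json.dumps(asdict(self), default=str, indent=2)
```

Storing the result next to the prompt file (for example `generation-blog-article-seo-v2.meta.json`, a hypothetical convention) keeps the metadata versioned alongside the prompt itself.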

Versioning and documenting AI prompts

Why versioning is critical for prompt templates

Imagine modifying a prompt that worked perfectly, only to find that the new version produces poor results. Without a history, you cannot roll back cleanly. Prompt versioning is not optional; it is essential for any professional AI prompt library.

Unlike traditional code, prompts respond unpredictably to small edits: a single rewording can dramatically improve or degrade results. This makes it necessary to track every change and compare versions.

As Mirascope explains, prompt versioning should follow the same principles as software versioning with adaptations for generative AI.
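Comparing two versions of a prompt works much like comparing two versions of a source file. Python's standard `difflib` module produces a unified diff in which added lines start with `+` and removed lines with `-`. A minimal sketch; the prompt texts and version names are made up for illustration:

```python
import difflib


def prompt_diff(old: str, new: str, old_name: str, new_name: str) -> str:
    """Line-by-line unified diff between two versions of a prompt."""
    return "\n".join(
        difflib.unified_diff(
            old.splitlines(), new.splitlines(),
            fromfile=old_name, tofile=new_name, lineterm="",
        )
    )


v1 = "Write a product description for {{product}}.\nKeep it under 100 words."
v2 = (
    "Write a product description for {{product}}.\n"
    "Use this format: a one-line hook, then three bullet points.\n"
    "Keep it under 100 words."
)
print(prompt_diff(v1, v2, "generation-product-description-v1", "generation-product-description-v2"))
```

The output shows the format instruction as a single added line, which makes it easy to link a change in results to a precise change in wording.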

Document prompt history

For each version in your AI prompt repository, log:

- Date and author of the modification

- Changes made: what exactly was altered

- Reason: why the change was necessary (better results, adaptation to a new model, bug fix)

- Observed results: measurable impact on output quality

Example:

```markdown
## Version 2.1 - March 15, 2025

**Author**: Marie Dupont
**Changes**: Added examples of expected format in the instruction
**Reason**: Outputs lacked consistent structure
**Results**: +35% compliance with the requested format
```

This traceability allows rollback if needed and builds learning over time. Each iteration becomes knowledge capital.
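The same log can be kept as structured data so that a rollback is itself recorded rather than erasing history. A minimal sketch, assuming versions are tracked in memory; the `PromptVersion` and `PromptHistory` classes are hypothetical and mirror the fields of the log entry above:

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class PromptVersion:
    version: str
    text: str
    changed_on: date
    author: str
    changes: str
    reason: str
    results: str = ""  # measured impact, filled in once observed


@dataclass
class PromptHistory:
    """Append-only log: a rollback re-publishes an old text as a new version."""
    name: str
    versions: list[PromptVersion] = field(default_factory=list)

    @property
    def current(self) -> PromptVersion:
        return self.versions[-1]

    def add(self, version: PromptVersion) -> None:
        self.versions.append(version)

    def rollback(self, to_version: str, new_version: str, author: str, on: date) -> PromptVersion:
        old = next((v for v in self.versions if v.version == to_version), None)
        if old is None:
            raise KeyError(f"unknown version: {to_version}")
        restored = PromptVersion(
            version=new_version,
            text=old.text,
            changed_on=on,
            author=author,
            changes=f"Restored the text of version {to_version}",
            reason=f"Rollback from version {self.current.version}",
        )
        self.add(restored)
        return restored
```

Making the rollback a new entry, instead of deleting the faulty version, keeps the record of what was tried and why it failed.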

Create complete documentation for every AI prompt

A prompt without documentation is like a recipe without quantities: usable, but painful. For every template, write a structured documentation sheet:

1. Objective

- Concrete use case

- Problem it solves

- Contexts where it should be applied

2. Parameters and variables

- List of variables ({{variable_name}})

- Mandatory vs optional parameters

- Expected format for each parameter

3. Examples of use

- Real input-output cases

- Possible variations

- Common errors to avoid

4. Validated models

- AI models tested

- Comparative performance across models

- Recommended settings (temperature, max tokens)

5. Notes and limitations

- Points requiring attention

- Contexts where it performs poorly

- Possible improvements

As Langfuse highlights, exhaustive documentation is essential when a team grows or a project spans several months.
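Part of this documentation can be checked automatically: the variables listed in the sheet should match the `{{variable_name}}` placeholders actually used in the template. A minimal sketch, assuming placeholders use the double-brace syntax shown above; the helper names are hypothetical:

```python
import re

# Matches {{variable_name}}, tolerating spaces inside the braces
VARIABLE = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def template_variables(template: str) -> set[str]:
    """Return the set of {{variable_name}} placeholders used in a template."""
    return set(VARIABLE.findall(template))


def check_documentation(template: str, documented: set[str]) -> dict[str, set[str]]:
    """Compare the template's placeholders with the parameters listed in its doc sheet."""
    used = template_variables(template)
    return {
        "undocumented": used - documented,  # used in the template, missing from the docs
        "unused": documented - used,        # documented, but absent from the template
    }
```

Both sets should be empty for a well-documented prompt; anything else points to a sheet that drifted from the template.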

Use suitable versioning tools

For effective AI prompt versioning, consider:

- Git and traditional version control: if you know Git, store your prompts in a dedicated repository. Each change becomes a documented commit, branches let you test variations, and you keep a complete history.

- Specialized platforms: solutions like PromptLayer or PromptHub integrate visual versioning. They are more accessible than Git and clearly display the differences between versions.

- Hybrid solutions: Obsidian with a Git plugin, or Notion with its version history, provide a middle ground for non-technical teams.

Notion and Obsidian differ greatly in versioning philosophy: Notion emphasizes a collaborative cloud workspace with built-in history, while Obsidian focuses on local privacy-first note-taking, with optional cloud sync and plugins for versioning.

Collaboration and team validation for AI prompts

Centralize your AI prompt library

As soon as multiple people work with AI prompts, centralization is a must. No more prompts scattered in personal Google Docs, Slack threads or desktop files.

Collaborative solutions give your team access to a centralized AI prompt repository:

- Dedicated platforms: PromptHub and OpenPrompt provide team workspaces with granular access rights

- Generic tools: Notion, Confluence, or even an internal wiki are good starters

- Git repositories: for technical teams, GitHub or GitLab with Markdown documentation remains reliable

The key is that every member can consult, suggest and improve prompts without friction. According to Latitude, centralization reduces time wasted on searching or recreating prompts by 40%.

Implement a peer-review process

Before adding a new prompt to production, set up a collective validation step. Peer review, borrowed from software development, works very well for AI prompts.

Typical workflow:

- Proposal: a member creates or modifies a prompt

- Initial testing: the author verifies outputs are correct

- Review request: submitted to colleagues

- Feedback: reviewers comment, suggest improvements, test on their cases

- Iteration: adjustments based on feedback

- Approval: final validation and addition to the main library

Although it may seem heavy, this process ensures quality and keeps weak prompts out of the repository. Tools like PromptHub integrate reviews with comments and approvals.
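If your library lives in a custom tool or a Git repository, the review workflow above can be enforced as a small state machine that forbids skipping steps, such as approving a prompt that was never tested. A minimal sketch; the status names and allowed transitions are an assumed mapping of the six steps, not a feature of any particular platform:

```python
from enum import Enum


class Status(Enum):
    PROPOSED = "proposed"                    # a member creates or modifies a prompt
    TESTED = "tested"                        # the author has checked the outputs
    IN_REVIEW = "in review"                  # submitted to colleagues
    CHANGES_REQUESTED = "changes requested"  # reviewers asked for adjustments
    APPROVED = "approved"                    # added to the main library


# Allowed moves between steps; iteration loops back through testing
TRANSITIONS = {
    Status.PROPOSED: {Status.TESTED},
    Status.TESTED: {Status.IN_REVIEW},
    Status.IN_REVIEW: {Status.CHANGES_REQUESTED, Status.APPROVED},
    Status.CHANGES_REQUESTED: {Status.TESTED},
    Status.APPROVED: set(),
}


def advance(current: Status, target: Status) -> Status:
    """Move a prompt to the next status, refusing transitions that skip a step."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value} to {target.value}")
    return target
```

Routing "changes requested" back through testing means every adjustment made during iteration is re-checked before it returns to reviewers.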

Manage access rights and permissions

Not every team member needs the same level of access. Define clear roles and permissions:

- Reader: view-only, can use prompts

- Contributor: propose new prompts or edits

- Validator: approve proposed changes

- Administrator: manage structure, permissions, archives

This becomes critical when your AI prompt library contains sensitive data or when external contractors are involved.
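These roles map naturally onto permission flags. A minimal sketch using Python's `enum.Flag`; it assumes each role inherits the rights of the roles below it, which is a design choice to adapt, not something every tool does:

```python
from enum import Flag, auto


class Permission(Flag):
    READ = auto()     # view and use prompts
    PROPOSE = auto()  # submit new prompts or edits
    APPROVE = auto()  # validate proposed changes
    ADMIN = auto()    # manage structure, permissions and archives


# Assumption: each role includes the rights of the roles below it
ROLES = {
    "reader": Permission.READ,
    "contributor": Permission.READ | Permission.PROPOSE,
    "validator": Permission.READ | Permission.PROPOSE | Permission.APPROVE,
    "administrator": Permission.READ | Permission.PROPOSE | Permission.APPROVE | Permission.ADMIN,
}


def can(role: str, permission: Permission) -> bool:
    """Check whether a role grants a given permission."""
    return permission in ROLES[role]
```

An external contractor would typically be given the `reader` or `contributor` role, so `can("contributor", Permission.APPROVE)` returns `False`.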

Archive obsolete prompts properly

Never simply delete an outdated prompt. You may need it later or want to understand why it was abandoned.

Create an archive section:

```text
/prompts
├── /active
│   └── [production prompts]
├── /experimental
│   └── [testing prompts]
└── /archive
    ├── /obsolete
    │   └── [replaced by newer versions]
    └── /deprecated
        └── [abandoned with documented reasons]
```

Each archived prompt should include:

- Date and reason for archiving

- Replacement reference if applicable

- Historical usage context

This organizational memory avoids reinventing the wheel and leverages past lessons. Latitude emphasizes that consulting archives before creating new prompts saves valuable time.
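Archiving is easy to script so that no prompt is moved without its note. A minimal sketch, assuming the folder layout above and plain-text notes stored next to the archived file; the `archive_prompt` helper and the `.archive.txt` naming are hypothetical conventions:

```python
import shutil
from datetime import date
from pathlib import Path
from typing import Optional


def archive_prompt(
    prompt_file: Path,
    root: Path,
    reason: str,
    kind: str = "obsolete",             # "obsolete" or "deprecated"
    replaced_by: Optional[str] = None,  # name of the replacement prompt, if any
    usage_context: str = "",
    on: Optional[date] = None,
) -> Path:
    """Move a prompt into archive/<kind>/ and write an archive note beside it."""
    if kind not in {"obsolete", "deprecated"}:
        raise ValueError("kind must be 'obsolete' or 'deprecated'")
    target_dir = root / "archive" / kind
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / prompt_file.name
    shutil.move(str(prompt_file), str(target))

    note = [
        f"Archived: {(on or date.today()).isoformat()}",
        f"Reason: {reason}",
        f"Replaced by: {replaced_by or 'none'}",
        f"Historical usage: {usage_context or 'n/a'}",
    ]
    # e.g. archive/obsolete/foo.md -> archive/obsolete/foo.md.archive.txt
    target.with_name(target.name + ".archive.txt").write_text("\n".join(note) + "\n")
    return target
```

Because the note is written in the same step as the move, the date, reason and replacement reference cannot be forgotten.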

Testing, benchmarking and reusing AI prompt templates

Establish systematic testing protocols

A prompt that works once is not necessarily a reliable prompt. To validate the quality and robustness of your AI prompt templates, set up systematic testing before integrating them into production.

Recommended test protocol:

- Variability tests: run the same prompt 5 to 10 times to check output consistency

- Input variation tests: try edge cases, unusual formats and alternative instructions

- Multi-model tests: if relevant, compare results across GPT-4, Claude, Gemini and open source models

- Parameter tests: adjust temperature, max tokens, top_p to find the optimal configuration

Document the results of these tests in your AI prompt library. This helps the whole team understand under what conditions the prompt performs best.
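The variability test can be reduced to a single number: the share of runs that return the most frequent answer. A minimal sketch, assuming you supply your own `run_prompt` function wrapping the model API; the stand-in below simply replays canned answers. Exact matching suits short outputs such as labels; long free-form text would need a similarity measure instead:

```python
from collections import Counter
from typing import Callable


def consistency_score(run_prompt: Callable[[str], str], prompt: str, runs: int = 5) -> float:
    """Share of runs that return the most frequent output (1.0 = fully consistent).

    Outputs are compared after collapsing whitespace.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    outputs = [" ".join(run_prompt(prompt).split()) for _ in range(runs)]
    top_count = Counter(outputs).most_common(1)[0][1]
    return top_count / runs


# Stand-in for a real model call: replays canned answers in order
canned = iter(["positive", "positive", "negative", "positive", "positive"])
print(consistency_score(lambda p: next(canned), "Classify the sentiment: 'Great product!'"))  # 0.8
```

Recording this score in the prompt's documentation, alongside the model and temperature used, gives the team a concrete basis for comparison.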

Are there open source frameworks to automate AI prompt testing?

Yes. Several open source frameworks automate prompt testing with built-in evaluation, comparison and versioning. Notable ones include:

- Lilypad: full-featured open source tool for prompt testing and versioning, compatible with all LLM providers.

- OpenPrompt: modular prompt engineering framework with integrated evaluation.

- Promptfoo: local developer-oriented tool for automated prompt evaluation, robustness testing and side-by-side comparisons.

- Helicone: open source platform for controlled experiments, versioning and rollbacks.

- Opik: tracing and evaluation solution for both dev and production environments.

- PromptTools: toolbox for experimenting with prompts across models and vector databases.

These solutions make prompt testing reproducible and measurable, with versioning, collaboration and integration into development pipelines.

Build performance benchmarks

Beyond functional tests, measure the quantitative performance of your AI prompts. Define clear metrics for each type of template:

Content generation:

- Acceptance rate (used as-is vs rewritten)

- Average generation time

- Token consumption

- Subjective quality score (1–5)

Data extraction:

- Precision (correct extractions / total extractions)

- Recall (correct extractions / items actually present in the source)

- Error or hallucination rate

Classification:

- Overall accuracy

- Confusion matrix to highlight common errors

- Processing time per element

Keep historical benchmarks in your prompt library. When optimizing a prompt, compare new performance against previous results to validate improvements objectively.
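The extraction and classification metrics above take only a few lines to compute once you have a labeled test set. A minimal sketch; the function names and the set-based comparison of extracted items are assumptions (real extraction tasks may need fuzzy matching of entities):

```python
from collections import Counter


def extraction_scores(extracted: set[str], expected: set[str]) -> dict[str, float]:
    """Precision, recall and error rate for one extraction run."""
    correct = extracted & expected
    return {
        "precision": len(correct) / len(extracted) if extracted else 0.0,
        "recall": len(correct) / len(expected) if expected else 0.0,
        # Extracted items that are not in the source (errors or hallucinations)
        "error_rate": len(extracted - expected) / len(extracted) if extracted else 0.0,
    }


def classification_scores(predicted: list[str], actual: list[str]) -> tuple[float, Counter]:
    """Overall accuracy plus a confusion matrix keyed by (actual, predicted)."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("need two non-empty lists of the same length")
    confusion = Counter(zip(actual, predicted))
    correct = sum(n for (a, p), n in confusion.items() if a == p)
    return correct / len(actual), confusion
```

Off-diagonal entries of the confusion matrix, such as `("normal", "urgent")`, show exactly which categories the prompt tends to mix up.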

Automate testing with scripts

For technical teams, automated testing scripts save time. Instead of manually running checks, let a script execute test batches.

Conceptual Python example:

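```python
from typing import Callable


def test_prompt_extraction(prompt_template, test_cases, execute_prompt: Callable[[str, str], str]):
    """Run every (input, expected) pair through the prompt and summarize the results."""
    results = []
    for input_text, expected_output in test_cases:
        # execute_prompt is your own wrapper around the model API (not shown here)
        actual_output = execute_prompt(prompt_template, input_text)
        results.append({
            "input": input_text,
            "expected": expected_output,
            "actual": actual_output,
            # Exact match after trimming whitespace and ignoring case
            "match": expected_output.strip().lower() == actual_output.strip().lower(),
        })
    passed = sum(r["match"] for r in results)
    return {"passed": passed, "total": len(results), "results": results}
```

Frameworks like LangChain make it easier to build automated pipelines for AI prompt testing.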

Design reusable templates with variables

Reusability is at the core of an effective AI prompt library. Instead of creating dozens of similar prompts, design flexible templates with variables.

Without variables (rigid):

```text
Write a blog article about digital marketing.
Tone must be professional and educational.
The article should be around 1000 words.
```

With variables (flexible):

```text
Write a blog article about {{topic}}.
Tone should be {{tone}} and {{style}}.
The article should be around {{length}} words.
```

In your library, store:

- The base template with clearly defined variables

- Examples of values for each variable

- Tested combinations that yield good results

According to Langfuse, well-documented variable templates increase prompt reuse within a team by 60%.
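Filling a template is a simple substitution, but it should fail loudly when a variable is missing rather than send a half-filled prompt to the model. A minimal sketch, assuming the `{{variable}}` syntax used above; `render` is a hypothetical helper, and template engines such as Jinja2 offer the same idea with more features:

```python
import re

# Matches {{variable}}, tolerating spaces inside the braces
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, **values: object) -> str:
    """Fill {{variable}} placeholders; raise if any value is missing."""
    missing = set(PLACEHOLDER.findall(template)) - set(values)
    if missing:
        raise KeyError(f"missing values for: {', '.join(sorted(missing))}")
    return PLACEHOLDER.sub(lambda m: str(values[m.group(1)]), template)


template = (
    "Write a blog article about {{topic}}.\n"
    "Tone should be {{tone}} and {{style}}.\n"
    "The article should be around {{length}} words."
)
print(render(template, topic="digital marketing", tone="professional",
             style="educational", length=1000))
```

With these values, the flexible template reproduces the rigid prompt word for word, which is a handy regression check when you refactor a prompt into a template.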

Create a library of modular components

Push reuse further by breaking down prompts into modular components. Many instructions appear repeatedly across templates:

Reusable components:

- Formatting instructions (JSON, Markdown, XML)

- Tone and style constraints

- Few-shot examples

- Verification and self-critique instructions

Store these building blocks separately. When creating a new prompt, assemble relevant components instead of starting from scratch.
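Assembly can be as simple as concatenating a task instruction with named components from a shared store. A minimal sketch; the component keys and texts are invented examples, and in a real library each block would more likely live in its own file:

```python
# Hypothetical component store: keys group blocks by kind (format, tone, check...)
COMPONENTS = {
    "format/json": "Return only valid JSON, with no text before or after it.",
    "tone/professional": "Use a professional, educational tone.",
    "check/self-critique": "Before answering, check your draft against the instructions and fix any deviation.",
}


def assemble(task: str, *component_keys: str, separator: str = "\n\n") -> str:
    """Build a prompt from a task instruction followed by reusable components."""
    unknown = [key for key in component_keys if key not in COMPONENTS]
    if unknown:
        raise KeyError(f"unknown components: {unknown}")
    return separator.join([task, *(COMPONENTS[key] for key in component_keys)])


prompt = assemble(
    "Extract the people and companies mentioned in the text below.",
    "format/json",
    "check/self-critique",
)
print(prompt)
```

Updating a component in one place then propagates the improvement to every prompt that uses it.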


Conclusion: from theory to practice

Managing an AI prompt library is not just piling up text files. It is a dedicated discipline that requires structure, rigor and the right tools. By applying best practices such as coherent structuring, systematic versioning, organized collaboration and rigorous testing, you turn your collection of AI prompts into a strategic asset.

The benefits are tangible: less time wasted searching for or recreating prompts, better output quality through continuous optimization, shared team knowledge and higher productivity.

Now that you understand the fundamentals of AI prompt library management, it is time to put them into practice. Depending on your profile and needs, consider these guides:

- Accessible and collaborative approach: organize AI prompts with Notion

- Local and flexible solution: manage prompts with Obsidian and Templater

- Developer-oriented workflow: organize prompts with VS Code

Whichever option you choose, the key is to start organizing your AI prompt library now. You will gain efficiency and consistency, and you will avoid those long minutes spent searching for the “magic prompt” that once worked perfectly but vanished in your notes.
